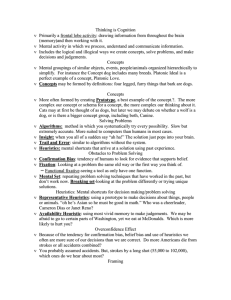

The Domain Specificity and Generality of Belief Bias

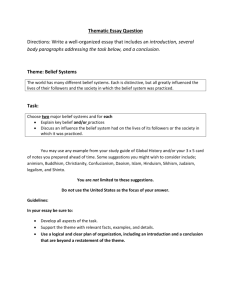
