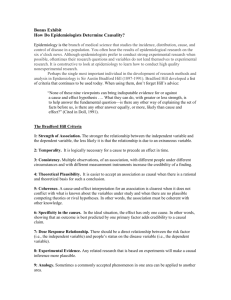

Chapter Eight: Causal Reasoning
