
Automated process chain for Text Mining with RSS and Corpora Builder

Knappe MARCUS

HS-Anhalt, Lange Straße 52, Köthen, 06366, Germany

Tel: +49 172 1854616, Email: Marcus.Knappe@inf.hs-anhalt.de

Abstract: Conventional Data Mining methods cannot use all the information of a company or project. Text Mining makes many correlations in texts visible and reveals contained information that is not apparent at first sight. This paper presents the general process chain of Text Mining and a special process chain based on RSS, which was designed to make it easy to collect a large amount of text for analysis. It also discusses how the analysis results can be used and visualized with a Java framework named Prefuse.

Keywords: Corpora, Feed, Prefuse, RSS, Text Mining.

1. Introduction

A study from IBM shows that 80 % of all existing data is in unstructured form. Examples are emails, call center notes, free texts, news, blogs, Twitter, surveys, web forms, company-internal documents and other text sources or documents [1]. Conventional Data Mining cannot use the patterns and trends contained in such texts for analysis, for example paradigmatic relations, syntagmatic relations, semantic relations, logical relations and technical terms.

In the tradition of linguistic structuralism, a paradigmatic relation is the occurrence of two word forms in similar contexts, and a syntagmatic relation is the joint occurrence of two word forms in a sentence or text window. Semantic relations are sets of pairs of word forms of a language. A semantic relation exists between two word forms of a language only if they are in a paradigmatic or syntagmatic relation. The semantic relations of adjacent word forms include in particular the category or functional specification, the unit or qualification, modification and change. Logical relations are semantic relations that support logical conclusions. These include in particular broader-term and narrower-term relationships, synonyms, opposites, antonyms, and complementary and converse concepts. The notion of logical relations can be defined set-theoretically.

A technical term is a word or phrase that is characteristic of a field according to a given criterion. To locate technical terms, a reference corpus is required. The reference corpus is usually composed of a number of texts that reflect general linguistic usage. General linguistic usage may also include some well-known technical terms from the various technical languages. These technical terms, however, occur relatively rarely in the reference corpus compared with a corpus of the relevant technical language [2][3][4].
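The comparison against a reference corpus can be sketched as follows. This is only an illustration, not the criterion used by the Corpora Builder: it assumes that a word counts as a technical term candidate when its relative frequency in the domain corpus is at least `min_ratio` times its relative frequency in the reference corpus. The function names, the threshold and the smoothing constant are hypothetical.

```python
from collections import Counter


def relative_frequencies(tokens):
    """Map each word to its share of all tokens in the corpus."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def technical_term_candidates(domain_tokens, reference_tokens,
                              min_ratio=3.0, smoothing=1e-6):
    """Return words that are much more frequent in the domain corpus.

    min_ratio and smoothing are assumed values for this sketch; the
    smoothing term avoids a division by zero for words that never
    occur in the reference corpus.
    """
    domain = relative_frequencies(domain_tokens)
    reference = relative_frequencies(reference_tokens)
    ratios = {}
    for word, freq in domain.items():
        ratio = freq / (reference.get(word, 0.0) + smoothing)
        if ratio >= min_ratio:
            ratios[word] = ratio
    # Strongest candidates first.
    return sorted(ratios, key=ratios.get, reverse=True)
```

Words that are common in both corpora, such as articles, get a ratio near 1 and are dropped, while words that are rare or absent in the reference corpus are kept.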

This means that conventional Data Mining, which concentrates on structured data such as gender, address, buying behavior and product properties, covers less than 20 % of the available information. However, the information extracted by Text Mining can in turn be used by Data Mining [1][4].

1. General process chain

Figure 1 shows the general process chain of Text Mining.

Figure 1: General process chain of Text Mining [4]

The first step in the general process chain is text acquisition. This initial step includes all mechanisms for collecting and extracting texts and converting them into machine-readable form. For example, all threads in a forum or blog about a company's product are collected, the text is extracted from these threads, and this text is saved for the next step.

The second step, the Corpora Builder, is the key step in Text Mining. It converts the unstructured information of the text into structured data. It builds co-occurrences and extracts important information.

The last step is the usage step. The data extracted in step two can now be used in other applications or visualized. The other applications can be methods from Data Mining or data warehousing. For visualizing term relations, a sociogram (fig. 2) is the best practice: a special network diagram with terms as nodes, in which the relation between two terms is represented by the edge between the two nodes. For visualizing trends, a bar or line chart is used, with time as the abscissa and the significance of a term at that time as the ordinate.

[Sociogram with the nodes Term1 to Term7]

Figure 2: Sociogram [4]
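A sociogram of this kind can be held in memory as a weighted, undirected graph. The sketch below is one possible representation and not the data structure used in [4]; the class name, the method names and the significance values in the usage example are hypothetical.

```python
from collections import defaultdict


class Sociogram:
    """Undirected graph: terms are nodes, edge weights are significances."""

    def __init__(self):
        self._edges = defaultdict(dict)

    def add_relation(self, term_a, term_b, significance):
        # Store the edge in both directions because the relation is symmetric.
        self._edges[term_a][term_b] = significance
        self._edges[term_b][term_a] = significance

    def strongest_neighbours(self, term, limit=3):
        """Return up to `limit` (neighbour, significance) pairs, strongest first."""
        neighbours = self._edges.get(term, {})
        ranked = sorted(neighbours.items(), key=lambda kv: kv[1], reverse=True)
        return ranked[:limit]


graph = Sociogram()
graph.add_relation("Term1", "Term2", 0.9)   # made-up significance values
graph.add_relation("Term1", "Term3", 0.4)
graph.add_relation("Term2", "Term3", 0.7)
```

A visualization layer would then draw one node per term and scale each edge by its stored significance.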

2. Special process chain with RSS

A large and rapidly growing source of texts is Really Simple Syndication (RSS) [5]. RSS is an XML-based data format for distributing text. The technique uses client-server connections: the interested party subscribes to an RSS channel, and the client checks at regular intervals for new RSS Feeds. These Feeds contain the headline, the language, the date, a short text excerpt and the URL of the full-text version [5].
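The fields named above map directly onto the elements of an RSS 2.0 document. The following sketch reads them with Python's standard `xml.etree.ElementTree` module. The sample document, its URLs and the function name `parse_feed` are made up for illustration, and the code assumes that the language is given once per channel, as in RSS 2.0.

```python
import xml.etree.ElementTree as ET

# Made-up RSS 2.0 document with two entries.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>Example channel</title>
    <language>en</language>
    <item>
      <title>First headline</title>
      <link>http://example.org/articles/1</link>
      <description>Short excerpt of the first article.</description>
      <pubDate>Mon, 22 Mar 2010 08:00:00 GMT</pubDate>
    </item>
    <item>
      <title>Second headline</title>
      <link>http://example.org/articles/2</link>
      <description>Short excerpt of the second article.</description>
      <pubDate>Mon, 22 Mar 2010 10:00:00 GMT</pubDate>
    </item>
  </channel>
</rss>"""


def parse_feed(xml_text):
    """Extract headline, language, date, excerpt and URL of every entry."""
    channel = ET.fromstring(xml_text).find("channel")
    language = channel.findtext("language", default="")
    entries = []
    for item in channel.findall("item"):
        entries.append({
            "headline": item.findtext("title", default=""),
            "language": language,
            "date": item.findtext("pubDate", default=""),
            "excerpt": item.findtext("description", default=""),
            "url": item.findtext("link", default=""),
        })
    return entries
```

The `url` field is the part that matters for the later steps, because it points to the full text that is downloaded and analyzed.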

Figure 3: Special process chain with RSS [4]

The following description refers to figure 3.

Step 1.1: The database covers roughly 700 RSS sources, such as Wall Street, BBC, CNN, FAZ and Spiegel. The sources are classified into subject areas, and each source has a name, a URL and a next crawl rotation. The subject areas are used to generate subject-area corpora in steps 1.3 and 2. Examples of subject areas are politics, science, sport, engineering, finance, society, business, technology and energy. The URL, from which the new Feeds can be downloaded, and the next crawl rotation, which estimates when a source will have new Feeds, are important for step 1.2.

Step 1.2: An automated program starts a new crawl every two hours. Collecting all Feeds from the 700 RSS sources takes the program about 14 hours if all Feeds are new. In practice, however, there are about 1,000 new Feeds every two hours. One RSS source contains between 5 and 100 Feeds. If a source contains, for example, 10 Feeds but publishes 25 new Feeds on one day, a crawl that runs only once per day misses some Feeds. Some sources are “good” sources because they publish Feeds every two hours, like BBC. There are also “bad” sources, which publish only a few Feeds per day or per week, like “Swiss info economy in German”. A crawl that tests all 700 RSS sources for new Feeds takes more than two hours. Thus a continuous learning process is needed to distinguish between “good” and “bad” sources. “Good” and “bad” say nothing about the quality of the text, only about the publishing rate. The default value for the next crawl rotation is 60 minutes. If the RSS source is a “good” source and has new Feeds, the next crawl rotation is decreased by the factor 2/3. Conversely, if the RSS source is a “bad” source and has no new Feeds in this crawl, the next crawl rotation is increased by the factor 3/2.

The new Feeds are saved in a database as well. About 12,000 new entries are collected on a weekday and about 7,000 on a weekend day. These entries have important properties, such as language and date, which make it possible to analyze trends or language-specific properties. The collected URL is the link to the complete message and is used in step 1.3.
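The update rule for the next crawl rotation can be sketched as follows. The sketch assumes that 2/3 and 3/2 are multiplicative factors applied to the current rotation. The lower and upper bounds are not part of the description above; they are added here only so that the interval can neither shrink towards zero nor grow without limit.

```python
DEFAULT_ROTATION_MINUTES = 60.0
SPEED_UP = 2 / 3    # factor applied when the crawl found new Feeds
SLOW_DOWN = 3 / 2   # factor applied when the crawl found nothing new


def next_crawl_rotation(current_minutes, new_feed_count,
                        minimum=10.0, maximum=7 * 24 * 60.0):
    """Return the waiting time in minutes until the source is crawled again.

    minimum and maximum are assumed bounds for this sketch.
    """
    factor = SPEED_UP if new_feed_count > 0 else SLOW_DOWN
    return min(max(current_minutes * factor, minimum), maximum)


# A source that stays silent for three crawls in a row is visited less often.
rotation = DEFAULT_ROTATION_MINUTES
for _ in range(3):
    rotation = next_crawl_rotation(rotation, new_feed_count=0)
```

Because the two factors are reciprocal, one crawl with new Feeds followed by one without brings a source back to its previous rotation, so the value settles around the actual publishing rate of the source.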

In the RSS Feed to text extractor (step 1.3), the desired Feeds can be selected by subject area, language and/or date. The selection can also be limited by count. After this configuration the download process is started and the text content of the selected RSS Feeds is extracted. Each text is saved to its own text file, and the files are grouped into directories by selection.
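The selection in step 1.3 amounts to a filter over the stored entries. The sketch below assumes a simple in-memory record per Feed; the class `FeedEntry`, the function names and the directory layout are hypothetical and only illustrate the selection criteria named above.

```python
from dataclasses import dataclass
from datetime import date
from pathlib import PurePosixPath


@dataclass(frozen=True)
class FeedEntry:
    url: str
    subject_area: str
    language: str
    published: date


def select_feeds(entries, subject_area=None, language=None,
                 since=None, until=None, limit=None):
    """Filter by subject area, language and date range, then cap the count.

    A criterion that is None is not applied.
    """
    selected = [
        e for e in entries
        if (subject_area is None or e.subject_area == subject_area)
        and (language is None or e.language == language)
        and (since is None or e.published >= since)
        and (until is None or e.published <= until)
    ]
    return selected if limit is None else selected[:limit]


def target_directory(base, subject_area=None, language=None):
    """Assumed layout: one directory per subject area and language."""
    return PurePosixPath(base) / (subject_area or "all") / (language or "all")
```

Calling `select_feeds(entries, subject_area="finance", language="en", limit=500)` would, for example, return at most 500 English finance entries whose texts then go into the directory given by `target_directory`.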

The Corpora Builder (step 2) is the core of the process chain. It converts the extracted text from the text files into useful information. For this, the Corpora Builder comprises steps such as sentence segmentation, tokenization, optional lemmatization and stop word reduction; it also counts words and generates co-occurrences. Sentence segmentation scans the text for sentence-ending characters such as ‘.’, ‘!’ and ‘?’. Here the abbreviation dot is a problem. For example, in “Google and Microsoft are U.S. companies.” the first and second dots are abbreviation dots, not sentence-ending dots, and only the third dot is a real sentence end. Tokenization splits each separated sentence into its smallest meaningful units, the building blocks of the sentence (tokens). For the previous example the result is {Google, and, Microsoft, are, U, ., S, ., companies, .}. Lemmatization is an optional feature; it reduces a term to its base form. For example, “companies” can be reduced to “company”. Some words, such as “the”, “a”, “I”, “and” and “or”, are stop words, that is, words that should be excluded from the analysis. In general these are words or word forms from the closed word classes, such as articles, conjunctions and prepositions. A co-occurrence is the statistically significant joint appearance of two word forms in one sentence or text window (context).
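The steps of the Corpora Builder can be illustrated with a small sketch. It is not the implementation described in [4]: the abbreviation list, the regular expression and the function names are assumptions, lemmatization is left out, and the co-occurrence step only counts joint appearances per sentence without the significance test that a real co-occurrence measure requires.

```python
import re
from collections import Counter
from itertools import combinations

ABBREVIATIONS = {"U.S.", "e.g.", "i.e."}      # assumed, far from complete
STOP_WORDS = {"the", "a", "i", "and", "or"}   # the examples from the text


def split_sentences(text):
    """Close a sentence at '.', '!' or '?' unless the word is a known abbreviation."""
    sentences, current = [], []
    for word in text.split():
        current.append(word)
        if word[-1] in ".!?" and word not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:
        sentences.append(" ".join(current))
    return sentences


def tokenize(sentence):
    """Split into runs of word characters and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", sentence)


def count_cooccurrences(sentences):
    """Count how often two non-stop words appear in the same sentence."""
    pairs = Counter()
    for sentence in sentences:
        words = {t.lower() for t in tokenize(sentence) if t.isalpha()}
        pairs.update(combinations(sorted(words - STOP_WORDS), 2))
    return pairs
```

For the example sentence, `tokenize` yields the token list given above. One limitation of this simple rule is that an abbreviation standing at the very end of a sentence does not close the sentence.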

With all this information a general reference corpus can be built, as can more specific reference corpora for a selection of language, subject area or date. With these, pattern analysis is possible, and technical terms and synonyms can be located easily. With selected date corpora, trends can be analyzed: the corpora for different time periods are compared with each other and the differences and similarities are located. The new information from Text Mining can then also be used by Data Mining methods (step 3.1) [2][3][4][5].
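Comparing two date corpora can be sketched as a difference of relative term frequencies. This is a deliberately simple stand-in for the significance comparison mentioned above; the function name and the sample tokens are hypothetical.

```python
from collections import Counter


def frequency_change(older_tokens, newer_tokens):
    """Return, per term, newer relative frequency minus older relative frequency.

    Positive values mark terms that gained weight in the newer corpus,
    negative values mark terms that lost weight.
    """
    older, newer = Counter(older_tokens), Counter(newer_tokens)
    n_old = max(len(older_tokens), 1)   # avoid division by zero for empty corpora
    n_new = max(len(newer_tokens), 1)
    return {
        term: newer[term] / n_new - older[term] / n_old
        for term in older.keys() | newer.keys()
    }


# Made-up token lists for two consecutive time periods.
last_week = ["dhl", "parcel", "strike", "dhl"]
this_week = ["dhl", "strike", "strike", "strike"]
change = frequency_change(last_week, this_week)
```

Terms with a value near zero are the similarities between the two periods, and the terms with the largest positive values are candidates for a trend.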

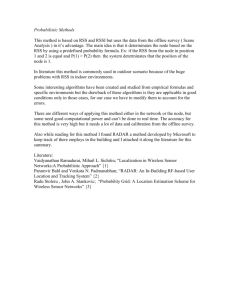

Figure 4: Example with Prefuse [4]

Prefuse is a Java framework for graph visualization (step 3.2). Figure 4 shows an example for the German company DHL. The search term, DHL, is located in the middle, and the most significant terms are linked to it. The border width represents the significance of two linked terms. On mouse-over, the absolute significance is shown in a small text box. If there are synonyms for a term, they are also shown in a text box on mouse-over, for example FEDERAL EXPRESS: FDX, FEDEX and FEDEX EXPRESS. Clicking on another interesting term navigates to it [4][6].

3. Conclusions

Text Mining has a promising future and will achieve very good synergies together with Data Mining. The approach shown here with RSS enables a simple, straightforward and not memory-intensive collection of texts. Time-based analysis is very easy because the date of the Feeds is stored. For the future, a better RSS source collector is needed, since the RSS sources used so far were collected manually. A future version should collect sources automatically, rate them by publishing rate and delete orphaned sources. The freeware visualization toolkit offers more capabilities than are used so far, and its use should be optimized.

References

[1] SPSS Text Mining Tage in Hamburg (24.03.2010)

[2] Heyer, G. et al. (2006), Text Mining: Wissensrohstoff Text, Bochum: W3L-Verlag GmbH

[3] Ferber, R. (2003), Information Retrieval: Suchmodelle und Data-Mining-Verfahren für Textsammlungen und das Web, Heidelberg: dpunkt-Verlag GmbH

[4] Internal paper of the TextTech Informationsmanagement und Texttechnologie GmbH

[5] Really Simple Syndication and the RSS icon http://www.rss-nachrichten.de/

[6] Prefuse visualization toolkit http://www.prefuse.org/