Comparing Group Means: The T-test and One

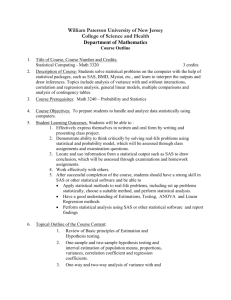

advertisement

© 2003-2005, The Trustees of Indiana University


Comparing Group Means: The T-test and One-way ANOVA Using STATA, SAS, and SPSS

Hun Myoung Park

This document summarizes the method of comparing group means and illustrates how to conduct the t-test and one-way ANOVA using STATA 9.0, SAS 9.1, and SPSS 13.0.

1. Introduction
2. Univariate Samples
3. Paired (Dependent) Samples
4. Independent Samples with Equal Variances
5. Independent Samples with Unequal Variances
6. One-way ANOVA, GLM, and Regression
7. Conclusion

1. Introduction

The t-test and analysis of variance (ANOVA) compare group means. The mean of the variable being compared should be substantively interpretable. A t-test may, for example, examine gender differences in average salary or racial (white versus black) differences in average annual income. The left-hand side (LHS) variable to be tested should be interval or ratio, whereas the right-hand side (RHS) variable should be binary (categorical).

1.1 T-test and ANOVA

While the t-test is limited to comparing the means of two groups, one-way ANOVA can compare more than two groups. Therefore, the t-test is considered a special case of one-way ANOVA. These analyses do not, however, necessarily imply causality (i.e., a causal relationship between the left-hand and right-hand side variables). Table 1 compares the t-test and one-way ANOVA.

Table 1. Comparison between the T-test and One-way ANOVA

                      T-test                                  One-way ANOVA
LHS (Dependent)       Interval or ratio variable              Interval or ratio variable
RHS (Independent)     Binary variable with only two groups    Categorical variable
Null Hypothesis       µ1 = µ2                                 µ1 = µ2 = µ3 = ...
Prob. Distribution    T distribution*                         F distribution

* In the case of one degree of freedom on the numerator, F = t².

The t-test assumes that samples are randomly drawn from normally distributed populations with unknown population means. Otherwise, their means are no longer the best measures of central tendency and the t-test will not be valid. The Central Limit Theorem says, however, that the sampling distributions of $\bar{y}_1$ and $\bar{y}_2$ are approximately normal when N is large. In practice, when $n_1 + n_2 \geq 30$, you do not need to worry too much about the normality assumption.

http://www.indiana.edu/~statmath

You may numerically test the normality assumption using the Shapiro-Wilk W (N<=2000), Shapiro-Francia W (N<=5000), Kolmogorov-Smirnov D (N>2000), and Jarque-Bera tests. If N is small and the null hypothesis of normality is rejected, you may try such nonparametric methods as the Kolmogorov-Smirnov test, Kruskal-Wallis test, Wilcoxon Rank-Sum Test, or Log-Rank Test, depending on the circumstances.

1.2 T-test in SAS, STATA, and SPSS

In STATA, the .ttest and .ttesti commands conduct t-tests, whereas the .anova and .oneway commands perform one-way ANOVA. SAS has the TTEST procedure for the t-test, but the UNIVARIATE and MEANS procedures also have options for the t-test. SAS provides various procedures for the analysis of variance, such as the ANOVA, GLM, and MIXED procedures. The ANOVA procedure is designed for balanced data, while GLM and MIXED can analyze either balanced or unbalanced data (data with the same or different numbers of observations across groups). However, unbalanced data do not cause any problems in the t-test and one-way ANOVA. In SPSS, the T-TEST, ONEWAY, and UNIANOVA commands perform the t-test and one-way ANOVA.

Table 2 summarizes the STATA commands, SAS procedures, and SPSS commands associated with the t-test and one-way ANOVA.

Table 2. Related Procedures and Commands in STATA, SAS, and SPSS

                   STATA 9.0 SE                  SAS 9.1         SPSS 13.0
Normality Test     .sktest; .swilk; .sfrancia    UNIVARIATE      EXAMINE
Equal Variance     .oneway                       TTEST           T-TEST
Nonparametric      .ksmirnov; .kwallis           NPAR1WAY        NPAR TESTS
T-test             .ttest                        TTEST; MEANS    T-TEST
ANOVA              .anova; .oneway               ANOVA           ONEWAY
GLM*                                             GLM; MIXED      UNIANOVA

* The STATA .glm command fits generalized linear models; it is not used for the t-test.

1.3 Data Arrangement

There are two types of data arrangement for t-tests (Figure 1). The first has a variable to be tested and a grouping variable that classifies the groups (0 or 1). The second, appropriate especially for paired samples, has two variables to be tested. The two variables in this type are not, however, necessarily paired or balanced. SAS and SPSS prefer the first data arrangement, whereas STATA can handle either type flexibly. Note that the numbers of observations across groups are not necessarily equal.


Figure 1. Two Types of Data Arrangement

    First type             Second type
Variable   Group        Variable1   Variable2
   x         0              x           y
   x         0              x           y
   …         …              …           …
   y         1
   y         1
   …         …

The data set used here is adopted from J. F. Fraumeni’s study on cigarette smoking and cancer (Fraumeni 1968). The data are per capita numbers of cigarettes sold by 43 states and the District of Columbia in 1960, together with death rates per hundred thousand people from various forms of cancer. Two variables were added to categorize states into two groups. See the appendix for the details.


2. Univariate Samples

The univariate-sample or one-sample t-test determines whether an unknown population mean µ differs from a hypothesized value c that is commonly set to zero: $H_0: \mu = c$. The t statistic follows Student’s T probability distribution with n-1 degrees of freedom:

$$t = \frac{\bar{y} - c}{s_{\bar{y}}} \sim t(n - 1),$$

where y is the variable to be tested and n is the number of observations.¹

Suppose you want to test whether the population mean of the death rate from lung cancer is 20 per 100,000 people at the .01 significance level. Note that the default significance level in most software is .05.

2.1 T-test in STATA

The .ttest command conducts t-tests in an easy and flexible manner. For a univariate-sample test, the command requires that a hypothesized value be explicitly specified. The level() option indicates the confidence level as a percentage. The 99 percent confidence level is equivalent to the .01 significance level.

. ttest lung=20, level(99)

One-sample t test
------------------------------------------------------------------------------
Variable |     Obs        Mean    Std. Err.   Std. Dev.   [99% Conf. Interval]
---------+--------------------------------------------------------------------
    lung |      44    19.65318    .6374133    4.228122    17.93529    21.37108
------------------------------------------------------------------------------
    mean = mean(lung)                                             t =  -0.5441
Ho: mean = 20                                    degrees of freedom =       43

   Ha: mean < 20               Ha: mean != 20                 Ha: mean > 20
 Pr(T < t) = 0.2946         Pr(|T| > |t|) = 0.5892          Pr(T > t) = 0.7054

STATA first lists descriptive statistics of the variable lung. The mean and standard deviation of the 44 observations are 19.653 and 4.228, respectively. The t statistic is -.544 = (19.653-20)/.6374. Finally, the degrees of freedom are 43 = 44-1.

There are three t-tests at the bottom of the output above. The first and third are one-tailed tests, whereas the second is a two-tailed test. The t statistic -.544 and its large p-value do not reject the null hypothesis that the population mean of the death rate from lung cancer is 20 at the .01 level. The population mean death rate may well be 20 per 100,000 people. Note that the hypothesized value 20 falls within the 99 percent confidence interval 17.935-21.371.²

¹ $\bar{y} = \frac{\sum y_i}{n}$, $s^2 = \frac{\sum (y_i - \bar{y})^2}{n - 1}$, and standard error $s_{\bar{y}} = \frac{s}{\sqrt{n}}$.

² The 99 percent confidence interval of the mean is $\bar{y} \pm t_{\alpha/2} s_{\bar{y}} = 19.653 \pm 2.695 \times .6374$, where 2.695 is the critical value with 43 degrees of freedom at the .01 level in the two-tailed test.

If you have only the aggregate data (i.e., the number of observations, mean, and standard deviation of the sample), use the .ttesti command to replicate the t-test above. Note that the hypothesized value is specified at the end of the summary statistics.

. ttesti 44 19.65318 4.228122 20, level(99)
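As a cross-check on the arithmetic behind .ttesti, the minimal Python sketch below recomputes the standard error, t statistic, degrees of freedom, and 99 percent confidence interval from the three summary numbers. It is not STATA's implementation, and the function name one_sample_t is hypothetical. Python's standard library has no t distribution, so the critical value 2.695 is hard-coded from footnote 2 instead of being computed, and no p-value is produced.

```python
import math

def one_sample_t(n, mean, sd, c):
    """One-sample t statistic from summary data (hypothetical helper)."""
    se = sd / math.sqrt(n)      # standard error s / sqrt(n)
    t = (mean - c) / se         # t = (ybar - c) / s_ybar
    return t, n - 1, se

# Summary statistics for lung reported in the STATA output above
n, mean, sd = 44, 19.65318, 4.228122
t, df, se = one_sample_t(n, mean, sd, c=20)

# Two-tailed critical value for 43 df at the .01 level, taken from footnote 2
t_crit = 2.695
lower, upper = mean - t_crit * se, mean + t_crit * se

print(f"t = {t:.4f}, df = {df}, se = {se:.4f}")
print(f"99% CI: {lower:.3f} to {upper:.3f}")
```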

2.2 T-test Using the SAS TTEST Procedure

The TTEST procedure conducts various types of t-tests in SAS. The H0 option specifies a hypothesized value, whereas the ALPHA option indicates a significance level. If they are omitted, the default values of zero and .05, respectively, are assumed.

PROC TTEST H0=20 ALPHA=.01 DATA=masil.smoking;
   VAR lung;
RUN;

The TTEST Procedure

                                  Statistics

                 Lower CL            Upper CL  Lower CL            Upper CL
Variable    N        Mean     Mean       Mean   Std Dev   Std Dev   Std Dev   Std Err

lung       44      17.935   19.653     21.371    3.2994    4.2281    5.7989    0.6374

                       T-Tests

Variable      DF    t Value    Pr > |t|

lung          43      -0.54      0.5892

The TTEST procedure reports descriptive statistics followed by a two-tailed t-test. You may have a summary data set containing the values of a variable (lung) and their frequencies (count). The FREQ statement of the TTEST procedure handles this case.

PROC TTEST H0=20 ALPHA=.01 DATA=masil.smoking;
   VAR lung;
   FREQ count;
RUN;

2.3 T-test Using the SAS UNIVARIATE and MEANS Procedures

The SAS UNIVARIATE and MEANS procedures also conduct a t-test for a univariate sample. The UNIVARIATE procedure is basically designed to produce a variety of descriptive statistics of a variable. Its MU0 option tells the procedure to perform a t-test using the specified hypothesized value. The VARDEF=DF option specifies the divisor (degrees of freedom) used in computing the variance (standard deviation).³ The NORMAL option examines whether the variable is normally distributed.

PROC UNIVARIATE MU0=20 VARDEF=DF NORMAL ALPHA=.01 DATA=masil.smoking;
   VAR lung;
RUN;

The UNIVARIATE Procedure
Variable: lung

                            Moments

N                          44    Sum Weights                 44
Mean               19.6531818    Sum Observations        864.74
Std Deviation      4.22812167    Variance            17.8770129
Skewness            -0.104796    Kurtosis             -0.949602
Uncorrected SS      17763.604    Corrected SS        768.711555
Coeff Variation    21.5136751    Std Error Mean      0.63741333

                  Basic Statistical Measures

        Location                    Variability

    Mean     19.65318     Std Deviation            4.22812
    Median   20.32000     Variance                17.87701
    Mode       .          Range                   15.26000
                          Interquartile Range      6.53000

                  Tests for Location: Mu0=20

    Test           -Statistic-    -----p Value------

    Student's t    t   -0.5441    Pr > |t|      0.5892
    Sign           M         1    Pr >= |M|     0.8804
    Signed Rank    S     -36.5    Pr >= |S|     0.6752

                      Tests for Normality

    Test                  --Statistic---    -----p Value------

    Shapiro-Wilk          W     0.967845    Pr < W       0.2535
    Kolmogorov-Smirnov    D     0.086184    Pr > D      >0.1500
    Cramer-von Mises      W-Sq  0.063737    Pr > W-Sq   >0.2500
    Anderson-Darling      A-Sq  0.382105    Pr > A-Sq   >0.2500

                   Quantiles (Definition 5)

                   Quantile      Estimate

                   100% Max        27.270
                   99%             27.270
                   95%             25.950
                   90%             25.450
                   75% Q3          22.815
                   50% Median      20.320
                   25% Q1          16.285
                   10%             14.110
                   5%              12.120
                   1%              12.010
                   0% Min          12.010

                      Extreme Observations

               ----Lowest----        ----Highest---

               Value      Obs        Value      Obs

               12.01       39        25.45       16
               12.11       33        25.88        1
               12.12       30        25.95       27
               13.58       10        26.48       18
               14.11       36        27.27        8

³ VARDEF=N uses N as the divisor, while VARDEF=WDF uses the sum of weights minus one.

The third block of the output above reports a t statistic and its p-value. The fourth block contains several normality test statistics. Since N is less than 2,000, you should read the Shapiro-Wilk W, whose p-value of .2535 does not reject the null hypothesis that lung is normally distributed.

The MEANS procedure also conducts t-tests using the T and PROBT options, which request the t statistic and its two-tailed p-value. The CLM option produces the two-tailed confidence interval (lower and upper limits). The MEAN, STD, and STDERR options print the sample mean, standard deviation, and standard error, respectively.

PROC MEANS MEAN STD STDERR T PROBT CLM VARDEF=DF ALPHA=.01 DATA=masil.smoking;
   VAR lung;
RUN;

The MEANS Procedure

Analysis Variable : lung

                                                               Lower 99%      Upper 99%
      Mean        Std Dev      Std Error   t Value   Pr > |t|   CL for Mean    CL for Mean
------------------------------------------------------------------------------------------
19.6531818      4.2281217      0.6374133     30.83     <.0001    17.9352878     21.3710758
------------------------------------------------------------------------------------------

The MEANS procedure does not, however, have an option to specify a hypothesized value other than zero. Thus, the null hypothesis here is that the population mean of the death rate from lung cancer is zero. The t statistic 30.83 is (19.6532-0)/.6374. The large t statistic and small p-value reject this null hypothesis, a conclusion consistent with the 99 percent confidence interval 17.935-21.371, which does not include zero.

2.4 T-test in SPSS

SPSS has the T-TEST command for t-tests. The /TESTVAL subcommand specifies the value with which the sample mean is compared, whereas the /VARIABLES subcommand lists the variables to be tested. Like STATA, SPSS specifies a confidence level rather than a significance level, in the /CRITERIA=CI() subcommand.

T-TEST
   /TESTVAL = 20
   /VARIABLES = lung
   /MISSING = ANALYSIS
   /CRITERIA = CI(.99) .

3. Paired (Dependent) Samples

When two variables are not independent but paired, the difference of these two variables, $d_i = y_{1i} - y_{2i}$, is treated as if it were a single sample. This test is appropriate for pre-post treatment responses. The null hypothesis is that the true mean difference of the two variables is D0, $H_0: \mu_d = D_0$.⁴ The difference is typically assumed to be zero unless explicitly specified.

3.1 T-test in STATA

In order to conduct a paired-sample t-test, you need to list two variables separated by an equal sign. The interpretation of the t-test remains almost unchanged. The t statistic -1.871 = (-10.1667)/5.4337 with 35 degrees of freedom does not reject the null hypothesis that the difference is zero at the .05 level (two-tailed p = .0697).

. ttest pre=post0, level(95)

Paired t test
------------------------------------------------------------------------------
Variable |     Obs        Mean    Std. Err.   Std. Dev.   [95% Conf. Interval]
---------+--------------------------------------------------------------------
     pre |      36    176.0278    6.529723    39.17834    162.7717    189.2838
   post0 |      36    186.1944    7.826777    46.96066    170.3052    202.0836
---------+--------------------------------------------------------------------
    diff |      36   -10.16667    5.433655    32.60193   -21.19757    .8642387
------------------------------------------------------------------------------
     mean(diff) = mean(pre - post0)                               t =  -1.8711
 Ho: mean(diff) = 0                              degrees of freedom =       35

 Ha: mean(diff) < 0           Ha: mean(diff) != 0           Ha: mean(diff) > 0
 Pr(T < t) = 0.0349         Pr(|T| > |t|) = 0.0697          Pr(T > t) = 0.9651

Alternatively, you may first compute the difference between the two variables and then conduct a one-sample t-test. Note that the default confidence level, level(95), can be omitted.

. gen d=pre-post0

. ttest d=0
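The equivalence between the paired test and a one-sample test on d can be sketched in Python. This is a minimal illustration that assumes complete pairs (no missing values). The helper names paired_t and paired_t_from_summary are hypothetical, and the four-observation pre/post lists are made-up data used only to exercise the function. Only the t statistic and degrees of freedom are computed, because a p-value would need a t distribution and Python's standard library does not provide one.

```python
import math
import statistics

def paired_t(first, second, d0=0.0):
    """Paired t-test as a one-sample t-test on d_i = first_i - second_i."""
    if len(first) != len(second):
        raise ValueError("paired samples must have the same length")
    d = [a - b for a, b in zip(first, second)]
    n = len(d)
    se = statistics.stdev(d) / math.sqrt(n)   # stdev() divides by n - 1
    return (statistics.mean(d) - d0) / se, n - 1

def paired_t_from_summary(n, mean_d, sd_d, d0=0.0):
    """Same statistic when only n, mean, and SD of the differences are known."""
    return (mean_d - d0) / (sd_d / math.sqrt(n)), n - 1

# Differences d = pre - post0 summarized in the STATA output above
t_drug, df_drug = paired_t_from_summary(36, -10.16667, 32.60193)

# Hypothetical pre/post scores, used only to exercise paired_t
t_toy, df_toy = paired_t([10, 12, 9, 11], [11, 14, 9, 13])

print(f"drug data: t = {t_drug:.4f}, df = {df_drug}")
print(f"toy data:  t = {t_toy:.4f}, df = {df_toy}")
```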

3.2 T-test in SAS

In the TTEST procedure, you have to use the PAIRED statement instead of the VAR statement. For the output of the following procedure, refer to the example later in this section.

PROC TTEST DATA=temp.drug;
   PAIRED pre*post0;
RUN;

⁴ $t_d = \frac{\bar{d} - D_0}{s_{\bar{d}}} \sim t(n - 1)$, where $\bar{d} = \frac{\sum d_i}{n}$, $s_d^2 = \frac{\sum (d_i - \bar{d})^2}{n - 1}$, and $s_{\bar{d}} = \frac{s_d}{\sqrt{n}}$.

The PAIRED statement provides various ways of comparing variables using the asterisk (*) and colon (:) operators. The asterisk requests comparisons between each variable on the left and each variable on the right. The colon requests comparisons between the first variable on the left and the first on the right, the second on the left and the second on the right, and so forth. Consider the following examples.

PROC TTEST;
   PAIRED pre:post0;
   PAIRED (a b)*(c d); /* Equivalent to PAIRED a*c a*d b*c b*d; */
   PAIRED (a b):(c d); /* Equivalent to PAIRED a*c b*d; */
   PAIRED (a1-a10)*(b1-b10);
RUN;

The first PAIRED statement is the same as PAIRED pre*post0. The second and third PAIRED statements contrast the asterisk and colon operators. The hyphen (-) in the last statement indicates a1 through a10 and b1 through b10. Let us consider an example of the PAIRED statement.

PROC TTEST DATA=temp.drug;
   PAIRED (pre)*(post0-post1);
RUN;

The TTEST Procedure

                                        Statistics

                        Lower CL           Upper CL  Lower CL           Upper CL
Difference        N         Mean     Mean      Mean   Std Dev   Std Dev  Std Dev   Std Err

pre - post0      36        -21.2   -10.17    0.8642    26.443    32.602   42.527    5.4337
pre - post1      36       -30.43   -20.39    -10.34    24.077    29.685   38.723    4.9475

                      T-Tests

Difference      DF    t Value    Pr > |t|

pre - post0     35      -1.87      0.0697
pre - post1     35      -4.12      0.0002

The first t statistic, for pre versus post0, is identical to that of the previous section. The second, for pre versus post1, rejects the null hypothesis of no mean difference at the .01 level (p = .0002).

In order to use the UNIVARIATE and MEANS procedures, the difference between the two paired variables should be computed in advance.

DATA temp.drug2;
   SET temp.drug;
   d1 = pre - post0;
   d2 = pre - post1;
RUN;

PROC UNIVARIATE MU0=0 VARDEF=DF NORMAL; VAR d1 d2; RUN;

PROC MEANS MEAN STD STDERR T PROBT CLM; VAR d1 d2; RUN;

PROC TTEST ALPHA=.05; VAR d1 d2; RUN;

3.3 T-test in SPSS

In SPSS, the PAIRS subcommand indicates a paired sample t-test.

T-TEST PAIRS = pre post0
   /CRITERIA = CI(.95)
   /MISSING = ANALYSIS .

4. Independent Samples with Equal Variances

You should check three assumptions first when testing the mean difference of two independent samples. First, the samples are drawn from normally distributed populations with unknown parameters. Second, the two samples are independent in the sense that they are drawn from different populations and/or the elements of one sample are not related to those of the other sample. Finally, the population variances of the two groups, $\sigma_1^2$ and $\sigma_2^2$, are equal.⁵ If any of these assumptions is violated, the pooled-variance t-test described here may not be valid.

The example here compares mean death rates from lung cancer between smokers and nonsmokers. Let us begin by discussing the equal variance assumption.

4.1 F test for Equal Variances

The folded form F test is widely used to examine whether two populations have the same variance. The statistic is

$$F = \frac{s_L^2}{s_S^2} \sim F(n_L - 1, n_S - 1),$$

where L and S respectively indicate the groups with the larger and smaller sample variances. Unless the null hypothesis of equal variances is rejected, the pooled variance estimate $s_{pool}^2$ is used. The null hypothesis of the independent-sample t-test is $H_0: \mu_1 - \mu_2 = D_0$, and the test statistic is

$$t = \frac{(\bar{y}_1 - \bar{y}_2) - D_0}{s_{pool}\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}} \sim t(n_1 + n_2 - 2),$$

where

$$s_{pool}^2 = \frac{\sum (y_{1i} - \bar{y}_1)^2 + \sum (y_{2j} - \bar{y}_2)^2}{n_1 + n_2 - 2} = \frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}.$$

When the equal variance assumption is violated, the t statistic is computed from the individual sample variances rather than the pooled variance, and its degrees of freedom must be approximated. The null hypothesis, however, remains unchanged. Satterthwaite’s approximation for the degrees of freedom is commonly used. Note that the approximation is a real number, not an integer.

$$t' = \frac{(\bar{y}_1 - \bar{y}_2) - D_0}{\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}} \sim t(df_{Satterthwaite}),$$

where

$$df_{Satterthwaite} = \frac{(n_1 - 1)(n_2 - 1)}{(n_1 - 1)(1 - c)^2 + (n_2 - 1)c^2} \quad \text{and} \quad c = \frac{s_1^2 / n_1}{s_1^2 / n_1 + s_2^2 / n_2}.$$

⁵ $E(\bar{y}_1 - \bar{y}_2) = \mu_1 - \mu_2$ and $Var(\bar{y}_1 - \bar{y}_2) = \frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}$, which equals $\sigma^2\left(\frac{1}{n_1} + \frac{1}{n_2}\right)$ when $\sigma_1^2 = \sigma_2^2 = \sigma^2$.

The SAS TTEST procedure and the SPSS T-TEST command conduct F tests for equal variances. SAS reports the folded form F statistic, whereas SPSS computes Levene's weighted F statistic. In STATA, the .oneway command produces Bartlett’s statistic for the equal variance test. The following is an example of Bartlett's test that does not reject the null hypothesis of equal variances.

. oneway lung smoke

                        Analysis of Variance
    Source              SS         df      MS            F     Prob > F
------------------------------------------------------------------------
Between groups      313.031127      1   313.031127     28.85     0.0000
 Within groups      455.680427     42   10.849534
------------------------------------------------------------------------
    Total           768.711555     43   17.8770129

Bartlett's test for equal variances:  chi2(1) =   0.1216  Prob>chi2 = 0.727
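Table 1 notes that F = t² when the numerator has one degree of freedom. A minimal Python check with the mean squares from the ANOVA table above illustrates this: the square root of F should be close to the absolute value of the pooled two-sample t statistic reported in Section 4.2 (t = -5.3714). The variable names are illustrative only.

```python
import math

# Mean squares from the .oneway output above (lung by smoke)
ms_between = 313.031127   # 1 numerator degree of freedom
ms_within = 10.849534     # 42 denominator degrees of freedom

f_stat = ms_between / ms_within
abs_t = math.sqrt(f_stat)  # with 1 numerator df, F = t^2, so |t| = sqrt(F)

print(f"F = {f_stat:.2f}, sqrt(F) = {abs_t:.4f}")
```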

STATA, SAS, and SPSS all compute Satterthwaite’s approximation of the degrees of freedom. In addition, the SAS TTEST procedure reports the Cochran-Cox approximation, and the STATA .ttest command provides Welch’s degrees of freedom.

4.2 T-test in STATA

With the .ttest command, you have to specify a grouping variable (smoke in this example) in the parentheses of the by() option.

. ttest lung, by(smoke) level(95)

Two-sample t test with equal variances
------------------------------------------------------------------------------
   Group |     Obs        Mean    Std. Err.   Std. Dev.   [95% Conf. Interval]
---------+--------------------------------------------------------------------
       0 |      22    16.98591    .6747158    3.164698    15.58276    18.38906
       1 |      22    22.32045    .7287523    3.418151    20.80493    23.83598
---------+--------------------------------------------------------------------
combined |      44    19.65318    .6374133    4.228122    18.36772    20.93865
---------+--------------------------------------------------------------------
    diff |           -5.334545    .9931371               -7.338777   -3.330314
------------------------------------------------------------------------------
    diff = mean(0) - mean(1)                                      t =  -5.3714
Ho: diff = 0                                     degrees of freedom =       42

    Ha: diff < 0                 Ha: diff != 0                 Ha: diff > 0
 Pr(T < t) = 0.0000         Pr(|T| > |t|) = 0.0000          Pr(T > t) = 1.0000

Let us first check the equal variance assumption. The F statistic is

$$F = \frac{s_L^2}{s_S^2} = \frac{3.4182^2}{3.1647^2} = 1.17 \sim F(21, 21).$$

The degrees of freedom of the numerator and denominator are both 21 (= 22-1). The p-value of .7273, virtually the same as that of Bartlett’s test above, does not reject the null hypothesis of equal variances. Thus, the pooled t-test here is valid (t = -5.3714, p < .0001).

$$t = \frac{(16.9859 - 22.3205) - 0}{s_{pool}\sqrt{\frac{1}{22} + \frac{1}{22}}} = -5.3714 \sim t(22 + 22 - 2),$$

where

$$s_{pool}^2 = \frac{(22 - 1)3.1647^2 + (22 - 1)3.4182^2}{22 + 22 - 2} = 10.8497.$$

If only aggregate data for the two groups are available, use the .ttesti command and list the number of observations, mean, and standard deviation of each group.

. ttesti 22 16.98591 3.164698 22 22.32045 3.418151, level(95)
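The pooled-variance computation from these six summary numbers can be sketched in Python as follows. This is a minimal, hypothetical helper (pooled_t) that follows the pooled-variance formula of Section 4.1. It is not STATA's code. It returns the t statistic, degrees of freedom, and pooled variance but not the p-value. Because it uses the unrounded standard deviations, the pooled variance comes out near 10.8495 rather than the 10.8497 obtained above from rounded values.

```python
import math

def pooled_t(n1, mean1, sd1, n2, mean2, sd2, d0=0.0):
    """Equal-variance two-sample t statistic from summary data (hypothetical helper)."""
    df = n1 + n2 - 2
    s2_pool = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df   # pooled variance
    se = math.sqrt(s2_pool * (1 / n1 + 1 / n2))              # SE of ybar1 - ybar2
    t = ((mean1 - mean2) - d0) / se
    return t, df, s2_pool

# Group 0 and group 1 of smoke, variable lung, from the output above
t, df, s2_pool = pooled_t(22, 16.98591, 3.164698, 22, 22.32045, 3.418151)
print(f"t = {t:.4f}, df = {df}, pooled variance = {s2_pool:.4f}")
```

The same helper applied to the leukemia and kidney summaries shown later in this section, pooled_t(44, 6.829773, .6382589, 44, 2.794545, .5190799), should give a t statistic near 32.54.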

Suppose a data set is arranged differently (the second type in Figure 1), so that one variable, smk_lung, has data for smokers and another, non_lung, has data for non-smokers. You have to use the unpaired option to indicate that the two variables are not paired. A grouping variable is not necessary here. Compare the following output with what is printed above.

. ttest smk_lung=non_lung, unpaired

Two-sample t test with equal variances
------------------------------------------------------------------------------
Variable |     Obs        Mean    Std. Err.   Std. Dev.   [95% Conf. Interval]
---------+--------------------------------------------------------------------
smk_lung |      22    22.32045    .7287523    3.418151    20.80493    23.83598
non_lung |      22    16.98591    .6747158    3.164698    15.58276    18.38906
---------+--------------------------------------------------------------------
combined |      44    19.65318    .6374133    4.228122    18.36772    20.93865
---------+--------------------------------------------------------------------
    diff |            5.334545    .9931371                3.330313    7.338777
------------------------------------------------------------------------------
    diff = mean(smk_lung) - mean(non_lung)                        t =   5.3714
Ho: diff = 0                                     degrees of freedom =       42

    Ha: diff < 0                 Ha: diff != 0                 Ha: diff > 0
 Pr(T < t) = 1.0000         Pr(|T| > |t|) = 0.0000          Pr(T > t) = 0.0000

The unpaired option is very useful because it enables you to conduct a t-test without additional data manipulation. You may run the .ttest command with the unpaired option to compare two variables, say leukemia and kidney, as independent samples in STATA. In SAS and SPSS, however, you have to stack the two variables and generate a grouping variable before conducting t-tests.

. ttest leukemia=kidney, unpaired

Two-sample t test with equal variances
------------------------------------------------------------------------------
Variable |     Obs        Mean    Std. Err.   Std. Dev.   [95% Conf. Interval]
---------+--------------------------------------------------------------------
leukemia |      44    6.829773    .0962211    .6382589    6.635724    7.023821
  kidney |      44    2.794545    .0782542    .5190799    2.636731     2.95236
---------+--------------------------------------------------------------------
combined |      88    4.812159    .2249261    2.109994    4.365094    5.259224
---------+--------------------------------------------------------------------
    diff |            4.035227    .1240251                3.788673    4.281781
------------------------------------------------------------------------------
    diff = mean(leukemia) - mean(kidney)                          t =  32.5356
Ho: diff = 0                                     degrees of freedom =       86

    Ha: diff < 0                 Ha: diff != 0                 Ha: diff > 0
 Pr(T < t) = 1.0000         Pr(|T| > |t|) = 0.0000          Pr(T > t) = 0.0000

The F statistic 1.5119 = (.6382589^2)/(.5190799^2) and its p-value (= .1797) do not reject the null hypothesis of equal variances. The large t statistic 32.5356 rejects the null hypothesis that death rates from leukemia and kidney cancers have the same mean.
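The folded F statistics quoted in this section (1.17 for lung and 1.5119 for leukemia versus kidney) can be reproduced with a few lines of Python. The helper folded_f is hypothetical. It only forms the variance ratio and its degrees of freedom, since a p-value would need the F distribution, which Python's standard library lacks.

```python
def folded_f(n1, sd1, n2, sd2):
    """Folded F: larger sample variance over smaller, with (numerator, denominator) df."""
    v1, v2 = sd1**2, sd2**2
    if v1 >= v2:
        return v1 / v2, n1 - 1, n2 - 1
    return v2 / v1, n2 - 1, n1 - 1

# lung: smoke = 0 versus smoke = 1
f_lung, num_df, den_df = folded_f(22, 3.164698, 22, 3.418151)
# leukemia versus kidney
f_lk, _, _ = folded_f(44, .6382589, 44, .5190799)

print(f"lung:            F = {f_lung:.2f} ~ F({num_df}, {den_df})")
print(f"leukemia/kidney: F = {f_lk:.4f}")
```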

4.3 T-test in SAS

The TTEST procedure by default examines the hypothesis of equal variances and provides t statistics for either case. The procedure by default reports Satterthwaite’s approximation for the degrees of freedom. Keep in mind that the variable to be tested is grouped by the variable specified in the CLASS statement.

PROC TTEST H0=0 ALPHA=.05 DATA=masil.smoking;
   CLASS smoke;
   VAR lung;
RUN;

The TTEST Procedure

                                          Statistics

                                 Lower CL            Upper CL  Lower CL            Upper CL
Variable  smoke           N          Mean     Mean       Mean   Std Dev   Std Dev   Std Dev

lung      0              22        15.583   16.986     18.389    2.4348    3.1647    4.5226
lung      1              22        20.805    22.32     23.836    2.6298    3.4182    4.8848
lung      Diff (1-2)               -7.339   -5.335      -3.33    2.7159    3.2939    4.1865

                            Statistics

Variable  smoke          Std Err    Minimum    Maximum

lung      0               0.6747      12.01      25.45
lung      1               0.7288      12.11      27.27
lung      Diff (1-2)      0.9931

                                 T-Tests

Variable    Method           Variances      DF    t Value    Pr > |t|

lung        Pooled           Equal          42      -5.37      <.0001
lung        Satterthwaite    Unequal      41.8      -5.37      <.0001

                        Equality of Variances

Variable    Method      Num DF    Den DF    F Value    Pr > F

lung        Folded F        21        21       1.17    0.7273

The F test for equal variances does not reject the null hypothesis of equal variances. Thus, the t-test labeled “Pooled” should be read, giving t = -5.37 and p < .0001. If the equal variance assumption is violated, the “Satterthwaite” and “Cochran” statistics should be read instead (the latter is printed when the COCHRAN option is specified, as in the example below).

If you have a summary data set with the values of a variable (lung) and their frequencies (count), specify the count variable in the FREQ statement.

PROC TTEST DATA=masil.smoking;
   CLASS smoke;
   VAR lung;
   FREQ count;
RUN;

Now, let us compare the death rates from leukemia and kidney cancer, which are stored in the second data arrangement type of Figure 1. As mentioned before, you need to rearrange the data set to stack the two variables into one and generate a grouping variable (the first type in Figure 1).

DATA masil.smoking2;
   SET masil.smoking;
   death = leukemia; leu_kid = 'Leukemia'; OUTPUT;
   death = kidney;   leu_kid = 'Kidney';   OUTPUT;
   KEEP leu_kid death;
RUN;

PROC TTEST COCHRAN DATA=masil.smoking2; CLASS leu_kid; VAR death; RUN;

The TTEST Procedure

                                              Statistics

                                  Lower CL            Upper CL  Lower CL            Upper CL
Variable  leu_kid          N          Mean     Mean       Mean   Std Dev   Std Dev   Std Dev   Std Err

death     Kidney          44        2.6367   2.7945     2.9524    0.4289    0.5191    0.6577    0.0783
death     Leukemia        44        6.6357   6.8298     7.0238    0.5273    0.6383    0.8087    0.0962
death     Diff (1-2)                -4.282   -4.035     -3.789    0.5063    0.5817    0.6838     0.124

                                 T-Tests

Variable    Method           Variances      DF    t Value    Pr > |t|

death       Pooled           Equal          86     -32.54      <.0001
death       Satterthwaite    Unequal      82.6     -32.54      <.0001
death       Cochran          Unequal        43     -32.54      <.0001

                        Equality of Variances

Variable    Method      Num DF    Den DF    F Value    Pr > F

death       Folded F        43        43       1.51    0.1794

Compare this SAS output with that of STATA in the previous section.
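The stacking done by the DATA step above, which converts the second arrangement in Figure 1 to the first, can be sketched in plain Python. The function names and the three-state values are hypothetical. The sketch assumes both columns have the same length and no missing values. As in the DATA step, each input row produces two output rows, one per cancer type.

```python
def stack_wide(leukemia, kidney):
    """Mirror the DATA step: each input row yields two stacked output rows."""
    rows = []
    for leu, kid in zip(leukemia, kidney):
        rows.append({"leu_kid": "Leukemia", "death": leu})  # first OUTPUT
        rows.append({"leu_kid": "Kidney", "death": kid})    # second OUTPUT
    return rows

def values_by_group(rows, group="leu_kid", value="death"):
    """Collect the tested variable by the grouping variable (like a CLASS variable)."""
    groups = {}
    for row in rows:
        groups.setdefault(row[group], []).append(row[value])
    return groups

# Hypothetical death rates for three states (not taken from the Fraumeni data)
rows = stack_wide([6.5, 7.0, 6.9], [2.8, 3.1, 2.5])
groups = values_by_group(rows)
print(len(rows), groups)
```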

4.4 T-test in SPSS

In the T-TEST command, you need to use the GROUPS subcommand to specify a grouping variable. SPSS reports a Levene's F of .0000, which does not reject the null hypothesis of equal variances (p = .995).

T-TEST GROUPS = smoke(0 1)
   /VARIABLES = lung
   /MISSING = ANALYSIS
   /CRITERIA = CI(.95) .

5. Independent Samples with Unequal Variances

If the assumption of equal variances is violated, we have to compute the adjusted t statistic using the individual sample standard deviations rather than a pooled standard deviation. It is also necessary to use the Satterthwaite, Cochran-Cox (SAS), or Welch (STATA) approximations of the degrees of freedom. In this section, you compare mean death rates from kidney cancer between the west (south) and the east (north).

5.1 T-test in STATA

As discussed earlier, let us check the equality of variances using the .oneway command. The tabulate option produces a table of summary statistics for the groups.

. oneway kidney west, tabulate

            |          Summary of kidney
       west |        Mean   Std. Dev.       Freq.
------------+------------------------------------
          0 |       3.006   .3001298           20
          1 |   2.6183333   .59837219          24
------------+------------------------------------
      Total |   2.7945455   .51907993          44

                        Analysis of Variance
    Source              SS         df      MS            F     Prob > F
------------------------------------------------------------------------
Between groups      1.63947758      1   1.63947758      6.92     0.0118
 Within groups      9.94661333     42   .236824127
------------------------------------------------------------------------
    Total           11.5860909     43   .269443975

Bartlett's test for equal variances:  chi2(1) =   8.6506  Prob>chi2 = 0.003

Bartlett’s chi-squared statistic rejects the null hypothesis of equal variances at the .01 level. It is therefore appropriate to use the unequal option in the .ttest command, which calculates Satterthwaite’s approximation for the degrees of freedom.
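Bartlett's statistic can be recomputed from the group sizes and standard deviations alone. The Python sketch below assumes the standard textbook form of Bartlett's test: the pooled within-group variance, which matches the within-groups mean square .236824 above, together with the usual correction factor. The function name bartlett is hypothetical. Only the chi-squared statistic and its degrees of freedom are returned, not the p-value. With the kidney summaries above it should give a value close to STATA's 8.6506.

```python
import math

def bartlett(groups):
    """Bartlett's chi-squared statistic from (n, sd) pairs, one pair per group.

    Returns the statistic and its degrees of freedom (k - 1).
    """
    k = len(groups)
    total_n = sum(n for n, _ in groups)
    # Pooled within-group variance (the within-groups mean square)
    s2_pool = sum((n - 1) * sd**2 for n, sd in groups) / (total_n - k)
    numerator = (total_n - k) * math.log(s2_pool) - sum(
        (n - 1) * math.log(sd**2) for n, sd in groups
    )
    correction = 1 + (sum(1 / (n - 1) for n, _ in groups) - 1 / (total_n - k)) / (3 * (k - 1))
    return numerator / correction, k - 1

# Kidney by west: (n, standard deviation) for west = 0 and west = 1
chi2, df = bartlett([(20, .3001298), (24, .5983722)])
print(f"chi2({df}) = {chi2:.4f}")
```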

Unlike the SAS TTEST procedure, the .ttest command cannot specify a mean difference D0 other than zero. Thus, the null hypothesis is that the mean difference is zero.

. ttest kidney, by(west) unequal level(95)

Two-sample t test with unequal variances
------------------------------------------------------------------------------
   Group |     Obs        Mean    Std. Err.   Std. Dev.   [95% Conf. Interval]
---------+--------------------------------------------------------------------
       0 |      20       3.006    .0671111    .3001298    2.865535    3.146465
       1 |      24    2.618333    .1221422    .5983722    2.365663    2.871004
---------+--------------------------------------------------------------------
combined |      44    2.794545    .0782542    .5190799    2.636731     2.95236
---------+--------------------------------------------------------------------
    diff |            .3876667     .139365                .1047722    .6705611
------------------------------------------------------------------------------
    diff = mean(0) - mean(1)                                      t =   2.7817
Ho: diff = 0                     Satterthwaite's degrees of freedom =  35.1098

    Ha: diff < 0                 Ha: diff != 0                 Ha: diff > 0
 Pr(T < t) = 0.9957         Pr(|T| > |t|) = 0.0086          Pr(T > t) = 0.0043

See Satterthwaite’s approximation of 35.110 in the middle of the output. If you want Welch’s approximation, specify the welch option together with the unequal option; without the unequal option, the welch option is ignored.
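Welch's approximation differs from Satterthwaite's in the denominator, which uses n + 1 instead of n − 1, and in the final subtraction of 2. The Python sketch below assumes that this is the formula behind STATA's welch option (welch_df is a hypothetical helper); with the summary statistics in this section it reproduces the 36.2258 reported below and the 67.0324 of the bladder-kidney comparison later in this section.

```python
def welch_df(n1, s1, n2, s2):
    """Welch's approximation of the degrees of freedom for the
    unequal-variance t-test, from group sizes and standard deviations."""
    v1 = s1 ** 2 / n1   # squared standard error of mean 1
    v2 = s2 ** 2 / n2   # squared standard error of mean 2
    return (v1 + v2) ** 2 / (v1 ** 2 / (n1 + 1) + v2 ** 2 / (n2 + 1)) - 2

print(round(welch_df(20, .3001298, 24, .5983722), 4))   # kidney: west=0 vs west=1
print(round(welch_df(44, .9649249, 44, .5190799), 4))   # bladder vs kidney
```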

. ttest kidney, by(west) unequal welch

Two-sample t test with unequal variances
------------------------------------------------------------------------------
   Group |     Obs        Mean    Std. Err.   Std. Dev.   [95% Conf. Interval]
---------+--------------------------------------------------------------------
       0 |      20       3.006    .0671111    .3001298    2.865535    3.146465
       1 |      24    2.618333    .1221422    .5983722    2.365663    2.871004
---------+--------------------------------------------------------------------
combined |      44    2.794545    .0782542    .5190799    2.636731     2.95236
---------+--------------------------------------------------------------------
    diff |            .3876667     .139365                .1050824    .6702509
------------------------------------------------------------------------------
    diff = mean(0) - mean(1)                                      t =   2.7817
Ho: diff = 0                             Welch's degrees of freedom =  36.2258

    Ha: diff < 0                 Ha: diff != 0                 Ha: diff > 0
 Pr(T < t) = 0.9957         Pr(|T| > |t|) = 0.0085          Pr(T > t) = 0.0043

Satterthwaite’s approximation (35.1098) is slightly smaller than Welch’s (36.2258). Again, keep in mind that these approximations are real numbers, not integers. The t statistic of 2.7817 and its p-value of .0086 reject the null hypothesis of equal population means. The North, East, and Midwest (west=0) have higher death rates from kidney cancer per 100 thousand people than the South and West (west=1).

For aggregate data, use the .ttesti command with the necessary options.

. ttesti 20 3.006 .3001298 24 2.618333 .5983722, unequal welch

As mentioned earlier, the unpaired option of the .ttest command directly compares two

variables without data manipulation. The option treats the two variables as independent of each

other. The following is an example of the unpaired and unequal options.

. ttest bladder=kidney, unpaired unequal welch

Two-sample t test with unequal variances
------------------------------------------------------------------------------
Variable |     Obs        Mean    Std. Err.   Std. Dev.   [95% Conf. Interval]
---------+--------------------------------------------------------------------
 bladder |      44    4.121136    .1454679    .9649249    3.827772      4.4145
  kidney |      44    2.794545    .0782542    .5190799    2.636731     2.95236
---------+--------------------------------------------------------------------
combined |      88    3.457841    .1086268    1.019009    3.241933    3.673748
---------+--------------------------------------------------------------------
    diff |            1.326591    .1651806                .9968919     1.65629
------------------------------------------------------------------------------
    diff = mean(bladder) - mean(kidney)                           t =   8.0312
Ho: diff = 0                             Welch's degrees of freedom =  67.0324

    Ha: diff < 0                 Ha: diff != 0                 Ha: diff > 0
 Pr(T < t) = 1.0000         Pr(|T| > |t|) = 0.0000          Pr(T > t) = 0.0000

The F of 3.4556 = (.9649249^2)/(.5190799^2) rejects the null hypothesis of equal variance (p < .0001). If the welch option is omitted, Satterthwaite's approximation of 65.9643 degrees of freedom will be produced instead.
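The folded form F places the larger sample variance in the numerator, so the statistic is never smaller than 1. A minimal Python sketch (folded_f is a hypothetical helper) that returns the statistic with its numerator and denominator degrees of freedom, applied to the two comparisons in this section:

```python
def folded_f(s1, n1, s2, n2):
    """Folded form F: larger sample variance over smaller sample variance,
    returned with (numerator df, denominator df)."""
    if s1 >= s2:
        return s1 ** 2 / s2 ** 2, n1 - 1, n2 - 1
    return s2 ** 2 / s1 ** 2, n2 - 1, n1 - 1

print(folded_f(.9649249, 44, .5190799, 44))   # bladder vs kidney
print(folded_f(.3001298, 20, .5983722, 24))   # kidney: west=0 vs west=1
```

The second call yields 23 numerator and 19 denominator degrees of freedom, the same ordering SAS uses in its Equality of Variances table.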

For aggregate data, again, use the .ttesti command without the unpaired option.

. ttesti 44 4.121136 .9649249 44 2.794545 .5190799, unequal welch level(95)

5.2 T-test in SAS

The TTEST procedure reports statistics for both the equal and the unequal variance cases. You may add the COCHRAN option to compute the Cochran-Cox approximation of the degrees of freedom.

PROC TTEST COCHRAN DATA=masil.smoking;

CLASS west;

VAR kidney;

RUN;

                             The TTEST Procedure

                                  Statistics

                                 Lower CL            Upper CL  Lower CL             Upper CL
Variable  west            N          Mean    Mean        Mean   Std Dev   Std Dev    Std Dev
kidney    0              20        2.8655   3.006      3.1465    0.2282    0.3001     0.4384
kidney    1              24        2.3657  2.6183       2.871    0.4651    0.5984     0.8394
kidney    Diff (1-2)               0.0903  0.3877       0.685    0.4013    0.4866     0.6185

                                  Statistics

Variable  west           Std Err    Minimum    Maximum
kidney    0               0.0671       2.34       3.62
kidney    1               0.1221       1.59       4.32
kidney    Diff (1-2)      0.1473

                                   T-Tests

Variable    Method           Variances      DF    t Value    Pr > |t|
kidney      Pooled           Equal          42       2.63      0.0118
kidney      Satterthwaite    Unequal      35.1       2.78      0.0086
kidney      Cochran          Unequal         .       2.78      0.0109

                            Equality of Variances

Variable    Method      Num DF    Den DF    F Value    Pr > F
kidney      Folded F        23        19       3.97    0.0034

The F of 3.9749 = (.5983722^2)/(.3001298^2) and its p-value of .0034 reject the null hypothesis of equal variances. Thus, the individual sample standard deviations need to be used to compute the adjusted t, and either Satterthwaite’s or the Cochran-Cox approximation should be used in computing the p-value. See the following computations.

$$t' = \frac{3.006 - 2.6183}{\sqrt{\frac{.3001^2}{20} + \frac{.5984^2}{24}}} = 2.78187,$$

$$c = \frac{s_1^2/n_1}{s_1^2/n_1 + s_2^2/n_2} = \frac{.3001^2/20}{.3001^2/20 + .5984^2/24} = .2318, \text{ and}$$

$$df_{Satterthwaite} = \frac{(n_1 - 1)(n_2 - 1)}{(n_1 - 1)(1 - c)^2 + (n_2 - 1)c^2} = \frac{(20 - 1)(24 - 1)}{(20 - 1)(1 - .2318)^2 + (24 - 1)(.2318)^2} = 35.1071$$

The t statistic of 2.78 rejects the null hypothesis of no difference in mean death rates between the two regions (p = .0086).
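The expression in terms of c is algebraically identical to the usual Satterthwaite formula. This Python sketch (satterthwaite_via_c is a hypothetical name) evaluates it twice: with the rounded standard deviations used in the hand computation above, which gives 35.1071, and with the unrounded ones, which matches STATA's 35.1098.

```python
def satterthwaite_via_c(n1, s1, n2, s2):
    """Satterthwaite's df expressed with c = (s1^2/n1) / (s1^2/n1 + s2^2/n2)."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2
    c = v1 / (v1 + v2)
    df = (n1 - 1) * (n2 - 1) / ((n1 - 1) * (1 - c) ** 2 + (n2 - 1) * c ** 2)
    return c, df

c_r, df_r = satterthwaite_via_c(20, .3001, 24, .5984)      # rounded SDs, as in the hand computation
c, df = satterthwaite_via_c(20, .3001298, 24, .5983722)    # unrounded SDs
print(round(c_r, 4), round(df_r, 4), round(c, 4), round(df, 4))
```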

Now, let us compare death rates from bladder and kidney cancers using SAS.

DATA masil.smoking3;

SET masil.smoking;

death = bladder; bla_kid = 'Bladder'; OUTPUT;

death = kidney;  bla_kid = 'Kidney';  OUTPUT;

KEEP bla_kid death;

RUN;

PROC TTEST COCHRAN DATA=masil.smoking3; CLASS bla_kid; VAR death; RUN;

                             The TTEST Procedure

                                  Statistics

                                 Lower CL            Upper CL  Lower CL             Upper CL
Variable  bla_kid         N          Mean    Mean        Mean   Std Dev   Std Dev    Std Dev   Std Err
death     Bladder        44        3.8278  4.1211      4.4145    0.7972    0.9649     1.2226    0.1455
death     Kidney         44        2.6367  2.7945      2.9524    0.4289    0.5191     0.6577    0.0783
death     Diff (1-2)               0.9982  1.3266       1.655    0.6743    0.7748     0.9107    0.1652

                                   T-Tests

Variable    Method           Variances      DF    t Value    Pr > |t|
death       Pooled           Equal          86       8.03      <.0001
death       Satterthwaite    Unequal        66       8.03      <.0001
death       Cochran          Unequal        43       8.03      <.0001

                            Equality of Variances

Variable    Method      Num DF    Den DF    F Value    Pr > F
death       Folded F        43        43       3.46    <.0001

Fortunately, in this case the t-tests under equal and unequal variances lead to the same conclusion at the .01 level; that is, the mean death rates from bladder and kidney cancers are not the same.

5.3 T-test in SPSS

Like SAS, SPSS also reports t statistics for cases of both equal and unequal variance. Note that

Levene's F 5.466 rejects the null hypothesis of equal variance at the .05 level (p<.024).

T-TEST GROUPS = west(0 1)

/VARIABLES = kidney

/MISSING = ANALYSIS

/CRITERIA = CI(.95) .


6. One-way ANOVA, GLM, and Regression

The t-test is a special case of one-way ANOVA. Thus, one-way ANOVA produces results equivalent to those of the t-test. ANOVA examines mean differences using the F statistic, whereas the t-test reports the t statistic. One-way ANOVA (t-test), GLM, and linear regression present essentially the same information in different ways.
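The equivalence can be checked from group summary statistics. This Python sketch (oneway_anova is a hypothetical helper) reuses the kidney-by-west summary statistics from section 5.1, because the group standard deviations of lung by smoke are not reported here. It should reproduce the between- and within-group sums of squares and the F of 6.92 in the STATA .oneway output, and √F should equal the pooled t of 2.63 reported by SAS.

```python
import math

def oneway_anova(groups):
    """One-way ANOVA from (n, mean, sd) triples.
    Returns (SS_between, SS_within, df_between, df_within, F)."""
    k = len(groups)
    N = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / N
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * s ** 2 for n, _, s in groups)
    df_between, df_within = k - 1, N - k
    F = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, ss_within, df_between, df_within, F

ss_b, ss_w, df_b, df_w, F = oneway_anova([(20, 3.006, .3001298), (24, 2.6183333, .59837219)])
t_pooled = math.sqrt(F)   # with two groups, F = t^2 for the equal-variance t-test
print(round(ss_b, 4), round(ss_w, 4), df_b, df_w, round(F, 2), round(t_pooled, 2))
```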

6.1 One-way ANOVA

Consider the following SAS ANOVA procedure. The CLASS statement specifies categorical variables. The MODEL statement lists the variable to be compared and the grouping variable, separated by an equal sign.

PROC ANOVA DATA=masil.smoking;

CLASS smoke;

MODEL lung=smoke;

RUN;

The ANOVA Procedure

Dependent Variable: lung

                                   Sum of
Source                    DF      Squares    Mean Square   F Value   Pr > F
Model                      1  313.0311273    313.0311273     28.85   <.0001
Error                     42  455.6804273     10.8495340
Corrected Total           43  768.7115545

R-Square     Coeff Var      Root MSE     lung Mean
0.407215      16.75995      3.293863      19.65318

Source                    DF     Anova SS    Mean Square   F Value   Pr > F
smoke                      1  313.0311273    313.0311273     28.85   <.0001

The STATA .anova and .oneway commands also conduct one-way ANOVA.

. anova lung smoke

                           Number of obs =      44     R-squared     =  0.4072
                           Root MSE      = 3.29386     Adj R-squared =  0.3931

                  Source |  Partial SS    df       MS           F     Prob > F
              -----------+----------------------------------------------------
                   Model |  313.031127     1  313.031127      28.85     0.0000
                         |
                   smoke |  313.031127     1  313.031127      28.85     0.0000
                         |
                Residual |  455.680427    42   10.849534
              -----------+----------------------------------------------------
                   Total |  768.711555    43  17.8770129

In SPSS, the ONEWAY command is used.

ONEWAY lung BY smoke

/MISSING ANALYSIS .

6.2 General Linear Model (GLM)

The SAS GLM and MIXED procedures and the SPSS UNIANOVA command also report the F

statistic for one-way ANOVA. Note that STATA’s .glm command does not perform one-way

ANOVA.

PROC GLM DATA=masil.smoking;

CLASS smoke;

MODEL lung=smoke /SS3;

RUN;

The GLM Procedure

Dependent Variable: lung

                                   Sum of
Source                    DF      Squares    Mean Square   F Value   Pr > F
Model                      1  313.0311273    313.0311273     28.85   <.0001
Error                     42  455.6804273     10.8495340
Corrected Total           43  768.7115545

R-Square     Coeff Var      Root MSE     lung Mean
0.407215      16.75995      3.293863      19.65318

Source                    DF  Type III SS    Mean Square   F Value   Pr > F
smoke                      1  313.0311273    313.0311273     28.85   <.0001

The MIXED procedure is used in much the same way as the GLM procedure. Its output is omitted here.

PROC MIXED DATA=masil.smoking; CLASS smoke; MODEL lung=smoke; RUN;

In SPSS, the UNIANOVA command estimates univariate ANOVA models using the GLM

method.

UNIANOVA lung BY smoke

/METHOD = SSTYPE(3)

/INTERCEPT = INCLUDE

/CRITERIA = ALPHA(.05)

/DESIGN = smoke .

6.3 Regression


The SAS REG procedure, STATA .regress command, and SPSS REGRESSION command

estimate linear regression models.

PROC REG DATA=masil.smoking;

MODEL lung=smoke;

RUN;

The REG Procedure
Model: MODEL1
Dependent Variable: lung

Number of Observations Read          44
Number of Observations Used          44

                            Analysis of Variance

                                    Sum of          Mean
Source                   DF        Squares        Square    F Value    Pr > F
Model                     1      313.03113     313.03113      28.85    <.0001
Error                    42      455.68043      10.84953
Corrected Total          43      768.71155

Root MSE              3.29386    R-Square     0.4072
Dependent Mean       19.65318    Adj R-Sq     0.3931
Coeff Var            16.75995

                        Parameter Estimates

                     Parameter       Standard
Variable     DF       Estimate          Error    t Value    Pr > |t|
Intercept     1       16.98591        0.70225      24.19      <.0001
smoke         1        5.33455        0.99314       5.37      <.0001

Look at the results above. The intercept, 16.9859, is the mean of the first group (smoke=0). The coefficient of smoke, 5.33455, is the mean difference between the two groups (22.3205 − 16.9859); that is, it is the t-test difference, mean(0) − mean(1), with its sign reversed. Finally, the standard error of the coefficient is the denominator of the independent sample t-test,

$$.99314 = s_{pool}\sqrt{\frac{1}{n_1} + \frac{1}{n_2}} = 3.2939\sqrt{\frac{1}{22} + \frac{1}{22}},$$

where the pooled variance estimate is 10.8497 = 3.2939^2 (see pages 11 and 13). Thus, the t of 5.37 is identical in magnitude to the t statistic of the independent sample t-test with equal variance.
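Why a 0/1 regressor turns the intercept into a group mean and the slope into a mean difference can be seen with a tiny example. The data below are hypothetical (three observations per group), and ols_simple is an illustrative helper that applies the ordinary least-squares formulas for one regressor.

```python
def ols_simple(x, y):
    """Least-squares intercept and slope for a single regressor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope

# Hypothetical data: x = 0 for the first group, 1 for the second
y0 = [1.0, 2.0, 3.0]
y1 = [4.0, 6.0, 8.0]
x = [0] * len(y0) + [1] * len(y1)
y = y0 + y1

intercept, slope = ols_simple(x, y)
mean0, mean1 = sum(y0) / len(y0), sum(y1) / len(y1)
print(intercept, mean0)         # intercept equals the mean of the x = 0 group
print(slope, mean1 - mean0)     # slope equals mean(1) - mean(0)
```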

The STATA .regress command is quite simple. Note that the dependent variable precedes the list of independent variables.

. regress lung smoke

      Source |       SS       df       MS              Number of obs =      44
-------------+------------------------------           F(  1,    42) =   28.85
       Model |  313.031127     1  313.031127           Prob > F      =  0.0000
    Residual |  455.680427    42   10.849534           R-squared     =  0.4072
-------------+------------------------------           Adj R-squared =  0.3931
       Total |  768.711555    43  17.8770129           Root MSE      =  3.2939

------------------------------------------------------------------------------
        lung |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
-------------+----------------------------------------------------------------
       smoke |   5.334545   .9931371     5.37   0.000     3.330314    7.338777
       _cons |   16.98591    .702254    24.19   0.000      15.5687    18.40311
------------------------------------------------------------------------------

The SPSS REGRESSION command looks complicated compared to the SAS REG procedure

and STATA .regress command.

REGRESSION

/MISSING LISTWISE

/STATISTICS COEFF OUTS R ANOVA

/CRITERIA=PIN(.05) POUT(.10)

/NOORIGIN

/DEPENDENT lung

/METHOD=ENTER smoke.

Note that ANOVA, GLM, and regression report the same F(1, 42) of 28.85, which is equivalent to the t(42) of -5.3714. As long as the numerator has one degree of freedom, F is always t^2 (28.85 = (-5.3714)^2).
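The relationship can be checked with the numbers in the output above. This Python sketch assumes equal group sizes of 22 (as in the cross-tabulation in the Appendix) and uses the residual mean square as the pooled variance; it rebuilds the standard error of the smoke coefficient, its t, and F = t^2, and compares F with the Model MS divided by the Residual MS.

```python
import math

mse = 10.849534        # Residual MS = pooled variance estimate
diff = 5.334545        # coefficient of smoke = mean(1) - mean(0)
n1 = n2 = 22           # group sizes

se = math.sqrt(mse * (1 / n1 + 1 / n2))   # denominator of the pooled t-test
t = diff / se
F = t ** 2                                 # should equal Model MS / Residual MS
print(round(se, 6), round(t, 4), round(F, 2), round(313.031127 / mse, 2))
```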


7. Conclusion

The t-test is a basic statistical method for examining the mean difference between two groups.

One-way ANOVA can compare means of more than two groups. The number of observations

in individual groups does not matter in the t-test or one-way ANOVA; both balanced and

unbalanced data are fine. One-way ANOVA, GLM, and linear regression models all use the

variance-covariance structure in their analysis, but present the results in different ways.

Researchers must check four issues when performing t-tests. First, a variable to be tested

should be interval or ratio so that its mean is substantively meaningful. Do not, for example,

run a t-test to compare the mean of skin colors (white=0, yellow=1, black=2) between two

countries. If you have a latent variable measured by several Likert-scaled manifest variables,

first run a factor analysis to get that latent variable.

Second, examine the normality assumption before conducting a t-test. It is awkward to compare means of variables that are not normally distributed. Figure 2 illustrates a normal probability distribution on top and a Poisson distribution skewed to the right on the bottom. Although both distributions have a mean and variance of 1, their shapes differ so much that a comparison of their means is not likely to be substantively interpretable. This is the rationale for conducting normality tests such as the Shapiro-Wilk W, Shapiro-Francia W, and Kolmogorov-Smirnov D statistics. If the normality assumption is violated, consider nonparametric methods.

Figure 2. Comparing Normal and Poisson Probability Distributions (σ² = 1 and µ = 1)
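A normal distribution is symmetric (skewness 0), whereas a Poisson distribution with mean µ has skewness 1/√µ. The following Python sketch, an illustration rather than a normality test, sums the Poisson(1) probability mass function to show that it matches the normal distribution in Figure 2 on mean and variance but not on skewness; truncating the sum at 100 counts is an assumption that is ample for small means.

```python
import math

def poisson_moments(mu, kmax=100):
    """Mean, variance, and skewness of a Poisson(mu) distribution,
    computed by summing its probability mass function over 0..kmax."""
    pmf = [math.exp(-mu) * mu ** k / math.factorial(k) for k in range(kmax + 1)]
    mean = sum(k * p for k, p in enumerate(pmf))
    var = sum((k - mean) ** 2 * p for k, p in enumerate(pmf))
    skew = sum((k - mean) ** 3 * p for k, p in enumerate(pmf)) / var ** 1.5
    return mean, var, skew

mean, var, skew = poisson_moments(1.0)
print(round(mean, 6), round(var, 6), round(skew, 6))
```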


Third, check the equal variance assumption. Be careful when comparing means of normally distributed variables with different variances. You may conduct the folded form F test. If the equal variance assumption is violated, compute the adjusted t and an approximation of the degrees of freedom.

Finally, consider the types of t-tests, data arrangements, and functionalities available in each statistical software package (e.g., STATA, SAS, and SPSS) to determine the best strategy for data analysis (Table 3). The first data arrangement in Figure 1 is commonly used for independent sample t-tests, whereas the second arrangement is appropriate for a paired sample test. Keep in mind that data sets in the second arrangement (type II) in Figure 1 need to be reshaped into the first arrangement (type I) in SAS and SPSS.

Table 3. Comparison of T-test Functionalities of STATA, SAS and SPSS

                           STATA 9.0                 SAS 9.1                   SPSS 13.0
Test for equal variance    Bartlett’s chi-squared    Folded form F             Levene’s weighted F
                           (.oneway command)         (TTEST procedure)         (T-TEST command)
Approximation of the       Satterthwaite’s DF        Satterthwaite’s DF        Satterthwaite’s DF
degrees of freedom (DF)    Welch’s DF                Cochran-Cox DF
Second data arrangement    var1=var2                 Reshaping the data set    Reshaping the data set
Aggregate data             .ttesti command           FREQ option               N/A

SAS has several procedures (e.g., TTEST, MEANS, and UNIVARIATE) and useful options for

t-tests. The STATA .ttest and .ttesti commands provide very flexible ways of handling

different data arrangements and aggregate data. Table 4 summarizes usages of options in these

two commands.

Table 4. Summary of the Usages of the .ttest and .ttesti Command Options

               by(group var)   unequal   welch   unpaired*   Usage
var=c                                                        Univariate sample
var1=var2                                                    Paired (dependent) sample
var            O                                             Equal variance (1 variable)
var1=var2**                                      O           Equal variance (2 variables)
var            O               O         O                   Unequal variance (1 variable)
var1=var2                      O         O       O           Unequal variance (2 variables)

* The .ttesti command does not allow the unpaired option.

** The “var1=var2” assumes the second type of data arrangement in Figure 1.

Appendix: Data Set

Literature: Fraumeni, J. F. 1968. "Cigarette Smoking and Cancers of the Urinary Tract:

Geographic Variations in the United States," Journal of the National Cancer Institute, 41(5):

1205-1211.

Data Source: http://lib.stat.cmu.edu

The data are per capita numbers of cigarettes smoked (sold) in 43 states and the District of Columbia in 1960, together with death rates per 100 thousand people from various forms of cancer. The variables used in this document are:

cigar = number of cigarettes smoked (hds per capita)

bladder = deaths per 100k people from bladder cancer

lung = deaths per 100k people from lung cancer

kidney = deaths per 100k people from kidney cancer

leukemia = deaths per 100k people from leukemia

smoke = 1 for those whose cigarette consumption is larger than the median and 0 otherwise.

west = 1 for states in the South or West and 0 for those in the North, East or Midwest.

The following are summary statistics and normality tests of these variables.

. sum cigar-leukemia

    Variable |       Obs        Mean    Std. Dev.       Min        Max
-------------+--------------------------------------------------------
       cigar |        44    24.91409    5.573286         14       42.4
     bladder |        44    4.121136    .9649249       2.86       6.54
        lung |        44    19.65318    4.228122      12.01      27.27
      kidney |        44    2.794545    .5190799       1.59       4.32
    leukemia |        44    6.829773    .6382589        4.9       8.28

. sfrancia cigar-leukemia

                  Shapiro-Francia W' test for normal data

    Variable |    Obs       W'          V'        z       Prob>z
-------------+-------------------------------------------------
       cigar |     44    0.93061      3.258     2.203    0.01381
     bladder |     44    0.94512      2.577     1.776    0.03789
        lung |     44    0.97809      1.029     0.055    0.47823
      kidney |     44    0.97732      1.065     0.120    0.45217
    leukemia |     44    0.97269      1.282     0.474    0.31759

. tab west smoke

           |        smoke
      west |         0          1 |     Total
-----------+----------------------+----------
         0 |         7         13 |        20
         1 |        15          9 |        24
-----------+----------------------+----------
     Total |        22         22 |        44

References

Fraumeni, J. F. 1968. "Cigarette Smoking and Cancers of the Urinary Tract: Geographic Variations in the United States," Journal of the National Cancer Institute, 41(5): 1205-1211.

Ott, R. Lyman. 1993. An Introduction to Statistical Methods and Data Analysis. Belmont, CA:

Duxbury Press.

SAS Institute. 2005. SAS/STAT User's Guide, Version 9.1. Cary, NC: SAS Institute.

SPSS. 2001. SPSS 11.0 Syntax Reference Guide. Chicago, IL: SPSS Inc.

STATA Press. 2005. STATA Reference Manual Release 9. College Station, TX: STATA Press.

Walker, Glenn A. 2002. Common Statistical Methods for Clinical Research with SAS

Examples. Cary, NC: SAS Institute.

Acknowledgements

I am grateful to Jeremy Albright, Takuya Noguchi, and Kevin Wilhite at the UITS Center for

Statistical and Mathematical Computing, Indiana University, who provided valuable comments

and suggestions.

Revision History

• 2003. First draft

• 2004. Second draft

• 2005. Third draft (Added data arrangements and conclusion).


© 2003-2005, The Trustees of Indiana University

Regression Models for Event Count Data: 1

Regression Models for Event Count Data

Using SAS, STATA, and LIMDEP

Hun Myoung Park

This document summarizes regression models for event count data and illustrates how to

estimate individual models using SAS, STATA, and LIMDEP. Example models were tested in SAS

9.1, STATA 9.0, and LIMDEP 8.0.

1. Introduction

2. The Poisson Regression Model (PRM)

3. The Negative Binomial Regression Model (NBRM)

4. The Zero-Inflated Poisson Regression Model (ZIP)

5. The Zero-Inflated Negative Binomial Regression Model (ZINB)

6. Conclusion

7. Appendix

1. Introduction

An event count is the realization of a nonnegative integer-valued random variable (Cameron and

Trivedi 1998). Examples are the number of car accidents per month, thunderstorms per year, and

wild fires per year. The ordinary least squares (OLS) method for event count data results in

biased, inefficient, and inconsistent estimates (Long 1997). Thus, researchers have developed

various nonlinear models that are based on the Poisson distribution and negative binomial

distribution.

1.1 Count Data Regression Models

The left-hand side (LHS) of the equation has event count data. Independent variables are, as in

the OLS, located at the right-hand side (RHS). These RHS variables may be interval, ratio, or

binary (dummy). Table 1 below summarizes the categorical dependent variable regression

models (CDVMs) according to the level of measurement of the dependent variable.

Table 1. Ordinary Least Squares and CDVMs

Model                            Dependent (LHS)             Method                 Independent (RHS)
OLS    Ordinary least squares    Interval or ratio           Moment based method    A linear function of
CDVMs  Binary response           Binary (0 or 1)             Maximum likelihood     interval/ratio or binary
       Ordinal response          Ordinal (1st, 2nd, 3rd…)    method                 variables,
       Nominal response          Nominal (A, B, C …)                                β0 + β1X1 + β2X2 ...
       Event count data          Count (0, 1, 2, 3…)

The Poisson regression model (PRM) and negative binomial regression model (NBRM) are basic

models for count data analysis. Either the zero-inflated Poisson (ZIP) or the zero-inflated


negative binomial regression model (ZINB) is used when there are many zero counts. Other

count models are developed to handle censored, truncated, or sample selected count data. This

document, however, focuses on the PRM, NBRM, ZIP, and ZINB.

1.2 Poisson Models versus Negative Binomial Models

The Poisson probability distribution, $P(y \mid \mu) = \dfrac{e^{-\mu}\mu^{y}}{y!}$, has the same mean and variance (equidispersion), $Var(y) = E(y) = \mu$. As the mean of a Poisson distribution increases, the probability of zeros decreases and the distribution approximates a normal distribution (Figure 1). The Poisson distribution also rests on the strong assumption that events are independent. Thus, this distribution does not fit well if $\mu$ differs across observations (heterogeneity) (Long 1997).

The Poisson regression model (PRM) incorporates observed heterogeneity into the Poisson distribution function, $Var(y_i \mid x_i) = E(y_i \mid x_i) = \mu_i = \exp(x_i\beta)$. As $\mu$ increases, the conditional variance of y increases, the proportion of predicted zeros decreases, and the distribution around the expected value becomes approximately normal (Long 1997). The conditional mean of the errors is zero, but the variance of the errors is a function of the independent variables, $Var(\varepsilon \mid x) = \exp(x\beta)$. The errors are heteroscedastic. Thus, the PRM rarely fits in practice due to overdispersion (Long 1997; Maddala 1983).

Figure 1. Poisson Probability Distribution with Means of .5, 1, 2, and 5
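The behavior described above can be checked numerically. This Python sketch evaluates the Poisson probability mass function for the four means plotted in Figure 1 and shows that the probability of a zero count, e^(−µ), falls as the mean rises; poisson_pmf is an illustrative helper.

```python
import math

def poisson_pmf(y, mu):
    """Poisson probability P(y | mu) = exp(-mu) * mu**y / y!."""
    return math.exp(-mu) * mu ** y / math.factorial(y)

means = (0.5, 1, 2, 5)                      # the means plotted in Figure 1
p_zero = [poisson_pmf(0, mu) for mu in means]
for mu, p in zip(means, p_zero):
    print(f"mean = {mu}: P(y = 0) = {p:.4f}")
```

Summing y·P(y) and (y − µ)²·P(y) over the same function confirms equidispersion: both sums equal µ.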

The negative binomial probability distribution is

$$P(y_i \mid x_i) = \frac{\Gamma(y_i + v_i)}{y_i!\,\Gamma(v_i)} \left(\frac{v_i}{v_i + \mu_i}\right)^{v_i} \left(\frac{\mu_i}{v_i + \mu_i}\right)^{y_i},$$

where $1/v = \alpha$ determines the degree of dispersion and $\Gamma$ is the gamma function. As the dispersion parameter $\alpha$ increases, the variance of the negative binomial distribution also increases, $Var(y_i \mid x_i) = \mu_i(1 + \mu_i/v_i)$. The negative binomial regression model (NBRM) incorporates observed and unobserved heterogeneity into the conditional mean,


$\mu_i = \exp(x_i\beta + \varepsilon_i)$ (Long 1997). Thus, the conditional variance of y becomes larger than its conditional mean, $E(y_i \mid x_i) = \mu_i$, which remains unchanged. Figure 2 illustrates how the probabilities for small and larger counts increase in the negative binomial distribution as the conditional variance of y increases, given $\mu = 3$.

Figure 2. Negative Binomial Probability Distribution with Alpha of .01, .5, 1, and 5
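The probabilities plotted in Figure 2 can be computed directly from the negative binomial formula above. This Python sketch evaluates the gamma functions on the log scale with math.lgamma (to avoid overflow), fixes µ = 3 as in Figure 2, and checks that the mean stays at µ while the variance equals µ(1 + αµ); truncating at 2,000 counts is an assumption that is ample for these parameter values, and nb_pmf and nb_moments are illustrative helpers.

```python
import math

def nb_pmf(y, mu, alpha):
    """Negative binomial P(y | mu) with dispersion alpha = 1 / v,
    evaluated on the log scale to avoid overflow in the gamma functions."""
    v = 1.0 / alpha
    log_p = (math.lgamma(y + v) - math.lgamma(y + 1) - math.lgamma(v)
             + v * math.log(v / (v + mu)) + y * math.log(mu / (v + mu)))
    return math.exp(log_p)

def nb_moments(mu, alpha, ymax=2000):
    """Total probability, mean, and variance over counts 0..ymax-1."""
    probs = [nb_pmf(y, mu, alpha) for y in range(ymax)]
    total = sum(probs)
    mean = sum(y * p for y, p in enumerate(probs))
    var = sum((y - mean) ** 2 * p for y, p in enumerate(probs))
    return total, mean, var

mu = 3.0                                  # as in Figure 2
for alpha in (0.01, 0.5, 1.0, 5.0):       # the alphas plotted in Figure 2
    total, mean, var = nb_moments(mu, alpha)
    print(f"alpha={alpha}: P(0)={nb_pmf(0, mu, alpha):.4f}, mean={mean:.4f}, "
          f"var={var:.4f}, mu*(1+alpha*mu)={mu * (1 + alpha * mu):.4f}")
```

As α grows, the probability of a zero count rises while the mean stays at 3, which is the pattern Figure 2 depicts.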

The PRM and NBRM, however, have the same mean structure. If α = 0 , the NBRM reduces to

the PRM (Cameron and Trivedi 1998; Long 1997).

1.3 Overdispersion

When $Var(y_i \mid x_i) > E(y_i \mid x_i)$, the data are said to be overdispersed. Estimates of a PRM for overdispersed data are unbiased, but inefficient, with standard errors biased downward (Cameron and Trivedi 1998; Long 1997). A likelihood ratio test examines the null hypothesis of no overdispersion, $H_0: \alpha = 0$. The likelihood ratio follows the chi-squared distribution with one degree of freedom, $LR = 2(\ln L_{NB} - \ln L_{Poisson}) \sim \chi^2(1)$. If the null hypothesis is rejected, the NBRM is preferred to the PRM.
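The test needs only the two log likelihoods. For one degree of freedom the chi-squared upper tail can be written with the complementary error function, P(χ² > x) = erfc(√(x/2)), so this Python sketch uses only the standard library. The log likelihoods in the example are hypothetical. Because α cannot be negative, H0 lies on the boundary of the parameter space; some software (STATA's .nbreg, for example, which reports chibar2(01)) therefore halves the p-value computed here.

```python
import math

def lr_test_1df(lnl_restricted, lnl_unrestricted):
    """Likelihood ratio statistic and its p-value from a chi-squared
    distribution with one degree of freedom."""
    lr = 2.0 * (lnl_unrestricted - lnl_restricted)
    # For 1 df: P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2))
    p = math.erfc(math.sqrt(lr / 2.0))
    return lr, p

# Hypothetical log likelihoods: PRM (restricted) and NBRM (unrestricted)
lr, p = lr_test_1df(lnl_restricted=-1000.0, lnl_unrestricted=-990.0)
print(lr, p)
```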

Zero-inflated models handle overdispersion by changing the mean structure to explicitly model the production of zero counts (Long 1997). These models assume two latent groups: an always-zero group and a not-always-zero (sometimes-zero) group. Zero counts thus come from the former group and, with a certain probability, from the latter group.

The likelihood ratio, $LR = 2(\ln L_{ZINB} - \ln L_{ZIP}) \sim \chi^2(1)$, tests $H_0: \alpha = 0$ to compare the ZIP and ZINB. The PRM and ZIP, as well as the NBRM and ZINB, cannot, however, be compared by this likelihood ratio, since neither pair is nested. Vuong's statistic compares these non-nested models. If V is greater than 1.96, the ZIP or ZINB is favored. If V is less than -1.96, the PRM or NBRM is preferred (Long 1997).
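Vuong's statistic is built from the per-observation difference in log likelihoods, m_i = ln P1(y_i | x_i) − ln P2(y_i | x_i), as V = √N · mean(m) / sd(m). This Python sketch assumes the sample standard deviation (n − 1 divisor) and uses hypothetical log likelihoods for four observations, with model 1 the zero-inflated model so that a large positive V favors it.

```python
import math

def vuong(loglik_1, loglik_2):
    """Vuong's V for two non-nested models fitted to the same observations.
    loglik_1, loglik_2: per-observation log likelihoods under models 1 and 2.
    V > 1.96 favors model 1; V < -1.96 favors model 2."""
    m = [a - b for a, b in zip(loglik_1, loglik_2)]
    n = len(m)
    mean = sum(m) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in m) / (n - 1))   # sample SD (n - 1 divisor)
    return math.sqrt(n) * mean / sd

# Hypothetical per-observation log likelihoods: model 1 = ZIP, model 2 = PRM
ll_zip = [-1.0, -1.4, -1.2, -1.6]
ll_prm = [-1.5, -1.5, -1.5, -1.5]
print(round(vuong(ll_zip, ll_prm), 4))
```

Here V is positive but below 1.96, so neither model would be favored; swapping the arguments only flips the sign.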


1.4 Estimation in SAS, STATA, and LIMDEP

The SAS GENMOD procedure estimates Poisson and negative binomial regression models.

STATA has individual commands (e.g., .poisson and .nbreg) for the corresponding count data

models. LIMDEP has Poisson$ and Negbin$ commands to estimate various count data models

including zero-inflated and zero-truncated models. Table 2 summarizes the procedures and

commands for count data regression models.

Table 2. Comparison of the Procedures and Commands for Count Data Models

Model                                     SAS 9.1    STATA 9.0    LIMDEP 8.0
Poisson Regression (PRM)                  GENMOD     .poisson     Poisson$
Negative Binomial Regression (NBRM)       GENMOD     .nbreg       Negbin$
Zero-Inflated Poisson (ZIP)               -          .zip         Poisson; Zip; Rh2$
Zero-inflated Negative Binomial (ZINB)    -          .zinb        Negbin; Zip; Rh2$
Zero-truncated Poisson (ZTP)              -          .ztp         Poisson; Truncation$
Zero-truncated Negative Binomial (ZTNB)   -          .ztnb        Negbin; Truncation$

The example here examines how waste quotas (emps) and the strictness of policy implementation

(strict) affect the frequency of waste spill accidents of plants (accident).

1.5 Long and Freese’s SPost Module

STATA users may take advantage of user-written modules such as SPost, written by J. Scott Long and Jeremy Freese. The module allows researchers to conduct follow-up analyses of various CDVMs, including event count data models. See section 2.3 for examples of major SPost commands.

To install SPost, execute the following commands consecutively. For more details, visit J. Scott Long’s Web site at http://www.indiana.edu/~jslsoc/spost_install.htm.

. net from http://www.indiana.edu/~jslsoc/stata/

. net install spost9_ado, replace

. net get spost9_do, replace


2. The Poisson Regression Model

The SAS GENMOD procedure, STATA .poisson command, and LIMDEP Poisson$

command estimate the Poisson regression model (PRM).

2.1 PRM in SAS

SAS has the GENMOD procedure for the PRM. The /DIST=POISSON option tells SAS to use

the Poisson distribution.

PROC GENMOD DATA = masil.accident;

MODEL accident=emps strict /DIST=POISSON LINK=LOG;

RUN;

The GENMOD Procedure

                 Model Information

Data Set                     COUNT.WASTE
Distribution                     Poisson
Link Function                        Log
Dependent Variable              Accident
Observations Used                    778

         Criteria For Assessing Goodness Of Fit

Criterion                 DF           Value        Value/DF
Deviance                 775       2827.2079          3.6480
Scaled Deviance          775       2827.2079          3.6480
Pearson Chi-Square       775       4944.9473          6.3806
Scaled Pearson X2        775       4944.9473          6.3806
Log Likelihood                     -667.2291

Algorithm converged.

                       Analysis Of Parameter Estimates

                           Standard     Wald 95% Confidence        Chi-
Parameter   DF  Estimate      Error           Limits              Square   Pr > ChiSq
Intercept    1    0.3901     0.0467      0.2986      0.4816        69.84       <.0001
Emps         1    0.0054     0.0007      0.0040      0.0069        53.13       <.0001
Strict       1   -0.7042     0.0668     -0.8350     -0.5733       111.25       <.0001
Scale        0    1.0000     0.0000      1.0000      1.0000

NOTE: The scale parameter was held fixed.

You will need to run a restricted model without regressors in order to conduct the likelihood ratio test for goodness-of-fit, $LR = 2(\ln L_{Unrestricted} - \ln L_{Restricted}) \sim \chi^2(J)$, where J is the difference in the number of regressors between the unrestricted and restricted models. The chi-squared statistic is 124.8218 = 2 * [-667.2291 - (-729.6400)] (p < .0001).

PROC GENMOD DATA = masil.accident;

MODEL accident= /DIST=POISSON LINK=LOG;

RUN;

The GENMOD Procedure

                 Model Information

Data Set                  MASIL.ACCIDENT
Distribution                     Poisson
Link Function                        Log
Dependent Variable              accident

Number of Observations Read          778
Number of Observations Used          778

         Criteria For Assessing Goodness Of Fit

Criterion                 DF           Value        Value/DF
Deviance                 777       2952.0297          3.7993
Scaled Deviance          777       2952.0297          3.7993
Pearson Chi-Square       777       4919.9745          6.3320
Scaled Pearson X2        777       4919.9745          6.3320
Log Likelihood                     -729.6400

Algorithm converged.

                       Analysis Of Parameter Estimates

                           Standard     Wald 95% Confidence        Chi-
Parameter   DF  Estimate      Error           Limits              Square   Pr > ChiSq
Intercept    1    0.3168     0.0306      0.2568      0.3768       107.20       <.0001
Scale        0    1.0000     0.0000      1.0000      1.0000

NOTE: The scale parameter was held fixed.
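The goodness-of-fit test can be checked by hand. For two degrees of freedom the chi-squared upper tail has the closed form exp(−x/2), so this Python sketch needs only the standard library. It uses the log likelihoods reported by GENMOD above and by STATA below; the two sets differ by a constant, yet they give the same likelihood ratio.

```python
import math

def lr_test_2df(lnl_restricted, lnl_unrestricted):
    """LR statistic and p-value for J = 2 restrictions.
    For 2 df, P(chi2 > x) = exp(-x / 2) exactly."""
    lr = 2.0 * (lnl_unrestricted - lnl_restricted)
    return lr, math.exp(-lr / 2.0)

lr_sas, p_sas = lr_test_2df(-729.6400, -667.2291)         # SAS GENMOD
lr_stata, p_stata = lr_test_2df(-1883.921, -1821.5101)    # STATA .poisson
print(round(lr_sas, 4), round(lr_stata, 4), p_sas)
```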

2.2 PRM in STATA

STATA has the .poisson command for the PRM. This command provides likelihood ratio and

Pseudo R2 statistics.

. poisson accident emps strict

Iteration 0:   log likelihood = -1821.5112
Iteration 1:   log likelihood = -1821.5101
Iteration 2:   log likelihood = -1821.5101

Poisson regression                                Number of obs   =        778
                                                  LR chi2(2)      =     124.82
                                                  Prob > chi2     =     0.0000
Log likelihood = -1821.5101                       Pseudo R2       =     0.0331

------------------------------------------------------------------------------
    accident |      Coef.   Std. Err.      z    P>|z|     [95% Conf. Interval]
-------------+----------------------------------------------------------------
        emps |   .0054186   .0007434     7.29   0.000     .0039615    .0068757
      strict |  -.7041664   .0667619   -10.55   0.000    -.8350174   -.5733154
       _cons |   .3900961   .0466787     8.36   0.000     .2986076    .4815846
------------------------------------------------------------------------------

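Let us run a restricted model and then use the .display command to double-check that the likelihood ratio for goodness-of-fit is 124.8218. STATA's log likelihoods differ from those reported by SAS GENMOD by the same constant in both models (about 1154.28), because the GENMOD values omit the term −Σ ln(y_i!), which does not depend on the parameters; the constant cancels in the likelihood ratio.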

. poisson accident

Iteration 0:   log likelihood =  -1883.921
Iteration 1:   log likelihood =  -1883.921

Poisson regression                                Number of obs   =        778
                                                  LR chi2(0)      =       0.00
                                                  Prob > chi2     =          .
Log likelihood =  -1883.921                       Pseudo R2       =     0.0000

------------------------------------------------------------------------------
    accident |      Coef.   Std. Err.      z    P>|z|     [95% Conf. Interval]
-------------+----------------------------------------------------------------
       _cons |   .3168165   .0305995    10.35   0.000     .2568426    .3767904
------------------------------------------------------------------------------

. display 2 * (-1821.5101 - (-1883.921))
124.8218

2.3 Using the SPost Module in STATA

The SPost module provides useful commands for follow-up analyses of various categorical

dependent variable models. The .fitstat command calculates various goodness-of-fit statistics