APPENDIX B

Mathematical Computations on Fixed-Point Processors

Fixed-point processors are widely used because of their high speed and low cost. However, most real-world applications use real arithmetic, which cannot be performed directly on fixed-point processors. Instead, we use approximations, fixed-point arithmetic, look-up tables, modular arithmetic, and so on, to obtain the equivalent of the real-arithmetic output on a fixed-point processor. In this appendix, we discuss the fixed-point representation of real data elements and the fixed-point computation of real arithmetic. In addition, we discuss modular arithmetic and the related theory of groups and Galois fields.

B.1 Numeric Data Fixed-Point Computing

Fixed-point numbers are useful for representing real values, usually in base 2, when the computing processor

has no floating-point unit (FPU) for performing the real arithmetic. Most low-cost embedded processors do not

have an FPU. How then are results for real arithmetic computed on fixed-point processors? In the following, we

discuss fixed-point computation using the reference embedded processor.

B.1.1 Fixed-Point Computing with the Reference Embedded Processor

The reference embedded processor supports 8-, 16-, 32-, and 40-bit fixed-point data in hardware. Special

features in the computation units allow support of other formats in software. This appendix describes various aspects of these data formats. It also describes how to implement a block floating-point format in

software.

Unsigned or Signed: Two’s-Complement Format

Unsigned integer numbers are positive, and no sign information is contained in the bits. Therefore, the value

of an unsigned integer is interpreted in the usual binary sense. The least significant words of multiple-precision

numbers are treated as unsigned numbers.

Signed numbers supported by the reference embedded processor are in two’s-complement format. Sign-magnitude, one’s-complement, binary-coded decimal (BCD), and excess-n formats are not supported.

Integer or Fractional

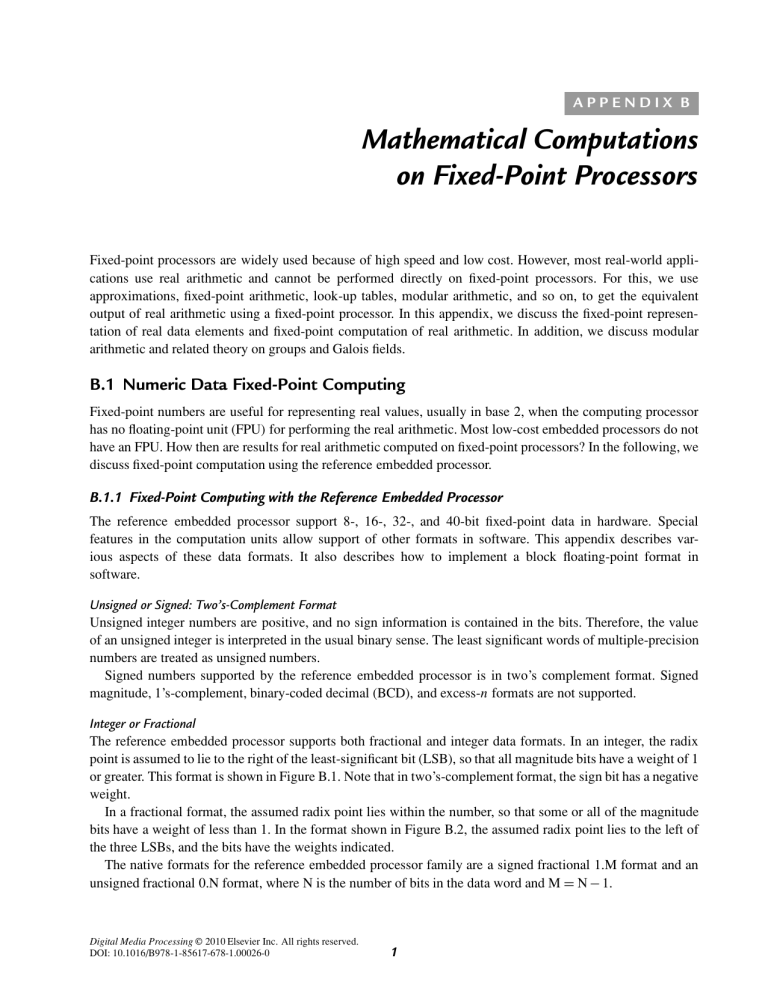

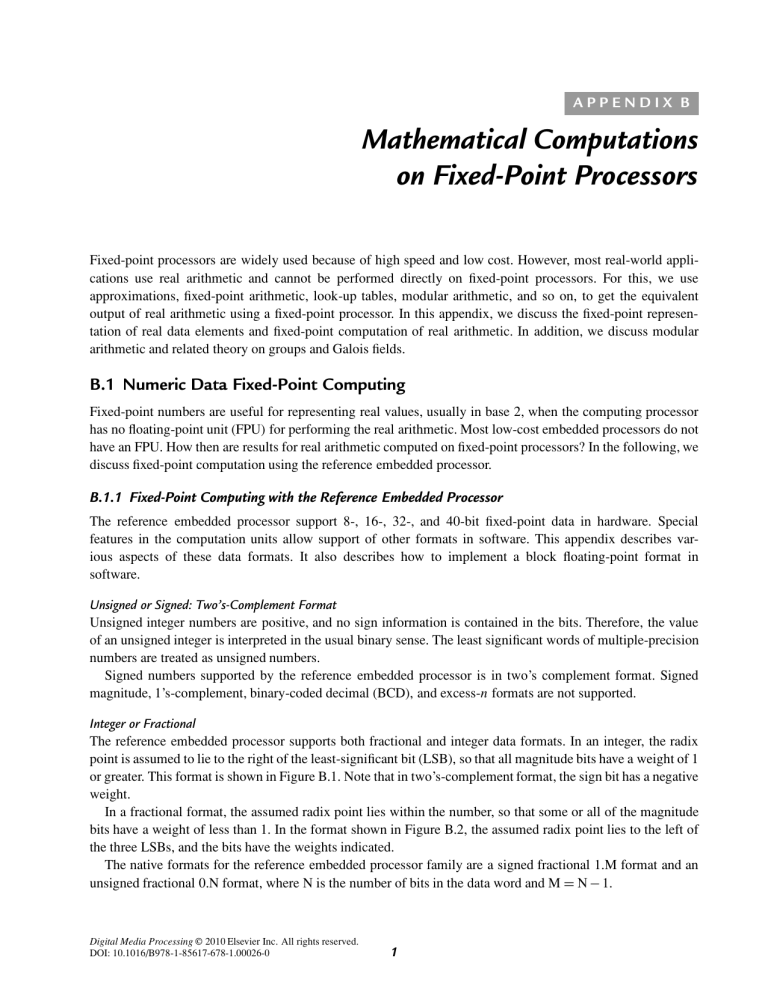

The reference embedded processor supports both fractional and integer data formats. In an integer, the radix

point is assumed to lie to the right of the least-significant bit (LSB), so that all magnitude bits have a weight of 1

or greater. This format is shown in Figure B.1. Note that in two’s-complement format, the sign bit has a negative

weight.

In a fractional format, the assumed radix point lies within the number, so that some or all of the magnitude

bits have a weight of less than 1. In the format shown in Figure B.2, the assumed radix point lies to the left of

the three LSBs, and the bits have the weights indicated.

The native formats for the reference embedded processor family are a signed fractional 1.M format and an

unsigned fractional 0.N format, where N is the number of bits in the data word and M = N − 1.

Digital Media Processing © 2010 Elsevier Inc. All rights reserved.

DOI: 10.1016/B978-1-85617-678-1.00026-0


Digital Media Processing, Appendix B

Figure B.1: Integer format: (a) signed integer, and (b) unsigned integer. In (a), bits 15 down to 0 have the weights $-(2^{15}), 2^{14}, 2^{13}, \ldots, 2^{2}, 2^{1}, 2^{0}$, and bit 15 is the sign bit. In (b), the weights are $2^{15}, 2^{14}, 2^{13}, \ldots, 2^{2}, 2^{1}, 2^{0}$. In both cases the radix point lies to the right of bit 0.

Figure B.2: Fractional format: (a) signed fractional (13.3), and (b) unsigned fractional (13.3). In (a), bits 15 down to 0 have the weights $-(2^{12}), 2^{11}, 2^{10}, \ldots, 2^{1}, 2^{0}, 2^{-1}, 2^{-2}, 2^{-3}$, and bit 15 is the sign bit. In (b), the weights are $2^{12}, 2^{11}, 2^{10}, \ldots, 2^{1}, 2^{0}, 2^{-1}, 2^{-2}, 2^{-3}$. In both cases the radix point lies between bits 3 and 2.

The notation used to describe a format consists of two numbers separated by a period (.); the first number is

the number of bits to the left of the radix point, and the second is the number of bits to the right of the radix

point. For example, 16.0 (“sixteen dot zero”) format is an integer format; all bits lie to the left of the radix point.

The format in Figure B.2 is 13.3 (“thirteen dot three”).

Table B.1 shows the ranges of signed numbers representable in the fractional formats that are possible with

16 bits.

In the reference embedded processor, arithmetic is optimized for numerical values in a fractional binary format

denoted by 1.15. In the 1.15 format, the 1 sign bit (the most significant bit [MSB]) and 15 fractional bits represent

values from −1 to 0.999969.

Figure B.3 shows the bit weighting for 1.15 numbers as well as some examples of 1.15 numbers and their

decimal equivalents.
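The 1.15 interpretation described above can be sketched in a few lines of Python. This is only an illustration, not processor code: the helper names `to_q15` and `from_q15` are hypothetical, and saturating out-of-range inputs is an assumption made here for convenience.

```python
def to_q15(x: float) -> int:
    """Quantize a real value to a 16-bit 1.15 word (returned as a bit pattern)."""
    n = round(x * (1 << 15))                      # scale by 2^15
    n = max(-(1 << 15), min((1 << 15) - 1, n))    # assumption: saturate to the 1.15 range
    return n & 0xFFFF


def from_q15(word: int) -> float:
    """Interpret a 16-bit pattern as a signed 1.15 fraction."""
    word &= 0xFFFF
    if word & 0x8000:          # the sign bit carries a negative weight
        word -= 1 << 16
    return word / (1 << 15)


for w in (0x0001, 0x7FFF, 0xFFFF, 0x8000):
    print(f"0x{w:04X} -> {from_q15(w):.6f}")
```

The four patterns printed are the ones used as examples for the 1.15 format: the smallest positive step, the largest positive value, the smallest negative step, and −1.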

Binary Multiplication

In addition and subtraction, both operands must be in the same format (signed or unsigned, radix point in the

same location), and the result format is the same as the input format. Addition and subtraction are performed the

same way for both signed and unsigned inputs.

In multiplication, however, the inputs can have different formats, and the result depends on their formats.

The assembly language in the reference embedded processor allows us to specify whether the inputs are both

signed, both unsigned, or one of each (mixed mode). The location of the radix point in the result can be

derived from its location in each of the inputs. This is shown in Figure B.4. The product of two 16-bit numbers

is a 32-bit number. If the input formats are M.N and P.Q, the product has the format (M + P).(N + Q).

For example, the product of two 13.3 numbers is a 26.6 number. The product of two 1.15 numbers is a 2.30

number.
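The format rule can be illustrated with a minimal Python sketch, assuming fixed-point values are held as plain integers alongside a (integer bits, fractional bits) pair. The names `fx_mul` and `fx_value` are hypothetical helpers introduced only for this illustration.

```python
def fx_mul(a: int, a_fmt: tuple, b: int, b_fmt: tuple) -> tuple:
    """Multiply two fixed-point integers; return (product, (int_bits, frac_bits))."""
    (m, n), (p, q) = a_fmt, b_fmt
    return a * b, (m + p, n + q)   # M.N times P.Q gives (M + P).(N + Q)


def fx_value(raw: int, fmt: tuple) -> float:
    """Real value represented by a stored integer with fmt[1] fractional bits."""
    return raw / (1 << fmt[1])


a = round(2.5 * 8)      # 2.5 in 13.3 format (stored integer 20)
b = round(-1.25 * 8)    # -1.25 in 13.3 format (stored integer -10)
prod, fmt = fx_mul(a, (13, 3), b, (13, 3))
print(prod, fmt, fx_value(prod, fmt))
```

The product of the two 13.3 operands is reported in 26.6 format, and dividing the stored product by $2^6$ recovers 2.5 × (−1.25) = −3.125.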


Table B.1: Fractional formats and ranges

| Format | # of Integer Bits | # of Fractional Bits | Max Positive Value (0x7FFF) in Decimal | Max Negative Value (0x8000) in Decimal | Value of 1 LSB (0x0001) in Decimal |
|---|---|---|---|---|---|
| 1.15 | 1 | 15 | 0.999969482421875 | −1.0 | 0.000030517578125 |
| 2.14 | 2 | 14 | 1.999938964843750 | −2.0 | 0.000061035156250 |
| 3.13 | 3 | 13 | 3.999877929687500 | −4.0 | 0.000122070312500 |
| 4.12 | 4 | 12 | 7.999755859375000 | −8.0 | 0.000244140625000 |
| 5.11 | 5 | 11 | 15.999511718750000 | −16.0 | 0.000488281250000 |
| 6.10 | 6 | 10 | 31.999023437500000 | −32.0 | 0.000976562500000 |
| 7.9 | 7 | 9 | 63.998046875000000 | −64.0 | 0.001953125000000 |
| 8.8 | 8 | 8 | 127.996093750000000 | −128.0 | 0.003906250000000 |
| 9.7 | 9 | 7 | 255.992187500000000 | −256.0 | 0.007812500000000 |
| 10.6 | 10 | 6 | 511.984375000000000 | −512.0 | 0.015625000000000 |
| 11.5 | 11 | 5 | 1023.968750000000000 | −1024.0 | 0.031250000000000 |
| 12.4 | 12 | 4 | 2047.937500000000000 | −2048.0 | 0.062500000000000 |
| 13.3 | 13 | 3 | 4095.875000000000000 | −4096.0 | 0.125000000000000 |
| 14.2 | 14 | 2 | 8191.750000000000000 | −8192.0 | 0.250000000000000 |
| 15.1 | 15 | 1 | 16383.500000000000000 | −16384.0 | 0.500000000000000 |
| 16.0 | 16 | 0 | 32767.000000000000000 | −32768.0 | 1.000000000000000 |

| 1.15 Number (Hexadecimal) | Decimal Equivalent |
|---|---|
| 0x0001 | 0.000031 |
| 0x7FFF | 0.999969 |
| 0xFFFF | −0.000031 |
| 0x8000 | −1.000000 |

Figure B.3: Bit weighting for 1.15 format numbers. From MSB to LSB, the bit weights are $-2^{0}, 2^{-1}, 2^{-2}, \ldots, 2^{-14}, 2^{-15}$.

General rule: M.N × P.Q = (M + P).(N + Q)

4-bit example: 1.111 (1.3 format) × 11.11 (2.2 format) = 111.00001 (3.5 format = (1 + 2).(3 + 2))

16-bit example: 5.3 × 5.3 = 10.6; 1.15 × 1.15 = 2.30

Figure B.4: Multiplication with fractional data format.

Fractional Mode and Integer Mode

A product of 2 two’s-complement numbers has two sign bits. Since one of these bits is redundant, you can shift

the entire result left 1 bit. Additionally, if one of the inputs was a 1.15 number, the left shift causes the result to

have the same format as the other input (with 16 bits of additional precision). For example, multiplying a 1.15

number by a 5.11 number yields a 6.26 number. When shifted left 1 bit, the result is a 5.27 number, or a 5.11

number plus 16 LSBs.

The reference embedded processor provides a means (a signed fractional mode) by which the multiplier result

is always shifted left 1 bit before being written to the result register. This left shift eliminates the extra sign bit

when both operands are signed, yielding a result that is correctly formatted.


Table B.2: Data formats to store in memory or to hold in registers

| Format | Representation in Memory | Representation in 32-bit Register |
|---|---|---|
| 32.0 Unsigned Word | dddd dddd dddd dddd dddd dddd dddd dddd | dddd dddd dddd dddd dddd dddd dddd dddd |
| 32.0 Signed Word | sddd dddd dddd dddd dddd dddd dddd dddd | sddd dddd dddd dddd dddd dddd dddd dddd |
| 16.0 Unsigned Half Word | dddd dddd dddd dddd | 0000 0000 0000 0000 dddd dddd dddd dddd |
| 16.0 Signed Half Word | sddd dddd dddd dddd | ssss ssss ssss ssss sddd dddd dddd dddd |
| 8.0 Unsigned Byte | dddd dddd | 0000 0000 0000 0000 0000 0000 dddd dddd |
| 8.0 Signed Byte | sddd dddd | ssss ssss ssss ssss ssss ssss sddd dddd |
| 0.16 Unsigned Fraction | .dddd dddd dddd dddd | 0000 0000 0000 0000 .dddd dddd dddd dddd |
| 1.15 Signed Fraction | s.ddd dddd dddd dddd | ssss ssss ssss ssss s.ddd dddd dddd dddd |
| 0.32 Unsigned Fraction | .dddd dddd dddd dddd dddd dddd dddd dddd | .dddd dddd dddd dddd dddd dddd dddd dddd |
| 1.31 Signed Fraction | s.ddd dddd dddd dddd dddd dddd dddd dddd | s.ddd dddd dddd dddd dddd dddd dddd dddd |
| Packed 8.0 Unsigned Byte | dddd dddd dddd dddd dddd dddd dddd dddd | dddd dddd dddd dddd dddd dddd dddd dddd |
| Packed 0.16 Unsigned Fraction | .dddd dddd dddd dddd .dddd dddd dddd dddd | .dddd dddd dddd dddd .dddd dddd dddd dddd |
| Packed 1.15 Signed Fraction | s.ddd dddd dddd dddd s.ddd dddd dddd dddd | s.ddd dddd dddd dddd s.ddd dddd dddd dddd |

When both operands are in 1.15 format, the result is 2.30 (30 fractional bits). A left shift causes the multiplier

result to be 1.31, which can be rounded to 1.15. Thus, if we use a signed fractional data format, it is most

convenient to use the 1.15 format as shown in Figure B.3. For more information about data formats, see the data

formats listed in Table B.2.
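The sequence described above (2.30 product, left shift to 1.31, round to 1.15) can be sketched as follows. This is a plain-integer model rather than processor code; the name `q15_mul` is hypothetical, and both the round-half-up step and the final saturation are assumptions added for the illustration.

```python
def q15_mul(a: int, b: int) -> int:
    """Multiply two signed 1.15 integers and return a rounded 1.15 result."""
    p = a * b                    # 2.30 product with two sign bits
    p <<= 1                      # drop the redundant sign bit: now 1.31
    p = (p + (1 << 15)) >> 16    # assumption: round half up, keep the upper 16 bits
    # assumption: saturate, because (-1) * (-1) = +1 does not fit in 1.15
    return max(-32768, min(32767, p))


half = 1 << 14                   # 0.5 in 1.15 format
quarter = q15_mul(half, half)
print(quarter, quarter / 32768)
```

Multiplying 0.5 by 0.5 this way yields the stored integer 8192, which is 0.25 in 1.15 format.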

Block Floating-Point Format

A block floating-point format enables a fixed-point processor to gain some of the increased dynamic range

of a floating-point format without the overhead needed to perform floating-point arithmetic. However, some

additional programming is required to maintain a block floating-point format.

A floating-point number has an exponent that indicates the position of the radix point in the actual value. In

block floating-point format, a set (block) of data values shares a common exponent. A block of fixed-point values

can be converted to block floating-point format by shifting each value left by the same amount and storing the

shift value as the block exponent.

Typically, block floating-point format allows you to shift out nonsignificant MSBs, increasing the precision

available in each value. Block floating-point format can also be used to eliminate the possibility of a data value

overflowing (see Figure B.5). Each of the three data samples shown has at least two nonsignificant, redundant

sign bits. Each data value can grow by these 2 bits (two binary orders of magnitude, that is, a factor of 4) before overflowing. These bits are

called guard bits.

If it is known that a process will not cause any value to grow by more than the 2 guard bits, then the process

can be run without loss of data. Later, however, the block must be adjusted to replace the guard bits before the

next process. The block floating-point adjustment is performed as follows.

Assume that the output of the SIGNBITS instruction is SB, and that SB is used as an argument in the EXPADJ instruction (see Analog Devices, Inc., ADSP-BF53x/BF56x Blackfin Processor Programming Reference, Rev. 1.0, June 2005, for the usage and syntax of these instructions). Initially, the value of SB is +2, corresponding to the 2 guard bits. During processing, each resulting data value is inspected by the EXPADJ instruction, which counts the number of redundant sign bits and adjusts SB if the number of redundant sign bits is <2. In this example, SB = +1 after processing, indicating that the block of data must be shifted right 1 bit to maintain the 2 guard bits. If SB were 0 after processing, the block would have to be shifted 2 bits right. In both cases, the block exponent is updated to reflect the shift. Figure B.6 shows the data after processing but before adjustment.

Figure B.5: Data with guard bits. Each sample has at least 2 guard bits following the sign bit:

0x0FFF = 0000 1111 1111 1111

0x1FFF = 0001 1111 1111 1111

0x07FF = 0000 0111 1111 1111

Figure B.6: Block floating-point adjustment.

1. Check for bit growth (one guard bit remains). The EXPADJ instruction checks the exponent of each value and adjusts SB:

0x1FFF = 0001 1111 1111 1111 (exponent = +2)

0x3FFF = 0011 1111 1111 1111 (exponent = +1)

0x07FF = 0000 0111 1111 1111 (exponent = +4)

2. Shift right to restore guard bits (two guard bits):

0x0FFF = 0000 1111 1111 1111

0x1FFF = 0001 1111 1111 1111

0x03FF = 0000 0011 1111 1111
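The adjustment step can be modeled in Python as a rough sketch. It does not use the SIGNBITS or EXPADJ instructions themselves; `redundant_sign_bits` and `restore_guard_bits` are hypothetical helpers, and counting a right shift as a +1 change of the block exponent is an assumed convention.

```python
def redundant_sign_bits(x: int, width: int = 16) -> int:
    """Count the sign-extension bits that follow the sign bit of a two's-complement value."""
    x &= (1 << width) - 1
    sign = (x >> (width - 1)) & 1
    count = 0
    for bit in range(width - 2, -1, -1):   # scan from just below the sign bit
        if (x >> bit) & 1 != sign:
            break
        count += 1
    return count


def restore_guard_bits(block, exponent, guard=2, width=16):
    """Shift the whole block right until every value has `guard` redundant sign bits."""
    sb = min(redundant_sign_bits(v, width) for v in block)  # smallest count in the block
    shift = max(0, guard - sb)
    # assumption: the block exponent grows by the number of right shifts
    return [v >> shift for v in block], exponent + shift


after_processing = [0x1FFF, 0x3FFF, 0x07FF]
adjusted, exp = restore_guard_bits(after_processing, exponent=0)
print([hex(v) for v in adjusted], exp)
```

With the three samples from the adjustment example, the smallest count of redundant sign bits is 1, so the block is shifted right by 1 bit, giving 0x0FFF, 0x1FFF, and 0x03FF.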

B.1.2 Fixed-Point Arithmetic Precision and Accuracy

As an example, we consider computing (8/3)*(1/7) + 1/5 = 0.5809523809523809 . . . on a fixed-point processor. We perform 8- and 16-bit precision arithmetic computations and examine the accuracy of the output results.

Fixed-Point Representation

Usually, we scale the given real numbers to ensure that the range of all values falls below 1. Then we represent the values using 1.N fixed-point (also called Q1.N) format, where N + 1 is the width of the arithmetic registers. See the previous subsection for more detail on fixed-point format representation of real numbers. If we rearrange the expression (8/3)*(1/7) + 1/5 as 8*(1/3)*(1/7) + 1/5, then all fractional quantities are <1 (the integer factor 8 is applied as a plain multiplication) and we need not perform any scaling for representation in Q1.N fixed-point format. See Example B.1 for the 1.7 and 1.15 fixed-point representations of the previous real values.

Range

Range is the difference between the two most extreme numbers representable. For example, signed 3.5 fixed-point format numbers range from −4 to 3.96875 (i.e., the range is 7.96875).


Precision

The number of bits (usually less than or equal to the width of arithmetic registers) allowed for representing

arithmetic values is referred to as precision. For example, with 1.N representation, we can get the precision

of N bits. Because fixed-point operations can produce results that require more precision than the precision of

processor registers, there is a chance for information loss. This is due to conversion of a high-precision result to

a low-precision result by rounding or truncating the output to fit the output into the processor register.

Resolution

Resolution is the smallest non-zero magnitude representable. For example, the resolution that we can get with 13.3 fixed-point representation is $1/2^3 = 0.125$. It is possible to trade range for resolution.

Dynamic Range

Dynamic range is the ratio of the maximum absolute value representable to the minimum non-zero absolute value representable. The dynamic range for a signed Q1.N fixed-point representation is $2^N$.
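The quantities defined above (range, resolution, and dynamic range) can be computed for any signed M.N format with a short sketch. The helper name `signed_format_stats` is hypothetical, and the format convention assumed is the one used in Table B.1, where the integer-bit count includes the sign bit.

```python
from fractions import Fraction


def signed_format_stats(int_bits: int, frac_bits: int) -> dict:
    """Exact characteristics of a signed int_bits.frac_bits format (int_bits >= 1)."""
    lsb = Fraction(1, 1 << frac_bits)              # resolution: weight of 1 LSB
    lo = -Fraction(1 << (int_bits - 1))            # most negative value
    hi = Fraction(1 << (int_bits - 1)) - lsb       # most positive value
    return {
        "min": lo,
        "max": hi,
        "range": hi - lo,
        "resolution": lsb,
        "dynamic_range": abs(lo) / lsb,            # largest magnitude over smallest step
    }


q15 = signed_format_stats(1, 15)
print(float(q15["min"]), float(q15["max"]), q15["dynamic_range"])
print(signed_format_stats(13, 3)["resolution"])
```

For the 1.15 format this reproduces the limits −1 and 0.999969482421875 and a dynamic range of $2^{15}$; for 13.3 it gives a resolution of 1/8.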

Accuracy

The accuracy of fixed-point arithmetic describes how close the output of fixed-point arithmetic is to the output of

real arithmetic. The accuracy of fixed-point arithmetic depends on many factors. The obvious factor

is precision; the greater the precision, the more accurate the result we get. In addition, accuracy depends on a

few other factors, such as the format used to represent the data, how the arithmetic is performed, and so on.

Example B.1 explains the fixed-point arithmetic accuracy improvement by increasing the precision of arithmetic

while using the same fixed-point format (i.e., Q1.N) and arithmetic flow.

■ Example B.1

Compute 8*(1/3)*(1/7) + (1/5) using 8- and 16-bit fixed-point processors. Use 1.N fixed-point representation.

With real arithmetic: 8*(1/3)*(1/7) + (1/5) = 0.580952380952380952

On the 8-bit processor:

1/3: 43 (in 1.7 format)

1/7: 18 (in 1.7 format)

1/5: 26 (in 1.7 format)

(1/3)*(1/7) = 43*18 = 774 (in 2.14 format) = 6 (in 1.7 format)

8*(1/3)*(1/7) = 48 (in 1.7 format)

8*(1/3)*(1/7) + (1/5) = 48 + 26 = 74 (in 1.7 format) = 0.578125

Absolute error: 0.002827380952380952 ≈ $2.8 \times 10^{-3}$

On the 16-bit processor:

1/3: 10923 (in 1.15 format)

1/7: 4681 (in 1.15 format)

1/5: 6554 (in 1.15 format)

(1/3)*(1/7) = 10923*4681 = 51130563 (in 2.30 format) = 1560 (in 1.15 format)

8*(1/3)*(1/7) = 12480 (in 1.15 format)

8*(1/3)*(1/7) + (1/5) = 12480 + 6554 = 19034 (in 1.15 format) = 0.58087158203125

Absolute error: 0.000080798921130952 ≈ $8.1 \times 10^{-5}$

■

With the 16-bit processor, the computation error is much less compared to the 8-bit processor. In general,

the accuracy of the result increases as we increase the precision as long as the same data representation and

arithmetic flow are used.
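The arithmetic flow of Example B.1 can be replayed in Python as a check. This is a sketch with plain integers: the function name `example_b1` is hypothetical, and the product is brought back to N fractional bits by a single right shift, which is assumed to be equivalent to the conversion used in the example.

```python
def example_b1(n: int) -> tuple:
    """Evaluate 8*(1/3)*(1/7) + 1/5 in 1.N fixed point; return (stored integer, real value)."""
    scale = 1 << n
    # quantize the three fractions to N fractional bits (round to nearest)
    third, seventh, fifth = (round(scale / d) for d in (3, 7, 5))
    prod = (third * seventh) >> n    # product has 2N fractional bits; truncate back to N
    total = 8 * prod + fifth
    return total, total / scale


exact = 8 / 21 + 1 / 5
for n in (7, 15):
    raw, value = example_b1(n)
    print(n, raw, value, abs(exact - value))
```

The two loop iterations correspond to the 8-bit (1.7) and 16-bit (1.15) cases and print the stored integers 74 and 19034 with their absolute errors.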

Rules for Addition

Adding and subtracting of two fixed-point numbers requires that both numbers have the same precision. If the

precision of the two numbers is different, then we shift one or the other (or both) so that their radix points


line up. For example, to add or subtract two numbers A (in 3.5 format) and B (in 2.6 format), we either shift A left by 1 bit (giving 6 fractional bits), shift B right by 1 bit (giving 5 fractional bits), or shift both A and B by some amount to get the desired output format.
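A minimal sketch of radix-point alignment, working on the stored integers and their fractional-bit counts, is shown here. The name `fx_add` and its parameters are hypothetical; a stored integer gains fractional bits by a left shift and loses them by a right shift.

```python
def fx_add(a: int, a_frac: int, b: int, b_frac: int, out_frac: int) -> int:
    """Add two fixed-point integers after aligning both to out_frac fractional bits."""
    def align(x: int, frac: int) -> int:
        shift = out_frac - frac
        # left shift adds fractional bits; right shift drops them (losing precision)
        return x << shift if shift >= 0 else x >> -shift

    return align(a, a_frac) + align(b, b_frac)


a = round(1.5 * 32)     # 1.5 with 5 fractional bits (3.5 format): stored integer 48
b = round(0.25 * 64)    # 0.25 with 6 fractional bits (2.6 format): stored integer 16
print(fx_add(a, 5, b, 6, 6) / 64)   # both aligned to 6 fractional bits
print(fx_add(a, 5, b, 6, 5) / 32)   # both aligned to 5 fractional bits
```

Both alignments give 1.75 for these operands; in general, aligning to fewer fractional bits discards low-order bits, and aligning to more fractional bits reduces the headroom available before overflow.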

Rules for Multiplication

Multiplication of two fixed-point numbers A (in p.m format) and B (in q.n format) results in a fixed-point number C whose format is (p + q).(m + n). To get the output with r bits of precision, we multiply C by $2^{r-(m+n)}$. In the case of signed multiplication, we left shift C by 1 bit and then multiply by $2^{r-(m+n+1)}$ to get the signed output with the desired r bits of precision.

Rules for Division

Division of one fixed-point number $A = a \times 2^m$ (in p.m format) by another fixed-point number $B = b \times 2^n$ (in q.n format) results in a fixed-point number $C = (a/b) \times 2^{m-n}$, whose precision is m − n bits. Now, if m = n, we get the output with zero bits of precision. In such a case, when a < b, we output C = 0. To get the output with r bits of precision, we premultiply the numerator A by $2^r$ before the division operation. For example, dividing a = 0.3 (or A = 5 in 4.4 format) by b = 0.6 (or B = 10 in 4.4 format) results in A/B = 0 (with integer division, A/B = 5/10 = 0). Now, if we premultiply A by $2^2$ (i.e., r = 2), we get A = 19 (in 2.6 format) or A/B = 1 with 2 bits of precision.
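The premultiplication idea can be sketched as follows. The helper `fx_div` is hypothetical, and it makes one assumption that differs slightly from the worked example: it scales the stored integer A directly (5 becomes 20) instead of requantizing 0.3 with more fractional bits (which gives 19), so its quotient is not identical to the one in the text.

```python
def fx_div(a: int, b: int, r: int) -> int:
    """Divide fixed-point integers with equal fractional bits; quotient has r fractional bits."""
    if b == 0:
        raise ZeroDivisionError("fixed-point division by zero")
    return (a << r) // b     # premultiply the numerator by 2^r, then integer divide


A, B = 5, 10                 # 0.3 and 0.6 quantized to 4.4 format
print(fx_div(A, B, 0))       # no fractional bits in the quotient
print(fx_div(A, B, 2) / 4)   # 2 fractional bits
print(fx_div(A, B, 4) / 16)  # 4 fractional bits
```

Note that Python's `//` rounds toward negative infinity, so negative quotients round differently from a truncating hardware divide.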

Overflow

Given the limited precision of processor arithmetic registers, it is possible that the registers may overflow in the

above operations due to multiplication (as the precision of intermediate results is the sum of precision of inputs),

repeated addition, or premultiplication in case of division. Typically, we avoid overflow by having increased

precision accumulators or registers to hold the intermediate values. In addition, scaling down the inputs (at the

loss of precision) may prevent this overflow situation.

B.1.3 Fixed-Point Arithmetic Using Reference Embedded Processor MAC

For multiply-and-accumulate (MAC) functions, the processor provides two choices—fractional arithmetic for

fractional numbers (1.15) and integer arithmetic for integers (16.0).

For fractional arithmetic, the 32-bit product output is format adjusted—sign-extended and shifted 1 bit to the

left—before being added to accumulator A0 or A1. For example, bit 31 of the product lines up with bit 32 of A0

(which is bit 0 of A0.X), and bit 0 of the product lines up with bit 1 of A0 (which is bit 1 of A0.W). The LSB is

zero filled. The fractional multiplier result format appears in Figure B.7.

Figure B.7: Fractional multiplier result format. The 32-bit multiplier output P is shifted left 1 bit: P bit 31 lands in bit 0 of A0.X, P bits 30 to 0 land in bits 31 to 1 of A0.W, bit 0 of A0.W is zero filled, and the P sign is extended into the upper 7 bits of A0.X.


Figure B.8: Integer multiplier result format. The 32-bit multiplier output P is not shifted: P bits 31 to 0 land in bits 31 to 0 of A0.W, and the P sign is extended into the 8 bits of A0.X.

For integer arithmetic, the 32-bit product register is not shifted before being added to A0 or A1. Figure B.8

shows the integer mode result placement.

In fractional or integer operations, the multiplier output product is fed into a 40-bit adder/subtracter, which

adds or subtracts the new product with the current contents of the A0 or A1 register to produce the final 40-bit

result.

Rounding Multiplier Results

On many multiplier operations, the processor supports multiplier results rounding (RND option). Rounding is

a means of reducing the precision of a number by removing a lower-order range of bits from that number’s

representation and possibly modifying the remaining portion of the number to more accurately represent its

former value. For example, the original number will have N bits of precision, whereas the new number will have

only M bits of precision (where N > M). The process of rounding, then, removes N − M bits of precision from

the number.

The RND_MOD bit in the ASTAT register determines whether the RND option provides biased or unbiased

rounding. For unbiased rounding, set RND_MOD bit = 0. For biased rounding, set RND_MOD bit = 1.

Unbiased Rounding

The convergent rounding method returns the number closest to the original. In cases where the original number

lies exactly halfway between two numbers, this method returns the nearest even number, the one containing an

LSB of 0. For example, when rounding the 3-bit, two’s-complement fraction 0.25 (binary 0.01) to the nearest

2-bit, two’s-complement fraction, the result would be 0.0, because that is the even-numbered choice of 0.5 and

0.0. Since it rounds up and down based on the surrounding values, this method is called unbiased rounding.

Unbiased rounding uses the ALU’s capability of rounding the 40-bit result at the boundary between bits 15

and 16. Rounding can be specified as part of the instruction code. When rounding is selected, the output register

contains the rounded 16-bit result; the accumulator is never rounded.

The accumulator uses an unbiased rounding scheme. The conventional method of biased rounding adds a 1

into bit position 15 of the adder chain. This method causes a net positive bias because the midway value (when

A0.L/A1.L = 0x8000) is always rounded upward.

The accumulator eliminates this bias by forcing bit 16 in the result output to 0 when it detects this midway

point. Forcing bit 16 to 0 has the effect of rounding odd A0.L/A1.L values upward and even values downward,

yielding a large sample bias of 0, assuming uniformly distributed values.

The following examples use x to represent any bit pattern (not all zeros). The example in Figure B.9 shows a

typical rounding operation for A0; the example also applies for A1.

Figure B.9: Typical unbiased multiplier rounding. The 40 accumulator bits are shown as A0.X, followed by the upper and lower halves of A0.W; 1 is added at bit 15 and the carry propagates upward.

Unrounded value: xxxxxxxx | xxxxxxxx 00100101 | 1xxxxxxx xxxxxxxx

Rounded value: xxxxxxxx | xxxxxxxx 00100110 | 0xxxxxxx xxxxxxxx

Figure B.10: Avoiding net bias in unbiased multiplier rounding. The 40 accumulator bits are shown as A0.X, followed by the upper and lower halves of A0.W; 1 is added at bit 15 and the carry propagates upward.

Unrounded value: xxxxxxxx | xxxxxxxx 01100110 | 10000000 00000000

After add 1 and carry (A0 bit 16 = 1): xxxxxxxx | xxxxxxxx 01100111 | 00000000 00000000

Rounded value (A0 bit 16 forced to 0): xxxxxxxx | xxxxxxxx 01100110 | 00000000 00000000

The compensation to avoid net bias becomes visible when all lower 15 bits are 0 and bit 15 is 1 (the midpoint value), as shown in Figure B.10. In Figure B.10, A0 bit 16 is forced to 0. This algorithm is employed on every rounding operation, but is evident only when the bit pattern shown in the lower 16 bits of Figure B.10 is present.

Biased Rounding

The round-to-nearest method also returns the number closest to the original. However, by convention, an original

number lying exactly halfway between two numbers always rounds up to the larger of the two. For example,

when rounding the 3-bit, two’s-complement fraction 0.25 (binary 0.01) to the nearest 2-bit, two’s-complement

fraction, this method returns 0.5 (binary 0.1). The original fraction lies exactly midway between 0.5 and 0.0

(binary 0.0), so this method rounds up. Because it always rounds up, this method is called biased rounding.


Table B.3: Biased rounding in multiplier operation

| A0/A1 Before RND | Biased RND Result | Unbiased RND Result |
|---|---|---|
| 0x00 0000 8000 | 0x00 0001 8000 | 0x00 0000 0000 |
| 0x00 0001 8000 | 0x00 0002 0000 | 0x00 0002 0000 |
| 0x00 0000 8001 | 0x00 0001 0001 | 0x00 0001 0001 |
| 0x00 0001 8001 | 0x00 0002 0001 | 0x00 0002 0001 |
| 0x00 0000 7FFF | 0x00 0000 FFFF | 0x00 0000 FFFF |
| 0x00 0001 7FFF | 0x00 0001 FFFF | 0x00 0001 FFFF |

The RND_MOD bit in the ASTAT register enables biased rounding. When the RND_MOD bit is cleared, the

RND option in multiplier instructions uses the normal, unbiased rounding operation, as discussed previously.

When the RND_MOD bit is set (=1), the processor uses biased rounding instead of unbiased rounding. When

operating in biased rounding mode, all rounding operations with A0.L/A1.L set to 0x8000 round up, rather than

only rounding odd values up. For an example of biased rounding, see Table B.3.

Biased rounding affects the result only when the A0.L/A1.L register contains 0x8000; all other rounding

operations work normally. This mode allows more efficient implementation of bit-specified algorithms that use

biased rounding (e.g., Global System for Mobile Communications [GSM] speech compression routines).

Truncation

Another common way to reduce the significant bits representing a number is to simply mask off the N – M lower

bits. This process is known as truncation, and results in a relatively large bias. Instructions that do not support

rounding revert to truncation. The RND_MOD bit in ASTAT has no effect on truncation.
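The three ways of reducing precision discussed in this section (biased rounding, unbiased rounding, and truncation) can be compared with a small Python model. It is a sketch only: the function names are hypothetical, the accumulator is modeled as a plain integer, and each function returns just the bits from bit 16 upward rather than a full 40-bit register image.

```python
def rnd_biased(acc: int) -> int:
    """Round at the bit 15/16 boundary, midpoint always upward; return bits 16 and up."""
    return (acc + 0x8000) >> 16


def rnd_unbiased(acc: int) -> int:
    """Round at the bit 15/16 boundary, midpoint to even (convergent rounding)."""
    result = (acc + 0x8000) >> 16
    if (acc & 0xFFFF) == 0x8000:   # lower half is exactly the midpoint
        result &= ~1               # force accumulator bit 16 to 0
    return result


def truncate(acc: int) -> int:
    """Drop the lower 16 bits without rounding."""
    return acc >> 16


for acc in (0x0000_8000, 0x0001_8000, 0x0000_8001, 0x0000_7FFF):
    print(f"{acc:#012x}", rnd_biased(acc), rnd_unbiased(acc), truncate(acc))
```

Only the two midpoint inputs (lower half equal to 0x8000) can distinguish the biased and unbiased modes, and of those only the one with an even upper half actually differs.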

B.2 Galois Fields

Galois field arithmetic is popularly used in cryptographic algorithms and error correction algorithms. To better

understand Galois field arithmetic, we first discuss its underlying algebraic systems and arithmetic operations

defined on such algebraic systems. The material provided here is basically descriptive and no attempt is made

to be mathematically rigorous. For details on Galois field theory, see Lidl and Niederreiter (1994) (footnote 1).

B.2.1 Modular Arithmetic

Modular arithmetic plays an important role in digital computers. A simple example will demonstrate the importance of modular arithmetic. Assume that we perform a division operation to get the value of 4/3 using real

arithmetic and we obtain the output as 1.33333333333 . . . ; now, if we want to perform this division with a digital

computer, we need a hardware processor that supports floating-point operations. Floating-point processors are

comparatively costly and generally slower. On the other hand, if we use a fixed-point hardware processor (which

is faster and less expensive) to perform the above division, then we cannot get its output value with full

accuracy due to the limited precision of fixed-point processor registers. For example, in the 2.30 signed Q-format

representation (see Section B.1 for the fixed-point or Q-format representation of real numbers), we can represent

the output of division as 1.33333333302289 . . .; because the output of division cannot be represented accurately

using fixed-point processor registers, we never get back the actual input used in the division (i.e., we can only get

numerator as 3.99999999906867 instead of 4 when we multiply the output again with denominator 3). In this

case, the division operation is not lossless and most algorithms used in cryptography or error correction require

a lossless arithmetic system. Therefore, an alternate way of performing division operation using a fixed-point

hardware is necessary to get back actual inputs. In this alternate approach, we perform arithmetic using a modulo operation and this results in lossless arithmetic operations. In the following, we solve the previous division

problem using modulo-5 arithmetic.

Footnote 1: Rudolf Lidl and Harald Niederreiter, Introduction to Finite Fields and Their Applications, 2nd ed. Cambridge University Press, 1994.


If P is a positive integer, then the set of integers between 0 and P − 1 is used in modulo-P arithmetic. With modulo-P arithmetic, the rule n = k + mP ≡ k holds for all integer values of m, and we write this in equation form as k = n mod P. For example, with modulo-5 arithmetic, all numbers . . . , −9, −4, 1, 6, 11, . . . have the same value k = 1. In particular, 3 * 2 = 6 mod 5 = 1, so 2 is the multiplicative inverse of 3, that is, 1/3 = 2. Therefore, 4/3 = 4 * (1/3) = 4 * 2 = 8 mod 5 = 3. Then, if we multiply the output 3 by the denominator 3, we get back the actual value of the numerator as 3 * 3 = 9 mod 5 = 4. Two advantages of the modular arithmetic system are that it is lossless and it is fast.
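The modulo-5 division can be checked with a few lines of Python. The function name `mod_div` is hypothetical; the three-argument `pow` with exponent −1 (available from Python 3.8) computes a modular inverse and raises `ValueError` when none exists.

```python
def mod_div(num: int, den: int, p: int) -> int:
    """Compute num/den in modulo-p arithmetic via the multiplicative inverse of den."""
    inv = pow(den, -1, p)        # raises ValueError if den has no inverse modulo p
    return (num * inv) % p


q = mod_div(4, 3, 5)
print(q)                 # quotient of 4/3 in modulo-5 arithmetic
print((q * 3) % 5)       # multiplying back by the denominator recovers the numerator
```

Here the inverse of 3 is 2, the quotient is 3, and multiplying the quotient by 3 returns 4, so no information is lost.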

In the previous example, we solved a particular case with modulo-5 arithmetic, where the multiplicative inverse of the denominator value 3 exists in the set of modulo-5 elements and is equal to 2. Many questions may arise with respect to the division problem, such as the following:

1. Is it possible to compute any division with modulo-5 arithmetic (e.g., can we compute 11/9) and get back

the original value when we multiply the output result with denominator 9?

2. Why use modulo-5 for solving 4/3 (rather than the modulo-6 elements set)?

3. Can we perform division with modulo-P arithmetic if the denominator is zero?

The answer to all the previous questions is “no.” If we perform division 11/9 with modulo-5 arithmetic, we

cannot get back 11 when we multiply the output result with 9 (as element 11 is not in the set of modulo-5

elements, and no multiplicative inverse exists for 9 in the set of modulo 5 elements). We cannot perform division

4/3 with modulo-6 arithmetic (as no multiplicative inverse exists for 3 in the set of modulo-6 elements). We cannot

perform division with modulo-P arithmetic if the denominator is zero (as no multiplicative inverse exists for zero

in the set of modulo-P elements). Then, how can we use this modulo-P set of elements without having a proper abstract system with rules to solve a general arithmetic division problem? We need a proper algebraic system with a set of elements and rules to answer the previous question. In the following, we define such an algebraic system, called a group, to systematically answer the previous questions. Note that algebraic systems such as groups were not invented for solving the previous division problem. Group concepts were developed to analyze the symmetry properties of structures and to find the roots of higher-degree polynomials using their symmetry properties. Here, we consider the division problem as an example to demonstrate the importance of algebraic systems.
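Which elements have a multiplicative inverse under a given modulus can be found by exhaustive search, as in this small sketch. The function name `inverses` is hypothetical, and brute force is used only because the sets are tiny.

```python
def inverses(p: int) -> dict:
    """Map each element of {0, ..., p-1} to its multiplicative inverse modulo p, if any."""
    return {a: b for a in range(p) for b in range(p) if (a * b) % p == 1}


print(inverses(5))   # every non-zero element has an inverse
print(inverses(6))   # 2, 3, and 4 have none, so 4/3 cannot be formed
```

The search shows that 3 has the inverse 2 under modulo 5, that 3 has no inverse under modulo 6, and that 0 never has an inverse.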

B.2.2 Groups

We define an algebraic system called a group as follows:

A set G on which a binary operation ∘ is defined is called a group if it satisfies the following properties:

Closure property: If a and b belong to G, then c = a ∘ b also belongs to G.

Associative property: For any a, b, and c that belong to G, a ∘ (b ∘ c) = (a ∘ b) ∘ c.

Identity property: G contains an element e such that for any a that belongs to G, a ∘ e = e ∘ a = a.

Inverse property: For any element a in G, there exists another element a′ in G such that a ∘ a′ = a′ ∘ a = e.

The element a′ is called an inverse of a with respect to the binary operation ∘. If a ∘ b = b ∘ a for all a and b in G, then we say that G follows the commutative property, and we call such groups abelian.

The notion of a group extends to any system that satisfies the previous properties. In the following, we discuss two examples of groups, with addition and multiplication as the binary operations defined on particular number systems. We can also perform subtraction and division with the same groups, because subtraction and division are the inverse operations of addition and multiplication: subtracting b means adding the additive inverse of b, and dividing by b means multiplying by the multiplicative inverse of b.

Additive Group under Modulo-M Addition

If M is a positive integer, then the set G with elements {0, 1, 2, . . . , M − 1} forms a group under modulo-M addition. As an example, we consider the additive group under modulo-M addition for M = 7 and the corresponding number set G = {0, 1, 2, 3, 4, 5, 6}. We then verify, using examples, that this system with its underlying binary operation satisfies the group properties.

If a = 2 and b = 5, then c = a + b = (2 + 5) mod 7 = 7 mod 7 = 0, which also belongs to G, and hence the closure property is satisfied.


Digital Media Processing, Appendix B

Table B.4: Modulo-7 addition

+ | 0  1  2  3  4  5  6
--+--------------------
0 | 0  1  2  3  4  5  6
1 | 1  2  3  4  5  6  0
2 | 2  3  4  5  6  0  1
3 | 3  4  5  6  0  1  2
4 | 4  5  6  0  1  2  3
5 | 5  6  0  1  2  3  4
6 | 6  0  1  2  3  4  5

If a = 3, b = 5, and c = 4, then a + (b + c) = 3 + (5 + 4) = 3 + 9 = 12 mod 7 = 5, and (a + b) + c = (3 + 5) + 4 = 8 + 4 = 12 mod 7 = 5. Hence, the associative property is satisfied.

If a = 6, then for e = 0, a + e = 6 + 0 = 6 = a, and e + a = 0 + 6 = 6 = a. Therefore, e = 0 is an identity element of G.

If a = 4, then for a′ = 3, a + a′ = 4 + 3 = 7 mod 7 = 0, and a′ + a = 3 + 4 = 7 mod 7 = 0. Therefore, a′ = 3 is an inverse for a = 4 under modulo-7 addition.

If a = 2 and b = 6, then a + b = 2 + 6 = 8 mod 7 = 1, and b + a = 6 + 2 = 8 mod 7 = 1. Hence, the commutative property is satisfied.

We can verify the group properties for the other elements of this additive group in the same way. The additive group under modulo-7 addition is given in Table B.4.
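The checks above cover only a few sample elements. As an illustration, the following Python sketch tests every property exhaustively for a small finite set. The function name `is_abelian_group` and its interface are assumptions made for this example; they do not come from the text.

```python
from itertools import product


def is_abelian_group(elements, op):
    """Exhaustively test the group properties plus commutativity on a finite set."""
    elements = list(elements)
    members = set(elements)

    # Closure: every result must stay inside the set.
    if any(op(a, b) not in members for a, b in product(elements, repeat=2)):
        return False

    # Associativity: a o (b o c) == (a o b) o c for all triples.
    if any(op(a, op(b, c)) != op(op(a, b), c)
           for a, b, c in product(elements, repeat=3)):
        return False

    # Identity: some e with a o e == e o a == a for every a.
    identities = [e for e in elements
                  if all(op(a, e) == a and op(e, a) == a for a in elements)]
    if not identities:
        return False
    e = identities[0]

    # Inverse: every a needs some x with a o x == x o a == e.
    for a in elements:
        if not any(op(a, x) == e and op(x, a) == e for x in elements):
            return False

    # Commutativity: a o b == b o a for all pairs.
    return all(op(a, b) == op(b, a) for a, b in product(elements, repeat=2))


M = 7
additive_ok = is_abelian_group(range(M), lambda a, b: (a + b) % M)
```

The same function can be called with a multiplication operation, for instance on {1, 2, 3, 4} with `lambda a, b: (a * b) % 5`.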

Multiplicative Group under Modulo-P Multiplication

If P is a prime integer, then the set G with elements {1, 2, . . . , P − 1} forms a group under modulo-P multiplication. As an example, we consider the multiplicative group under modulo-P multiplication for P = 5 and the corresponding set G = {1, 2, 3, 4}. We verify, using examples, that this system with its underlying binary operation satisfies the group properties.

If a = 2 and b = 3, then c = a ∗ b = 2 ∗ 3 = 6 mod 5 = 1, which also belongs to G, and hence the closure property is satisfied.

If a = 2, b = 4, and c = 3, then a ∗ (b ∗ c) = 2 ∗ (4 ∗ 3) = 2 ∗ 12 = 24 mod 5 = 4, and (a ∗ b) ∗ c = (2 ∗ 4) ∗ 3 = 8 ∗ 3 = 24 mod 5 = 4. Hence, the associative property is satisfied.

If a = 3, then for e = 1, a ∗ e = 3 ∗ 1 = 3 = a, and e ∗ a = 1 ∗ 3 = 3 = a. Therefore, e = 1 is an identity element of G.

If a = 2, then for a′ = 3, a ∗ a′ = 2 ∗ 3 = 6 mod 5 = 1, and a′ ∗ a = 3 ∗ 2 = 6 mod 5 = 1. Therefore, a′ = 3 is an inverse for a = 2 under modulo-5 multiplication.

If a = 2 and b = 4, then a ∗ b = 2 ∗ 4 = 8 mod 5 = 3, and b ∗ a = 4 ∗ 2 = 8 mod 5 = 3. Hence, the commutative property is satisfied.

We can verify the group properties for the other elements of this multiplicative group in the same way. The multiplicative group under modulo-5 multiplication is given in Table B.5.

With the properties and concepts of the group understood from the previous examples, solving the general division problem with a fixed-point processor is easily explained. To compute the division a/b using modulo-P arithmetic, we have to choose a prime P such that a and b both belong to the set {1, 2, 3, . . . , P − 1}.

Table B.5: Modulo-5 multiplication

* | 1  2  3  4
--+-----------
1 | 1  2  3  4
2 | 2  4  1  3
3 | 3  1  4  2
4 | 4  3  2  1


We now have constructive answers to the questions raised in Section B.2.1. To perform the division 11/9, we have to choose a prime number P of at least 13 for the set G, because only then do both 9 and 11 belong to G. We cannot perform the division 4/3 with the modulo-6 number set, as 6 is not a prime number and this particular set cannot form a multiplicative group: the inverse property of the group is not satisfied. We cannot perform division with the denominator as zero, since the element zero is not in the multiplicative group.
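As a worked check of these answers, the sketch below tabulates the multiplicative inverses that exist for a given modulus. The helper name `inverse_table` is hypothetical and used only for this illustration. With P = 13 every element of {1, . . . , 12} has an inverse, so 11/9 can be computed and undone; with modulus 6 only some elements have one.

```python
def inverse_table(modulus):
    """Map each element of {1, ..., modulus-1} to its multiplicative inverse, if any."""
    table = {}
    for a in range(1, modulus):
        for x in range(1, modulus):
            if (a * x) % modulus == 1:
                table[a] = x
                break
    return table


inv13 = inverse_table(13)
quotient = (11 * inv13[9]) % 13    # 11/9 in modulo-13 arithmetic
recovered = (quotient * 9) % 13    # multiplying back by 9 returns 11

inv6 = inverse_table(6)            # 2, 3, and 4 are missing: no inverse modulo 6
```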

Subgroups

If H is a non-empty subset of G, and H satisfies all the properties of a group discussed in this section, then we call H a subgroup of G. For example, the set of all rational numbers forms a group under real addition, and the set of all integers forms a subgroup of the group of rational numbers under real addition.

The groups discussed in this section are constructed with one type of binary operation. In practice, we may need an algebraic structure that supports more than one binary operation to solve a given problem. In the next section, we construct another kind of algebraic structure, called a field, which supports more than one binary operation. In the literature, many other algebraic structures between groups and fields can be found; discussion of all such structures is beyond the scope of this book. For a detailed discussion of abstract algebra, see Lidl and Niederreiter (1994).

B.2.3 Fields

In this section, we use the concept of groups, discussed in the previous section, to construct fields. The field structure is used in many areas of mathematics and its applications, such as vector spaces, number theory, coding theory, and cryptography. An algebraic structure, field F, is defined as follows:

The set F together with two binary operations, addition (+) and multiplication (∗), comprises a field if the following conditions are satisfied:

• The set F is an abelian group under the addition operation.

• The set of non-zero elements in F forms an abelian group under multiplication.

• The elements a, b, and c belonging to F obey the distributive law a ∗ (b + c) = a ∗ b + a ∗ c.

In brief, a field is a set of elements in which we can perform addition, subtraction, multiplication, and division (except division by zero) and still satisfy the closure property. The identity element of a field under addition is 0, and under multiplication it is 1. One of the best examples of a field that we use every day is the set of real numbers along with the binary operations addition, subtraction, multiplication, and division. The real number system is an example of an infinite field. The division problem (i.e., 4/3 = 1.3333333333 . . .), considered as an example at the beginning of this appendix, is carried out in the field formed by the set of real numbers. As discussed, working on real numbers (or infinite fields) using fixed-point processors is time consuming and may not produce accurate outputs because of the limited precision of the registers in fixed-point hardware. Therefore, here we construct fields that use modular arithmetic to work as a lossless system with fixed-point processors. Such fields are called finite fields, or Galois fields.
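The field conditions can be spot-checked for small moduli. The sketch below is illustrative only; the function name `is_field_mod` is an assumption. It relies on the fact, shown earlier, that modulo-q addition always forms an abelian group, so it tests only the two conditions that can fail: the existence of multiplicative inverses for the non-zero elements and the distributive law.

```python
def is_field_mod(q):
    """Check whether {0, ..., q-1} with modulo-q addition and multiplication is a field."""
    # Every non-zero element needs a multiplicative inverse.
    for a in range(1, q):
        if not any((a * x) % q == 1 for x in range(1, q)):
            return False
    # Distributive law: a * (b + c) == a * b + a * c, all taken modulo q.
    return all(
        (a * ((b + c) % q)) % q == ((a * b) % q + (a * c) % q) % q
        for a in range(q) for b in range(q) for c in range(q)
    )


prime_results = [is_field_mod(q) for q in (2, 5, 7)]
composite_result = is_field_mod(6)
```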

Galois Fields

The number of elements in a field is known as the order of the field. A field with a finite order is called a finite field or Galois field, named after Évariste Galois. If q is a prime number, then the set {0, 1, 2, . . . , q − 1} is a field of order q under modulo-q addition and multiplication. In practice, we come across two types of fields: prime and binary. Finite fields with a prime order (q = P, a prime number) are called prime fields, denoted by GF(P). If q = 2, then we call the field a binary field, denoted by GF(2). For a positive integer m, the fields denoted by $GF(q^m)$ are called extension fields. Of all extension fields, the $GF(2^m)$ fields are the best known in the digital world, because computers can perform arithmetic operations efficiently with these binary extension fields.

Finite fields are widely used in cryptography algorithms. In cryptography applications, the order of the field (in terms of the size of P or the size of m) is very large (a few hundred bits). See Section 2.5 for implementation techniques for large Galois field operations. In this section, we discuss the construction of small-order (m < 16) binary extension fields $GF(2^m)$, which are used extensively in coding theory. See Sections 3.5 and 3.6 in the book for the use of binary extension fields in coding theory applications.


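Table B.6: Representation of $GF(2^3)$ field elements

Field Element | Element α Powers | Polynomial Notation | Binary Notation
--------------+------------------+---------------------+----------------
0             | -                | -                   | 000 (0)
1             | 0                | 1                   | 001 (1)
$\alpha$      | 1                | $x$                 | 010 (2)
$\alpha^2$    | 2                | $x^2$               | 100 (4)
$\alpha^3$    | 3                | $x + 1$             | 011 (3)
$\alpha^4$    | 4                | $x^2 + x$           | 110 (6)
$\alpha^5$    | 5                | $x^2 + x + 1$       | 111 (7)
$\alpha^6$    | 6                | $x^2 + 1$           | 101 (5)
$\alpha^7$    | 7                | 1                   | 001 (1)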

Primitive Element. A primitive element of the Galois field GF(q) is an element α such that every field element except zero can be expressed as a power of α. Every Galois field GF(q) has at least one primitive element.

Primitive Polynomial. A primitive polynomial p(x) over GF(q) is a prime polynomial over GF(q) with the property that, in the extension field constructed modulo p(x), the field element represented by x is primitive. Every Galois field GF(q) has at least one primitive polynomial over it for every positive degree.

Galois Field Element Representation

If α is the primitive element of the Galois field $GF(q^m)$ for q = 2 and m = 3, then we take the primitive polynomial p(x) of degree m over GF(q) to be $p(x) = x^3 + x + 1$, or in binary vector notation p = [1011]. This primitive polynomial is used to generate the elements of the $GF(2^3)$ field as given in Table B.6. As an example, we discuss how the polynomial expression for the field element $\alpha^4$ is derived. If α is a primitive element and p(x) is a primitive polynomial of the Galois field $GF(2^3)$, then

$$p(\alpha) = \alpha^3 + \alpha + 1 = 0 \;\Rightarrow\; \alpha^3 = \alpha + 1, \quad \text{and} \quad \alpha^4 = \alpha \cdot \alpha^3 = \alpha(\alpha + 1) = \alpha^2 + \alpha$$

Therefore, the polynomial notation for the field element $\alpha^4$ is $x^2 + x$, and its equivalent binary notation is 110. As Table B.6 shows, $GF(2^m)$ contains $2^m - 1$ different field elements apart from the zero element. A polynomial D(x) (for a data message, parity, error, etc.) over a field $GF(2^m)$ is a mathematical expression

$$D(x) = d_{n-1}x^{n-1} + d_{n-2}x^{n-2} + \cdots + d_1 x + d_0$$

where the symbol x is an indeterminate, the coefficients $d_{n-1}, d_{n-2}, \ldots, d_0$ belong to $GF(2^m)$, and the indices and exponents are integer values. In the next section, we discuss Galois field arithmetic operations.
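The derivation of $\alpha^4$ generalizes to every row of Table B.6: multiply the previous power by α (a left shift of the coefficient vector) and, whenever the degree reaches m, reduce with p(x) (an XOR with the binary vector of p). The Python sketch below illustrates this under the assumption that elements are stored as integers whose bits are the polynomial coefficients; the function name `gf_power_table` is hypothetical.

```python
def gf_power_table(m, poly):
    """List alpha^0 .. alpha^(2^m - 2) as integers whose bits are polynomial coefficients."""
    elements = []
    value = 1                       # alpha^0
    for _ in range((1 << m) - 1):
        elements.append(value)
        value <<= 1                 # multiply by alpha, i.e., by x
        if value & (1 << m):        # degree reached m: reduce modulo p(x)
            value ^= poly
    return elements


# p(x) = x^3 + x + 1, binary vector [1011]
powers = gf_power_table(3, 0b1011)
binary_notation = [format(element, "03b") for element in powers]
```

Here `powers[4]` is 6 (binary 110), which agrees with the polynomial $x^2 + x$ derived for $\alpha^4$.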

B.2.4 Galois Field Arithmetic

Galois field element additions, subtractions, multiplications, and divisions are used in coding theory (see Sections 3.5 and 3.6 in the book) to encode or decode data. In general, we may not find Galois field arithmetic instructions on a general embedded processor. Even if a processor supports $GF(2^m)$ arithmetic, the support is limited to particular values of m. For example, Galois field arithmetic provided by a particular embedded processor for m = 8 will not work for a Galois field with m = 12. Therefore, in the following we discuss simulating Galois field arithmetic operations without any specific instruction set, and we estimate the computational complexity (with and without look-up tables) of implementing these operations on an embedded processor that has no Galois field arithmetic instructions.

We describe the Galois field operations in the extension field $GF(2^m)$, where each field element is represented with an $(m-1)$-degree polynomial

$$f(x) = f_{m-1}x^{m-1} + f_{m-2}x^{m-2} + \cdots + f_2 x^2 + f_1 x + f_0$$

with coefficients that belong to the binary field GF(2). We use a primitive element α (= 2) that is a root of the primitive polynomial $p(x) = x^m + r(x)$, an irreducible polynomial of degree m, to generate all field elements of $GF(2^m)$ except the zero element as powers of α (i.e., field element $\beta = \alpha^i$, where $0 \le i \le 2^m - 2$). There are a total of $q = 2^m$ different Galois field elements in $GF(2^m)$, including the zero field element, and we call q the order of the field. If we take the logarithm of field element β, then we obtain $\log(\alpha^i) = i$ as the logarithm value, and if we take the antilogarithm of i with respect to α, then we get $\alpha^i = \beta$ as the antilogarithm value. In Table B.6, the second column from the left provides the logarithm values for the field elements, and the last column provides the antilogarithm values.

Galois Field Operations

Addition of $GF(2^m)$ Field Elements. Galois field element addition can be carried out efficiently using the polynomial (or antilogarithm) representation of field elements. The addition of two Galois field elements f(x) and g(x) in the extension field is achieved by modulo-2 addition (i.e., XORing) of the corresponding binary coefficients of the two polynomials f(x) and g(x):

$$f(x) + g(x) = (f_{m-1} \oplus g_{m-1})x^{m-1} + \cdots + (f_2 \oplus g_2)x^2 + (f_1 \oplus g_1)x + (f_0 \oplus g_0)$$

Subtraction of $GF(2^m)$ Field Elements. The Galois field subtraction operation is exactly the same as the Galois field addition operation.

Multiplication of $GF(2^m)$ Field Elements. Galois field element multiplication can be carried out efficiently using the exponent (or logarithm) representation of field elements. Multiplication of two field elements $\beta (= \alpha^i)$ and $\gamma (= \alpha^j)$ of $GF(2^m)$ is computed in a few simple steps as follows:

1. Get the logarithm values of β and γ as i and j.

2. Add i and j as k = i + j.

3. Compute q = k mod n, where $n = 2^m - 1$.

4. Get the field element multiplication value δ = β · γ by taking the antilogarithm of q.
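The addition rule and the four multiplication steps can be sketched in Python for the small field $GF(2^3)$ of Table B.6. The names `ALOG`, `LOG`, `gf_add`, and `gf_mul` are assumptions introduced for this example, and the explicit test for a zero operand is one simple way to handle the undefined logarithm of zero; it is not the table-based method described later in this appendix.

```python
M = 3
POLY = 0b1011                  # p(x) = x^3 + x + 1
N = (1 << M) - 1               # n = 2^m - 1 = 7

# Antilogarithm table (exponent -> element) and logarithm table (element -> exponent).
ALOG = []
value = 1
for _ in range(N):
    ALOG.append(value)
    value <<= 1
    if value & (1 << M):
        value ^= POLY
LOG = {element: exponent for exponent, element in enumerate(ALOG)}


def gf_add(f, g):
    """Addition (and subtraction) is a bitwise XOR of the coefficient vectors."""
    return f ^ g


def gf_mul(beta, gamma):
    """Multiply through the logarithm domain, following steps 1 to 4."""
    if beta == 0 or gamma == 0:
        return 0               # log of zero is undefined; handled explicitly here
    k = LOG[beta] + LOG[gamma] # steps 1 and 2
    q = k % N                  # step 3
    return ALOG[q]             # step 4
```

For example, `gf_mul(3, 6)` multiplies $\alpha^3$ by $\alpha^4$ and yields $\alpha^7 = \alpha^0 = 1$.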

Inverse of $GF(2^m)$ Field Elements. In $GF(2^m)$, the field element $\alpha^{2^m-1} = \alpha^0 = 1$. To compute the inverse of any field element $\beta = \alpha^i$, we use

$$\frac{1}{\beta} = \frac{\alpha^{2^m-1}}{\alpha^i} = \alpha^{2^m-1-i}$$

Division of $GF(2^m)$ Field Elements. In the $GF(2^m)$ field, division of the field element $\beta = \alpha^i$ by $\gamma = \alpha^j$ is computed as follows:

If $i \ge j$, then

$$\delta = \beta/\gamma = \alpha^{i-j}$$

If $i < j$ (i.e., when the numerator exponent is less than the denominator exponent), then

$$\delta = \beta/\gamma = \frac{\alpha^i}{\alpha^j} = \frac{\alpha^i \alpha^{2^m-1}}{\alpha^j} = \alpha^{i-j+2^m-1}$$

Exponentiation of $GF(2^m)$ Field Elements. Exponentiation of the Galois field element $\beta = \alpha^i$ to the power j is computed as follows:

1. $k = i \cdot j$

2. $q = k \bmod n$, where $n = 2^m - 1$

3. $\delta = \beta^j = (\alpha^i)^j = \alpha^{i \cdot j} = \alpha^q$
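The inverse, division, and exponentiation rules can be illustrated in the same small field $GF(2^3)$. This is a sketch under stated assumptions: the function names are hypothetical, the inputs to `gf_inverse` and `gf_pow` are assumed to be non-zero, and the exponent j is assumed to be a non-negative integer.

```python
M = 3
POLY = 0b1011                  # p(x) = x^3 + x + 1
N = (1 << M) - 1               # n = 2^m - 1 = 7

ALOG = []
value = 1
for _ in range(N):
    ALOG.append(value)
    value <<= 1
    if value & (1 << M):
        value ^= POLY
LOG = {element: exponent for exponent, element in enumerate(ALOG)}


def gf_inverse(beta):
    """1/beta = alpha^(2^m - 1 - i); the final % N maps i = 0 back to alpha^0."""
    if beta == 0:
        raise ZeroDivisionError("the zero element has no inverse")
    return ALOG[(N - LOG[beta]) % N]


def gf_div(beta, gamma):
    """beta/gamma = alpha^(i - j), adding 2^m - 1 when i < j."""
    if gamma == 0:
        raise ZeroDivisionError("division by the zero element")
    if beta == 0:
        return 0
    i, j = LOG[beta], LOG[gamma]
    exponent = i - j if i >= j else i - j + N
    return ALOG[exponent]


def gf_pow(beta, j):
    """beta^j = alpha^((i * j) mod n) for a non-zero beta and j >= 0."""
    k = LOG[beta] * j
    return ALOG[k % N]
```

As a check against Table B.6, `gf_div(3, 6)` divides $\alpha^3$ by $\alpha^4$ and returns $\alpha^{3-4+7} = \alpha^6$, which is 5.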

Example Galois Field $GF(2^8)$

In this section, we construct the Galois field $GF(2^8)$, commonly used in Reed-Solomon coding, whose field elements are generated using the primitive polynomial $p(x) = x^8 + x^4 + x^3 + x^2 + 1$. In the $GF(2^8)$ Galois field, each data element is represented with a seventh-degree binary polynomial or with an 8-bit (1-byte) binary vector. We discuss the $GF(2^8)$ field element representation further later in this section. As discussed previously, the multiplication or division operations in a Galois field are computed using logarithm and antilogarithm look-up tables. We generate the following Galois_Log[ ] and Galois_aLog[ ] look-up tables for all field elements of $GF(2^8)$ to perform arithmetic operations efficiently.

Galois_Log[256] =
0x02fe, 0x0000, 0x0001, 0x0019, 0x0002, 0x0032, 0x001a, 0x00c6,
0x0003, 0x00df, 0x0033, 0x00ee, 0x001b, 0x0068, 0x00c7, 0x004b,
0x0004, 0x0064, 0x00e0, 0x000e, 0x0034, 0x008d, 0x00ef, 0x0081,
0x001c, 0x00c1, 0x0069, 0x00f8, 0x00c8, 0x0008, 0x004c, 0x0071,
0x0005, 0x008a, 0x0065, 0x002f, 0x00e1, 0x0024, 0x000f, 0x0021,
0x0035, 0x0093, 0x008e, 0x00da, 0x00f0, 0x0012, 0x0082, 0x0045,
0x001d, 0x00b5, 0x00c2, 0x007d, 0x006a, 0x0027, 0x00f9, 0x00b9,
0x00c9, 0x009a, 0x0009, 0x0078, 0x004d, 0x00e4, 0x0072, 0x00a6,
0x0006, 0x00bf, 0x008b, 0x0062, 0x0066, 0x00dd, 0x0030, 0x00fd,
0x00e2, 0x0098, 0x0025, 0x00b3, 0x0010, 0x0091, 0x0022, 0x0088,
0x0036, 0x00d0, 0x0094, 0x00ce, 0x008f, 0x0096, 0x00db, 0x00bd,
0x00f1, 0x00d2, 0x0013, 0x005c, 0x0083, 0x0038, 0x0046, 0x0040,
0x001e, 0x0042, 0x00b6, 0x00a3, 0x00c3, 0x0048, 0x007e, 0x006e,
0x006b, 0x003a, 0x0028, 0x0054, 0x00fa, 0x0085, 0x00ba, 0x003d,
0x00ca, 0x005e, 0x009b, 0x009f, 0x000a, 0x0015, 0x0079, 0x002b,
0x004e, 0x00d4, 0x00e5, 0x00ac, 0x0073, 0x00f3, 0x00a7, 0x0057,
0x0007, 0x0070, 0x00c0, 0x00f7, 0x008c, 0x0080, 0x0063, 0x000d,
0x0067, 0x004a, 0x00de, 0x00ed, 0x0031, 0x00c5, 0x00fe, 0x0018,
0x00e3, 0x00a5, 0x0099, 0x0077, 0x0026, 0x00b8, 0x00b4, 0x007c,
0x0011, 0x0044, 0x0092, 0x00d9, 0x0023, 0x0020, 0x0089, 0x002e,
0x0037, 0x003f, 0x00d1, 0x005b, 0x0095, 0x00bc, 0x00cf, 0x00cd,
0x0090, 0x0087, 0x0097, 0x00b2, 0x00dc, 0x00fc, 0x00be, 0x0061,
0x00f2, 0x0056, 0x00d3, 0x00ab, 0x0014, 0x002a, 0x005d, 0x009e,
0x0084, 0x003c, 0x0039, 0x0053, 0x0047, 0x006d, 0x0041, 0x00a2,
0x001f, 0x002d, 0x0043, 0x00d8, 0x00b7, 0x007b, 0x00a4, 0x0076,
0x00c4, 0x0017, 0x0049, 0x00ec, 0x007f, 0x000c, 0x006f, 0x00f6,
0x006c, 0x00a1, 0x003b, 0x0052, 0x0029, 0x009d, 0x0055, 0x00aa,
0x00fb, 0x0060, 0x0086, 0x00b1, 0x00bb, 0x00cc, 0x003e, 0x005a,
0x00cb, 0x0059, 0x005f, 0x00b0, 0x009c, 0x00a9, 0x00a0, 0x0051,
0x000b, 0x00f5, 0x0016, 0x00eb, 0x007a, 0x0075, 0x002c, 0x00d7,
0x004f, 0x00ae, 0x00d5, 0x00e9, 0x00e6, 0x00e7, 0x00ad, 0x00e8,
0x0074, 0x00d6, 0x00f4, 0x00ea, 0x00a8, 0x0050, 0x0058, 0x00af;

Galois_aLog[ ] =
0x01, 0x02, 0x04, 0x08, 0x10, 0x20, 0x40, 0x80, 0x1d, 0x3a, 0x74, 0xe8, 0xcd, 0x87, 0x13, 0x26,
0x4c, 0x98, 0x2d, 0x5a, 0xb4, 0x75, 0xea, 0xc9, 0x8f, 0x03, 0x06, 0x0c, 0x18, 0x30, 0x60, 0xc0,
0x9d, 0x27, 0x4e, 0x9c, 0x25, 0x4a, 0x94, 0x35, 0x6a, 0xd4, 0xb5, 0x77, 0xee, 0xc1, 0x9f, 0x23,
0x46, 0x8c, 0x05, 0x0a, 0x14, 0x28, 0x50, 0xa0, 0x5d, 0xba, 0x69, 0xd2, 0xb9, 0x6f, 0xde, 0xa1,
0x5f, 0xbe, 0x61, 0xc2, 0x99, 0x2f, 0x5e, 0xbc, 0x65, 0xca, 0x89, 0x0f, 0x1e, 0x3c, 0x78, 0xf0,
0xfd, 0xe7, 0xd3, 0xbb, 0x6b, 0xd6, 0xb1, 0x7f, 0xfe, 0xe1, 0xdf, 0xa3, 0x5b, 0xb6, 0x71, 0xe2,
0xd9, 0xaf, 0x43, 0x86, 0x11, 0x22, 0x44, 0x88, 0x0d, 0x1a, 0x34, 0x68, 0xd0, 0xbd, 0x67, 0xce,
0x81, 0x1f, 0x3e, 0x7c, 0xf8, 0xed, 0xc7, 0x93, 0x3b, 0x76, 0xec, 0xc5, 0x97, 0x33, 0x66, 0xcc,
0x85, 0x17, 0x2e, 0x5c, 0xb8, 0x6d, 0xda, 0xa9, 0x4f, 0x9e, 0x21, 0x42, 0x84, 0x15, 0x2a, 0x54,
0xa8, 0x4d, 0x9a, 0x29, 0x52, 0xa4, 0x55, 0xaa, 0x49, 0x92, 0x39, 0x72, 0xe4, 0xd5, 0xb7, 0x73,
0xe6, 0xd1, 0xbf, 0x63, 0xc6, 0x91, 0x3f, 0x7e, 0xfc, 0xe5, 0xd7, 0xb3, 0x7b, 0xf6, 0xf1, 0xff,
0xe3, 0xdb, 0xab, 0x4b, 0x96, 0x31, 0x62, 0xc4, 0x95, 0x37, 0x6e, 0xdc, 0xa5, 0x57, 0xae, 0x41,
0x82, 0x19, 0x32, 0x64, 0xc8, 0x8d, 0x07, 0x0e, 0x1c, 0x38, 0x70, 0xe0, 0xdd, 0xa7, 0x53, 0xa6,
0x51, 0xa2, 0x59, 0xb2, 0x79, 0xf2, 0xf9, 0xef, 0xc3, 0x9b, 0x2b, 0x56, 0xac, 0x45, 0x8a, 0x09,
0x12, 0x24, 0x48, 0x90, 0x3d, 0x7a, 0xf4, 0xf5, 0xf7, 0xf3, 0xfb, 0xeb, 0xcb, 0x8b, 0x0b, 0x16,
0x2c, 0x58, 0xb0, 0x7d, 0xfa, 0xe9, 0xcf, 0x83, 0x1b, 0x36, 0x6c, 0xd8, 0xad, 0x47, 0x8e, 0x01,…;
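Both tables can be regenerated from the primitive polynomial rather than typed in by hand. The Python sketch below is an illustration, not the book's generator; the lowercase names `galois_log` and `galois_alog` mirror the tables but are this example's own variables. The 16-bit placeholder 0x02fe for the logarithm of the zero element is taken from the first entry of Galois_Log[ ].

```python
POLY = 0x11D            # p(x) = x^8 + x^4 + x^3 + x^2 + 1 as a bit vector
LOG_OF_ZERO = 0x02FE    # placeholder stored at index 0 of the logarithm table

# Antilogarithm table: entry i holds alpha^i for i = 0 .. 255 (alpha^255 = alpha^0).
galois_alog = []
value = 1
for _ in range(256):
    galois_alog.append(value)
    value <<= 1                 # multiply by alpha (= 2)
    if value & 0x100:           # degree reached 8: reduce modulo p(x)
        value ^= POLY

# Logarithm table: entry beta holds i such that alpha^i = beta, for beta = 1 .. 255.
galois_log = [LOG_OF_ZERO] * 256
for exponent in range(255):
    galois_log[galois_alog[exponent]] = exponent
```

Printing `[hex(v) for v in galois_alog[:16]]` reproduces the first row of Galois_aLog[ ].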

The values in the previous two look-up tables are interpreted as follows. We represent a Galois field element in polynomial or exponential form. A Galois field $GF(2^8)$ element β is represented in exponential form as $\alpha^i$, where α (= 2) is a primitive element (see Section B.2.3 for the definition of a primitive element). We can express all the field elements in $GF(2^8)$ except the zero element as a power of α. The exponent form representations of the $GF(2^8)$ field elements are given by the set

$$\{-, \alpha^0, \alpha^1, \alpha^2, \ldots, \alpha^{254}, \alpha^{255}\} \quad \text{with} \quad \alpha^{255} = \alpha^0$$

The Galois_Log[ ] look-up table consists of a logarithm (i.e., $i = \log(\alpha^i)$, $0 \le i \le 255$) or exponent value for each element of $GF(2^8)$. Of the elements 0 to 255 of $GF(2^8)$, elements 1 to 255 can be represented in exponent form using the primitive polynomial p(x). To implement Galois field arithmetic efficiently (without any conditional checks), we represent the 0 element of $GF(2^8)$ with a value wider than 8 bits (since the logarithm value for the 0 element is not defined and all 8-bit values are used to represent other $GF(2^8)$ elements). We use 0x02fe (the choice of this value is explained later in this section) to represent the 0 element of $GF(2^8)$ in the logarithm domain. Since we use 16 bits for the logarithm value that represents element 0 of $GF(2^8)$, we represent all other 8-bit logarithm values in 16-bit form as well, to simplify programming the Galois field arithmetic operations with the Galois_Log[ ] look-up table.

A Galois field $GF(2^8)$ element β (of 8-bit width) is also represented in polynomial form with the binary coefficients $[f_7, f_6, \ldots, f_2, f_1, f_0]$ of the seventh-degree polynomial $f(x) = f_7 x^7 + f_6 x^6 + \cdots + f_2 x^2 + f_1 x + f_0$. The binary vector $[f_7 f_6 \cdots f_2 f_1 f_0]$ values from 0 to 255 represent all the field elements of $GF(2^8)$. Although the look-up table Galois_aLog[ ] consists of all 8-bit binary coefficient vector combinations of the polynomial f(x), we do not store them in sequence from 0 to 255; instead, they are stored in such a way that the field element β is obtained from the look-up table Galois_aLog[ ] using its logarithm value i. In this way, we can easily switch between polynomial form (used for additions and subtractions) and exponent form (used for multiplication, division, and exponentiation). To multiply two field elements $\beta = \alpha^i$ and $\gamma = \alpha^j$, we get their logarithm values i and j from the look-up table Galois_Log[ ], add them (since $\alpha^i \cdot \alpha^j = \alpha^{i+j}$), and then get the multiplication value $\delta (= \beta \cdot \gamma = \alpha^{i+j})$ by taking the antilogarithm of (i + j) with respect to α using the Galois_aLog[ ] table.

Galois Field $GF(2^8)$ Arithmetic Implementation

Before implementing $GF(2^8)$ field element arithmetic (with a few processor instructions) using the look-up tables Galois_Log[ ] and Galois_aLog[ ], we have to answer the following questions:

• How to perform the modulo p(x) operation when the result of arithmetic on two field elements is not in the range of the $GF(2^8)$ field

• How to perform a multiply operation when the 0 field element is an input to the multiplier (as the logarithm of that field element does not exist)

• How to perform a modular exponentiation

• How to divide two field elements when the numerator exponent is less than the denominator exponent (so that the resulting exponent index becomes negative)

One approach to solving these issues is to check and resolve each case separately. But this is very costly in terms of cycles, as it involves condition checks, conditional moves, and modulo 255 ($= 2^8 - 1$) computations for every multiplication or division operation. Instead, we resolve such cases by increasing the look-up table size and using a few simple operations, as described in the following.

Modulo p(x) Operation. When we perform multiplication or exponentiation, the result can be more than 255 in the logarithm domain (i.e., outside the range of the $GF(2^8)$ field). In that case, we perform modulo 255 on the logarithm values to ensure that the result belongs to $GF(2^8)$. For multiplication, the result (in the logarithm domain) can go up to 508 (as the maximum exponent of a $GF(2^8)$ element is 254). If we double the antilogarithm look-up table size, then we can get the modulo p(x) result directly from the look-up table itself (and thus, there is no need to compute mod p(x) analytically for the output result).
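A minimal Python sketch of the table-doubling idea follows. It assumes the doubled table simply repeats the 255 antilogarithm values, so that indices 0 to 509 are valid; the names `alog_doubled` and `gf256_mul_nonzero` are hypothetical, and the function is restricted to non-zero operands because the zero element is treated separately.

```python
POLY = 0x11D                        # p(x) = x^8 + x^4 + x^3 + x^2 + 1

# alpha^0 .. alpha^254, followed by the same 255 values again.
alog = []
value = 1
for _ in range(255):
    alog.append(value)
    value <<= 1
    if value & 0x100:
        value ^= POLY
alog_doubled = alog + alog          # index k returns alpha^(k mod 255) for k <= 509

log = [None] * 256                  # no logarithm for the zero element in this sketch
for exponent, element in enumerate(alog):
    log[element] = exponent


def gf256_mul_nonzero(beta, gamma):
    """Multiply two non-zero elements with no explicit modulo-255 step."""
    return alog_doubled[log[beta] + log[gamma]]
```

Since the largest possible index is 254 + 254 = 508, the lookup never leaves the doubled table.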

Modular Exponentiation. When we perform exponentiation, the result can be so large that doubling the look-up table is not sufficient to obtain the antilogarithm value for the exponentiation result. However, we can reduce the exponentiation result to the look-up table range with three simple operations. For example, if field element $\beta = \alpha^{231}$, and we want to perform the exponentiation

$$\gamma = \beta^{237} = (\alpha^{231})^{237} = \alpha^{231 \times 237} = \alpha^{54747} = \alpha^{177} = \alpha^{177} \cdot 1 = \alpha^{177}\alpha^{255} = \alpha^{432}$$

then we can obtain the index 432 (from 54,747) with a single shift operation, one AND operation, and one add operation as follows. If q = 54,747, then r = q >> 8; q = q & 255; r = r + q. The look-up table Galois_aLog[ ] at index r then outputs γ, the antilogarithm value of $\alpha^{432} = \alpha^{177}$, as 219. In general, if A = Q ∗ 256 + r, then Q = A >> 8, r = A & 255, and by rearranging we have A = Q ∗ 255 + (Q + r). If Q < 256, then we can use Q + r as an offset to access the look-up table Galois_aLog[ ]. Therefore, this method works only for exponentiation to powers less than 255 ∗ 255.
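The shift, AND, and add reduction can be sketched as follows. The function names `fold_exponent` and `gf256_pow_from_logs` are assumptions for this example, and the antilogarithm table is assumed to be the doubled 510-entry table described under the modulo p(x) operation.

```python
POLY = 0x11D                        # p(x) = x^8 + x^4 + x^3 + x^2 + 1

alog = []
value = 1
for _ in range(255):
    alog.append(value)
    value <<= 1
    if value & 0x100:
        value ^= POLY
alog_doubled = alog + alog          # 510 entries, index k -> alpha^(k mod 255)


def fold_exponent(q):
    """Map q = Q*256 + r to Q + r, which is congruent to q modulo 255."""
    return (q >> 8) + (q & 255)


def gf256_pow_from_logs(i, j):
    """Return (alpha^i)^j by folding the product i*j into the doubled table range."""
    return alog_doubled[fold_exponent(i * j)]


index = fold_exponent(231 * 237)    # 54747 -> 213 + 219
gamma = gf256_pow_from_logs(231, 237)
```

For products below 255 ∗ 255, the folded index stays below 510, so a single lookup in the doubled table suffices.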


Multiplication/Division with the 0 Field Element. At times, a few coefficients (belonging to the $GF(2^8)$ field) of the polynomial D(x) may not be present, and those coefficients are represented with 0 field elements. The logarithm value for the 0 field element is not defined. If we multiply any field element by the 0 field element, or if we divide the 0 field element by any non-zero field element, the result is the 0 field element. We simulate these operations by using larger look-up tables and representing the logarithm value for the 0 field element with 0x02fe (766), as follows. We fill the first 510 positions of the antilogarithm table Galois_aLog[ ] with antilogarithm values, duplicating the first 255 values, to perform modular multiplication or exponentiation. We fill Galois_aLog[ ] table positions 511 to 1535 with zeros. If we multiply any field element by the 0 field element, then the sum of the exponents of the two field elements is at least 766 and less than 1535, and the result of the multiplication operation remains zero. Similarly, if we divide the 0 field element by any non-zero field element, then the difference of the exponents is at most 766 and more than 510, and the result of the division operation is still zero.
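The extended-table scheme can be illustrated with the Python sketch below. It is a sketch under assumptions: the text counts table positions from 1, whereas this example uses 0-based indices (0 to 509 hold the duplicated antilogarithm values and 510 to 1534 hold zeros), and the names `alog_ext`, `gf256_mul`, and `gf256_zero_over` are hypothetical.

```python
POLY = 0x11D                        # p(x) = x^8 + x^4 + x^3 + x^2 + 1
LOG_OF_ZERO = 0x02FE                # 766, the logarithm placeholder for the zero element

alog = []
value = 1
for _ in range(255):
    alog.append(value)
    value <<= 1
    if value & 0x100:
        value ^= POLY

# 510 duplicated antilog values followed by zeros, 1535 entries in total.
alog_ext = alog + alog + [0] * (1535 - 510)

log = [LOG_OF_ZERO] * 256
for exponent, element in enumerate(alog):
    log[element] = exponent


def gf256_mul(beta, gamma):
    """Multiply any two elements, zero included, with one add and one lookup."""
    return alog_ext[log[beta] + log[gamma]]


def gf256_zero_over(gamma):
    """0 / gamma for non-zero gamma: index 766 - log(gamma) falls in the zero region."""
    if gamma == 0:
        raise ZeroDivisionError("division by the zero element")
    return alog_ext[LOG_OF_ZERO - log[gamma]]
```

With a zero operand, the index is at least 766, and even 0 ∗ 0 gives 766 + 766 = 1532, which is still inside the zero-filled region.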

Division of Non-Zero Field Elements. We achieve the division of two field elements by subtracting the denominator exponent from the numerator exponent. If the numerator exponent is greater than or equal to the denominator exponent, then the exponent of the division result is non-negative, and we have no problem in computation. But if the numerator exponent is less than the denominator exponent, then the subtraction of exponents results in a negative value, and we cannot handle a negative index with the look-up table. So, we have to get a positive index from the negative index by conditionally adding 255 to it. For example, if we want to divide $\beta = \alpha^{121}$ by $\gamma = \alpha^{187}$, then

$$\alpha^{121}/\alpha^{187} = \alpha^{-66} = \alpha^{-66} \cdot 1 = \alpha^{-66} \cdot \alpha^{255} = \alpha^{255-66} = \alpha^{189}$$
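The conditional correction can be sketched in Python as follows. The function name `gf256_div` is an assumption for this example, and both operands are assumed to be non-zero.

```python
POLY = 0x11D                        # p(x) = x^8 + x^4 + x^3 + x^2 + 1

alog = []
value = 1
for _ in range(255):
    alog.append(value)
    value <<= 1
    if value & 0x100:
        value ^= POLY

log = [None] * 256                  # the zero element is outside this sketch
for exponent, element in enumerate(alog):
    log[element] = exponent


def gf256_div(beta, gamma):
    """Divide two non-zero elements by subtracting exponents, adding 255 if negative."""
    if beta == 0 or gamma == 0:
        raise ValueError("this sketch handles non-zero operands only")
    k = log[beta] - log[gamma]
    if k < 0:
        k += 255                    # alpha^255 = 1, so the quotient is unchanged
    return alog[k]


quotient = gf256_div(alog[121], alog[187])   # alpha^121 / alpha^187
```

The quotient equals `alog[189]`, matching the worked example of $\alpha^{189}$.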