Unmanned Systems Safety Guide - National Defense Industrial

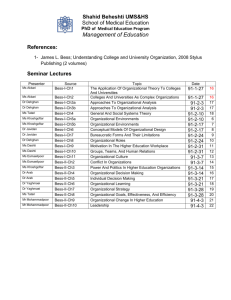
