Why Intel is designing multi-core processors

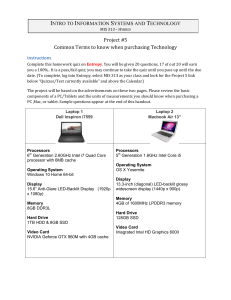

Geoff Lowney
Intel Fellow, Microprocessor Architecture and Planning
July, 2006

Moore’s Law at Intel 1970-2005
[Figure: four log-scale panels plotted against year (1970-2020): Feature Size (um), Transistor Count, Frequency (MHz), and CPU Power (W). Trend annotations on the plots: 0.79x, 0.70x, 2.0x, 2.0x, 1.5x, 1.4x.]
Power trend not sustainable

| | 386 Processor | Pentium® 4 Processor | Change over 17 years |
|---|---|---|---|
| Introduced | May 1986 | August 27, 2003 | |
| Core frequency | 16 MHz | 3.2 GHz | 200x |
| Transistors | 275,000 (1.5µ) | 55 million (0.13µ) | 200x/11x |
| SPECint2000 | ~1.2 | 1249 | 1000x |

Frequency: 200x
Performance: 1000x
[Figure: Clock Frequency and SPECint2000 (log scale) versus Introduction Date, Jan-85 to Jan-05.]

SPECint2000/MHz (normalized)
[Figure: Normalized SPECint2000/MHz versus Introduction Date, Jan-85 to Jan-05, for the 386, 486, 486DX2, P5, P55-MMX, PPro/II, PIII and P4.]

Performance scales with area**.5
Power efficiency has dropped
[Figure: two bar charts over the design steps Pipelined, S-Scalar, OOOSpec and Deep Pipe. The first shows Increase (X) in Area and Perf; the second shows Increase (X) in Power and Mips/W (%).]

Pushing Frequency
Maximized frequency by
• Deeper pipelines
• Pushed process to the limit
Pipeline & Performance
[Figure: Performance versus Relative Frequency (Pipelining), 1 to 10, annotated "Diminishing Return".]
Process Technology
• Dynamic power increases with frequency
• Leakage power increases with reducing Vt
[Figure: power versus Relative Frequency, 1 to 10, annotated "Sub-threshold Leakage increases exponentially".]
Diminishing return on performance. Increase in power.

Moore’s Law at Intel 1970-2005 (slide repeated)
Power trend not sustainable

Reducing power with voltage scaling
Power = Capacitance * Voltage**2 * Frequency
Frequency ~ Voltage in region of interest
Power ~ Voltage ** 3
10% reduction of voltage yields
• 10% reduction in frequency
• 30% reduction in power
• Less than 10% reduction in performance
Rule of Thumb

| Voltage | Frequency | Power | Performance |
|---|---|---|---|
| 1% | 1% | 3% | 0.66% |

Dual core with voltage scaling
RULE OF THUMB: a 15% reduction in voltage yields a 15% frequency reduction, a 45% power reduction and a 10% performance reduction.

| | Area | Voltage | Freq | Power | Perf |
|---|---|---|---|---|---|
| SINGLE CORE | 1 | 1 | 1 | 1 | 1 |
| DUAL CORE | 2 | 0.85 | 0.85 | 1 | ~1.8 |

Multiple cores deliver more performance per watt
[Figure: one big core with cache compared with four small cores (C1, C2, C3, C4) with cache, with bar charts of Power and Performance.]
Small core relative to big core: Power = ¼, Performance = 1/2
Many core is more power efficient
• Power ~ area
• Single thread performance ~ area**.5

Memory Gap
[Figure: Peak Instructions Per DRAM Access (0 to 700) for Pentium 66MHz, Pentium-Pro 200MHz, PentiumIII 1100MHz and Pentium4 2 GHz.]
[Figure: Growing Performance Gap between LOGIC and MEMORY, 1992 to 2002.]
Reduce DRAM access with large caches
• Extra benefit: power savings. Cache is lower power than logic
Tolerate memory latency with multiple threads
• Multiple cores
• Hyper-threading

Multi-threading tolerates memory latency
Serial Execution: Ai, Idle, Ai+1, Bi, Idle, Bi+1
Multi-threaded Execution: Ai, Idle, Ai+1 (thread A); Bi, Bi+1 (thread B)
Execute thread B while thread A waits for memory
Multi-core has a similar effect

Multi-core tolerates memory latency
Serial Execution: Ai, Idle, Ai+1, Bi, Idle, Bi+1
Multi-core Execution: Ai, Idle, Ai+1 (one core); Bi, Idle, Bi+1 (other core)
Execute thread A and B simultaneously

Core Area (with L1 caches) Trend
Die area/core shrinking after peaking with PPro
[Figure: Core Area (mm^2, 0 to 350) versus Introduction Date, 1970 to 2005, for the 4004, 386, 486, Pentium processor, PPro, Pentium II processor, Pentium 4 processor, McKinley, Prescott, Banias, Yonah and Merom.]
Why shrinking?
• Diminishing returns on performance
• Interconnect
• Caches
• Complexity

Reliability in the long term
[Figure: Soft Error FIT/Chip (Logic & Mem), relative, across process generations from 180 down to 16, annotated "~8% degradation/bit/generation".]
[Figure: Extreme device variations: relative distribution versus Vt (mV), 100 to 200, annotated "Wider".]
[Figure: Time dependent device degradation: relative Ion versus Time.]
In future process generations, soft and hard errors will be more common.

Redundant multi-threading: an architecture for fault detection and recovery
[Figure: Sphere of Replication containing a Leading Thread and a Trailing Thread, with Input Replication and Output Comparison at its boundary with the Memory System.]
• Two copies of each architecturally visible thread
• Compare results: signal fault if different
Multi-core enables many possible designs for redundant threading.
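As a rough software analogy for the redundant multi-threading idea above, the sketch below runs two copies of a task on replicated input and compares their outputs. The names `run_redundant` and `FaultDetected` are hypothetical and do not come from the talk, and the architecture on the slide does this in hardware rather than in application code. The sketch only detects a mismatch; it does not attempt recovery.

```python
import copy
from concurrent.futures import ThreadPoolExecutor


class FaultDetected(Exception):
    """Raised when the leading and trailing copies disagree."""


def run_redundant(task, inputs):
    """Run `task` twice on replicated input and compare the outputs.

    Hypothetical helper for illustration only: a software stand-in for
    the leading/trailing thread pair inside the sphere of replication.
    """
    # Input replication: each copy gets its own private input.
    leading_input = copy.deepcopy(inputs)
    trailing_input = copy.deepcopy(inputs)

    with ThreadPoolExecutor(max_workers=2) as pool:
        leading = pool.submit(task, leading_input)
        trailing = pool.submit(task, trailing_input)
        leading_result = leading.result()
        trailing_result = trailing.result()

    # Output comparison: signal a fault if the copies differ.
    if leading_result != trailing_result:
        raise FaultDetected(
            f"copies disagree: {leading_result!r} != {trailing_result!r}"
        )
    return leading_result


if __name__ == "__main__":
    print(run_redundant(sum, [1, 2, 3]))
```

A caller would wrap `run_redundant` in a `try`/`except FaultDetected` block and decide what to do on a mismatch, for example re-executing the task.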
Moore’s Law at Intel 1970-2005 (slide repeated)
Multi-core addresses power, performance, memory, complexity, reliability

Moore’s Law will provide transistors
Intel process technology capabilities

| High Volume Manufacturing | 2004 | 2006 | 2008 | 2010 | 2012 | 2014 | 2016 | 2018 |
|---|---|---|---|---|---|---|---|---|
| Feature Size | 90nm | 65nm | 45nm | 32nm | 22nm | 16nm | 11nm | 8nm |
| Integration Capacity (Billions of Transistors) | 2 | 4 | 8 | 16 | 32 | 64 | 128 | 256 |

[Figure: 50nm Transistor for 90nm Process (Source: Intel) shown beside an Influenza Virus (Source: CDC).]
Use transistors for multiple cores, caches, and new features.

Multi-Core Processors
• Single Core: PENTIUM® M
• Dual Core: WOODCREST
• Quad Core: CLOVERTOWN

Future Architecture: More Cores
Open issues
• Cores: How many? What size? Homogeneous? Heterogeneous?
• On-die Interconnect: Topology, Bandwidth
• Cache hierarchy: Number of levels, Sharing, Inclusion
• Scalability
[Figure: many-core die sketch with labels Core (repeated), Fixed-function units, Scalable fabric, Reconfigurable cache, High bandwidth memory, and Power delivery and management.]

The Importance of Threading
Do Nothing: Benefits Still Visible
• Operating systems ready for multi-processing
• Background tasks benefit from more compute resources
• Virtual machines
Parallelize: Unlock the Potential
• Native threads
• Threaded libraries
• Compiler generated threads

Performance Scaling
Amdahl’s Law: Parallel Speedup = 1/(Serial% + (1-Serial%)/N)
[Figure: Performance (0 to 10) versus Number of Cores (0 to 30) for two cases.]
• Serial% = 6.7%: N = 16, N1/2 = 8. 16 Cores, Perf = 8
• Serial% = 20%: N = 6, N1/2 = 3. 6 Cores, Perf = 3
Parallel software key to Multi-core success

How does Multicore Change Parallel Programming?
[Figure: SMP, with processors P1 to P4, each with its own cache, sharing Memory; CMP, with cores C1 to C4, each with its own cache, sharing Memory.]
No change in fundamental programming model
Synchronization and communication costs greatly reduced
• Makes it practical to parallelize more programs
Resources now shared
• Caches
• Memory interface
• Optimization choices may be different

Threading for Multi-Core
• Architectural Analysis: Intel® VTune™ Analyzers
• Introducing Threads: Intel® C++ Compiler
• Debugging: Intel® Thread Checker
• Performance Tuning: Intel® Thread Profiler
Intel has a full set of tools for parallel programming

The Era Of Tera
Terabytes of data. Teraflops of performance.
[Figure: image collage with the labels Improved medical care, Text mining, Scientific simulation, Machine vision, "When personal computing finally becomes personal", Immersive 3D entertainment, Interactive learning, Telepresent meetings and Virtual realities. Credits: Courtesy Tsinghua University HPC Center; Source: Steven K. Feiner, Columbia University; Courtesy of the Electronic Visualization Laboratory, Univ. of Illinois at Chicago.]
Computationally intensive applications of the future will be highly parallel

21st Century Computer Architecture
Slide from Patterson 2006
Old CW: Since cannot know future programs, find set of old programs to evaluate designs of computers for the future
• E.g., SPEC2006
What about parallel codes?
• Few available, tied to old models, languages, architectures, …
New approach: Design computers of future for numerical methods important in future
Claim: key methods for next decade are 7 dwarves (+ a few), so design for them!
• Representative codes may vary over time, but these numerical methods will be important for > 10 years
Patterson, UC Berkeley, also predicts importance of parallel applications.

Phillip Colella’s “Seven dwarfs”
Slide from Patterson 2006
High-end simulation in the physical sciences = 7 numerical methods:
1. Structured Grids (including locally structured grids, e.g. Adaptive Mesh Refinement)
2. Unstructured Grids
3. Fast Fourier Transform
4. Dense Linear Algebra
5. Sparse Linear Algebra
6. Particles
7. Monte Carlo
If add 4 for embedded, covers all 41 EEMBC benchmarks
8. Search/Sort
9. Filter
10. Combinational logic
11. Finite State Machine
Note: Data sizes (8 bit to 32 bit) and types (integer, character) differ, but algorithms the same
Slide from “Defining Software Requirements for Scientific Computing”, Phillip Colella, 2004
Well-defined targets from algorithmic, software, and architecture standpoint
Note scientific computing, media processing, machine learning, statistical computing share many algorithms

Workload Acceleration
RMS Workload Speedup (Simulated)
[Figure: Speedup (0 to 64) versus # of Cores (0 to 64) for Recognition, Mining and Synthesis workloads, with annotations "48x - 57x" and "19x - 33x". Legend: Ray Tracing (Avg.), Body Tracker, Mod. Levelset, FB_Est, Gauss-Seidel, Sparse Matrix (Avg.), Dense Matrix (Avg.), Kmeans, SVD, SVM_classification, Cholesky (watson), Cholesky (mod2), Forward Solver (pds-10), Forward Solver (world), Backward Solver (pds10), Backward Solver (watson).]
Group I: Scale well with increasing core count
• Examples: Ray tracing, body tracking, physical simulation
Group II: Worst-case scaling examples, yet still scale
• Examples: Forward & backward solvers
Source: Intel Corporation

Tera-Leap to Parallelism: ENERGY-EFFICIENT PERFORMANCE
[Figure: Energy Efficient Performance versus TIME, rising through Single-core chips, Instruction level parallelism, Hyper-Threading, Dual Core, Quad-Core and Tera-Scale Computing.]
More performance, using less energy
Science fiction becomes A REALITY

Summary
Technology is driving Intel to build multi-core processors.
• Power
• Performance
• Memory latency
• Complexity
• Reliability
Parallel programming is a central issue.
Parallel applications will become mainstream
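
The two quantitative rules used in the talk, the voltage-scaling rule of thumb and Amdahl's Law, can be checked with a few lines of Python. This is a minimal sketch: the function names are hypothetical, the rule of thumb is applied linearly exactly as the slide states it (1% voltage gives 1% frequency, 3% power, 0.66% performance), and the multi-core case assumes that power and performance simply add across cores.

```python
def amdahl_speedup(serial_fraction: float, cores: int) -> float:
    """Amdahl's Law as written on the slide: 1 / (Serial% + (1 - Serial%) / N)."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / cores)


def scaled_core(voltage_reduction: float) -> dict:
    """Relative frequency, power and performance of one core after
    reducing voltage by `voltage_reduction` (0.15 means 15%).

    Uses the slide's linear rule of thumb; it is only meant for small
    reductions in the region where frequency tracks voltage.
    """
    return {
        "frequency": 1.0 - 1.0 * voltage_reduction,
        "power": 1.0 - 3.0 * voltage_reduction,
        "performance": 1.0 - 0.66 * voltage_reduction,
    }


def multi_core(voltage_reduction: float, cores: int) -> dict:
    """Assumption: power and performance add up perfectly across cores."""
    one = scaled_core(voltage_reduction)
    return {
        "frequency": one["frequency"],
        "power": cores * one["power"],
        "performance": cores * one["performance"],
    }


if __name__ == "__main__":
    print(multi_core(0.15, 2))
    print(round(amdahl_speedup(0.067, 16), 2))
    print(round(amdahl_speedup(0.20, 6), 2))
```

With a 15% voltage reduction and two cores this gives a performance of about 1.8 and a power of about 1.1, which the slide lists as 1. The Amdahl cases reproduce the plot annotations: about 8x on 16 cores at 6.7% serial, and 3x on 6 cores at 20% serial.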