

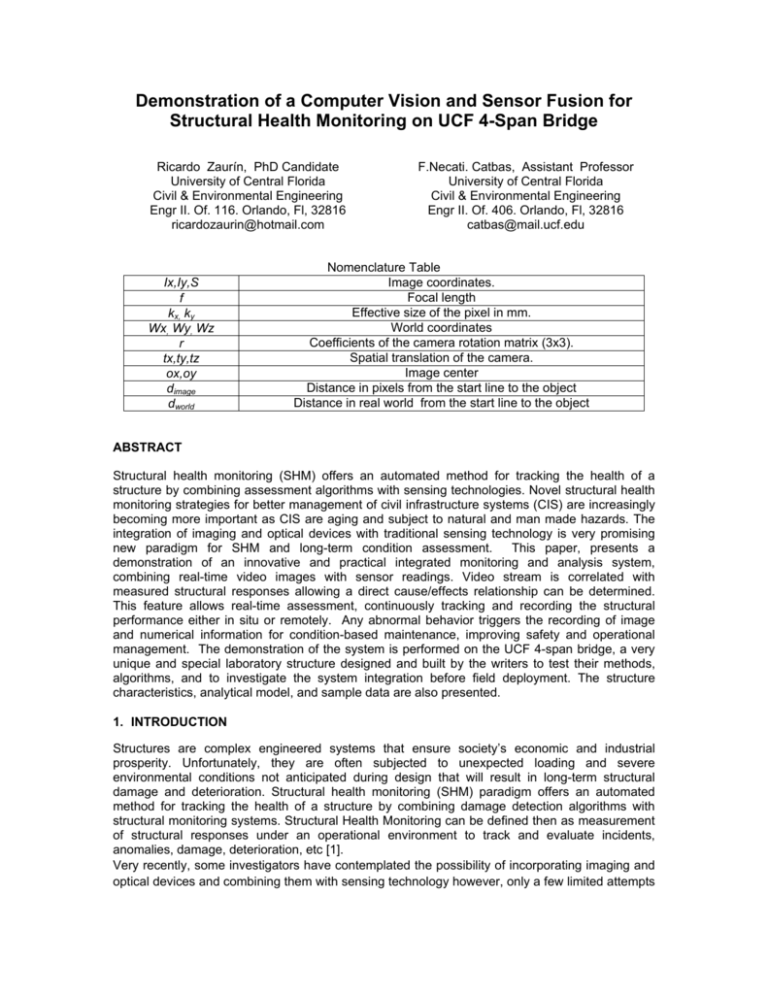

Demonstration of a Computer Vision and Sensor Fusion for Structural Health Monitoring on UCF 4-Span Bridge

Ricardo Zaurín, PhD Candidate
University of Central Florida, Civil & Environmental Engineering
Engr II. Of. 116. Orlando, Fl, 32816
ricardozaurin@hotmail.com

F. Necati Catbas, Assistant Professor
University of Central Florida, Civil & Environmental Engineering
Engr II. Of. 406. Orlando, Fl, 32816
catbas@mail.ucf.edu

Nomenclature Table

$I_x$, $I_y$, $S$: Image coordinates.
$f$: Focal length.
$k_x$, $k_y$: Effective size of the pixel in mm.
$W_x$, $W_y$, $W_z$: World coordinates.
$r$: Coefficients of the camera rotation matrix (3x3).
$t_x$, $t_y$, $t_z$: Spatial translation of the camera.
$o_x$, $o_y$: Image center.
$d_{image}$: Distance in pixels from the start line to the object.
$d_{world}$: Distance in real world from the start line to the object.

ABSTRACT

Structural health monitoring (SHM) offers an automated method for tracking the health of a structure by combining assessment algorithms with sensing technologies. Novel SHM strategies for better management of civil infrastructure systems (CIS) are becoming increasingly important as CIS age and are subjected to natural and man-made hazards. The integration of imaging and optical devices with traditional sensing technology is a very promising new paradigm for SHM and long-term condition assessment. This paper presents a demonstration of an innovative and practical integrated monitoring and analysis system that combines real-time video images with sensor readings. The video stream is correlated with measured structural responses so that a direct cause/effect relationship can be determined. This feature allows real-time assessment, continuously tracking and recording the structural performance either in situ or remotely. Any abnormal behavior triggers the recording of image and numerical information for condition-based maintenance, improving safety and operational management.
The demonstration of the system is performed on the UCF 4-span bridge, a unique laboratory structure designed and built by the writers to test their methods and algorithms and to investigate the system integration before field deployment. The structure characteristics, analytical model, and sample data are also presented.

1. INTRODUCTION

Structures are complex engineered systems that ensure society’s economic and industrial prosperity. Unfortunately, they are often subjected to unexpected loading and severe environmental conditions not anticipated during design, which result in long-term structural damage and deterioration. The structural health monitoring (SHM) paradigm offers an automated method for tracking the health of a structure by combining damage detection algorithms with structural monitoring systems. SHM can then be defined as the measurement of structural responses under an operational environment to track and evaluate incidents, anomalies, damage, deterioration, etc. [1]. Very recently, some investigators have contemplated incorporating imaging and optical devices and combining them with sensing technology; however, only a few limited attempts have been tested and implemented. Previously, a study proposed combining a networked sensor array, a database for storage and archival, computer vision applications for detection and classification of traffic, probabilistic modeling for structural reliability and risk analysis, and damage detection, with preliminary concepts and limited implementations [2]. In a similar study, accelerometers and strain gages were employed in an attempt to correlate their readings with traffic images [3]. A novel framework for structural health monitoring of bridges, combining computer vision and a distributed sensor network that allows not only recording events but also inferring the damage condition of the structure, was proposed very recently [4].
In that framework, the video stream is used in conjunction with computer vision techniques to determine the class and location of the vehicles moving over a bridge, as well as for surveillance purposes. A database with information from vehicle training sets, experimental results from the sensor network, and analytical models is suggested. The proposed system, by interpreting the images and correlating them with the information contained in the database, then evaluates the operational condition of the bridge and/or emits alerts regarding suspicious activities. Another study by Zaurin & Catbas discussed some of the most common issues related to computer vision and sensor fusion applications, as well as some possible practical solutions [5].

2. STRUCTURE DESCRIPTION

An experimental setup was devised and built by the researchers to investigate the issues concerning sensor fusion and video monitoring. The setup consists of a four-span bridge-type structure: two 4 ft approach/end spans and two 10 ft spans, formed by a 1/8 in. steel deck 48 in. wide, supported by two HSS 25x25x3 girders spaced 24 in. apart. The supports were designed so that they can easily be changed to roller, pinned, or fixed boundary conditions, as shown in Figure 1.

Figure 1. Experimental Setup, FEM, and Details

Girders and deck can be linked together at different locations to modify the stiffness of the system and to simulate damage. Radio-controlled vehicles can crawl over the deck with different loading conditions (from 10 lb to 60 lb). Wheel axle distance and speed are also variable. While a video camera is used to identify and track the vehicle, a set of strategically located sensors collects the data to be correlated with the video stream in real time.
It is important to mention that although the structure is not a scaled bridge model, its responses correspond to typical traffic-induced values for most small- to medium-span bridges. A more comprehensive description of this structure, as well as the analytical model, can be found in [4].

3. FRAMEWORK DESCRIPTION

Most previous work was based on ambient vibration studies alone and could not differentiate between ambient and traffic readings unless testing was scheduled with the bridge closed. The system demonstrated herein consists of five main components, integrated and closely interrelated: the vision module, the distributed sensor network array, the analytical model, the database, and the diagnostic module (Figure 2). By knowing the position and magnitude of the moving loads, sensor readings and video are synchronized, and the structure is monitored at every instant using operational traffic.

Figure 2. SHM Components

3.1. Vision Module

The video stream is collected from a camera located above the structure. Image processing and computer vision techniques are used for detecting and classifying moving loads (vehicles), as well as determining their position (tracking) while they pass over the structure.

3.1.1. Detection

For detecting moving objects, the “Background Subtraction” method is used. It consists of building a model of the scene background and, for each pixel in the image, detecting deviations of the pixel feature values from the model in order to classify the pixel as belonging either to the background or to the foreground. Although pixel intensity and color are the most commonly used features for scene modeling, there are many others, and new approaches are being explored. Morphological filtering and connected components grouping are used to eliminate spurious foreground regions caused by noise. Morphological filters reduce noise, eliminate isolated foreground pixels, and merge nearby disconnected foreground regions.
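
The detection steps introduced above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation: the per-pixel median background model, the 3x3 structuring element, the use of a closing operation, and the `threshold` and `min_size` values are all assumptions chosen for the example, and every function name is hypothetical. Frames are plain lists of grayscale rows so that only the standard library is needed.

```python
from collections import deque
from statistics import median


def background_model(frames):
    """Per-pixel median over several grayscale frames (assumed model)."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[median(f[r][c] for f in frames) for c in range(cols)]
            for r in range(rows)]


def subtract_and_threshold(frame, background, threshold):
    """Mark a pixel as foreground (1) when it deviates from the model."""
    return [[1 if abs(p - b) > threshold else 0 for p, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def _window(mask, r, c):
    """Values in the 3x3 window around (r, c); outside the image counts as 0."""
    rows, cols = len(mask), len(mask[0])
    return [mask[y][x] if 0 <= y < rows and 0 <= x < cols else 0
            for y in (r - 1, r, r + 1) for x in (c - 1, c, c + 1)]


def dilate(mask):
    """Grow foreground: a pixel is set if any pixel in its window is set."""
    return [[1 if any(_window(mask, r, c)) else 0
             for c in range(len(mask[0]))] for r in range(len(mask))]


def erode(mask):
    """Shrink foreground: a pixel survives only if its whole window is set."""
    return [[1 if all(_window(mask, r, c)) else 0
             for c in range(len(mask[0]))] for r in range(len(mask))]


def remove_small_regions(mask, min_size):
    """Keep only 4-connected foreground regions with at least min_size pixels."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            region, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:  # breadth-first flood fill of one region
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) >= min_size:
                for y, x in region:
                    out[y][x] = 1
    return out


def detect(frame, background, threshold=30, min_size=4):
    """Foreground mask after thresholding, closing, and size filtering."""
    mask = subtract_and_threshold(frame, background, threshold)
    mask = erode(dilate(mask))  # closing: merges nearby fragments, fills gaps
    return remove_small_regions(mask, min_size)
```

With a real video stream the same steps would typically run on array types from an image-processing library; the pure-Python form is used here only to keep the sketch self-contained.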
Connected component grouping is used to identify all regions that are connected and to eliminate those that are too small to correspond to real moving objects of interest. In this way, the remaining noise is eliminated. Figure 3 shows the different stages involved in the detection process.

Figure 3. Different Stages of Vehicle Detection (panels: Background model; Input frame; Background subtraction and thresholding; After thresholding; Object sizes; After dilation and hole filling; Detected vehicle)

3.1.2. Tracking

For tracking, the image/world correspondence has to be determined. The most common calibration process requires finding the intrinsic and extrinsic camera parameters that establish the relationship between image (I) and world (W) coordinates:

$$
\begin{bmatrix} I_x \\ I_y \\ S \end{bmatrix} =
\begin{bmatrix} -f k_x & 0 & o_x & 0 \\ 0 & -f k_y & o_y & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}
\begin{bmatrix} r_{1,1} & r_{1,2} & r_{1,3} & t_x \\ r_{2,1} & r_{2,2} & r_{2,3} & t_y \\ r_{3,1} & r_{3,2} & r_{3,3} & t_z \\ 0 & 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} W_x \\ W_y \\ W_z \\ 1 \end{bmatrix}
\qquad \text{Eq. (1)}
$$

These parameters can be found by knowing a set of points in the image and in the real world, establishing a system of equations, and using singular value decomposition to obtain the final solution (Eq. 1). Although this is a very common approach, it requires going through a complicated process. The geometry of the camera and setup is shown in Figure 4. This 3-D problem is greatly simplified when reduced to a 2-D situation. The ‘road’ is assumed to be planar; hence all the z coordinates are the same or the difference is negligible. If this assumption holds, the Lagrange interpolation method can be used as follows. Consider a set of data points ($d_{image}$, $d_{world}$), where $d_{image}$ is the distance in pixels between line ‘S’ and a set of known points in the image, and $d_{world}$ is the distance in the real world between line ‘S’ and the same set of points (Eq. 2 and Eq. 3). Then:

$$
d_{world}(S,i+1) = \sqrt{(W_{x,i+1} - W_{x,i})^2 + (W_{y,i+1} - W_{y,i})^2 + (W_{z,i+1} - W_{z,i})^2} + d_{world}(S,i) \qquad \text{Eq. (2)}
$$

$$
d_{image}(S,i+1) = \sqrt{(I_{x,i+1} - I_{x,i})^2 + (I_{y,i+1} - I_{y,i})^2 + (I_{z,i+1} - I_{z,i})^2} + d_{image}(S,i) \qquad \text{Eq. (3)}
$$

$$
d_{world}(d_{image}) = \sum_{j=0}^{k} d_{world}(j)\, l_j(d_{image}) \qquad \text{Eq. (4)}
$$

where the Lagrange basis polynomials are:

$$
l_j(d_{image}) = \prod_{\substack{i=0 \\ i \neq j}}^{k} \frac{d_{image} - d_{image}(i)}{d_{image}(j) - d_{image}(i)} \qquad \text{Eq. (5)}
$$

Figure 4. Geometry of the camera location and test set-up (distance labels: d(S,i2), d(S,i3), d(S,i4), d(S,i5))

A set of known points on the structure is used as reference (10 points for this example). Reference points can be either selected by a user (Figure 5) or detected automatically using pattern matching algorithms.

Figure 5. Selection of Reference Points

Once the vehicle is detected, the image position of its lower left corner is determined and its distance $d_{image}$ to line S is calculated. This distance is entered in Eq. 4 to obtain the position of the vehicle (moving load) on the structure. Figure 6 shows the path of the vehicle while moving on the structure, calculated using the described procedure. A Matlab code was developed to perform this calibration/correlation process. One advantage of this calibration method is that if for any reason, such as excessive wind, the camera moves and loses its calibration, recalibration can be performed in an automated way by detecting the reference points and correlating the image coordinates again with the corresponding real-world coordinates.

Figure 6. Path followed by Vehicle

4. SENSORS ARRAY/INSTRUMENTATION

This setup is instrumented, as shown in Figure 7, with strain gages, accelerometers, displacement transducers, tiltmeters, and temperature and wind sensors, although for this paper only strain values are presented. The current installation consists of an array of 35 dynamic sensors.
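
Looking back at the calibration of Section 3.1.2, the Lagrange mapping of Eq. 4 and Eq. 5 is compact enough to sketch in Python. This is an illustration under stated assumptions, not the Matlab code mentioned in the paper: the function names are hypothetical, the first reference point is assumed to lie on line S (so its cumulative distance is zero), and the reference values in the usage lines are invented for the example.

```python
import math


def cumulative_distances(points):
    """Cumulative path length along a list of points (Eq. 2 and Eq. 3).

    Assumes the first point lies on line S, so its distance is 0.
    """
    dists = [0.0]
    for p, q in zip(points, points[1:]):
        dists.append(dists[-1] + math.dist(p, q))
    return dists


def lagrange_interpolate(x_nodes, y_nodes, x):
    """Evaluate the Lagrange polynomial through the nodes at x (Eq. 4 and 5)."""
    total = 0.0
    for j, (xj, yj) in enumerate(zip(x_nodes, y_nodes)):
        basis = 1.0  # l_j(x), the j-th Lagrange basis polynomial
        for i, xi in enumerate(x_nodes):
            if i != j:
                basis *= (x - xi) / (xj - xi)
        total += yj * basis
    return total


# Hypothetical reference points: pixel coordinates and world coordinates (in.)
image_points = [(0, 0), (100, 0), (180, 0), (240, 0)]
world_points = [(0, 0, 0), (24, 0, 0), (48, 0, 0), (72, 0, 0)]

d_image = cumulative_distances(image_points)
d_world = cumulative_distances(world_points)

# World position of a vehicle detected 150 pixels from line S
vehicle_position = lagrange_interpolate(d_image, d_world, 150.0)
```

At any reference point the interpolant returns the measured world distance exactly, which gives a quick sanity check after recalibration. With many reference points a single high-degree polynomial can oscillate between nodes, a general property of polynomial interpolation that the paper does not discuss.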
A data acquisition system collects data as follows. Strain gages: 12 foil-type dynamic strain gages have been deployed, 6 on girder 1 and 6 on girder 2, collecting data at a rate of 3 kHz and averaging every 100 points for more accurate data, for an effective rate of 30 Hz. A total of 9 accelerometers collect data at 510 Hz, 3 for girder 1 and 3 for girder 2. Displacement transducers are located at the center of each span and collect data at the same rate as the strain gages; two dynamic tiltmeters are also installed. Temperature sensors (2) and wind sensors (1 for speed and 1 for direction) are also attached for future studies. Figure 8 shows the front panel for data collection.

Figure 7. Sensors array (legend: strain gage, accelerometer, displacement, tilt meter; dimension labels: 2 ft, 4 ft, 10 ft). NOTE: The location of strain gages, displacement transducers, and accelerometers is symmetric for Girder 1 and 2.

Figure 8. Front Panel for Data Acquisition

5. IMAGE AND SENSORS CORRELATION

A demonstration application for the correlation of sensors and video images has been developed. One example of this correlation is presented in Figure 9, which shows the time history for two strain gages (SG-3 and SG-4) due to a vehicle crossing the structure. Video images of the vehicle are also presented. By applying the image processing techniques described before, the vehicle position in the real world is calculated. Hence the position of the moving load (input) can be associated with the structural responses (output). Deviations of these input-output sets from the typical can be used as warnings for structural health monitoring and decision making. More results and interpretation will be presented at the conference.

Figure 9. Correlation of Strain Responses and Position of the Vehicle

6. CONCLUSIONS AND FUTURE WORK

The framework demonstrated herein represents a novel and practical technology in which new approaches and techniques are used for structural health monitoring.
Real-time integration of computer vision techniques and sensing technology is studied, with very promising results. Installed sensors provide structural response information with spatial resolution, while video cameras detect and track traffic. Integration of traffic and its corresponding structural responses is showing progress, as input moving loads and structural responses (output) are correlated. Deviations of these input-output sets from the typical can be used as warnings for structural health monitoring and decision making.

7. REFERENCES

1. Catbas, F.N., et al. Challenges in Structural Health Monitoring. in Proceedings of the 4th International Workshop on Structural Control. 2004. Columbia University, New York.
2. Elgamal, A., et al. Health Monitoring Framework for Bridges and Civil Infrastructure. in 4th International Workshop on Structural Health Monitoring. 2003. Stanford University, Stanford, CA.
3. Fraser, M. and A. Elgamal. Video and Motion Structural Monitoring Framework. in 4th China-Japan-US Symposium on Structural Control and Monitoring. 2006.
4. Zaurin, R. and F.N. Catbas. Computer Vision Oriented Framework for Structural Health Monitoring of Bridges. in IMAC XXV. Society for Experimental Mechanics. 2007. Orlando, FL: SEM.
5. Zaurin, R. and F.N. Catbas. Issues in Using Video Images for Structural Health Monitoring. in SMSST07. 2007. China.