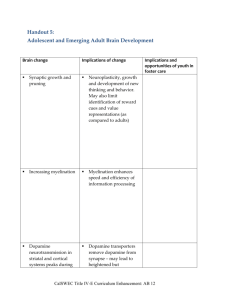

The debate over dopamine's role in reward: the case for incentive
