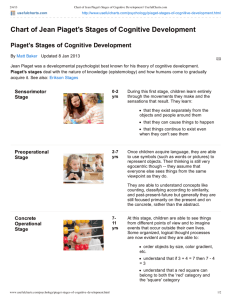

Intelligence for education: As described by Piaget and measured by
