Extension of GKB-FP algorithm to large-scale general

advertisement

Extension of GKB-FP algorithm to large-scale

general-form Tikhonov regularization

Fermı́n S. Viloche Bazán ∗, Maria C. C. Cunha and Leonardo S. Borges

†

Department of Mathematics, Federal University of Santa Catarina,

88040-900, Florianópolis SC, Brazil

e-mail: fermin@mtm.ufsc.br

Department of Applied Mathematics, IMECC-UNICAMP,

University of Campinas, CP 6065, 13081-970, Campinas SP, Brazil

e-mail:lsbplsb@yahoo.com

Abstract

In a recent paper [24] an algorithm for large-scale Tikhonov regularization in

standard form called GKB-FP was proposed and numerically illustrated. In this

paper, further insight into the convergence properties of this method is provided

and extensions to general-form Tikhonov regularization are introduced. In addition,

as alternative to Tikhonov regularization, a preconditioned LSQR method coupled

with an automatic stopping rule is proposed. Preconditioning seeks to incorporate smoothing properties of the regularization matrix into the computed solution.

Numerical results are reported to illustrate the methods on large-scale problems.

KEY WORDS: Tikhonov regularization, Large-scale problems, Ill-posed problems

1

Introduction

We are concerned with the computation of stable solutions to large-scale discrete illposed problems of the form

x = argminkb − Axk22

(1)

x∈Rn

where the matrix A ∈ Rm×n , m ≥ n, is severely ill-conditioned. Matrices of this type

arise, for example, when discretizing first kind integral equations with smooth kernel,

e.g., in signal processing and image restoration. In applications the vector b represents

the data and is assumed to be of the form b = bexact + e, where e denotes a noise vector

due to measurement or approximation errors, bexact denotes the unknown error-free data,

and xexact = A† bexact (where A† denotes the Moore-Penrose Pseudo Inverse of A) is the

noise-free solution of (1). In these cases, due to the noise in the data, the least squares

solution xLS = A† b is dominated by the noise and regularization methods are required to

∗

†

The work of this author is supported by CNPq, Brazil, grants 308709/2011-0, 477093/2011-6.

This research is supported by FAPESP, Brazil, grant 2009/52193-1.

1

construct stable approximations to xexact . In Tikhonov regularization [1], we replace xLS

by the regularized solution

xλ = argmin kb − Axk22 + λ2 kLxk22 ,

(2)

x∈Rn

where λ > 0 is the regularization parameter and L ∈ Rp×n is referred to as the regularization matrix. When L = In , the n × n identity matrix, the Tikhonov regularization

problem is said to be in standard form, otherwise, it is in general form. Solving (2) is

equivalent to solve the so-called regularized normal equations

(AT A + λ2 LT L)x = AT b,

(3)

whose solution xλ is unique when N (A) ∩ N (L) = {0}. In this paper N (·) denotes

the null space of (·) and R(·) denotes its column space. When A and L are small, the

regularized solution can readily be computed using the GSVD of the pair (A, L), and

good approximate solutions can be obtained provided that λ and L are properly chosen.

There are many Tikhonov parameter choice methods. These include the discrepancy

principle (DP) of Morozov [2], which depends on a priori knowledge of the norm of the

error e, and the so called noise-level free or heuristic parameter choice rules such as the

L-Curve criterion of Hansen and O’Leary [3], the Generalized Cross-Validation (GCV) of

Heath, Golub and Wahba [4] and the algorithmic realization of Regińska’s rule [5] via the

fixed-point (FP) method by Bazán [6], among others.

It is well-known that the regularization parameter selected by DP depends on the norm

of the error kek2 and that the error in the corresponding regularized solution converges

to zero as kek2 → 0 (under certain conditions), see, e.g., [7]. Unfortunately, this is not

the case with heuristic rules for which the regularization parameter is not a function of

the noise level. As a result, heuristic rules are not without difficulties and cannot be

successful in all problems. This is in accordance with a famous result of Bakushinski [8],

which asserts that parameter choice rules that do not depend explicitly on the noise level

should fail, at least for some problems. Nevertheless, noise-level free rules are widely used

in real applications, as for example the L-curve method. For discussions and analyses of

heuristic rules the reader is referred to [7, 9]. A numerical comparison of many heuristic

parameter choice rules can be found in [10], and, recently in [11].

From the practical point of view, all of the above and many other methods from the

literature can be readily implemented using the GSVD when A and L are small or of

moderate size. However, for large-scale problems the GSVD is computationally demanding, and one can use instead iterative methods such as LSQR or CGLS to approximate

xλ provided that the parameter λ is known a priori. Another way to proceed is to use

projection methods based on Lanczos/Arnoldi iterations; these include CGLS/LSQR and

the family of minimum residual methods [12, 13, 14, 15, 16, 17, 18, 19], where the number

of iterations k plays the role of the regularization parameter and where the choice of the

”best” k can be done using the discrepancy principle or L-curve [15, 20]. However, it is

well known that DP is likely to fail if the error norm kek is poorly estimated, and that

the L-curve criterion works well except possibly when the curve is not L-shaped, see e.g.,

Morigi [21] or when the curvature of the L-curve has more than a one local maximum,

see, e.g., Hansen et al. [20] or [22].

The difficulty in determining reliable stopping criteria for iterative methods can be

alleviated by combining them with an inner regularization method, which gives rise to

2

Hybrid methods. For the case L = In , two successful hybrid methods that do not require

knowledge of kek2 and combine projection over the Krylov subspace generated by the

Golub-Kahan bidiagonalization (GKB) method and standard Tikhonov regularization at

each iteration, are W-GCV [23] and GKB-FP [24]. Here the difference is on the projected

problem: while W-GCV uses weighted GCV as parameter choice rule, GKB-FP uses the

FP method. The case L 6= In has received less attention and there are few efficient

methods for large-scale regularization. An interesting approach that uses a joint bidiagonalization (JBD) procedure applied to the pair (A, L) and minimizes the Tikhonov

functional over the generated Krylov subspace is proposed by Kilmer et al. [15]. This

method may be infeasible for large-scale problems because the JBD procedure is computationally demanding. More recently, J. Lampe et. al [25], and Reichel et. al [26],

propose approximate solutions by minimizing the Tikhonov functional over generalized

Krylov subspaces; in both cases the regularization parameter for the projected problem is

determined by the discrepancy principle. A related method which also uses the discrepancy principle as parameter choice rule can be found in [27].

In this paper we assume that no estimate of kek2 is available and concentrate on

extensions of the GKB-FP algorithm to large-scale general-form Tikhonov regularization.

Two approaches are considered. The first approach relies on the observation that the

general-form Tikhonov regularization can be transformed into a Tikhonov problem in

standard form which can be handled efficiently by GKB-FP; therefore our first approach

is to apply GKB-FP to the transformed Tikhonov problem. As for the second approach,

it constructs regularized solutions by minimizing the general-form Tikhonov problem over

the Krylov subspace generated by the GKB algorithm applied to matrix A, as suggested

in [27]. In all cases, the underlying philosophy of GKB-FP is preserved, i.e., the fixed-point

method is always used on the projected problem. Also, to overcome possible difficulties

associated with the Tikhonov regularization parameter selection problem at each iteration,

as done by GKB-FP or W-GCV, we propose an iterative regularization algorithm based

on LSQR applied to the least squares problem min kb̄ − Āx̄k2 , where b̄ and Ā arise from

the transformed Tikhonov problem, coupled with a stopping rule that does not require

knowledge of the norm of the noise kek2 and that can be regarded as a discrete counterpart

of Regińska’s rule. Theoretically, we follow a paper by Hanke and Hansen [13], see also [28],

where it is shown how to construct regularized solutions to (2) via CGLS/LSQR in such

a way that the smoothing properties of L are incorporated into the iterative process, and

hence into the computed solution.

The paper is organized as follows. Section 2 presents an analysis of GKB-FP within

the framework of projection methods which provides valuable insight into the convergence

properties of the algorithm. In Section 3 the extensions of GKB-FP to general-form

Tikhonov regularization are presented and discussed. Our alternative to general-form

Tikhonov regularization comes in Section 4, and Section 5 contains numerical examples

devoted to illustrate the potential of the methods. Conclusions are in Section 6.

2

GKB-FP as a projection method

The purpose of this section is to provide further insight into the convergence properties

of GKB-FP. Recall that after k < n steps, the GKB algorithm applied to A with initial

vector b/kbk2 yields two matrices Uk+1 = [u1 , . . . , uk+1 ] ∈ Rm×(k+1) and Vk = [v1 , . . . , vk ] ∈

3

Rn×k with orthonormal columns, and a lower bidiagonal matrix Bk ∈ R(k+1)×k ,

such that

α1

β2

Bk =

α2

β3

..

.

..

.

αk

βk+1

,

β1 Uk+1 e1 = b = β1 u1 ,

AVk = Uk+1 Bk ,

AT Uk+1 = Vk BkT + αk+1 vk+1 eTk+1 ,

(4)

(5)

(6)

(7)

where ei denotes the i-th unit vector in Rk+1 [29]. The columns of Vk provide an orthonormal basis for the generated Krylov subspace Kk (AT A, AT b), which is an excellent

choice for use when solving discrete ill-posed problems [30]. GKB-FP combines the GKB

algorithm with standard form Tikhonov regularization in Kk (AT A, AT b), choosing the regularization parameter on the projected problem via the FP method [6]. The FP method

relies on an earlier work of Regińska [5], where the regularization parameter is chosen as

a minimizer of the function

Ψ(λ) = krλ k22 kxλ k2µ

2 , µ > 0,

(8)

where rλ = b − Axλ . Regińska proved that if the curvature of the L-curve is maximized

at λ = λ∗ , and if the slope of the L-curve corresponding to λ = λ∗ is −1/µ, then Ψ(λ) is

minimized at λ = λ∗ . However, no method to compute the minimum was done afterward.

More recently, Bazán [6] investigated the issue and, with a proper choice of µ, showed that

the minimum can be calculated via fixed-point iterations, giving rise to the FP method.

The key idea behind this parameter choice rule can be explained as follows. Let

Rλ = (AT A + λ2 In )−1 AT . Then the error in the regularized solution xλ can be expressed

as xexact − xλ = xexact − Rλ bexact − Rλ e, and therefore

kxexact − xλ k2 ≤ kxexact − Rλ bexact k2 + kRλ ek2 ≡ E1 (λ) + E2 (λ).

(9)

The term E1 (λ) represents the regularization error and increases with λ. The second

term denotes the error caused by the noise and decreases with λ, see, e.g., [31]. Thus,

in order to obtain a small error we should balance the terms as in this event the bound

is approximately minimized. However, neither E1 (λ) nor E2 (λ) is available, hence, an

alternative is to minimize a model for the bound. The motivation for using Ψ(λ) as a

model is that minimizing log(Ψ(λ)) we minimize the sum of competing terms, since kxλ k2

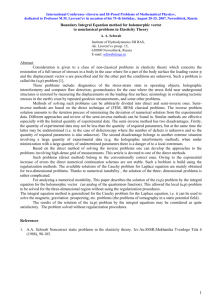

decreases with λ while krλ k2 increases, as illustrated in Figure 1.

The FP method can be summarized as follows:

• Given an initial guess, FP takes µ = 1 as a default value and proceeds by computing

the iterates

√

λj+1 = φ(λj ), j ≥ 0, where φ(λ) = µkrλ k2 /kxλ k2 , λ > 0,

(10)

until the largest convex fixed-point of φ is reached.

4

0.79

10

E (λ)

1

log(Ψ(λ))

1

10

E (λ)

2

E (λ)+E (λ)

1

2

0.78

10

0

10

0.77

10

0

0.01

0.02

0.03

0

0.01

0.02

0.03

Figure 1: Bound (9) and Ψ(λ) for shaw problem from [32], n = 512 and b = bexact +e where

e is white noise with kek2 = 0.005kbexact k2 . The small circle points out the location of

λoptimal = argmin{E1 (λ) + E2 (λ)} = 0.0102 and of λFP = argmin{Ψ(λ), µ = 1} = 0.0116.

The relative errors in xλoptimal and xλFP are 5.34% and 5.36% respectively.

• If µ = 1 does not work, µ is adjusted and the iterations restart; see [6, 33] for

details.

The GKB-FP algorithm computes approximations to the sought fixed-point by com√

(k)

(k)

puting a finite sequence of fixed-points of functions φ(k) (λ) = µkrλ k2 /kxλ k2 , where

(k)

xλ solves the constrained problem

(k)

xλ =

(k)

argmin

{kAx − bk22 + λ2 kxk22 }

(11)

x∈Kk (AT A,AT b)

(k)

and rλ = b − Axλ . Based on (5)-(7), it is straightforward to prove that

(k)

(k)

xλ = V k yλ ,

(k)

with yλ = argmin{kBk y − β1 e1 k22 + λ2 kyk22 },

(12)

y∈Rk

and the regularized solution norm and the corresponding residual norm satisfy

(k)

(k)

kxλ k2 = kyλ k2 ,

(k)

(k)

and krλ k2 = kBk yλ − β1 e1 k2 .

(13)

The evaluation of φ(k) (λ) for each λ can be done approximately in O(k) arithmetic

operations, which corresponds to the cost of solving the projected problem (12). Numerical

examples on large-scale problems reported in [24] show that the largest convex fixed-point

of φ(λ) is quickly captured. For a detailed description of GKB-FP, see [24] again; here

we summarize the main steps of GKB-FP, since, as we will see later, they will be also

followed by the methods proposed in this work.

GKB-FP Algorithm:

1. Perform p0 > 1 GKB steps applied to A with initial vector b/kbk2 .

2. For k ≥ p0 compute the fixed-point λ(k)

of φ(k) (λ) until a termination criterion is

FP

satisfied.

(k)

3. Once convergence is achieved compute xλ using (12).

5

The value of p0 can be freely chosen by the user. In our numerical experiments we take:

p0 = 10 for small problems, p0 = 15 for relatively large problems, and p0 = 20 for larger

problems. As for the initial guess of λ when computing the first fixed point at step k = p0 ,

it is set equal to 10−4 . For further details about the initial guess, see [6, 24].

To provide further insight into the convergence properties of GKB-FP, we will concentrate on the question of how well xλ can be approximated by “projecting” the large-scale

problem onto a subspace of small dimension. More specifically, assume that {Vk }k≥1 is a

family of k dimensional subspaces such that V1 ⊂ V2 ⊂ · · · ⊂ Vk ⊂ Vk+1 ⊂ · · · , and for

(k)

k > 1 consider approximations xλ defined by

(k)

xλ = argmin{kAx − bk22 + λ2 kLxk22 }.

(14)

x∈Vk

(k)

Then our goal is to bound the error kxλ − xλ k2 for the case where the regularization

parameter is selected by the FP method. To this end we first introduce some notation

that will be used later. Let the reduced singular value decomposition of A be given by

A = UΣVT ,

(15)

where U = [u1 , . . . , un ] ∈ Rm×n and V = [v1 , . . . , vn ] ∈ Rn×n have orthonormal columns

and Σ = diag(σ1 , . . . , σn ) is a diagonal matrix with σ1 ≥ σ2 ≥ · · · ≥ σn ≥ 0. In addition,

let Uk , Vk and Sk be defined by

Uk = [u1 , . . . , uk ],

Sk = span{v1 , . . . , vk },

Vk = [v1 , . . . , vk ],

and notice that if L = In , then the regularized Tikhonov solution xλ can be written as

n

X

σi (uTi b)

vi .

xλ =

σ 2 + λ2

i=1 i

(16)

We start with the following technical result.

Lemma 2.1. Let Pk denote the orthogonal projection on Vk . Define γk = kA − APk k2

and δk = kL − LPk k2 . Then ∀λ > 0 there holds,

r

γ2

(k)

kLxλ − Lxλ k2 ≤ δk2 + k2 k(In − Pk )xλ k2 .

(17)

λ

Proof : Since N (A) ∩ N (L) = {0}, it follows that the function < u, v ># =< Au, Av >

+λ2 < Lu, Lv >, where < ·, · > denotes the usual inner product in Rn , defines an inner

product in Rn . Note that (3) implies < Axλ − b, Ax > +λ2 < Lxλ , Lx >= 0, ∀x ∈ Rn and

(k)

that < Axλ − b, Aϕ > +λ2 < Lxλ , Lϕ >= 0, ∀ϕ ∈ Vk . Combining these we have

(k)

(k)

< xλ − xλ , ϕ ># = 0,

∀ϕ ∈ Vk .

(18)

Therefore, xλ = Pk xλ , where Pk denotes the orthogonal projector onto the subspace Vk

with respect to the inner product < ·, · ># and induced norm k · k# . Then

(k)

kxλ − xλ k2# =

≤

=

≤

=

kxλ − Pk xλ k2#

kxλ − Pk xλ k2#

.

kA(In − Pk )xλ )k22 + λ2 kL(In − Pk )xλ k22

2

2

2

2

2

kA(In − Pk )k2 k(In − Pk )xλ k2 + λ kL(In − Pk )k2 k(In − Pk )xλ k2

(γk2 + λ2 δk2 )k(In − Pk )xλ k22 .

6

(k)

(k)

Now it suffices to use the inequality λ2 kL(xλ − xλ )k22 ≤ kxλ − xλ k2# and the proof is

complete.

We are in a position to state the main result of the section.

Theorem 2.1. Let Pk and Pk be, respectively, the orthogonal projector onto Vk and Sk ,

and let Ωk be the subspace angle between Vk and Sk . Then for arbitrary λ > 0 there holds

k(In − Pk )xλ k ≤ sin(Ωk )kxλ k2 + γk

kb̃k k2

,

λ2

(19)

where b̃k = b − Uk UTk b. As a consequence, whenever L = In and the regularization parameter is selected by the fixed-point method, which is denoted by λFP , there holds

s

"

#

(k)

2

kxλFP − xλ k2

γ

k

b̃

k

γ

k 2

k

FP

≤ 1 + 2k sin(Ωk ) +

,

(20)

kxλFP k2

λFP

λFP krλFP k2

Proof : The regularized solution xλ can be written as xλ = Pk xλ +(In −Pk )xλ . Multiplying

on both sides of this equality by (In − Pk ) and taking norms

k(In − Pk )xλ k ≤ k(In − Pk )Pk xλ k2 + k(I − Pk )(In − Pk )xλ k2

(21)

The first term can be bounded as

k(In − Pk )Pk xλ k2 ≤ sin(Ωk )kxλ k2 ,

(22)

where we used the fact that k(I − Pk )Pk k2 = sin(Ωk ), see [34, Theorem 2.6.1, p. 76]. To

bound the second term, observe that from (16)

(In − Pk )xλ =

n

X

σi (uTi b)

v = (AT A + λ2 In )−1 AT b̃k = AT (AAT + λ2 Im )−1 b̃k ,

2

2 i

σ

+

λ

i=k+1 i

where we used the SVD of A to obtain (AT A + λ2 In )−1 AT = AT (AAT + λ2 Im )−1 . Thus,

k(In − Pk )(In − Pk )xλ k2 = k(In − Pk )AT (AAT + λ2 Im )−1 b̃k k2 ≤ γk

kb̃k k2

.

λ2

(23)

The inequality (19) follows by replacing (22) and (23) in (21).

On the other hand, if the regularization parameter λ is selected by the fixed-point

method, which means, λFP = krλFP k2 /kxλFP k2 , then (19) becomes

!

k(In − Pk )xλFP k

γk kb̃k k2

,

(24)

≤ sin(Ωk ) +

kxλFP k2

λFP krλFP k2

The second part of the proof concludes using this inequality in (17).

Theorem 2.1 shows that if the subspace Vk approximates Sk well, in which case sin(Ωk )

(k)

is small, then the solution of the projected problem (14), xλ , will be a good approximaFP

tion to xλFP provided that the ratio γk /λFP is sufficiently small. We shall illustrate this

by computing the relative error k(In − Pℓ )xλFP k2 /kxλFP k2 and its bound (24) for the case

7

where Vℓ coincides with Sℓ , as well as the bound for the case where we take approximations

to Sℓ instead. To this end, we consider two test problems from [32] and for fixed k, take

e k = Vk v, where

Seℓ , ℓ = 1, . . . , k, to be the subspace spanned by the first ℓ columns of V

k×k

Vk is from (6) and v ∈ R

is the matrix of right singular vectors of Bk also introduced

in (6). It is known that for the first values of ℓ the subspaces Seℓ approximate Sℓ well

and that the quality of the approximation deteriorates as ℓ approaches k, see, e.g., [30,

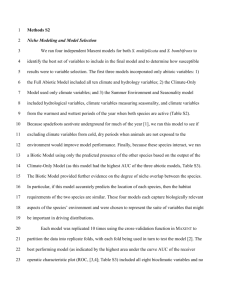

Chapter 6]. Computed quantities for k = 80 are displayed in Figure 2.

5

5

10

10

LHS

GKB

SVD

LHS

GKB

SVD

0

0

10

10

20

40

60

80

20

40

60

80

Figure 2: Relative error and estimates (24) for phillips (left) and heat (right) test problems

from [32], for n = 512 and b = bexact + e where e is white noise such that kek2 =

0.005kbexact k2 . Here, LHS stands for left-hand side of (24).

We now turn to describe the quantity γk as a function of k in connection with the GKB

process. To this end we shall assume exact arithmetic and that GKB runs to completion,

i.e., we are able to run n GKB steps without interruption, which is reasonable for discrete

ill-posed problems since the singular values decay gradually to zero without any gap.

Theorem 2.2. Assume that the GKB algorithm applied to A runs to completion, and let

bk be the bidiagonal matrices defined respectively by

Gk and G

αk+1

βk+2

Gk =

αk+2

..

.

Then for k = 1, . . . , n − 1 we have γk =

..

.

βn

αn

βn+1

kGk k2 ,

bk k2 ,

kG

bk

G

=

βn+1 eTn−k

(25)

if m > n,

, and γk+1 ≤ γk .

if m = n,

Proof : Assume that m > n. If the GKB algorithm runs to completion, from (6) it

follows that A = Un+1 Bn Vn , where Un+1 ∈ Rm×(n+1) and Vn ∈ Rn×n have orthonormal

columns, and Bn ∈ R(n+1)×n is lower bidiagonal. But matrix A can be rewritten as

B

V T,

(26)

A=U

0

where U = [Un+1 U ⊥ ] ∈ Rm×m with U ⊥ chosen so that U is orthogonal, V = Vn , B = Bn ,

and 0 ∈ R(m−n−1)×n is a zero matrix. Then Pk = Vk VkT , APk = AVk VkT = Uk+1 Bk VkT by

(5), and A − APk = U (B − U T Uk+1 Bk VkT V )V T . Hence

kA − APk k2 = kB − U T Uk+1 Bk VkT V k2 = kGk k2 ,

8

which proves the first statement of the theorem.

bn V,

Now if m = n and the GKB algorithm runs n steps, then we have that A = U B

n×n

n×n

bn ∈ R

where U, V ∈ R

have orthonormal columns, and B

defined by

α1

β2

bn =

B

α2

..

.

..

.

βn

αn

,

(27)

and the proof proceeds in the same way as above.

bk+1 ) is a

Finally, the second statement is a consequence of the fact that Gk+1 (resp. G

bk ), which completes the proof.

submatrix of Gk (resp. G

Remark 1: The number γk can be approximated using the 2-norm of the first column of

Gk , that is, if e1 denotes the unit vector in Rn−k , then

q

2

2

γk ' ||Gk e1 k2 = αk+1

.

(28)

+ βk+2

Remark 2: Provided that rank(APk ) = k, we have

γk ≥ σk+1 ,

(29)

the equality being attained when Vk is spanned by the first k right singular vectors of A.

In this case APk is the matrix of rank k that is closest to A in the 2-norm sense, see, e.g.,

[34, Thm. 2.5.3]. In practice, q

because Vk carries relevant information on the first k right

singular vectors, both γk and

2

2

αk+1

+ βk+2

approximate σk+1 , with γk decreasing as fast

as σk+1 , see Figure 3. As a result, whenever the fixed point of φ(k) (λ), λ(k)

, is close to

FP

λFP , the bound (17) becomes small because γk decreases quickly near zero. This explains

why GKB-FP often converges rapidly, as reported in [24].

2

2

10

10

σk+1

γk

0

10

γk

0

10

||Gke1||2

−2

||Gke1||2

−2

10

10

−4

−4

10

0

σk+1

10

20

40

60

80

0

20

40

60

80

Figure 3: Estimates (28) and (29) for phillips (left) and heat (right) test problems from

[32], for n = 512 and b = bexact + e where e is white noise such that kek2 = 0.005kbexact k2 .

3

Extensions of GKB-FP

We shall now consider extensions of GKB-FP to general-form Tikhonov regularization, concentrating, in particular, on problems that involve rank-deficient matrices L with

9

much more rows than columns, as often seen, e.g., in deblurring problems. Two welldistinguished approaches shall be considered. One approach is based on the fact that we

can apply GKB-FP to a related transformed problem. The second approach constructs

regularized solutions by solving the constrained problem (14).

3.1

Extensions of GKB-FP via standard-form transformation

Theoretically, we can always transform the general-form Tikhonov problem (2) into a

problem in standard form

x̄λ = argmin kb̄ − Āx̄k22 + λ2 kx̄k22 .

(30)

x̄∈Rp

If L is invertible we use y = Lx ⇔ x = L−1 y, b̄ = b, and Ā = AL−1 . When L is not

invertible the transformation takes the form

xλ = L†A x̄λ + xN ,

Ā = AL†A ,

b̄ = b − AxN ,

(31)

where xN lies in the null space of L and

† †

†

†

LA = I n − A I n − L L A L ,

is the A-weighted generalized inverse of L. If we know a full rank matrix W whose columns

span the null space N (L), then xN = W(AW )† b, and the A-weighted generalized inverse

of L reduces to L†A = In − W (AW )† A L† [28]. Thus, for the GKB-FP algorithm to be

successfully applied to (30), the matrix Ā must not be explicitly formed and the matrixvector products with Ā and ĀT must be performed as efficiently as possible. Is is clear

†

that these matrix-vector products depend on the way the products with L† and LT are

performed, and that computation of the product L† u requires determining the minimum

2-norm least squares solution of the problem

tLS = argmin kLt − uk2

(32)

†

as quickly and efficiently as possible; the same observations holds for LT v. Searching for

this efficiency, one can try iterative methods such as CGLS or LSQR and its subspace

preconditioned version [16]. Unfortunately, our computational experience with these techniques when kLxk2 is a Sobolev norm has been unsatisfactory due to the slow convergence of the iterates; however, for applications involving sparse regularization matrices

e.g., banded regularization matrices with small bandwidth, among others, the following

approaches can be considered:

3.1.1

LU-based approach

If L is assumed to be rank deficient with rank(L) = q < min{p, n}, we can determine

permutation matrices PL ∈ Rp×p and QL ∈ Rn×n such that

PL LQL = L̂Û

10

(33)

where L̂ ∈ Rp×q is “unit lower triangular”, Û ∈ Rq×n is “upper triangular”, and both have

rank q. Thus the linear squares problem (32) requires the minimum 2-norm least squares

solutions of the full-rank subproblems:

yLS = argmin kL̂y − PL uk2 ,

and tLS = argmin kÛ t − yLS k2 .

(34)

Once tLS is determined, we then get t = L† u = QL tLS . The approach becomes attractive

specially when L is banded with small bandwidth; in this case the two above subproblems

can be handled efficiently [28, 30], an important condition for GKB-FP to work well.

3.1.2

LD -based approach

In this case we consider regularization matrices L of the form

L=

Iñ ⊗ Ld1

Ld2 ⊗ Im̃

with Ld1 ∈ R(ñ−d1 )×ñ , and Ld2 ∈ R(m̃−d2 )×m̃ . The key idea is that if we use the SVDs of

the small matrices Ld1 and Ld2 : Ld1 = Ud1 Σd1 VdT1 , Ld2 = Ud2 Σd2 VdT2 , then the smoothing

seminorm satisfies the property

kLxk2 = kLD xk2 ,

with LD = D(Vd1 ⊗ Vd2 )T ,

(35)

where D is a nonnegative diagonal such that [14]

D2 = ΣTd1 Σd1 ⊗ Im + In ⊗ ΣTd2 Σd2 .

(36)

The null space of LD , N (LD ), is spanned by the columns of the matrix Vd1 ⊗Vd2 associated

with the zero entries of D, see [14] again. As a consequence, we can now solve the standard

Tikhonov problem (30) associated with

(37)

xλ = argmin kb − Axk22 + λ2 kLD xk22 ,

x∈Rn

taking advantage of the fact that Vd1 ⊗ Vd2 is orthogonal; in this case the products L†D u

T

and L†D v required in (32) can be performed efficiently as

L†D u = (Vd1 ⊗ Vd2 )D† u,

T

L†D v = D† (Vd1 ⊗ Vd2 )T v.

(38)

The main advantage here is that the cost of the subproblem (32) reduces essentially to a

matrix-vector product involving the Kronecker product Vd1 ⊗ Vd2 , which is important for

the efficiency of GKB-FP. Further savings are possible when the matrix A is a Kronecker

product of the form A1 ⊗ A2 , as seen in image processing and numerical analysis. To see

how this can be done, recall that Vd1 ⊗ Vd2 is orthogonal and consider the transformation

x̌ = (Vd1 ⊗ Vd2 )T x.

(39)

Then (37) reduces to

x̌λ = argmin kb − Ǎx̌k22 + λ2 kDx̌k22 ,

x̌∈Rn

with Ǎ = (A1 Vd1 ) ⊗ (A2 Vd2 ),

(40)

where only the matrices A1 Vd1 and A2 Vd2 need to be stored. Now it is evident that

the Tikhonov problem (40) can be solved much more efficiently than the one in (37).

Numerical results that illustrate the efficiency of this approach on deblurring problems

are postponed to the next section.

11

3.2

PROJ-L: GKB-FP free of standard-form transformation

We now consider regularized solutions obtained by minimizing the general-form Tikhonov

functional over the subspace Kk (AT A, AT b), as suggested in [27]. Therefore the approximate solution is now determined as

(k)

(k)

xλ = V k yλ ,

(k)

yλ = argmin{kAVk y − bk22 + λ2 kLVk yk22 }.

(41)

y∈Rk

Note that if we use the QR factorization of the product LVk , LVk = Qk Rk , using (5)-(6)

the least squares problem in (41) reduces to

(k)

yλ = argmin{kBk y − β1 e1 k22 + λ2 kRk yk22 },

(42)

y∈Rk

which can be computed efficiently in several ways, e.g., by a direct method or by first

transforming the stacked matrix [BkT λRkT ]T to upper triangular form, as done when

implementing GKB-FP [24]. Then it follows that

(k)

(k)

kLxλ k = kRk yλ k2 ,

(k)

(k)

krλ k2 = kBk yλ − β1 e1 k,

(43)

and the function φ(k) (λ) associated with the projected problem is

(k)

φ(k) (λ) =

√ kBk yλ − β1 e1 k2

.

µ

(k)

kRk yλ k2

(44)

Unlike the approach in [27] where the Tikhonov regularization parameter is determined

by the discrepancy principle, our proposal denoted by PROJ-L is to follow the same ideas

as GKB-FP. That is, for chosen p0 > 1 and k ≥ p0 , we determine the largest convex

fixed-point λ(k)

of φ(k) (λ), repeating the process until a stopping criterion is satisfied.

FP

Numerical examples have shown that the largest fixed-point of φ(λ) associated with the

large-scale problem is captured in a relatively small number of GKB steps.

To make our proposal computationally feasible, the following aspects must be considered

• the initial guess of the fixed-point method on the projected problem at step k + 1

is taken to be the fixed-point λ(k)

and

FP

• the QR factorization LVk = Qk Rk is calculated only once at step k = p0 and is

updated in subsequent steps.

Algorithms for updating the QR factorization can be found in [34, Chapter 12].

4

Alternative to Tikhonov regularization: smoothed

preconditioned LSQR

We have seen that GKB-FP requires the projected problem to be solved repeatedly

in order to calculate solution and residual norms for distinct values of the regularization

12

parameter λ. This may be expensive in connection with large-scale problems. An alternative is to use iterative regularization in which the number of iterations plays the role of

the regularization parameter. Methods in this class include CGLS/LSQR, GMRES, MINRES, and Landweber iterations, among many others, see., e.g., [14, 15, 16, 18, 19, 30, 35].

While the regularizing properties of these methods are reasonably well understood, less

clear is the situation when the task is to determine a proper number of iterations without

a priori knowledge about the norm of the noise. The purpose of this section is to show how

to construct regularized solutions via a version of LSQR which incorporates the smoothing properties of the regularization matrix L into the iterative process and automatically

stops the iterations without requiring the norm of the noise as input data.

4.1

Stopping rule for LSQR

Recall that LSQR constructs a sequence of approximations for the solution of (1) by

minimizing the residual over the Krylov subspace Kk (AT A, AT b). That is, the LSQR

approximations are defined by

xk =

argmin

x∈Kk (AT A,AT b)

kAx − bk2 ,

(45)

and can be expressed as

xk = V k yk ,

yk = argmin kBk y − β1 e1 k2 ,

(46)

y∈Rk

where Bk and Vk are from (4)-(6) [17]. To describe our stopping rule, we first consider

a truncation criterion for the method of truncated SVD (TSVD) solutions. Using the

notation introduced in (15) the TSVD solution xk is defined as

xk =

A†k b

=

k

X

uTj b

j=1

σj

vj ,

(47)

P

where Ak = kj=1 σj uj vjT and k is the truncation parameter. We shall assume that the

noise free data bexact satisfies the discrete Picard condition (DPC) [36], i.e., the coefficients

|uTj bexact |, on the average, decay to zero faster than the singular values, and that the noise

is Gaussian zero mean. These assumptions imply that there exists a integer k ∗ such that

|uTj b| = |uTj bexact + uTj e| ≈ |uTj e| ≈ constant, for j > k ∗ .

(48)

We now note that an error estimate for xk can be expressed as

kxk − xexact k ≤ kA†k bexact − xexact k2 + kA†k ek2 ≡ E1 (k) + E2 (k),

with

E1 (k) =

n

X

|uTj bexact |2

σj2

j=k+1

!1/2

,

E2 (k) =

k

X

|uTj e|2

j=1

σj2

!1/2

.

(49)

(50)

The first term, called the regularization error, decreases with k and can be small when

k is large. The second term measures the noise magnification error; it increases with k

13

and can be large for σj ≈ 0. Thus the choice of the truncation parameter requires a

balance between these two errors in order to make the overall error small. A closer look

at the two types of errors reveals that for k > k ∗ , the error E2 (k) increases dramatically

while E1 (k) remains under control due to the DPC, and the overall error should not be

minimized for k > k ∗ . Conversely, for k < k ∗ , E1 (k) increases regularly with k while E2 (k)

remains under control since the singular values σj dominate the coefficients |uTj e|; again

the overall error is not minimized. Therefore the error estimate should be minimized at

k = k ∗ . However, neither E1 (k) nor E2 (k) is available and k ∗ must be estimated by other

means. We propose to do this by minimizing the product

Ψk = krk k2 kxk k2 k ≥ 1,

(51)

P

where rk = b−Axk = nj=k+1 (uTj b)uj +b⊥ with b⊥ = (Im −UUT )b; this can be explained as

follows. Note from (48) that if k > k ∗ , the residual norm decreases approximately linearly

while the solution norm increases dramatically as 0 ≈ σk < |uTk e|. Thus Ψk cannot be

minimized for k > k ∗ . On the other hand, if k < k ∗ , the residual norm gets relatively

large since the coefficients |uTk b| are large, while the solution norm increases slowly with k.

Hence Ψk cannot be minimized for k < k ∗ . Thus, the sequence Ψk should be minimized

at k = k ∗ . We can thus conclude that a good choice of the truncation parameter for

TSVD is the minimizer of Ψk . Our experience is that this truncation criterion produces

a regularization parameter that very often coincides with the optimal parameter, the

minimizer of the relative error REk = kxexact − xk k2 /kxexact k2 , as seen in Fig. 4.

2

Ψk

E1(k)+E2(k)

10

REk

2

10

0

10

0

10

0

0

5

10

10

0

−2

5

10

10

0

5

10

Figure 4: Errors in xk , Ψk and relative errors for shaw test problem using the same data

as in with Fig. 1. The optimal relative error is 4.98% and reached at k ∗ = 7 = argmin Ψk .

The reader should compare this result with that of Tikhonov regularization, see Fig. 1

However, the SVD is infeasible for large-scale problems and the TSVD method may

not be of practical utility. As an alternative to TSVD for large-scale problems, we propose

to use LSQR coupled with a stopping rule defined similarly as the truncation criterion for

TSVD above. Therefore, our stopping rule for LSQR selects as stopping index the first

integer b

k satisfying :

b

k = argmin Ψk ,

Ψk = kb − Axk k2 kxk k2 .

(52)

The choice of the stopping rule can be supported as follows. First, the residual and

solution LSQR norms behave monotonically like the residual and solution TSVD norms,

i.e., while kb − Axk k2 decreases with k, kxk k2 increases, and second, the LSQR iterate xk

lives in the Krylov subspace Kk (AT A, AT b) which very often carries relevant information

14

on the dominant k right singular vectors of A [30], in which case xk approximates the k-th

TSVD solution well, and a similar observation applies to the sequences Ψk for TSVD and

LSQR, respectively, as we see in Figure 5.

Corner at k=7

4

10

Ψ (SVD)

L−Curve (LSQR)

Ψ (LSQR)

3

10

||xk||

RE (SVD)

k

k

2

10

RE (LSQR)

k

k

2

10

0

2

10

10

1

10

0

0

10

10

0

−2

5

10

10

0

5

10

||b−Axk||

Figure 5: L-curve, sequences Ψk associated with TSVD and LSQR for shaw test problem

with the same data of the previous figure and error histories of a few iterates.

From the practical point of view, our proposal for determining the stopping index b

k is

to evaluate the finite forward differences

∇Ψk = Ψk+1 − Ψk ,

k ≥ 1,

(53)

and then select the first index k for which ∇Ψk changes sign. More specifically, our

proposal is to select the first k such that ∇Ψk−1 ≤ 0 and ∇Ψk ≥ 0. The main feature of

this stopping rule is that only b

k+1 GKB steps are required to construct the approximation

xbk to the noise free solution of (1). All our numerical results to be presented in the sequel

are obtained with this stopping rule.

The following result bounds the error in the LSQR iterate xk as an approximation to

the k-th TSVD solution.

Theorem 4.1. Assume that the GKB algorithm runs to completion. Then the relative

distance between the LSQR and the TSVD solution can be bounded as

1

kxk − xk k2

≤

(Φk + γk ) ,

kxk k2

σk

(54)

where Φk = krk k2 /kxk k2 , and γk defined in the previous section.

For the proof of Theorem 4.1 we require a technical result.

Lemma 4.1. Let Ωk be the angle between the subspaces spanned by the k first right singular

vectors of A and the Krylov subspace Kk . Then sin Ωk ≤ γk /σk .

Proof: As in the proof of Theorem 2.2, we first assume m > n. In this case, after n GKB

steps matrix A can be decomposed as

A = Un+1 Bn+1 VnT ,

15

(55)

in which, for convenience of the proof, we write Un+1 = [Uk+1 U ⊥ ], Vn = [Vk V ⊥ ], and

k

k n −

Bk Ck

k+1

with Bk defined in (4). The other matrices, Ck , Dk ,

Bn+1 =

m−k−1

Dk F k

Ck

⊥

⊥

.

and Fk are clear from the context. Now by (55) we have AV = [Uk+1 U ]

Fk

Multiplying this equality with UTk and using the fact that UTk A = Σk VkT , where Uk

and Vk have k columns and

A, we get

of Σk contains the k largest singular values

Ck

T

⊥

Ck /σk (A),

.

Taking

norms

we

obtain

sin

Ω

≤

VkT V ⊥ = Σ−1

U

[U

U

]

k

k+1

k

k

Fk Fk

2

T ⊥

where we have used that kVk V k2 = sin Ωk , see, e.g., [34]. Thus, (4.1) follows because

the norm in this expression coincides with γk .

bn V T , with B

bn defined in (27), and the proof follows analogously.

If m = n then A = Un B

n

Proof of Theorem 4.1: Note that, based on (5)-(6), the LSQR iterate xk = Vk yk and

the associate residual rk = b − Axk satisfy

T

xk = Vk Bk† Uk+1

b,

T

Bk† Uk+1

rk = 0.

(56)

Then the error in xk with respect to xk can be written as

T

xk − xk = A†k b − Vk Bk† Uk+1

b

T

= (A†k − Vk Bk† Uk+1

)(rk + Axk )

T

= A†k rk + (A†k A − Vk Bk† Uk+1

A)xk .

But

A†k A = A†k Ak ,

T

T

Vk Bk† Uk+1

Axk = Vk Bk† Uk+1

AVk VkT xk = Vk Bk† Bk VkT xk = Vk VkT xk = xk ,

therefore

xk − xk = A†k rk − (In − A†k Ak )xk = A†k rk − (In − Pk )Pk xk .

Hence

kxk − xk k ≤ kA†k k2 krk k2 + k(In − Pk )Pk k2 kxk k2 ≤ kA†k k2 krk k2 + sin Ωk kxk k2 ,

where we have used the fact that k(In − Pk )Pk k2 = sin Ωk (see, Golub, [34, Theorem 2.6.1,

p. 76]). The assertion of the theorem follows on using Lemma (4.1).

Theorem 4.1 states that if the information contained in Kk (AT A, AT b) is rich enough

so that γk ≪ σk and if the sum γk + Φk is small compared to σk , then the LSQR iterate

xk will be close to the k-th TSVD solution. Theorem 4.1 can also be used to bound

the error in xk with respect to xexact by using the triangular inequality and bounds on

kxk − xexact k which require smoothness conditions on xexact . This is quite involved and is

not considered in the present paper.

We now turn to the stopping rule (52). Note that it is nothing more than a discrete

counterpart of the parameter choice rule (8) for µ = 1 which looks for a corner of the

16

continuous Tikhonov L-curve; hence, it should come as no surprise to see the minimizer

of Ψk closely related to a point on the discrete L-curve

(log kb − Axk k2 , log kxk k2 ),

k = 1, . . . , q,

(57)

located near the corner of the L-shaped region, see Fig. 5. Thus, the decision to stop

LSQR in connection with discrete ill-posed problems can, in principle, be also managed by

locating the corner of discrete L-curves for which several algorithms exist. Corner finding

methods include a method based on spline curve fitting by Hansen and O’Leary [3], the

triangle method by Castellanos et al. [37], a method by Rodriguez and Theis [38], and

an adaptive algorithm referred to as the “pruning algorithm” by Hansen et al. [20]. The

pruning algorithm advocates that to overcome difficulties of its predecessors, the corner

must be determined by evaluating the overall behavior of the L-curve. The effectiveness

of this approach and its capability to determine the “best” corner of discrete L-curves is

illustrated numerically in [20]. However, finding the corner using a limited sequence of

points is not an easy task, and the existing algorithms are not without difficulties, see,

e.g., Hansen [30, p. 190] for discussions on shortcomings, Hansen et al. [20] for a multiple

corner case, and Salehi Ravesh et al. [22] for an application of the L-curve criterion to

quantification of pulmonary microcirculation.

To illustrate the performance of the pruning algorithm in connection with LSQR

(with full reorthogonalization), we use gravity, heat, foxgood and shaw test problems

from [32], moler (with α = 0.5), lotkin, prolate and hilbert test problems from Matlab’s

“matrix gallery”. In all cases we consider coefficient matrices of size 1024 × 1024 and

distinct right-hand sides defined by b = Axexact + e, where e is generated by the Matlab

function randn with the state value set to 0 and with three distinct noise levels (NL)

NL = kek2 /kAxexact k2 = 10−4 , 10−3 , 10−2 . To ensure that the overall behavior of the

L-curve is contained in the data, we take q = 120 points. The corner of the L-curve,

denoted by kLC , is determined by using the Matlab code corner from [32], and the relative

error in xkLC is denoted by ELC . For comparison, the stopping index b

k determined by

minimizing Ψk , the relative error in xbk denoted by EΨ , optimal parameters and optimal

errors, denoted by kopt and Eopt , respectively, are also computed.

Average relative errors of 20 realizations as well as the minimum/maximum k determined by the pruning algorithm, the stopping rule (52) and the optimal one along the

realizations, are all displayed in Table 1.

Note that, except for the fact that the pruning algorithm failed solving moler and lotkin

test problems for NL = 10−4 and NL = 10−2 , respectively, the quality of the approximate

solutions produced by this algorithm and the stopping rule (52) remains comparable in

the other cases and other test problems. In particular, for the noise level NL = 10−4 , we

note that while the pruning algorithm produced approximate solutions of poor quality,

the solutions produced by the stopping rule (52) remained within tolerable bounds. The

pruning algorithm failed constructing reasonable approximate solutions because the corner

of the L-curve was not correctly identified several times, see Fig. 6 (left). Fig. 6 (center)

shows the discrete L-curve of the first realization with the corner determined by the

pruning algorithm (corresponding to kLC = 107) marked by (in red) and with the “true”

corner located at k = 19 marked by ◦ (in red). A similar observation applies for lotkin

test problem.

To learn more about the properties of the pruning algorithm, we investigated the

17

gravity

heat

foxgood

shaw

moler

lotkin

prolate

hilbert

gravity

heat

foxgood

shaw

moler

lotkin

prolate

hilbert

gravity

heat

foxgood

shaw

moler

lotkin

prolate

hilbert

kLC

13(19)

46(52)

4(5)

9(9)

18(108)

7(7)

20(22)

9(10)

kLC

11(15)

27(31)

3(4)

7(8)

9(10)

3(5)

17(17)

7(8)

kLC

7(11)

15(17)

2(2)

5(5)

4(4)

3(18)

10(13)

6(6)

b

k

11(14)

28(42)

5(5)

9(9)

19(21)

7(7)

10(12)

9(9)

NL = 10−4

kopt

ELC

11(13)

0.0522

28(31)

0.0902

4(4)

0.0096

9(9)

0.0325

9(11)

8.7458

7(9)

0.4384

9(13)

0.0223

10(12)

0.4358

EΨ

0.0109

0.0175

0.0119

0.0325

0.1283

0.4384

0.0002

0.4382

Eopt

0.0043

0.0125

0.0028

0.0325

0.0107

0.4317

0.0002

0.4258

b

k

10(11)

28(29)

3(4)

7(8)

9(10)

5(5)

12(16)

7(8)

NL = 10−3

kopt

ELC

9(11)

0.0368

20(22)

0.0774

3(3)

0.0201

7(9)

0.0514

7(8)

0.0496

5(7)

0.4478

6(11)

0.0260

7(9)

0.4396

EΨ

0.0224

0.0691

0.0201

0.0515

0.0654

0.4475

0.0145

0.4396

Eopt

0.0118

0.0222

0.0074

0.0439

0.0220

0.4445

0.0008

0.4391

b

k

7(8)

16(16)

2(2)

5(6)

4(4)

3(3)

7(12)

6(6)

NL = 10−2

kopt

ELC

6(8)

0.0454

14(16)

0.0674

2(3)

0.0311

6(8)

0.1094

5(6)

0.1885

3(5)

1.9 × 105

1(6)

0.0173

6(7)

0.4400

EΨ

0.0356

0.0674

0.0311

0.0660

0.1885

0.4522

0.0150

0.4400

Eopt

0.0266

0.0629

0.0217

0.0534

0.0788

0.4505

0.0071

0.4400

Table 1: Regularization parameters and relative errors of regularized solutions determined

by the pruning algorithm, the stopping rule (52) and the optimal one. The exact solution

for moler, lotkin, prolate and hilbert test problems were taken to be the solution of shaw

test problem from [32].

behavior of kLC as a function of the number of data points q being used. The results

for moler test problem with NL = 10−4 are displayed in Fig. 6 (right). We note that for

several values of q the corner index kLC stagnates at k = 21, which coincides with the

maximum minimizer of Ψk , and that for larger values of q this corner index falls far away

from k = 21. Corner indexes for heat and gravity test problems did not behave this way

and they are not reported here. The results suggest that the corner index kLC depends on

the number of points q, and that unfortunate choices of q might lead to wrong corners.

We shall return to this point later in connection with a deblurring problem.

18

Corner at k=107

150

kLC

150

kLC(q)

L−Curve

3

10

100

||xk||

100

2

50

10

50

0

0

0

10

0

20

10

0

50

100

||b−Axk||

Figure 6: Corner index determined by the pruning algorithm of 20 realizations for moler

test problem in connection with LSQR (left). L-curve of first realization (center); in this

case, the corner determined by pruning algorithm is marked by while the “true” corner

is marked by ◦. Corner index determined by pruning algorithm using q points with q

ranging from 30 until 120 (right).

4.2

P-LSQR

As commented earlier, our intention is to construct approximate solutions using a

version of LSQR that incorporates smoothing properties of the regularization matrix L

into the computed solution. We shall do this by applying LSQR to the least squares

problem min kĀx − b̄k with Ā and b̄ from (30), as suggested in [13], using (52) as stopping

rule. Our iterative regularization algorithm, which we denote by P-LSQR, can thus be

summarized as follows

• apply LSQR to kĀx− b̄k2 and compute the iterate x̄k using the stopping rule defined

in (52).

• once the stopping index is determined, take as approximate solution

xk = L†A x̄k + xN ,

with xN and L†A as in (31).

Obviously for P-LSQR to be computationally feasible, the dimension of N (L) must be

†

small and the products with L† and LT must be performed as efficiently as possible, i.e.,

all possibilities must be explored in order to reduce the computational cost, either through

fast matrix-vector products or through the use of preconditioners. However, the latter is

not considered in this paper.

5

Numerical examples

We give some examples to illustrate our methods. Two examples involve deblurring

problems and a third one involves a Super-resolution problem; as before, the data vector

is of the form b = Axexact + e, where e is generated by the Matlab code randn with the

state value set to 0, and NL = kek2 /kAxexact k2 is referred to as the the noise level. All

19

computations were carried out in Matlab. To simplify the notation, the extension of GKBFP based on the LU factorization of L is denoted by FP-LU and the LD -based approach

is denoted by FP-LD . Average values of regularization parameters, time and relative error

(k)

in xλ , are denoted by λ̄, t̄ and Ē, respectively, while the minimum and maximum number

of steps required by the algorithms are denoted by km and kM , respectively.

5.1

Deblurring problems

The goal in this case is to recover an image stored in a vector xexact ∈ RM N from a

blurred and noisy image b = bexact + e so that Axexact = bexact , where A plays the role of

blurring operator (often referred to as the PSF matrix), and bexact represents the blurred

image. For simplicity, we consider N × N images and use N 2 × N 2 PSF matrices defined

by A = (2πσ 2 )−1 T ⊗ T . In this case, σ controls the width of the Gaussian point spread

function and T is an N × N symmetric banded Toeplitz matrix with half-bandwidth

band [15]; in what follows we use σ = 2 and band = 16. The regularization matrix is

chosen as

L=

5.1.1

IN ⊗ L1

L1 ⊗ IN

,

L1 =

1

-1

1

-1

..

.

..

.

1

-1

∈ R(N −1)×N .

(58)

Rice test problem

Rice 64: The purpose here is two-fold: to illustrate that the methods proposed in this

work are less expensive than the joint bidiagonalization (JBD) algorithm by Kilmer et

al. [15] and, to learn more about the capabilities of the pruning algorithm to identify

the corner of discrete L-curves. We start with the observation that at the k-th step, the

JBD-based approach determines approximate solutions given by

(k)

xλ = argmin kb − Axk22 + λ2 kLxk22 ,

(59)

x∈Zk

where Zk is a Krylov subspace generated by the JBD algorithm applied to QA and QL ,

with QA ∈ Rm×n , QL ∈ Rp×n and Q = [QTA QTL ]T , where Q is from the QR factorization

b = [AT LT ]T . Each step of the JBD approach requires two matrix vector products of

of A

the form vb = QQT v, but the QR factorization is never computed; in practice one takes

b ,

vb = Au

LS

b 2.

with uLS = argmin kv − Auk

(60)

u∈Rn

This not only explains why the JBD-based approach is expensive but also shows that its

efficiency depends on the way uLS is computed. Two distinct ways were considered in this

paper: one uses LSQR as reported in [39], and the other one uses LSQR with subspace

preconditioning, as done in [12, 16]. We will report results obtained through the former,

which turned out to be the most efficient. To achieve our first goal we will use the JBD

algorithm with the FP method as parameter choice rule, which we call JBD-FP. JBDFP proceeds like PROJ-L in that for chosen p0 > 1 and for k ≥ p0 , the largest convex

fixed-point of φ(k) (λ) is computed and the process is repeated until a stopping criterion is

20

satisfied. Two distinct implementations of JBD-FP were considered: one implementation

denoted by JBD-FPL , which deals with problem (2) using the matrix L, and other denoted

by JBD-FPD , which deals with the equivalent problem (40). In this example we consider

a 64 × 64 subimage of the image rice which was used in [15] to illustrate a JBD-based

algorithm. Thus N = 64, A ∈ R4096×4096 and L ∈ R8064×4096 ; similarly as in [15], we

use data with 1% of white noise. Both GKB and JBD were implemented with complete

reorthogonalization; computation of fixed-points started with p0 = 10, and the iterations

stopped using a tolerance parameter ǫ = 10−6 .

For future comparison, regularization parameters determined by L-curve, a fixed-point

method, the stopping rule (52), optimal regularization parameters, and relative errors, are

all reported in Table 2. Regularization parameters for L-curve and Fixed-Point methods

were computed using the GSVD of the pair (A, R), where R ∈ R4095×4096 is from the

QR factorization of L. The optimal Tikhonov regularization parameter, defined as the

minimizer of kxλ − xexact k2 /kxexact k2 , is computed via exhaustive search. P-LSQR uses

Ā and b̄ from the explicit transformation approach implemented in std− form in [32]. As

it is apparent, see Figure 7, in this case the L-Curve has a well defined corner and φ(λ)

(with µ = 1) has a unique fixed-point that minimizes Ψ(λ). The true image, the blurred

and noisy image, and the images determined by L-Curve and fixed-point methods are all

displayed in Fig. 8.

λ

E

L-Curve

0.0783

8.03%

FP

0.0956

8.19%

optimal

0.0409

7.75%

P-LSQR

k = 48

8.31%

optimal

k = 83

7.82%

Table 2: Regularization parameters and relative errors.

Continuous L−Curve

Discrete L−Curve

1

10

φ(λ)

kLC(150)

10

10

10

log(Ψk)

3

0

10

−1

5

10

10

2

10

−2

2

10

10

−2

10

0

2

10

10

0

50

100

150

Figure 7: L-Curve, φ(λ), discrete L-curve and log(Ψk ) for rice test problem with N = 64.

The corner of the L-curve, the fixed-point of φ(λ), the “true” corner of the discrete L-curve,

and the minimizer of Ψk , are all marked by ◦. The corner determined by the pruning

algorithm of the first of 20 realizations using q = 150 points is marked by .

We now turn to the results obtained through JBD-FP and the methods proposed in

this paper. Average time of 20 realizations are Table 3. It becomes apparent that the

JBD-FP approaches are in fact more expensive than the methods proposed in this work,

and that the fastest one is P-LSQR followed by PROJ-L and FP-LD . Note that FP-LU

can be a good option. As far accuracy is concerned, all methods produced solutions with

relative error of approximately 8.2%; this is in accordance with the results obtained using

the GSVD of the pair (A, R) shown in Table 2.

21

Figure 8: True image (top left), blurred and noisy image (top right), reconstructed image

by LC (bottom left) and reconstructed image by FP (bottom right).

t̄

JBD-FPL

5.2206

JBD-FPD

4.2688

FP-LU

2.0084

FP-LD

0.8863

PROJ-L

0.2576

P-LSQR

0.2809

Table 3: Average time (in seconds) of 20 realizations.

Concerning the capability of the pruning algorithm to find the corner of discrete Lcurves, we arrive at the same conclusion as before: the corner index kLC can vary with

the number of points q of the discrete L-curve. Table 4 shows the corner index k and the

relative error in xk of the first realization for several values of q. The starting value of q

was chosen relatively close to the minimizer of Ψk , k = 48, to evaluate how the corner

index behaves in these cases. A false corner corresponding to q = 150 is displayed in

Fig. 7.

q

kLC (q)

Error

60

3

20.65%

80

3

20.65%

100

43

8.46%

120

43

8.46%

140

3

20.65%

160

3

20.65%

180

46

8.38%

200

43

8.46%

Table 4: Corner index kLC (q) selected by the pruning algorithm as a function of the

number of points q of the discrete L-curve (log kr̄k k2 , log kĀx − b̄k2 ), where r̄k and x̄k are

LSQR iterates of min kĀx − b̄k2 for rice test problem.

The conclusion we can drawn from the numerical experiments so far is that LSQR

coupled with the proposed stopping rule is cheaper than the pruning algorithm, that

the corner determined by the pruning algorithm depends on the number of points of the

discrete L-curve, and that for the tested problems our approach performs similarly as the

pruning algorithm when the latter works well. These conclusions explain why the pruning

algorithm will not be used in the following examples.

Rice 256: We now consider the 256 × 256 entire rice image. The PSF matrix A ∈

R65536×65536 , it has singular values decaying gradually to zero without any particular gap

(not shown here) and condition number κ(A) ≈ 3.40 × 1016 . The regularization matrix

22

L ∈ R130560×65336 . Only FP-LD , P-LSQR and PROJ-L are used. Average results of 20

realizations each with NL = 0.01 with and without complete reorthogonalization (labeled

as reorth = 1 and reorth = 0, respectively) are displayed in Table 5. Again, all methods

produced solutions with approximately the same quality regardless of whether reorthogonalization is used or not. However, in terms of speed, PROJ-L is superior. In this

example µ = 1 did not work in all runs and adjustments were needed. For details on such

an adjustment the reader is referred to [6].

λ̄

Ē

t̄

km (kM )

µ̄

FP-LD

0.0706

0.0820

7.9603

433 (512)

0.6519

P-LSQR

0.0851

4.3365

242 (268)

reorth = 0

PROJ-L

0.0874

0.0831

1.0652

28 (30)

1

FP-LD

0.0746

0.0812

54.6983

280 (290)

0.6635

P-LSQR

0.0845

15.9995

140 (141)

reorth = 1

PROJ-L

0.0874

0.0831

1.8808

28 (30)

1

Table 5: Results for entire rice test problem for NL = 0.01, p0 = 15 and ǫ = 10−6 .

5.1.2

Pirate test problem

In this example we consider a large image of size 512 × 512 called pirate, see Fig. 9.

Thus N = 512, the PSF matrix A ∈ R262144×262144 and the regularization matrix L ∈

R523264×262144 . As in the previous example, we report average results of 20 realizations

using FP-LD , P-LSQR and PROJ-L with and without complete reorthogonalization. Numerical results for the noise level NL = 0.01 are shown in Table 6. In this case, the

relative error in the computed solutions is approximately 14.7% and once more the fastest

algorithm is PROJ-L. Visual results of this experiment can be seen in figure 9. For this

test problem, the choice µ = 1 works satisfactorily in all runs.

λ̄

Ē

t̄

km (kM )

FP-LD

0.1563

0.1479

64.5863

516 (572)

P-LSQR

0.1483

36.4999

309 (335)

reorth = 0

PROJ-L

0.1491

0.1463

12.5072

39 (39)

FP-LD

0.1577

0.1472

426.0727

282 (282)

P-LSQR

0.1477

156.7809

163 (163)

reorth = 1

PROJ-L

0.1492

0.1462

17.6866

39 (39)

Table 6: Results for Pirate test problem with NL = 0.01, p0 = 20 and ǫ = 10−6 .

5.2

Super-resolution

High-resolution (HR) images are important in a number of areas such as medical

imaging and video surveillance. However, due to hardware limitations and cost of image

acquisition systems, Low-resolution (LR) images are often available. We consider the

problem of estimating an HR image form observed multiple LR images. Let the original

HR image of size M = M1 × M2 in vector form be denoted by x ∈ RM , and let the k-th

LR image of size N = N1 × N2 in vector form be denoted by bk ∈ RN , k = 1, 2, . . . , q,

23

Figure 9: True image, LR noisy image, and restored image by PROJ-L.

with M1 = N1 × D1 , M2 = N2 × D2 , where D1 and D2 represent down-sampling factors

for the horizontal and vertical directions, respectively. Assuming that the acquisition

process of the LR sequence involves blurring, motion, subsampling and additive noise, an

observation model that relates x to bk is written as [40]

b k = Ak x + ǫ k

(61)

where Ak is N × M , and ǫk stands for noise. The goal is to estimate the HR image x from

all LR images bk . In this case Tikhonov regularization takes the form

xλ = arg min {kb − Axk22 + λ2 kLxk22 }

(62)

x∈RM

where b = [bT1 . . . bTq ]T , A = [AT1 . . . ATq ]T , and L is a discrete 2D differential operator.

In this example we estimate the 96 × 96 image tree from a sequence of five noisy LR

images with D1 = D2 = 2. Therefore A ∈ R11520×9216 and L ∈ R18240×9216 . As both A and

the regularization matrices are not too large, the JBD-FP approaches are used again and

all implemented with complete reorthogonalization. Average results of 20 realizations are

shown in Table 7. We note again that PROJ-L is superior. For this example the choice

µ = 1 worked satisfactorily in all cases. The original HR image and two noisy LR images

are depicted in the first row of Fig. 10. One of the restored images determined by PROJ-L

is depicted in the second row of the same figure. Also, to illustrate the performance of

λ̄

Ē

t̄

km (kM )

FP-LU

0.0309

0.0496

16.4869

281 (284)

FP-LD

0.0309

0.0496

9.1734

281 (284)

P-LSQR

0.0502

3.2403

157 (160)

PROJ-L

0.0300

0.0535

0.8753

47 (57)

JBD-FP

0.0309

0.0497

21.6653

112 (114)

JBD-FP

0.0309

0.0497

25.1221

112 (114)

Table 7: Results for super-resolution problem for NL = 0.01, ǫ = 10−6 , and p0 = 15.

the algorithms using distinct regularization matrices, we consider the cases L = I and

L2,2D =

IN ⊗ L2

L2 ⊗ IN

,

L2 =

-1

2

..

.

-1

..

.

-1

..

.

2

-1

(N −2)×N

.

∈R

In this case we obtained solutions with relative error of approximately 28.80% and 4.95%,

respectively. That is, while the quality of the solutions for the case L = I deteriorate

24

Figure 10: First row: HR image (left) and two LR noisy images. Second row: Restored

image with L = I determined by GKB-FP (left), restored image with L from (58) determined by PROJ-L (center), and restored image with L2,2D determined by PROJ-L (right).

significantly, the quality of the solutions using L2,2D remain practically the same as that

obtained using L from (58). Visual results are depicted in Fig. 10.

We end the numerical results section with the observation that we also performed

numerical experiments involving the three above deblurring test problems using data

with distinct noise levels. The results showed that the extensions of GKB-FP perform

similarly as the original version of the algorithm, and are not show here.

6

Conclusions

We reviewed the GKB-FP algorithm and showed how to extend it to large-scale general form Tikhonov regularization. As a result, three distinct approaches that do not require estimates of the noise level were proposed and numerically illustrated on large-scale

deblurring problems. Numerical results for representative test problems using data with

realistic noise levels (e.g., 0.1% and 0.01%) showed that in term of accuracy and efficiency,

the extended versions of GKB-FP perform satisfactorily in as much the same way as the

original version of the algorithm, and are therefore competitive and attractive for largescale general-form Tikhonov regularization. In addition, to overcome possible difficulties

in GKB-FP when selecting the Tikhonov regularization parameter at each iteration, we

proposed a stopping rule for LSQR, and showed numerically that the smoothed preconditioned LSQR algorithm (P-LSQR) coupled with the new rule can be a good alternative

to large-scale general-form Tikhonov regularization.

Acknowledgments

The authors are grateful to the reviewers for their valuable comments which have

significantly improved the presentation of this work.

25

References

[1] Tikhonov AN. Solution of incorrectly formulated problems and the regularization

method. Soviet Mathematics Doklady 1963; 4:1035-1038.

[2] Morozov VA. Regularization Methods for Solving Incorrectly Posed Problems.

Springer: New York, 1984.

[3] Hansen PC, O’Leary DP. The use of the L-curve in the regularization of discrete

ill-posed problems. SIAM Journal on Scientific Computing 1993; 14:1487-1503.

[4] Golub GH, Heath M, Wahba G. Generalized cross-validation as a method for choosing

a good ridge parameter. Technometrics 1979; 21:215-222.

[5] Regińska T. A regularization parameter in discrete ill-posed problems. SIAM Journal

on Scientific Computing 1996; 3:740-749.

[6] Bazán FSV. Fixed-point iterations in determining the Tikhonov regularization parameter. Inverse Problems 2008; 24: DOI:10.1088/0266-5611/24/3/035001.

[7] Engl HW, Hanke M, Neubauer A. Regularization of Inverse Problems. Kluwer: Dordrecht, 1996.

[8] Bakushinski AB. Remarks on choosing a regularization parameter using quasioptimality and ratio criterion. USSR Computational Mathematics and Mathematical

Physics 1984; 24:181-182.

[9] Kindermann S. Convergence analysis of minimization-based noise level-free parameter choice rules for linear ill-posed problems. Electronic Transactions on Numerical

Analysis 2011; 38:233-257.

[10] Bauer F, Lukas MA. Comparing parameter choice methods for regularization of illposed problems. Mathematics and Computers in Simulation 2011; 81:1795-1841.

[11] Reichel L, Rodriguez G. Old and new parameter choice rules for discrete ill-posed

problems. Numerical Algorithms 2012; DOI 10.1007/s11075-012-9612-8.

[12] Bunse-Gerstner A, Guerra-Ones V, Madrid de la Vega H. An improved preconditioned LSQR for discrete ill-posed problem. Mathematics and Computers in Simulation 2006; 73:65-75.

[13] Hanke M, Hansen PC. Regularization methods for large-scale problems. Surveys on

Mathematics for Industry 1993; 3:253-315.

[14] Hansen PC, T. K. Jensen TK. Smoothing-Norm Preconditioning for Regularizing

Minimum-Residual Methods. SIAM Journal on Matrix Analysis and Applications

2006; 29:1-14.

[15] Kilmer ME, Hansen PC, Español MI. A projection-based approach to general-form

Tikhonov regularization. SIAM Journal on Scientific Computing 2007; 29:315-330.

26

[16] Jacobsen M, Hansen PC, Saunders MA. Subspace preconditioned LSQR for discrete

ill-posed problems. BIT 2003; 43:975-989.

[17] Paige CC, Saunders MA. LSQR: An algorithm for sparse linear equations and sparse

least squares. ACM Transactions on Mathematical Software 1982; 8:43-71.

[18] Saad Y, Schultz MH. GMRES: A generalized minimal residual algorithm for solving

nonsymmetric linear systems. SIAM Journal on Scientific and Statistical Computing

1986; 7:856-869.

[19] Neuman A, Reichel L, Sadok H. Implementations of range restricted iterative methods for linear discrete ill-posed problems. Linear Algebra and its Applications 2012;

436:3974-3990.

[20] Hansen PC, Jensen TK, Rodriguez G. An adaptive pruning algorithm for the discrete

L-curve criterion. Journal of Computational and Applied Mathematics 2007; 198:483492.

[21] Morigi S, Reichel L, Sgallari F, Zama F. Iterative methods for ill-posed problems and

semiconvergent sequences. Journal of Computational and Applied Mathematics 2006;

193:157-167.

[22] Salehi Ravesh M, Brix G, Laun FB, Kuder TA, Puderbach M, Ley-Zaporozhan J,

Ley S, Fieselmann A, Herrmann MF, Schranz W, Semmler W, Risse F. Quantification of pulmonary microcirculation by dynamic contrast-enhanced magnetic resonance imaging: Comparison of four regularization methods. Magnetic Resonance in

Medicine 2012; DOI: 10.1002/mrm.24220.

[23] Chung J, Nagy JG, O’Leary DP. A Weighted-GCV Method for Lanczos-Hybrid Regularization. Electronic Transaction on Numerical Analysis 2008; 28:149-167.

[24] Bazán FSV, Borges LS. GKB-FP:an algorithm for large-scale discrete ill-posed problems. BIT 2010; 50:481-507.

[25] Lampe J, Reichel L, Voss H. Large-Scale Tikhonov Regularization via Reduction by

Orthogonal Projection. Linear Algebra and its Applications 2012; 436:2845-2865.

[26] Reichel L, Sgallari F, Ye Q. Tikhonov regularization based on generalized Krylov

subspace methods. Applied Numerical Mathematics 2012; 62:1215-1228.

[27] Hochstenback ME, Reichel L. An Iterative Method For Tikhonov Regularization

With a General Linear Regularization Operator. Journal of Integral Equations and

Applications 2010; 22:463-480.

[28] Eldén L. A weighted pseudoinverse, generalized singular values, and constrained least

square problems. BIT 1982; 22:487-502.

[29] Golub GH, Kahan W. Calculating the singular values and pseudo-inverse of a matrix.

Journal of the Society for Industrial and Applied Mathematics Series B Numerical

Analysis 1965; 2:205-224.

27

[30] Hansen PC. Rank-deficient and discrete ill-posed problems. SIAM: Philadelphia, 1998.

[31] Kirsch A. An Introduction to the Mathematical Theory of Inverse Problems. Springer:

New York, 1996.

[32] Hansen PC. Regularization Tools: A MATLAB package for analysis and solution of

discrete ill-posed problems. Numerical Algorithms 1994; 6:1-35.

[33] Bazán FSV, Francisco JB. An improved Fixed-point algorithm for determining the

Tikhonov regularization parameter. Inverse Problems 2009; 25: DOI:10.1088/02665611/25/4/045007.

[34] Golub GH, Van Loan CF. Matrix Computations. The Johns Hopkins University Press:

Baltimore, 1996.

[35] Paige CC, Saunders MA. Solution of sparse indefinite systems of linear equations.

SIAM Journal on Numerical Analysis 1975; 12:617-629.

[36] Hansen PC. The Discrete Picard Condition For Discrete Ill-Posed Problems. BIT

1990; 30:658-672.

[37] Castellanos JJ, Gómez S, Guerra V. The triangle method for finding the corner of

the L-curve. Applied Numerical Mathematics 2002; 43:359-373.

[38] Rodriguez G, Theis D. An algorithm for estimating the optimal regularization parameter by the L-curve. Rendiconti di Matematica 2005; 25:69-84.

[39] Saunders MA. Computing projections with LSQR. BIT 1997; 37:96-104.

[40] Park SC, Park MK, Kang MG. Super-resolution image reconstruction: a technical

overview. IEEE Signal Processing Magazine 2003; 20:21-36.

28