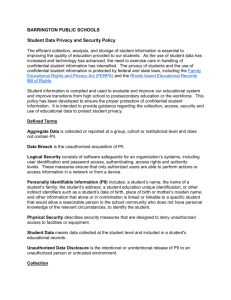

The PII Problem: Privacy and a New Concept of Personally Identifiable Information
