High Performance Logging System for Embedded UNIX and GNU

advertisement

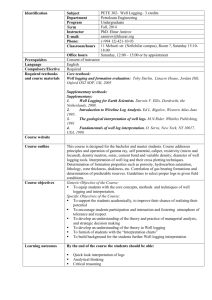

High Performance Logging System for Embedded UNIX and GNU/Linux Applications Jaein Jeong Cisco Systems San Jose, California 95134, USA jajeong@cisco.com Abstract—We present a high performance logging system for embedded UNIX and GNU/Linux applications. Compared to the standard UNIX and GNU/Linux logging method, syslog, our method has two orders of magnitude lower latency and an order of magnitude higher message throughput. This speed-up is mainly due to the use of a memory-mapped file as the means of interprocess communication, fewer memory copies and the batching of output messages in the logging daemon. In addition, our logging system also accepts syslog messages, providing compatibility with existing applications. Our logging system is in production use in the Cisco UCS Virtual Interface Card. I. I NTRODUCTION Logging is a helpful tool for analyzing the behavior of computer systems and applications. By analyzing a sequence of events in a log, one can see whether computer systems or applications behave properly. In production environments logs are even more important and may be the only way to analyze system problems. In this paper, we focus on logging systems for embedded UNIX applications (we will use UNIX to refer to all UNIX-like systems including GNU/Linux). Traditional UNIX applications log messages by writing them over a socket to a system daemon which then writes the messages to log files and/or forwards them to peers running on remote hosts. The system daemon is syslogd and the application library interface is syslog. There are two performance problems with this design. The first is the time it takes for an application to transmit a message to syslogd. The second is the inefficiency of syslogd writing messages to a file one at a time using unbuffered writes. These unbuffered writes are not a big problem on a conventional file system using a hard disk (the file system buffer cache reduces disk I/O and hard drives are fast compared to flash memory), but are very slow when writing to flash memory using a flash memory file system. The first of these problems increases application response times when there are many messages to log. The second reduces the number of messages that can be logged per second by at least an order of magnitude, and also increases the application response time as no new messages may be transmitted to syslogd while it is writing the previous message. In order to address these performance problems, we developed a logging method that uses shared memory to transfer messages to the system logging daemon and briefly buffers received messages before writing them to permanent storage. In our logging method, application processes create log messages by writing into a shared memory segment. A write lock is used to ensure applications don’t conflict with one another as they write into the shared memory. A logging daemon retrieves messages from the shared memory segment and dispatches them to one or more destinations, such as permanent local storage, a remote syslogd daemon, or a dynamically connectable network client. We note that our logging method drops incoming messages when the shared memory becomes full due to a burst of incoming messages. This trade-off was made to meet real-time requirements for applications. After a message is written to the shared memory, the logging daemon must be notified. This is done by writing to a pipe. The logging daemon waits on the receiving end of the pipe and wakes up when data (i.e. a message notification) is available. Event-driven applications may defer notification until their event handler completes. This has two positive effects, the most important of which is reducing the notification overhead from once per message to once per handler invocation. It also defers the cost of notification until the handler has completed its work. Finally, when the logging daemon is running it sets a flag in the shared memory area indicating that (since it’s already running) it doesn’t need to be notified. As the logging daemon processes each message, those that go to a network destination (either a remote syslogd or a dynamic log viewer) are sent to the destination immediately. Those to be stored locally are formatted into their final text representation and written via a pipe to a buffering process, flashlogger. After the first message that arrives when its buffer is empty, flashlogger waits for up to 0.5 seconds for additional messages. After the wait period or sooner if its buffer fills up, flashlogger writes its buffered messages to flash and resumes waiting for additional messages. This buffering reduces the number of writes to local storage and enables a much higher overall throughput than obtained by writing each message to storage individually. In addition to forwarding to remote syslogd processes, the logging daemon also accepts syslog messages from local (only) applications. This provides full compatibility for existing applications. Messages received via the syslog interface are dispatched the same way (i.e. according to their priority and possibly source) as messages received via the shared memory segment. In our embedded environment with flash storage, our log- 2 ging system is almost two orders of magnitude faster for an application than syslog. This is because logging a message only involves user-space memory copying without a context switch. The maximum message throughput (how many can be saved per second before having to drop or throttle messages) with the buffering is over an order of magnitude greater. The rest of this paper is organized as follows. In Sections II and III, we review previous work and the evolution of logging systems on which our work is based. We describe the design principles and the implementation details for our logging method in Sections IV and V. We evaluate the performance in Section VI, describe a further optimization in Section VII and conclude this paper in Section VIII. II. R ELATED W ORK Introduced in BSD in the early 1980’s, syslog [1], [2] is still the most notable logging method for UNIX applications due to its simplicity, flexibility and public availability. This is exemplified by a number applications that use or extend it [3]– [9]. In order to provide more features, a number of extensions of syslog have been implemented. Syslog-ng [10] and rsyslog [11] are two of the most popular ones [12]–[14]. Syslog-ng is an extension of syslog that was created to support the original BSD syslog protocol [1] from another syslog extension, nsyslogd [15]. Its goals are to provide additional features such as reliable transport, encryption, and richer set of information and filtering. Over time, it has evolved to support the latest syslog protocol [2] and is used by several UNIX distributions. Rsyslog [11] is another wellknown extension of syslog and is being used in many of latest Linux distributions. Rsyslog extends syslog not only in features but also in performance with a multi-threaded implementation. By using threads it avoids the problem of blocking the acceptance of new messages while writing previously received messages. However, these existing systems are not designed for embedded/flash logging and they use the same fundamental design in their message handling and thus have the same limitations when used for embedded flash logging as mentioned in Section I: (i) slow message passing due to message copying over the kernel to user space boundary, and (ii) unbuffered writes of messages to output devices. In the next section, we describe our shared-memory logging methodology and shows how it addresses the performance problems of existing logging systems. III. C ISCO UCS V IRTUAL I NTERFACE C ARD AND E VOLUTION OF L OGGING S YSTEM In this section, we introduce the Cisco UCS Virtual Interface Card and explain how its logging system evolved based on design requirements and experience using it. A. Cisco UCS Virtual Interface Card Cisco Unified Computing System (UCS) [16] is a datacenter server system that enables large deployments of virtualized servers with scalable performance through hardwareaccelerated I/O virtualization. Cisco UCS mainly consists of the following components: servers, virtualization interface cards and switches. A UCS server provides computation capability, and it can be used for a computation-intensive single server or a number of virtualized servers. A UCS virtual interface card [17], or VIC, is a high-speed network interface card for a UCS server, and one of its key features is support of virtual network interfaces (VNICs). To the server, a VNIC is seen as a separate device with a unique PCI bus ID and separate resources. To the network, it is seen as a network frame with a special tag, called VN-Tag. Thus, it is treated by a server in the same way as other PCI devices and does not cause any overhead for translation. A VIC is implemented by an ASIC that consists of a management processor, two fiberchannel processors, VNIC resources and resource mappings. The management processor, which the firmware runs on and is the target of our logging system, has a 500MHz MIPS 24Kc processor core and runs Linux kernel 2.6.23-rc5. B. Evolution of VIC Logging System • • • logd - A Simple Logging Daemon: We initially wrote logd, a simple logging daemon that accepts messages with different severity levels from multiple processes through IPC calls, formats the message and writes the formatted output message to flash storage using stdio functions. Although it is very simple, logd achieved its goals with reasonable performance. Since writes through stdio functions are buffered, logd was able to write messages reasonably quickly most of the time. Unbuffered syslogd: logd served its purpose well enough at first, but there came a need to support forwarding serious errors to syslogd on the UCS switches. Instead of extending logd with such a feature, we decided to use syslogd, which of course already has the feature. Using syslogd achieved the immediate goal but its write performance was much worse because syslogd writes log messages without buffering them. Buffered syslogd: In order to address the write performance problem of syslogd we introduced a buffering process, called flashlogger, between syslogd and the flash storage. flashlogger has an adjustable wait time (currently half a second) to collect messages before it writes out what it has collected. However, if its buffer fills up before the wait time has passed, it goes ahead and writes out the buffer immediately. This combination of syslogd and flashlogger was used for the logging system for VIC until we developed the new logging system that is introduced in this paper. IV. D ESIGN R EQUIREMENTS A. Faster Message Transfer A representation of syslogd is shown in Figure 1(a). The round-trip time between a user application process and the syslogd process is typically around 700us on average and has been observed as high as 13,000us under heavy load. This is not a small number for a modern computing system. The reason why accessing syslogd takes a long time is because 3 Switch Switch Switch Switch System Process System Process App log Process … syslogd App log Process System Process … Flash Flash Logger syslog App Process log … mqlogd System Process dequeue q enqueue App Process log JFFS2 USER System Process App log P Process … App log g Process KERNEL System Process Flash Memory Mapped File Flash Logger JFFS2 USER Buffer Named pipe Named pipe KERNEL (a) Typical UNIX Logging (b) Shared Memory Logging Fig. 1. Data Flow of Log Messages it requires passing data between user and kernel spaces and context switches between the application and syslogd. In order to address the long round-trip problem of syslogd, we designed a logging scheme that does not require message transfer between user and kernel spaces in its critical data path, as illustrated in Figure 1(b). With this scheme, an application or system process uses the same logging API to log a message. The message is copied to shared memory by the log functions and forwarded to the flash memory or a remote host by a message collector mqlogd. Since the shared memory is allocated in user space, message transfer can be very fast once the shared memory section is initialized. Thus, a user or system application is finished logging right after it writes a message to the shared memory instead of waiting for the reply from the message collector, and this improves latency. The faster message transfer of the shared memory logging is also attributable to the number of times a message is copied in memory, which is a significant factor for message transfer time. With syslogd, a message is copied four times in memory: first, it is formatted into a user buffer; second, it is written from the user buffer to a kernel socket; third, it is read from the kernel socket to a syslogd user buffer; and fourth, it is written from the syslogd user buffer to a file. With the shared memory logging, the number reduces to two. A process that logs a message formats the message into the shared memory. Since the logging daemon accesses the shared memory, the first three message copies in syslogd are reduced to a single message copy. B. Compatibility with Existing Logging Applications VIC applications use the following logging macros: log_info, log_debug, log_warn, and log_error. These macros format input strings and previously made syslog calls. Applications that use these logging macros were converted to shared-memory-based logging without modification of application code by changing the syslog calls to message copies to the shared memory. However, there are some applications that directly call syslog without using the logging macros. Therefore the logging daemon of our logging system also accepts messages intended for syslogd. This allows existing applications that use syslogd to be used with our logging system without modifications. One such application is klogd. C. Output Flash File System The syslogd program for VIC used the flashlogger program to buffer messages, and our logging system takes the same approach. D. Support of Destination-Aware Message Formatting One of the advantages of syslogd is that it allows log messages to be sent to multiple destinations of different types, where a destination may be a simple file, a named pipe or a socket on a remote host. However, there is a drawback. syslogd uses the same data representation shown in Figure 2 for all destinations. This representation has two problems. The first problem is inefficiency. The string representation of date and time requires more space than a binary or simpler text representation. In addition, the host name is redundant for flash logs because all of the events that are stored in the flash memory are local and are from the same host. The second problem is loss of precision. The message format specifies the time of a log message at a granularity of seconds, while the system supports timing in milliseconds. Logging data is more useful with more precise timings. Our logging system formats messages differently depending on the destination. For remote forwarding, it outputs log messages in the same way as the current Cisco version of syslogd does for compatibility. For writing to flashlogger, it changes the message format to use a higher precision timestamp while omitting the hostname. 4 Format: DateStr TimeStr Host app.module[pid] : Message Example: Mar 9 15:53:23 simserv01 log.logtest[16665]: This is a message Fig. 2. Message format for syslogd Header Entry (48B in size) CLIENT logperf Client Utility (an example) CLIENT log Receives API call Data Transfer mq Shared Memory Management Queue Management logger UDP Unix Socket SERVER mqlogd Outputs Log Data Transfer mq Shared Memory Management Queue Management Message Formatting Named Pipe UDP IP Socket TCP IP Socket OUTPUT UTILITY OUTPUT UTILITY OUTPUT UTILITY Flash l logger Fig. 3. Local Logging syslogd Remote Forwarding netcat Tail (4B) Client Utility (an example) syslog() system call Memory Mapped File Flag Unused Head (1B) (3B) (4B) Unused Prog Name (16B) Logging ID (16B) Data Entry (48B in size) Log Lvl (2B) Msg Length (2B) TimePID stamp (4B) (8B) Data Entry only with Msg String (48B in size) Msg String (48B) Dynamic Remote Forwarding Program Modules for the mqlogd Logging Service Reader Mutex PID (20B) (4B) Queue Memory Layout Header Entry (48B) V. I MPLEMENTATION A. Platform B. Modular Description Our logging method consists of a core library, server modules, client modules and output utilities as illustrated in Figure 3. The module mq, which is in charge of managing the memory-mapped file, the circular queue, and message formatting, is made as a library that can be used by both a logging daemon and clients. The logging daemon, mqlogd, accepts logging messages either through the shared memory or a UDP UNIX socket, and it outputs messages through the named pipe or a UDP IP socket. The client modules receive logging requests in a printf-like format and insert them into the shared memory. As for the client utilities, we consider two different kinds depending on how they submit logging requests: one uses shared memory and the other uses the syslog library call and UDP UNIX socket. Output utilities are connected to the server through a named pipe, a UDP IP socket, or a TCP IP socket. The named pipe interface is for a program such as flashlogger that stores logging messages locally. The UDP IP socket interface is to forward messages to the syslogd process on the switch. The server also supports dynamic remote forwarding through a TCP IP socket interface. This interface is different from the UDP IP socket interface in that it dynamically maintains forwarding destinations to a client such as netcat on a remote host. 0 Non-Header Entry (48B) 1 * BlockSize Non-Header Entry (48B) 2 * BlockSize … While our logging method can be used for any UNIX system that does logging, it is especially designed for the embedded firmware for the Cisco UCS Virtual Interface Card [17] where low-latency logging is desirable and the storage medium log files are written to is slow. Offset Non-Header Entry (48B) Fig. 4. (N-1) * BlockSize Memory Layout of the Circular Queue C. Shared Memory and Circular Queue Management 1) Data Representation: The shared memory is used as an array of fixed-sized blocks that are managed as a circular queue as illustrated in Figure 4. The first block in the memorymapped file is used as the header entry, which contains all the bookkeeping information for the circular queue and the logging service. The rest of the blocks are data entries, which contain the information for each logging message. Just after each message header, the message uses as many blocks as it needs for the message string. The length of the message string is specified in the message length field in the first data entry. Since data entries are consecutive, we can handle multiple segments of message string fields like a single field by pointing to the starting address of the first message string field. However, there is an exception to this. When a sequence of data entries roll around the end of the memory-mapped file, we cannot treat the message string as consecutive memory. In that case, the message string must be handled as two pieces: the first one is from the beginning of the message string and to the end of the memory-mapped file, the second one is from the beginning of the memory-mapped file and the location where 5 the last data entry ends. Thus, a message string can be handled as one or two consecutive byte arrays. 2) Notification Mechanism: The queue management module notifies the logging daemon when a client writes a logging message in the queue or when the queue is full. It implements two different methods for client-to-server notification: one using write-and-select (the default) and the other with a signal. Each time a client writes a logging message to the memorymapped file, it checks whether it should notify the logging daemon. If the daemon sets the notify flag in the header entry, the client does not send a notification. If the daemon clears the notify flag, the client sends a data-available command, which makes the daemon retrieve all the logging messages in the queue. This notification batching mechanism suppresses redundant notifications and helps improve the latency and throughput for message passing and dispatch. The client also sends a queue-full notice when it detects that the queue is full. It then drops the message. This makes the daemon output a queue-full message to the destinations in order to mark that there was a drop. 3) Locking Mechanism: The queue management module uses a locking mechanism in order to maintain the consistency of the circular queue. It implements two different mechanisms: semaphore lock and pthread mutex lock. The semaphore lock is a set of lock functions based on the Linux semaphore API. While it is not really fast due to a kernel access for each lock or unlock operation, it is widely supported. The pthread mutex lock uses the Linux pthread API. While it is faster than the semaphore lock, it is unreliable for inter-process use in our current version of Linux. Thus, the deployed queue management module uses the semaphore lock. D. Client Logging Interface Client applications in the original logging system first initialized application-wide logging objects before they called one of the logging macros (log_info, log_debug, log_warn, and log_error). This two-step approach fits well to the shared memory based logging and allowed existing applications to be converted to the new logging without modification of application code. In order to handle syslog messages the logging daemon waits on the UNIX socket at /dev/log, adding received messages to the circular queue. This functionality provides the same application interface as syslog, allowing clients for syslog to be used with the shared memory logging without any modification. port for syslogd, this makes the syglogd processes on the switches receive the message. In order to support dynamic remote forwarding, the logging daemon listens to a TCP port. When a client on a remote host makes a TCP connection to this port, the daemon adds the file descriptor of the TCP connection to a pool of active TCP connections. For each incoming logging message, the daemon formats the message and sends it to clients in the pool. When a remote client disconnects the TCP connection, any attempt to send a message to the client generates a file error. The daemon detects this as an inactive TCP connection and removes the file descriptor from the pool. The dynamic remote forwarding may look similar to the UDP port forwarding, but these two methods are designed for different purposes. The UDP port forwarding sends high-priority messages to the switches and is always enabled. The TCP dynamic remote forwarding, which is designed for easy debugging, sends messages of all priority levels and is enabled only on explicit requests to avoid unnecessary overhead. VI. E VALUATION A. Experiment Set-up 1) Metrics: For evaluation, we measured the performance of our shared memory-based service (mqlogd) and syslogd for two metrics – request latency and drop rate: • • 2) Parameters: For performance comparison of mqlogd and syslogd, we varied the number of clients and number of iterations for both logging services: • E. Server Output Interface After receiving each logging message, the logging daemon formats it and outputs the formatted message to the internal flash storage logger. For a logging message with a high priority level (i.e. error or warn), the logging daemon also outputs the formatted message to a UDP socket on remote hosts. By default, the daemon sends the formatted message string to UDP port 514 of the two switches to which a UCS Virtual Interface Card is attached. Since UDP port 514 is a designated Request Latency: We measured statistics (average, minimum, and maximum) for the request latency by repeating a logging message multiple times for each set of parameters. Among these statistics, average and maximum request time are more interesting because they show the expected and worst-case service times. Request Drop Rate: We also measured the request drop rate. syslogd has a zero drop rate at the sacrifice of latency, which is an order of magnitude higher. In contrast, the mqlogd logging service drops requests under high load. By measuring the request drop rate for various sets of parameters, we will show the conditions where the mqlogd logging service achieves lower request latency while achieving a zero or acceptably low drop rate. • Number of clients - 1 and 2: Changing the number of clients gives the lower bound for performance (a single client) and shows system performance as contention increases (two clients). No more than 2 clients were measured because most of the time, there is only one client logging messages. Number of iterations - 100, 1000, 5000, 10000, and 50000: Currently, the queue size of the system is set to 10240 items. In this test, we see how our logging service module behaves with different numbers of requests. For all of the measured configurations, five iterations were run. 6 Average Request Latency − 1 Client For evaluating the most suitable design out of a few design variations, we measured the performance while varying the following parameters – locking mechanism and notification mechanism: • Locking Mechanism - semaphore lock and pthread mutex lock: The purpose of this test is to see how the semaphore lock performs compared to the pthread mutex lock and how it performs when we change various parameters. Notification Mechanism - signal vs. write-and-select: While both signals and write-and-select are legitimate ways of notifying the server, signals may have side effects when used with the VIC library code. Thus, the purpose of this test is to see how write-and-select performs relative to signal, which has a smaller latency. B. Performance Results As for the average request latency, mqlogd showed more than 10-times speed-up for all configurations compared to syslogd. 1) Effects of Notification and Lock Mechanisms: For notification mechanisms, the measurements showed little difference between the implementation with write-and-select and the one with signal. In addition, signals cause problems with VIC library. This made us use write-and-select. For lock mechanisms, the implementation with the pthread mutex lock was about 40% faster than the one with the semaphore lock. This implies that we can easily get an additional 40% improvement by replacing calls to the semaphore lock with the pthread mutex lock when a working version is available. As for minimum request latency, mqlogd showed more than 20-times speed-up for all its configurations: 25times speed-up for semaphore lock implementations and 40times speed-up for pthread mutex lock implementations compared to syslogd. As for maximum request latency, mqlogd showed more than a 2x speed-up for all its configurations: by a factor of 2.3 for semaphore lock implementations and 4.0 for pthread mutex lock implementations compared to syslogd. 2) Effects of Queue Size: While syslogd did not drop requests, mqlogd dropped logging messages for a burst longer than or equal to 10000 (the queue size), and this became more severe as there were more requests. We can see that we need to have the queue size larger than the maximum expected size of a burst to avoid drops. 3) Effects of Multiple Clients: We measured the request latency and the request drop rate for both one and two clients. As for the average request latency, we have observed that having two clients makes about a factor of two increase. This ratio gets higher when the number of requests is larger than the size of queue as in the case of 50000 iterations. This shows that setting the queue size is important to have the performance bounded unsurprisingly. We notice that with two clients the request starts to drop with with smaller number of iterations than the case with a single client. syslogd mqlogd (select, semaphore) mqlogd (signal, semaphore) mqlogd (select, pthread) mqlogd(signal, pthread) 1000 800 Latency (us) • 1200 600 400 200 0 100 1000 5000 10000 Number of Iterations 50000 # - Iterations 100 1000 5000 10000 50000 syslogd 710.6 741.0 748.0 739.8 716.0 mqlogd (select, 50.4 49.8 42.2 45.8 72.6 semaphore) mqlogd (signal, 90.4 49.2 46.0 50.4 85.2 semaphore) mqlogd (select, 191.4 27.4 23.4 27.8 78.8 pthread) mqlogd (signal, 69.2 26.2 23.4 31.2 94.2 pthread) Speed-up over syslogd mqlogd (select, semaphore) mqlogd (signal, semaphore) mqlogd (select, pthread) mqlogd (signal, pthread) Fig. 5. 100 1000 5000 10000 50000 14.1 14.9 17.7 16.2 9.9 7.9 15.1 16.3 14.7 8.4 3.7 27.0 32.0 26.6 9.1 10.3 28.3 32.0 23.7 7.6 Average Request Latency for a Single Client 4) Effects of Client Interface Type: We evaluated the performance of our logging system using the UNIX socket interface and compared it with the performance with shared memory. For reference, we compared this with the performance of syslogd. The experiment results are shown in Figure 11. We find that the average latency with the UNIX socket is more than an order of magnitude longer on average than that with the shared memory and about the same level as that of syslogd. While we provided the UNIX socket interface for compatibility reasons, the use of the UNIX socket interface (e.g., syslog) should be minimized to achieve the best performance. VII. O PTIMIZATION We have shown that mqlogd provides a logging service that is at least 10 times faster than syslogd by using user-space 7 Minimum Request Latency − 1 Client 4 1000 4.5 syslogd mqlogd (select, semaphore) mqlogd (signal, semaphore) mqlogd (select, pthread) mqlogd(signal, pthread) 900 800 Maximum Request Latency − 1 Client syslogd mqlogd (select, semaphore) mqlogd (signal, semaphore) mqlogd (select, pthread) mqlogd(signal, pthread) 4 3.5 3 Latency (us) Latency (us) 700 x 10 600 500 400 2.5 2 300 1.5 200 1 100 0.5 0 100 1000 5000 10000 Number of Iterations 50000 # - Iterations 100 1000 5000 10000 50000 syslogd 575.2 574.6 560.8 478.2 302.4 mqlogd (select, 23.0 19.0 19.0 18.8 18.6 semaphore) mqlogd (signal, 23.4 21.0 21.0 20.4 20.2 semaphore) mqlogd (select, 122.4 10.0 10.0 10.0 10.0 pthread) mqlogd (signal, 16.4 10.0 10.2 10.0 10.0 pthread) Speed-up over syslogd mqlogd (select, semaphore) mqlogd (signal, semaphore) mqlogd (select, pthread) mqlogd (signal, pthread) Fig. 6. 100 1000 5000 10000 50000 25.0 30.2 29.5 25.4 16.3 24.6 27.4 26.7 23.4 15.0 4.7 57.5 56.1 47.8 30.2 35.1 57.5 55.0 47.8 30.2 Minimum Request Latency for a Single Client shared memory as the means of inter-process communication. In this section, we discuss a notification mechanism that can improve this speed-up even further. A. Deferred Notification Mechanism In order to improve the processing time of logging requests, we introduce the deferred notification mechanism. With deferred notification, a logging client sends one notification for a batch of messages rather than sending a separate notification for each message. Deferred notification is expected to give a smaller latency than per-message notification, but it has a restriction in that it requires an application to determine an appropriate time to send notifications. This is easy for most applications in VIC because they are event driven and the completion of an event handler is a very suitable time to send 0 100 1000 5000 10000 Number of Iterations 50000 # - Iterations 100 1000 5000 10000 50000 syslogd 9129 11977 14366 24143 41100 mqlogd (select, 1984 11814 12234 12251 15844 semaphore) mqlogd (signal, 1976 9796 12635 13813 15060 semaphore) mqlogd (select, 696 7440 12223 14516 14980 pthread) mqlogd (signal, 789 8159 11850 11415 13927 pthread) Speed-up over syslogd mqlogd (select, semaphore) mqlogd (signal, semaphore) mqlogd (select, pthread) mqlogd (signal, pthread) Fig. 7. 100 1000 5000 10000 50000 4.6 1.0 1.2 2.0 2.6 4.6 1.2 1.1 1.7 2.7 13.1 1.6 1.2 1.7 2.7 11.6 1.5 1.2 2.1 3.0 Maximum Request Latency for a Single Client a notification. B. Host-Side Round-Trip Time Measurement In this section, we compare the performance of the deferred notification mechanism by measuring the round-trip time from the host. To make this measurement, we execute a program called devcmd on the host. The devcmd program sends queries to the main firmware application, which may produce logging messages, and measures the total latency from the perspective of the host. Using devcmd is useful because the latency on the host side is the latency a user of VIC (i.e., a network driver) will experience. The devcmd program allows a user to specify a command to issue, and each command results in a different action in 8 Average Request Latency − 1 and 2 Clients Request Drop Rate − 1 Client 2000 100 90 80 mqlogd (select, semaphore) mqlogd (signal, semaphore) mqlogd (select, pthread) mqlogd(signal, pthread) 1800 1600 1400 Latency (us) Percent 70 60 50 40 400 10 200 0 100 1000 5000 10000 Number of Iterations 50000 100 1000 5000 10000 50000 0.0% 0.0% 0.0% 21.2% 80.0% 0.0% 0.0% 0.0% 27.7% 83.1% 0.0% 0.0% 0.0% 35.0% 86.7% 0.0% 0.0% 0.0% 39.3% 87.6% Request Drop Rate for a Single Client firmware. Firmware processes the received command and logs messages when the minimum threshold for logging is set to the debug level. When the minimum threshold is set to the info level, firmware processes a received command without logging messages. The difference in the round-trip time between the two modes is the logging time for the specified command. The parameters we considered are summarized as follows: • 800 20 Fig. 8. • 1000 600 # - Iterations mqlogd (select, semaphore) mqlogd (signal, semaphore) mqlogd (select, pthread) mqlogd (signal, pthread) • 1200 30 0 syslogd (1 client) syslogd (2 clients) mqlogd (select, 1 client) mqlogd (select, 2 clients) Notification mechanisms: write-and-select, deferred and syslogd Commands: macaddr (gets the MAC address) and capability (checks availability of a given command) Minimum threshold for logging: info and debug Figure 12 shows the latency for the capability command. Figure 12(a) is the graph for total latency for all combinations of notification mechanisms and minimum threshold levels. As expected, the total latencies for the three notification mechanisms are very close when there is no logging. For total latencies with a minimum logging threshold of debug, we see differences among the notification mechanisms. Since the difference in latency between the debug level and the info level is the time to process logging messages, we can quantify the cost of each notification mechanism. Figure 12(b) shows that mqlogd with the deferred notification mechanism achieves more than 2x speed-up compared to the one with write-and-select. Figure 13 shows the latency for the macaddr command. 100 1000 5000 10000 Number of Iterations 50000 # - Iterations 100 1000 5000 10000 50000 syslogd (1 710.6 741.0 748.0 739.8 716.0 client) syslogd (2 1376.8 1433.0 1458.2 1400.4 1439.6 clients) mqlogd (select, 50.4 49.8 42.2 45.8 72.6 1 client) mqlogd (select, 139.4 73.6 72.2 81.8 206.4 2 clients) mqlogd (signal 90.4 49.2 46.0 50.4 85.2 1 client) mqlogd (signal 111.0 82.2 63.4 84.2 196.0 2 clients) Speed-up over 1 client syslogd mqlogd (select) mqlogd (signal) Fig. 9. 100 1000 5000 10000 50000 1.9 2.8 1.9 1.5 1.9 1.7 1.9 1.8 2.0 2.8 1.2 1.7 1.4 1.7 2.3 Average Request Latency for Two Clients We see that the ratio among each notification mechanism is similar to that Figure 12; mqlogd with the deferred notification mechanism achieves about 2x speed-up compared to the one with write-and-select. The difference is that absolute values of latency for macaddr are smaller than those for capability because macaddr is a trivial command – it’s essentially retrieving 4 bytes. VIII. C ONCLUSION We presented a logging system for embedded UNIX applications that provides faster logging times than syslogd, while providing application-level interface compatibility. It achieves almost two orders of magnitude speed-up in latency and an order of magnitude improvement in message throughput. This 9 Total Latency for ’capability’ Command Logging Latency for ’capability’ Command 2000 2000 Logging Time (us) 1500 Time (us) Time (us) Average (us) 1000 500 0 write−and− select (debug) write−and− select (info) deferred (debug) deferred (info) syslogd (debug) syslogd (info) 1500 1000 500 0 write−and− select (a) Total Latency Latency for the Capability Command Total Latency for ’macaddr’ Command Logging Latency for ’macaddr’ Command 1000 1000 Logging Time (us) Time (us) Time (us) Average (us) 500 write−and− select (debug) write−and− select (info) deferred (debug) deferred (info) syslogd (debug) syslogd (info) 500 0 write−and− select (a) Total Latency syslogd Latency for the Macaddr Command Request Drop Rate − 1 and 2 Clients 100 mqlogd (select, 1 client) mqlogd (select, 2 clients) as syslogd. ACKNOWLEDGMENTS I would like to thank my colleagues at Cisco, Jim Orosz, Brad Smith, Puneet Shenoy and James Dong for having fruitful discussions and helping edit and proofread this paper. 80 70 Percent deferred (b) Logging Latency Fig. 13. 90 syslogd (b) Logging Latency Fig. 12. 0 deferred 60 R EFERENCES 50 [1] C. Lonvick, “The BSD Syslog Protocol,” 2001. http://tools.ietf.org/html/ rfc3164. [2] R. Gerhards, “The Syslog Protocol,” 2009. http://tools.ietf.org/html/ rfc5424. [3] T. Qiu, Z. Ge, D. Pei, J. Wang, and J. Xu, “What happened in my network: mining network events from router syslogs,” in Proceedings of the 10th ACM SIGCOMM conference on Internet measurement (IMC ‘10), ACM, November 2010. [4] K. Slavicek, J. Ledvinka, M. Javornik, and O. Dostal, “Mathematical processing of syslog messages from routers and switches,” in Proceedings of the 4th International Conference on Information and Automation for Sustainability (ICIAFS 2008), IEEE, December 2008. [5] M. Bing and C. Erickson, “Extending unix system logging with sharp,” in Proceedings of the 14th Systems Administration Conference (LISA 2000), USENIX, December 2000. [6] M. Hutter, A. Szekely, and J. Wolkerstorfer, “Embedded system management using wbem,” in IFIP/IEEE International Symposium on Integrated Network Management, 2009 (IM ‘09), IEEE, June 2009. [7] T. Park and I. Ra, “Design and evaluation of a network forensic logging system,” in Convergence and Hybrid Information Technology, 2008. ICCIT’08. Third International Conference on, vol. 2, pp. 1125–1130, IEEE, 2008. [8] M. Roesch et al., “Snort-lightweight intrusion detection for networks,” in Proceedings of the 13th USENIX conference on System administration, pp. 229–238, Seattle, Washington, 1999. [9] M. Schütte, “Implementation of ietf syslog protocols in the netbsd syslogd,” 2009. [10] B. Scheidler, “syslog-ng.” http://www.balabit.com/network-security/ syslog-ng. [11] R. Gerhards, “rsyslog.” http://www.rsyslog.com. 40 30 20 10 0 100 1000 5000 10000 Number of Iterations 50000 # - Iterations 100 1000 5000 10000 50000 mqlogd (select, 0.0% 0.0% 0.0% 21.2% 80.0% 1 client) mqlogd (select, 0.0% 0.0% 10.0% 45.7% 91.9% 2 clients) Fig. 10. Request Drop Rate for Two Clients speed-up is attributed to the use of user-level shared memory as the means of inter-process message communication. It also supports the syslog interface, allowing system applications that use syslog to be interoperable without additional modifications while achieving about the same level of performance 10 Average Request Latency − 1 Client 1200 1000 syslogd mqlogd (select, semaphore) mqlogd (Unix socket) Latency (us) 800 600 400 200 0 100 1000 5000 10000 Number of Iterations 50000 # - Iterations 100 1000 5000 10000 50000 syslogd 701.8 717.8 707.2 714.4 691.2 mqlogd (select, 50.4 49.8 42.2 45.8 72.6 semaphore) mqlogd (UNIX 743.6 767.6 754.6 747.6 734.8 socket) Speed-up over syslogd mqlogd (select, semaphore) mqlogd (UNIX socket) 100 1000 5000 10000 50000 14.1 14.9 17.7 16.2 9.9 1.0 1.0 1.0 1.0 1.0 Fig. 11. Average Request Latency for a Single Client with Different Client Interfaces [12] A. Tomono, M. Uehara, M. Murakami, and M. Yamagiwa, “A log management system for internal control,” in Network-Based Information Systems, 2009. NBIS’09. International Conference on, pp. 432–439, IEEE, 2009. [13] F. Nikolaidis, L. Brarda, J.-C. Garnier, and Nikifeld, “A universal logging system for lhcb online,” International Conference on Computing in High Energy and Nuclear Physics (CHEP 2010), October 2010. [14] J. Garnier, “Lhcb online log analysis and maintenance system,” in Proceedings of the 13th International Conference on Accelerator and Large Experimental Physics Control Systems, October 2011. [15] D. Reed, “nsyslogd.” http://coombs.anu.edu.au/∼avalon/nsyslog.html. [16] S. Gai, T. Salli, and R. Andersson, Project California: a Data Center Virtualization Server UCS (Unified Computing System). lulu.com, March 2009. [17] Cisco Systems, “Cisco UCS M81KR Virtual Interface Card Data Sheet.” http://www.cisco.com/en/US/prod/collateral/ps10265/ps10280/ data sheet c78-525049.html.