
International Journal of Project Management Vol. 17, No. 6, pp. 337–342, 1999

© 1999 Elsevier Science Ltd and IPMA. All rights reserved

Printed in Great Britain

0263-7863/99 $20.00 + 0.00

PII: S0263-7863(98)00069-6

Project management: cost, time and quality, two best guesses and a phenomenon, its time to accept other success criteria

Roger Atkinson

Department of Information Systems, The Business School, Bournemouth University, Talbot Campus, Fern Barrow, Poole, Dorset BH12 5BB, UK

This paper provides some thoughts about success criteria for IS–IT project management. Cost, time and quality (The Iron Triangle) have, over the last 50 years, become inextricably linked with measuring the success of project management. This is perhaps not surprising, since over the same period those criteria are usually included in the description of project management. Time and costs are, at best, only guesses, calculated at a time when least is known about the project. Quality is a phenomenon; it is an emergent property of people's different attitudes and beliefs, which often change over the development life-cycle of a project. Why has project management been so reluctant to adopt other criteria in addition to the Iron Triangle, such as stakeholder benefits, against which projects can be assessed? This paper proposes a new framework to consider success criteria, The Square Route.


Keywords: Project management, success criteria

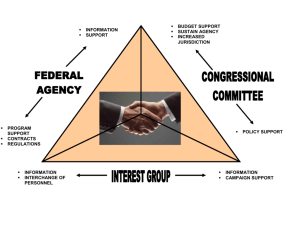

Research studies investigating the reasons why projects fail, for example Morris and Hough 1 and Gallagher, 2 provide lists of factors believed to contribute to project management success or failure. At the same time some criteria against which projects can be measured are available, for example cost, time and quality, often referred to as The Iron Triangle, Figure 1.

Projects however continue to be described as failing, despite the management. Why should this be if both the factors and the criteria for success are believed to be known? One argument could be that project management seems keen to adopt new factors to achieve success, such as methodologies, tools, knowledge and skills, but continues to measure or judge project management using tried and failed criteria. If the criteria were the cause of reported failure, continuing to use those same criteria will simply repeat the failures of the past. Could it be that some project management is labelled as having failed because of the criteria used as a measure of success? The questions then become: what criteria are used and what other criteria could be used to measure success? This paper takes a look at existing criteria against which project management is measured and proposes a new way to consider success criteria, called the Square Route.

The paper has four sections. First, existing definitions of project management are reviewed, indicating The Iron Triangle success criteria to be almost inextricably linked with those definitions. Next, an argument for considering other success criteria is put forward, separating these into some things which are done wrong and other things which have been missed or not done as well as they could have been. In the third section, other success criteria proposed in the literature are reviewed. Finally, The Iron Triangle and other success criteria are placed into one of four major categories; this is represented as The Square Route.

First a reminder about how project management is defined, the thing we are trying to measure.

What is project management?

Many have attempted to define project management. One example, Oisen, 3 referencing views from the 1950's, may have been one of the early attempts.

Project Management is the application of a collection of tools and techniques (such as the CPM and matrix organisation) to direct the use of diverse resources toward the accomplishment of a unique, complex, one-time task within time, cost and quality constraints. Each task requires a particular mix of these tools and techniques structured to fit the task environment and life cycle (from conception to completion) of the task.


Project Management: Cost, time and quality, two best guesses: R. Atkinson

Figure 1 The Iron Triangle

Notice that the definition includes some of the success criteria, The Iron Triangle. Those criteria for measuring success included in the description used by Oisen 3 continue to be used to describe project management today. The British Standard for project management BS6079 4 1996 defined project management as:

The planning, monitoring and control of all aspects of a project and the motivation of all those involved in it to achieve the project objectives on time and to the specified cost, quality and performance.

The UK Association of Project Management (APM) have produced a UK Body of Knowledge (BoK) 5 which also provides a definition for project management as:

The planning, organisation, monitoring and control of all aspects of a project and the motivation of all involved to achieve the project objectives safely and within agreed time, cost and performance criteria. The project manager is the single point of responsibility for achieving this.

Other definitions have been offered. Reiss 6 suggests a project is a human activity that achieves a clear objective against a time scale and, to achieve this, while pointing out that a simple description is not possible, suggests project management is a combination of management and planning and the management of change.

Lock's 7 view was that project management had evolved in order to plan, co-ordinate and control the complex and diverse activities of modern industrial and commercial projects, while Burke 8 considers project management to be a specialised management technique, to plan and control projects under a strong single point of responsibility.

While some different suggestions about what project management is have been made, the criteria for success, namely cost, time and quality, remain and are included in the actual description. Could this mean that the example given to define project management, Oisen 3, was either correct or that, as a discipline, project management has not really changed or developed the success measurement criteria in almost 50 years?

Project management is a learning profession. Based upon past mistakes and believed best practice, standards such as BS 6079 4 and the UK Body of Knowledge 5 continue to be developed. But defining project management is difficult. Wirth 9 indicated the differences in content between six countries' own versions of BoKs. Turner 10 provided a consolidated matrix to help understand and moderate different attempts to describe project management, including the assessment. Turner 10 further suggested that project management could be described as: the art and science of converting vision into reality.

Note the criteria against which project management is measured are not included in that description. Is there a paradox however in even attempting to define project management?

Can a subject which deals with a unique, one-off complex task, as suggested as early as Oisen 3, be defined?

Perhaps project management is simply an evolving phenomenon, which will remain vague enough to be non-definable, a flexible attribute which could be a strength. The significant point is that while the factors have developed and been adopted, changes to the success criteria have been suggested but the criteria themselves remain unchanged.

Could the link be that project management continues to fail because included in the definition are a limited set of criteria for measuring success, cost, time and quality, which, even if achieved, simply demonstrate the chance of matching two best guesses and a phenomenon correctly?

Prior to some undergraduate lectures and workshops about project management, the students were asked to locate some secondary literature describing project management and produce their own definition. While there were some innovative ideas, the overriding responses included the success criteria of cost, time and quality within the definition. If this is the perception about project management we wish those about to work in the profession to have, the rhetoric over the years has worked.

Has this however been the problem to realising more successful projects? To date, project management has had the success criteria focused upon the delivery stage, up to implementation, reinforced by the very description we have continued to use to define the profession. The focus has been to judge whether the project was done right. Doing something right may result in a project which was implemented on time, within cost and to some quality parameters requested, but which is not used by the customers, not liked by the sponsors and does not seem to provide either improved effectiveness or efficiency for the organisation. Is this successful project management?

Do we need and how do we find new criteria?

If the criteria we measure are in error, and using numbers exclusively to measure and represent what we feel, Bernstein 11 reminds us, can be dangerous, it is not unreasonable to suggest that the factors we have been focusing on over the years to meet those criteria may also have been in error. It is possible to consider errors as two types; Handy 12 suggests there is a difference between the two. Atkinson 13 described how this could be adopted and adapted into IS–IT projects thinking.

Type I errors occur when something is done wrong, for example, poor planning, inaccurate estimating, lack of control. Type II errors could be thought of as when something is forgotten or not done as well as it could have been done, such as using incomplete criteria for success. I agree with Handy that the two error types are different. To provide an example, consider an analogy with musicians. An amateur musician will practice until they get a piece of music right. That is to say they are trying not to commit a Type I error and get the tune wrong. In contrast a professional musician will practice until the tune cannot be played any better. Professional musicians are trying not to commit a Type II error; for them it's not a matter of whether the tune was right or wrong but whether the piece of music was played as well as it could have been. Error types can be considered simply as the sin of commission (Type I error), or the sin of omission (Type II error).

Table 1 Results measures 24

* The overarching purpose of a measurement system should be to help the team rather than top managers, gauge its progress.

* A truly empowered team must play the lead role in designing its own measurement system.

* Because the team is responsible for a value-delivery process that cuts across several functions, . . . it must create measures to track that process.

* A team should adopt only a handful of measures (no more than 15), the most common results measures are cost and schedule.

Finding a negative, a Type II error, is almost impossible: how do you know what to look for, or if you have found it, if you don't know what it is? While Type II errors are not easy to find using research methods, it is possible to trip up over a Type II error. Classic examples, such as the discovery of the smallpox vaccine, should provide evidence that it is worthwhile considering new ideas when found by chance. History records that what the people with smallpox had in common was something missing, i.e., they all did not have cowpox. Dairy maids did have cowpox and dairy maids did not get smallpox. From cowpox the smallpox vaccine was developed. A negative was discovered.

Continuing to research only where there is more light, i.e., trying to find a common positive link of those with smallpox, would never have provided a result. The common link was a Type II error; it was something that was missing. It is therefore worth considering and trying new additional criteria (Type II errors) against which project management could be measured. The worst that can happen is they are tried and rejected.

The rate of project management success at present is not as good as it could be, things could be better. What then are the usual criteria used to measure success?

method which when less than actual costs indicates the project is going off track. deWit 18 however points out that when costs are used as a control, they measure progress, which is not the same as success.
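As a hedged illustration of the earned value control measure mentioned here, the following Python sketch compares earned value with actual cost. The class `ProgressSnapshot`, its field names, the figures, and the common convention that earned value equals the total budget multiplied by the fraction of work complete are assumptions made for illustration; they are not taken from Williams 23 or deWit 18.

```python
from dataclasses import dataclass


@dataclass
class ProgressSnapshot:
    """A point-in-time progress reading for a project (hypothetical structure)."""

    budget_at_completion: float  # total planned budget for all the work
    fraction_complete: float     # share of planned work finished, 0.0 to 1.0
    actual_cost: float           # money actually spent to date

    @property
    def earned_value(self) -> float:
        # Assumed convention: budgeted value of the work completed so far.
        return self.budget_at_completion * self.fraction_complete

    @property
    def cost_variance(self) -> float:
        # Negative when earned value is less than actual cost.
        return self.earned_value - self.actual_cost

    def is_off_track(self) -> bool:
        # The control signal described in the text: earned value below actual cost.
        return self.earned_value < self.actual_cost


# Illustrative figures only: 40% of the work done, 55,000 of a 100,000 budget spent.
snapshot = ProgressSnapshot(
    budget_at_completion=100_000.0,
    fraction_complete=0.40,
    actual_cost=55_000.0,
)
off_track = snapshot.is_off_track()
```

A negative cost variance signals only that spend is running ahead of progress, which is consistent with the point that such measures gauge progress rather than success.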

Naturally some projects must have time and/or costs as the prime objective(s). A millennium project for example must hit the on-time objective or problems will follow. Projects measured against cost, time and quality are measuring the delivery stage, doing something right. Meyer 24 described these as Results Measurement, when the focus is upon the task of project management, doing it right.

Table 1 gives the four guiding principles described by Meyer.

A significant point made by Meyer 24 is that performance measurement for cross functional team based organisations which deliver an entire process or product to customers is essential. Project management does not use traditional, functional teams; performance measurement can therefore be argued to be an essential success criterion.

For other projects such as life critical systems, quality would be the overriding criterion. The focus now moves to getting something right. Time and costs become secondary criteria while the resultant product is the focus. Alter 25 describes process and organisational goals as two different measures of success. This takes the focus away from 'did they do it right' to 'did they get it right', a measure only possible post implementation. But projects are usually understood to have finished when the delivery of the system is complete. The on-time criterion of the delivery stage does not allow the resultant system and the benefits to be taken into consideration for project management success. Time, one criterion of the process function, seems to prevent other, post implementation criteria from being included. Provided time is not critical, the delivery criteria are only one set against which success can be measured.

Measuring the process criteria for project management is measuring efficiency. In measuring the success of the resultant system or organisation benefits, the criteria change to those of assessing getting something right, meeting goals, measuring effectiveness. So what are these criteria?

Criteria for success

The delivery stage: the process: doing it right

Oisen 3 almost 50 years ago suggested cost, time and quality as the success criteria bundled into the description. Wright 14 reduces that list and, taking the view of a customer, suggests only two parameters are of importance, time and budget. Many other writers, Turner, 15 Morris and Hough, 16 Wateridge, 17 deWit, 18 McCoy, 19 Pinto and Slevin, 20 Saarinen 21 and Ballantine, 22 all agree cost, time and quality should be used as success criteria, but not exclusively. Temporary criteria are available during the delivery stage to gauge whether the project is going to plan. These temporary criteria measurements can be considered to be measuring the progress to date, a type of measurement which is usually carried out as a method of control. For example Williams 23 describes the use of short term measures during project build, using the earned value

Post delivery stage: the system: getting it right

First, who should decide the criteria? Struckenbruck 26 considered the four most important stakeholders to decide the criteria were the project manager, top management, customer-client, and the team members. Two other possible criteria which could be used to measure the success of the project are the resultant system (the product) and the benefits to the many stakeholders involved with the project such as the users, customers or the project staff.

DeLone et al. 27 identified six post implementation systems criteria to measure the success of a system.


Table 2 Systems measures 27

* System quality

* Information quality

* Information Use

* Users satisfaction

* Individual impact

* Organisational impact

Table 3 Performance gaps 30

Actual project outcome needed by the customer

Gap1

Desired Project outcome as described by the customer

Gap2

Desired project outcome as perceived by the project team

Gap3

Speci®c project plan developed by the project team

Gap4

Actual project outcome delivered to the customer

Gap5

Project outcome as perceived by the customer

These are given in Table 2. Ballantine et al. 22 undertook to review the DeLone work, suggesting a 3D model of success which separated the different dimensions of the development, deployment and delivery levels. The DeLone paper called for a new focus on what might be appropriate measures of success at different levels. This paper attempts to help that process, not least by pointing out the probability of Type II errors in project management, i.e., things not done as well as they could be and/or things not previously considered.

Post delivery stage: the benefits: getting them right

deWit 28 suggests that project management and organisational success criteria are different, and questions even the purpose of attempting to measure project management and organisational success and link the two, since they require separate measurement criteria. They could however be measured separately. So what are they?

Shenhar 29 produced the results from 127 projects and decided upon a multidimensional universal framework for considering success. Only one stage was in the delivery phase, which he decided was measuring the project efficiency. The three other criteria suggested were all in the post delivery phase. These were impact on customer, measurable within a couple of weeks after implementation; business success, measurable after one to two years; and preparing for the future, measurable after about four to five years. Shenhar 29 suggested project managers need to 'see the big picture . . . , be aware of the results expected . . . and look for long term benefits'.

Project management seems to need to be assessed as soon as possible, often as soon as the delivery stage is over. This limits the criteria for gauging success to the Iron Triangle and excludes longer term benefits from inclusion in the success criteria. Is it possible these exclusions of other success criteria are an example of a Type II error, something missing? Cathedral thinkers, as they have been described by Handy, 12 were those prepared to start projects which they did not live long enough to see to completion. Modern day project managers are the opposite of cathedral thinkers; they are expected to deliver results quickly and those results are measured as soon as possible. A feature of Rapid Application Development (RAD) is that results are delivered quickly. A benefit of RAD is that while time is 'boxed' the results may produce a dirty solution, but that is accepted as success.

Customers and users are examples of stakeholders of information systems projects and the criteria they consider as important for success should also be included in assessing a project. Deane et al. 30 looked at aligning project outcomes with customer needs, and viewed these as potential gaps which would be present if the performance was not correct, giving an 'ineffective project result'. Five possible levels of project performance gaps were identified; these are given in Table 3.

Deane et al. 30 provided a focus upon a gap that could be thought to exist between the customer, team and the outcome. A well published cartoon sequence showing a similar set of gaps has been produced where a user required a simple swing to be built, but through the filters or gaps between customer, analyst, designer and developer the simple swing ended up looking unlike the original request. There are others who are also involved when a systems project is implemented and Mallak et al. 31 (Figure 2) bundled those into a stakeholders category, noting that there may be times when one group could be multi stakeholders, such as a project for a government who are the customers, they also supply the capital and are the eventual users.

Figure 2 Mallak et al. 31

Figure 3 Source: Atkinson

The following writers, Turner, 15 Morris and Hough, 16 Wateridge, 17 deWit, 18 McCoy, 19 Pinto and Slevin, 20 Saarinen 21 and Ballantine, 22 were referenced earlier in this paper for including the criteria of The Iron Triangle, cost, time and quality, as necessary criteria against which to measure the project management process. At the same time however, those same writers all included other criteria which could be used to measure the success of the project post implementation. Taking the points mentioned by those writers it seems possible to place them into three new categories.

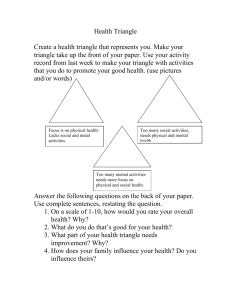

These are the technical strength of the resultant system, the benefits to the resultant organisation (direct benefits) and the benefits to a wider stakeholder community (indirect benefits). These three categories could be represented as The Square Route to understanding project management success criteria, as presented in Figure 3.

A breakdown of the four success criteria is offered in Table 4.

The contents of Table 4 are not exhaustive; they are not meant to be. What they are intended to indicate are three other success criteria types and examples of these as suggested by other authors.


Summary and ideas

After 50 years it appears that the definitions for project management continue to include a limited set of success criteria, namely the Iron Triangle, cost, time and quality. These criteria, it is suggested, are no more than two best guesses and a phenomenon. A finite time resource is possibly the feature which differentiates project management from most other types of management. However to focus the success criteria exclusively upon the delivery criteria to the exclusion of others, it is suggested, may have produced an inaccurate picture of so called failed project management.

An argument has been provided which demonstrates that two types of errors can exist within project management. Type I errors when something is done wrong, while a Type II error is when something has not been done as well as it could have been or something was missed. It has further been argued the literature indicates other success criteria have been identified, but to date the Iron Triangle seems to continue to be the preferred success criteria. The significant point to be made is that project management may be committing a Type II error, and that error is the reluctance to include additional success criteria.

Using the Iron Triangle of project management, time, cost and quality as the criteria of success is not a Type I error, it is not wrong. They are an example of a Type II error, that is to say, they are not as good as they could be, or something is missing. Measuring the resultant system and the benefits as suggested in the Square Route, could reduce some existing Type II errors, a missing link in understanding project management success.

In trying to prevent a Type II error of project management this paper suggests the Iron Triangle could be developed to become the Square-Route of success criteria as shown in Figure 3 , providing a more realistic and balanced indication of success.

It is further suggested that the early attempts to describe project management which included only the Iron Triangle, and the rhetoric which has followed over the last 50 years supporting those ideas, may have resulted in a biased measurement of project management success, creating an unrealistic view of the success rate, either better or worse!

The focus of this paper is project management success criteria, and in particular shifting the focus of measurement for project management from the exclusive process driven criteria, The Iron Triangle, Figure 1, to The Square Route, Figure 3. It is further suggested that shift could be significantly helped if a definition for project management was produced which did not include limited success criteria. Defining project management is beyond the scope of this paper, but is a task which has been started by the definition offered by Turner 10: the art and science of converting vision into reality.

Table 4 Square route to understanding success criteria

Iron Triangle

* Cost

* Quality

* Time

The information system

* Maintainability

* Reliability

* Validity

* Information–quality use

Benefits (organisation)

* Improved efficiency

* Improved effectiveness

* Increased profits

* Strategic goals

* Organisational-learning

* Reduced waste

Benefits (stakeholder community)

* Satisfied users

* Social and Environmental impact

* Personal development

* Professional learning, contractors profits

* Capital suppliers, content project team, economic impact to surrounding community.

References

1. Morris PWG, Hough GH. The Anatomy of Major Projects. John Wiley and Sons, Chichester, 1993.

2. Gallagher K. Chaos, Information Technology and Project Management Conference Proceedings, London, 19–20 Sep. 1995, 19–36.

3. Oisen, RP, Can project management be defined? Project Management Quarterly, 1971, 2(1), 12–14.

4. British Standard in Project Management 6079, ISBN 0 580 25594 8.

5. Association of Project Management (APM), Body of Knowledge (BoK) Revised January 1995 (version 2).

6. Reiss B. Project Management Demystified. E and FN Spon, London, 1993.

7. Lock D. Project Management, 5th ed. Gower, Aldershot, 1994.

8. Burke R. Project Management. John Wiley and Sons, Chichester, 1993.

9. Wirth, I and Tryloff, D, Preliminary comparisons of six efforts to document the projects management body of knowledge. International Journal of Project Management, 1995, 13(3), 109–118.

10. Turner, JR, Editorial: International Project Management Association global qualification, certification and accreditation. International Journal of Project Management, 1996, 14(1), 1–6.

11. Bernstein PL. Have we replaced old-world superstitions with a dangerous reliance on numbers? Harvard Business Review Mar-Apr. 1996, 47–51.

12. Handy C. The Empty Raincoat. Hutchinson, London.

13. Atkinson RW. Effective Organisations, Re-framing the Thinking for Information Systems Projects Success, 13–16. Cassell, London, 1997.

14. Wright, JN, Time and budget: the twin imperatives of a project sponsor. International Journal of Project Management, 1997, 15(3), 181–186.

15. Turner JR. The Handbook of Project-based Management. McGraw-Hill, 1993.

16. Morris PWG, Hough GH. The Anatomy of Major Projects. John Wiley, 1987.

17. Wateridge, J, How can IS/IT projects be measured for success? International Journal of Project Management, 1998, 16(1), 59–63.

18. de Wit, A, Measurement of project management success. International Journal of Project Management, 1988, 6(3), 164–170.

19. McCoy FA. Measuring Success: Establishing and Maintaining A Baseline, Project Management Institute Seminar/Symposium Montreal Canada, Sep. 1987, 47–52.

20. Pinto, JK and Slevin, DP, Critical success factors across the project lifecycle. Project Management Journal, 1988, XIX, 67–75.

21. Saarinen, T, Systems development methodology and project success. Information and Management, 1990, 19, 183–193.

22. Ballantine, J, Bonner, M, Levy, M, Martin, A, Munro, I and Powell, PL, The 3-D model of information systems successes: the search for the dependent variable continues. Information Resources Management Journal, 1996, 9(4), 5–14.

23. Williams, BR, Why do software projects fail? GEC Journal of Research, 1995, 12(1), 13–16.

24. Meyer C. How the right measures help teams excel. Harvard Business Review 1994, 95–103.

25. Alter S. Information Systems a management perspective, 2nd ed. Benjamin and Cummings, California, 1996.

26. Struckenbruck L. Who determines project success. Project Management Institute Seminar/Symposium Montreal Canada, Sep. 1987, 85–93.

27. DeLone, WH and McLean, ER, Information systems success: the quest for the dependent variable. Information Systems Research, 1992, 3(1), 60–95.

28. de Wit A. Measuring project success. Project Management Institute Seminar/Symposium Montreal Canada, Sep. 1987, 13–21.

29. Shenhar, AJ, Levy, O and Dvir, D, Mapping the dimensions of project success. Project Management Journal, 1997, 28(2), 5–13.

30. Deane RH, Clark TB, Young AP. Creating a learning project environment: aligning project outcomes with customer needs. Information Systems Management 1997, 54–60.

31. Mallak AM, Patzak GR, Kursted Jr, HA. Satisfying stakeholders for successful project management. Proceedings of the 13th Annual Conference on Computers and Industrial Engineering 1991: 21(1–4), 429–433.

Roger Atkinson has 25 years experience of UK and international information systems project development, 13 years as a project manager. He was awarded an MPhil at Cranfield, following research into the analysis and design arguments for information systems projects. For the last four years he has provided project management education to both undergraduate and postgraduate students as a senior lecturer in the Business School at Bournemouth University. He undertakes consultancy to both the public and private sectors and is currently studying for a PhD in project management.
