Communicating Mathematics III: Z-Corp 650


Claire Miller

April 20, 2011


Abstract

This project looks at fractal geometry with the aim of producing an interesting 3-dimensional model on the ZPrinter 650. After an introduction to the various definitions of fractal dimension, both regular and randomised fractals are considered and two methods are outlined for producing fractals. The role of fractals in finance is looked into as a detailed example of the applications of fractals.

Contents

1 Introduction
2 An Introduction to Fractal Geometry
2.1 Fractal dimension
2.1.1 Similarity dimension
2.1.2 The Moran Equation
2.1.3 Box-counting dimension
2.1.4 Hausdorff Dimension
2.2 Which dimension should we use?
3 Constructing fractals
3.1 Iterated Function Systems
3.2 Apollonian Gaskets
3.2.1 Soddy circles and Descartes' circle theorem
3.2.2 Construction of Apollonian gaskets
3.2.3 Properties of Apollonian gaskets
4 Examples of regular fractals and their properties
4.1 The Sierpiński Triangle
4.2 The T-Square Fractal
5 Randomised Fractals
5.1 Randomising the Sierpiński Gasket
5.2 The Koch curve and coastlines
5.2.1 The standard Koch curve
5.2.2 The randomised Koch curve
5.3 The Chaos Game
5.3.1 The algorithm
5.3.2 Why does this work?
5.3.3 Can this be done for other fractals?
6 An Application: Modelling stock prices through fractal time series
6.1 Introduction to Brownian Motion
6.2 Basic model of Brownian Motion
6.3 Randomised model of Brownian motion
6.4 The Hurst Exponent and R/S Analysis
6.5 Do actual stocks behave in a fractal way?
7 Producing my 3D shape
7.1 Choosing my shape
7.2 Programming the algorithm in MATLAB
7.3 Producing an OBJ file
7.4 Printing with the ZPrinter 650
7.5 The final product
8 Conclusion
8.1 Acknowledgements
References
A Appendix
A.1 sierpinski.m
A.2 tsquare.m
A.3 sierpinskiR.m
A.4 koch.m
A.5 kochR.m
A.6 chaosgame.m
A.7 stockSim.m
A.8 stockSimR.m

This piece of work is a result of my own work except where it

forms an assessment based on group project work. In the case of

a group project, the work has been prepared in collaboration with

other members of the group. Material from the work of others not

involved in the project has been acknowledged and quotations and

paraphrases suitably indicated.

I have produced all the images that follow in this piece of work

myself using MATLAB and Adobe Photoshop unless an alternate

source is explicitly stated.

1 Introduction

In this project I am looking to use the ZPrinter 650, a 3D printer, to produce a model of an interesting 3D shape, which could act as a teaching aid in a mathematics lecture. I have decided to focus on creating a 3D fractal object. Fractals are a set of shapes that have only really been studied in depth in the past 100 years. In fact, the term 'fractal' was only coined in 1975 [1, p.2]. In my opinion, fractals are often particularly aesthetically pleasing, making them good candidates for a 3-dimensional model. In addition to this, Benoît Mandelbrot died recently on 14th October 2010. He was widely known as the father of fractal geometry [2] and received numerous prizes for his work. Since his death, fractals have been given a lot of media attention, for example in the BBC4 documentary 'The Secret Life of Chaos' [3], thus making it an interesting time to look into what is known about fractals today.

Firstly I will look at the concept of fractal dimension and the various definitions and methods used to calculate it. I will then look at two different ways in which fractals of a specific nature can be constructed in mathematics. I will apply what I have learnt to two examples, before exploring randomised fractals, which have more applications in analysing real world situations. As a specific example of how fractals can be used to model natural phenomena I will investigate the modelling of stock price charts through fractal time series. I will then draw upon all of this information to demonstrate how I finally decided upon my 3-dimensional shape and how I went about programming the files required for the ZPrinter 650.

2 An Introduction to Fractal Geometry

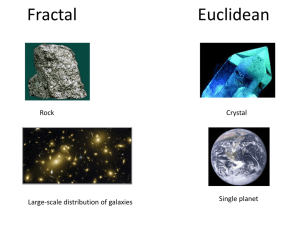

The word fractal was conceived by Mandelbrot [4, p.1] and came from the Latin word 'fractus', meaning broken. He defined a fractal as a rough or fragmented geometric shape that can be split into parts, each of which is (at least approximately) a reduced-size copy of the whole [1]. This property is called self-similarity. Fractals are seen in nature, as well as resulting from mathematical algorithms. For example, ferns, trees and broccoli all have a fractal structure [5, p.16 & p.136]. Fractals contain detail at arbitrarily small scales and are often obtained by a recursive procedure.

In this section I am going to outline the main aspect of fractal geometry, fractal dimension, and see how this can be defined and calculated. The theory which follows is based around two books by Kenneth Falconer, 'Fractal Geometry: Mathematical Foundations and Applications' [6] and 'Techniques in Fractal Geometry' [7].

2.1 Fractal dimension

There are many different types of fractal dimension, each with its own definition. Fractal dimension differs from topological dimension as it need not be a natural number [1, p.15] and is designed to describe how jagged or complex a shape is. Some of the main definitions are the similarity dimension, the box-counting dimension and the Hausdorff dimension, which I will now outline. Other definitions, which I will not cover, include the divider dimension, the capacity dimension and the information dimension [5, p.202].

Before introducing the first definition of fractal dimension, we need to understand what is meant when a fractal is described as strictly self-similar.

Definition 2.1. A fractal is strictly self-similar if its parts are identical smaller scaled replicas of the whole structure. Strictly self-similar fractals are also known as regular fractals.

2.1.1 Similarity dimension

The similarity dimension is perhaps the simplest definition of a fractal dimension but only applies to strictly self-similar sets, such as the Cantor set (see Figure 1) or Koch curve (see Figure 24 on Page 28). Strict self-similarity is required as the definition considers the set to be made up of a number of replicas of itself, scaled by a common factor less than 1.

Definition 2.2. A set $F$ made up of $m$ copies of itself, $m > 1$, scaled by a factor $0 < r < 1$ has similarity dimension

$$\dim_S F = -\frac{\log m}{\log r} = \frac{\log m}{\log \frac{1}{r}} \tag{2.1}$$

the ratio of the natural logarithms.

Example 2.3. The Cantor set is one of the first described fractals [8, p.136] and is constructed from a straight line by removing the centre third, dividing it into two shorter lines and then repeating this process for each line segment. The Cantor set is the limit of this process and is a collection of points, as eventually the entire length of the line is removed. The set is made up of 2 copies of itself scaled by a factor of $\frac{1}{3}$. Thus it has similarity dimension

$$\frac{\log 2}{\log \left(\frac{1}{3}\right)^{-1}} = \frac{\log 2}{\log 3} = 0.6309 \text{ (4dp)}.$$

This is greater than the topological dimension of a set of points, which is equal to zero, and a non-integer similarity dimension demonstrates the fractal structure of the set. The set could also be considered to be made up of 4 copies of itself scaled by a factor of $\frac{1}{9}$, by skipping a stage, and this would yield the same result as

$$\frac{\log 4}{\log \left(\frac{1}{9}\right)^{-1}} = \frac{\log 2^2}{\log 3^2} = \frac{2 \cdot \log 2}{2 \cdot \log 3} = \frac{\log 2}{\log 3}.$$
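The arithmetic in Example 2.3 can be checked numerically. The following is a minimal sketch in Python (not one of the MATLAB programs written for this project); the function name `similarity_dimension` is my own choice for illustration, and it simply evaluates formula (2.1).

```python
import math


def similarity_dimension(m, r):
    """Evaluate (2.1): dimension of a set made of m copies scaled by factor r."""
    if m <= 1 or not 0 < r < 1:
        raise ValueError("need m > 1 and 0 < r < 1")
    return -math.log(m) / math.log(r)


# Cantor set: 2 copies scaled by 1/3, or 4 copies scaled by 1/9 (skipping a stage).
cantor = similarity_dimension(2, 1 / 3)
cantor_skipped = similarity_dimension(4, 1 / 9)
print(round(cantor, 4), round(cantor_skipped, 4))
```

Both calls should agree, since the choice of how many stages to skip does not change the ratio of the logarithms.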

Figure 1: The first four stages of the Cantor set http://www.bordalierinstitute.com/complexityLinks.html

2.1.2 The Moran Equation

Unlike the similarity dimension, which can only be applied to self-similar sets where all the self-similar pieces are scaled by the same factor, the Moran equation allows pieces to be scaled differently and thus can be applied to a larger set of fractals. This definition is described by Mandelbrot in his online Yale classes on fractals [9].

Definition 2.4. For a fractal $F$ which can be decomposed into $m$ self-similar pieces, $m > 1$, each scaled by a factor $0 < r_i < 1$, $i = 1, .., m$, the Moran equation states that the fractal dimension, $\dim F = d$, must satisfy

$$r_1^d + .. + r_m^d = \sum_{i=1}^{m} r_i^d = 1 \tag{2.2}$$

The Moran equation gives a unique solution for $d$ since $f(d) = \sum_{i=1}^{m} r_i^d$ is a continuous, strictly decreasing function with $f(0) = m > 1$ and $\lim_{d \to \infty} f(d) = 0$ because each $r_i$ is smaller than 1. So by the Intermediate Value Theorem, and since the function is strictly decreasing, there will exist a unique solution to $f(d) = 1$.

It is important to check that, as a generalisation of the similarity dimension, the Moran equation gives the same result as Definition 2.2 when the $\{r_i\}$ are equal, say to $r$. In this case, the Moran equation requires that $m r^d = 1$. Taking logarithms of this equation gives $\log m + d \log r = \log 1 = 0 \Rightarrow d = \frac{-\log m}{\log r}$, which is the definition for the similarity dimension.

Figure 2: A fractal based on the Sierpiński triangle [10]

Example 2.5. The fractal in Figure 2 is made up of four self-similar copies of itself, three scaled by a factor of $\frac{1}{2}$ and one by a factor of $\frac{1}{4}$. Hence the dimension $d$ of this fractal must satisfy $3 \cdot \left(\frac{1}{2}\right)^d + \left(\frac{1}{4}\right)^d = 1$. By algebra or numerical methods, such as the Bisection method or Newton-Raphson method, it can be found that $d \approx 1.724$ (3dp).
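As an illustration of the numerical route mentioned in Example 2.5, here is a minimal bisection sketch in Python (not the author's MATLAB code). The function name `moran_dimension` and the tolerance are assumptions made for this example; the method relies only on the fact, shown above, that $f(d) = \sum r_i^d$ is strictly decreasing with $f(0) = m > 1$.

```python
import math


def moran_dimension(ratios, tol=1e-12):
    """Solve sum(r_i ** d) = 1 for d by bisection (the Moran equation (2.2))."""
    if len(ratios) < 2 or any(not 0 < r < 1 for r in ratios):
        raise ValueError("need at least two ratios, each strictly between 0 and 1")

    def f(d):
        return sum(r ** d for r in ratios) - 1.0

    lo, hi = 0.0, 1.0
    while f(hi) > 0:          # f is decreasing, so widen until the root is bracketed
        hi *= 2
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if f(mid) > 0:
            lo = mid          # root lies to the right of mid
        else:
            hi = mid          # root lies to the left of (or at) mid
    return (lo + hi) / 2


# Example 2.5: three copies scaled by 1/2 and one copy scaled by 1/4.
d_example = moran_dimension([0.5, 0.5, 0.5, 0.25])
# Equal ratios should reproduce the similarity dimension of the Cantor set.
d_cantor = moran_dimension([1 / 3, 1 / 3])
print(d_example, d_cantor, math.log(2) / math.log(3))
```

For Example 2.5 the equation can also be solved by hand: substituting $x = \left(\frac{1}{2}\right)^d$ gives the quadratic $x^2 + 3x - 1 = 0$, which provides an independent check on the bisection result.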

2.1.3 Box-counting dimension

The box-counting dimension, also known as the Minkowski-Bouligand dimension [11], looks at the number of boxes or disks of a specified radius required to cover the area or perimeter of a shape. As the radius is decreased, the fractal dimension of the shape can be ascertained. The box-counting method is considered one of the easiest to implement [7, p.19] and is therefore a very practical definition.

Definition 2.6. For $F \subset \mathbb{R}^n$, $F \neq \emptyset$, let $N_r(F)$ be the smallest number of sets of diameter $r$ that can cover $F$. The lower and upper box-counting dimensions of $F$ are defined [6, p.38] as

$$\underline{\dim}_B F = \liminf_{r \to 0} \frac{\log N_r(F)}{-\log r} \tag{2.3}$$

and

$$\overline{\dim}_B F = \limsup_{r \to 0} \frac{\log N_r(F)}{-\log r} \tag{2.4}$$

If these are equal, their value is the box-counting dimension of $F$

$$\dim_B F = \lim_{r \to 0} \frac{\log N_r(F)}{-\log r} \tag{2.5}$$

To demonstrate how the box-counting dimension is calculated, I will use the common example of coastlines. This is an example of how the fractal dimension of a perimeter can be calculated. I will look at a fictional coastline that I have constructed. Figure 3 shows the number of boxes required to cover the coastline as $r$ is halved each time.

| $r$ | $N_r$ | $\frac{\log N_r}{-\log r}$ |
|---|---|---|
| 0.5 | 4 | 2 |
| 0.25 | 14 | 1.9037 |
| 0.125 | 35 | 1.7098 |
| 0.0625 | 71 | 1.5374 |
| 0.03125 | 139 | 1.4238 |

Figure 3: The first stages of the box-counting dimension for my fictional coastline and a table to show the calculations
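The last column of the table can be reproduced directly from the counts. This is a small Python sketch (not part of the project's MATLAB code); the dictionary `counts` just holds the hand-counted values from the table above.

```python
import math

# Hand-counted boxes N_r for each box size r, taken from the table above.
counts = {0.5: 4, 0.25: 14, 0.125: 35, 0.0625: 71, 0.03125: 139}

# Ratio log N_r / (-log r), the quantity whose limit as r -> 0 is the dimension.
ratios = {r: math.log(n) / -math.log(r) for r, n in counts.items()}

for r, ratio in ratios.items():
    print(f"r = {r:<8} N_r = {counts[r]:<4} ratio = {ratio:.4f}")
```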

From the table we see that as $r \to 0$ the ratio of the logarithms is decreasing, but it is unclear to which value it will converge. Performing box-counting by hand is incredibly arduous and, as $r$ decreases, errors become more likely when counting up $N(r)$. Consequently computer programs have been written to carry out the algorithm, including a MATLAB program for images and matrix structures. I will therefore continue my analysis of this coastline using MATLAB.

Box-counting in MATLAB

Designed and copyrighted by Frederic Moisy in 2008, boxcount.m [12] considers images as $h \times w \times 3$ matrices, where $h$ is the height of the image in pixels and $w$ is the width of the image in pixels. The third dimension of the matrix is 3 as we are considering each pixel of the image in RGB colour format i.e. three separate numbers relating to the proportions of red, green and blue making up the colour of the pixel. The code produces a plot of the log-log relationship between $r$ and $N(r)$ and a second plot of the gradient of this relationship. The accuracy of the program is, however, restricted by the quality of the image as it is unable to consider values of $r$ less than 1 pixel. It is able to give a rough interval for the estimate of the fractal dimension by calculating the average gradient of the log-log plot for small $r$ plus or minus one standard deviation.
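To make the idea behind such a program concrete, here is a minimal box-counting sketch in Python. It is not Moisy's boxcount.m and does not reproduce its interface: as a simplifying assumption the image is represented as a set of occupied (row, column) pixel coordinates rather than an RGB matrix, and the names `box_count` and `box_counting_slope` are hypothetical. The dimension estimate is taken as the least-squares slope of $\log N(r)$ against $-\log r$.

```python
import math


def box_count(pixels, size):
    """Number of size-by-size boxes containing at least one occupied pixel."""
    return len({(row // size, col // size) for row, col in pixels})


def box_counting_slope(pixels, sizes):
    """Least-squares slope of log N(r) against -log r over the given box sizes."""
    xs = [-math.log(s) for s in sizes]
    ys = [math.log(box_count(pixels, s)) for s in sizes]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    denominator = sum((x - mean_x) ** 2 for x in xs)
    return numerator / denominator


# Two test shapes with known dimension: a filled square and a straight line.
side = 64
square = {(r, c) for r in range(side) for c in range(side)}
diagonal = {(i, i) for i in range(side)}
sizes = [1, 2, 4, 8, 16, 32]

square_dim = box_counting_slope(square, sizes)
line_dim = box_counting_slope(diagonal, sizes)
print(square_dim, line_dim)
```

The two test shapes are chosen because their box counts are exact powers of the box size, so the slope should come out at 2 for the square and 1 for the line; a real coastline image would give a noisier log-log plot.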

In Figure 4 you can see my MATLAB results when analysing my fictional coastline Coastline.gif. The first plot shows a near linear relationship between $\log r$ and $\log N(r)$ (note that the axes are scaled logarithmically) with the second plot indicating that the fractal dimension lies between 1.2 and 1.6.

Figure 4: MATLAB analysis for my fictional coastline

The data MATLAB has collected is summarised at the bottom of the figure and allows us to build up a new table showing values of $r$ and $N(r)$:

| $r$ in pixels | $N(r)$ |
|---|---|
| 512 | 1 |
| 256 | 3 |
| 128 | 7 |
| 64 | 19 |
| 32 | 45 |
| 16 | 103 |
| 8 | 232 |
| 4 | 542 |
| 2 | 1374 |
| 1 | 3944 |

Table 1: Box-counting data for Coastline.gif

The fractal dimension of my coastline is estimated as $\dim_B = 1.2974 \pm 0.15462 = (1.14278, 1.45202)$ from MATLAB, which is consistent with what we can see from the second plot and with the trend I saw when calculating the data by hand.

Similar calculations have been carried out for the coast of Great Britain, as seen in

Figure 5, and have found that the box-counting dimension of the British coastline is

approximately 1.30 [13, p.58]. When this is compared to the coastline of Norway, which

has fractal dimension 1.52, it means that Norway's coastline is more jagged as its fractal

dimension is closer to 2, something which is easily understandable given all the fjords.

Figure 5: The first few stages of the box-counting algorithm for the British coastline http://en.wikipedia.org/wiki/File:Great_Britain_Box.svg

2.1.4 Hausdorff Dimension

The idea behind the Hausdorff dimension is very similar to that of the box-counting dimension except that the disks covering the set can have different radii. The disks form a $\delta$-cover, see Definition 2.7, which means they have a radius smaller than or equal to $\delta$. The Hausdorff dimension is defined with the help of the $s$-dimensional Hausdorff measure [6, p.29]. Note that here $|U| = \sup \{|x - y| : x, y \in U\}$ is the greatest distance between two elements in $U$, and can be thought of as the 'radius' of the set $U$.

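Definition 2.7. A finite or countable collection of subsets $\{U_i\}$ of $\mathbb{R}^n$ is called a $\delta$-cover of $F \subset \mathbb{R}^n$ if $|U_i| \leq \delta$ $\forall i$ and $F \subset \bigcup_{i=1}^{\infty} U_i$, i.e. the union of these subsets with 'radius' less than or equal to $\delta$ covers all of $F$. For $s \geq 0$ and $\delta > 0$ we define, as a first step towards the $s$-dimensional Hausdorff measure,

$$H_\delta^s(F) = \inf \left\{ \sum_{i=1}^{\infty} |U_i|^s : \{U_i\} \text{ is a } \delta\text{-cover of } F \right\} \tag{2.6}$$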

This infimum increases and approaches a limit as $\delta \searrow 0$. This is because the choice of $\delta$-covers is restricted as $\delta$ decreases, so the infimum is taken of a set containing a decreasing number of elements. This limit may equal $0$ or $\infty$. Now define

$$H^s(F) = \lim_{\delta \to 0} H_\delta^s(F) \tag{2.7}$$

as the $s$-dimensional Hausdorff measure of $F$.

$H^s(F)$ will be larger for smaller values of $s$, as, in the limit once $\delta$ is less than 1, the sum $\sum_{i=1}^{\infty} |U_i|^s$ will increase for smaller $s$ since each $|U_i| < \delta < 1$. It can be shown [7, p.22] that for all sets $F$ there exists a value for $s$, say $s'$, for which $H^s(F) = \infty$ if $s < s'$ and $H^s(F) = 0$ for $s > s'$. This leads to the definition of the Hausdorff dimension:

Definition 2.8. The Hausdorff dimension of $F$ is defined

$$\dim_H F = \inf \{s \geq 0 : H^s(F) = 0\} = \sup \{s \geq 0 : H^s(F) = \infty\} \tag{2.8}$$

2.2 Which dimension should we use?

Due to its more complex definition, the Hausdorff dimension of a shape is more difficult to calculate than the box-counting or similarity dimensions. However, these dimensions are the same for all strictly self-similar fractals [14], such as the Cantor set, so for these fractals we are able to choose whichever definition is most convenient. In cases where the Hausdorff dimension and box-counting dimension are not equal the following inequality holds [7, p.25]:

$$\dim_H \leq \underline{\dim}_B \leq \overline{\dim}_B$$

So the box-counting dimension can be used as an upper bound for the Hausdorff dimension of a shape. One example where the dimensions differ is the set of rational numbers $\mathbb{Q}$ on $[0, 1]$ which has $\dim_H = 0$ and $\dim_B = 1$ [5, p.219].

3 Constructing fractals

As I have stated previously, a regular fractal is one which is strictly self-similar. This means that, by zooming into any area of the fractal, you will find an identical smaller scaled version of the whole or some part of the whole. This type of fractal can be easily produced using deterministic rules and iterating them ad infinitum. Sometimes called symmetrical or deterministic fractals [13, p.50], these are probably the set of fractals which most people think of when they hear the word 'fractal' and include the Cantor Set which we saw earlier in Example 2.3 on Page 7. In this section I will consider a method of producing regular fractals with deterministic rules, as well as looking at the construction of a set of fractals, produced with circles, which are not strictly self-similar.

3.1 Iterated Function Systems

Iterated function systems, or IFS, were popularised by Barnsley in his book 'Fractals Everywhere' [15] and generate fractals by iterating a collection of transformations. The resulting fractals are always strictly self-similar. Both the Cantor set and the Sierpiński triangle, which follows in Section 4.1, can be generated using this method. To define an iterated function system, it is necessary to first understand contraction mappings and metric spaces.

Definition 3.1. A mapping $T : X \to X$ is called a contraction mapping on $X$ if there exists a $c$ with $0 < c < 1$ such that $|T(x) - T(y)| \leq c\,|x - y|$ for all $x, y \in X$. If $|T(x) - T(y)| = c\,|x - y|$ then $T$ maps sets to geometrically similar (smaller) sets and $T$ is called a similarity.

Definition 3.2. A metric $d$ defines a 'distance' in a space $X$. It is a function $d : X \times X \to \mathbb{R}$ and must satisfy the following conditions for all $x, y, z \in X$:

1. $d(x, y) \geq 0$ and $d(x, y) = 0$ if and only if $x = y$ (Non-negativity)

2. $d(x, y) = d(y, x)$ (Symmetry)

3. $d(x, z) \leq d(x, y) + d(y, z)$ (Triangle Inequality)

Example 3.3. The simplest metric you can define on any non-empty set is the discrete metric

$$d_D(x, y) = \begin{cases} 0 & x = y \\ 1 & x \neq y \end{cases}$$

To check that this defines a metric we need to check the 3 properties stated in Definition 3.2:

1. $d_D(x, y) \in \{0, 1\}$ thus $d_D(x, y) \geq 0$. In the case when $x = y$, $d_D(x, y) = 0$ as required and $d_D(x, y) = 1 \neq 0$ otherwise.

2. If $x = y$ then $d_D(x, y) = 0 = d_D(y, x)$, otherwise $d_D(x, y) = 1 = d_D(y, x)$ for $x \neq y$.

3. Here there are a few cases to consider:

(a) if $x = z$ then $y$ can either be equal to both $x$ and $z$, giving $d_D(x, z) = 0 \leq d_D(x, y) + d_D(y, z) = 0 + 0$, or $y$ can take a different value, giving $d_D(x, z) = 0 \leq d_D(x, y) + d_D(y, z) = 1 + 1 = 2$.

(b) if $x \neq z$ then $y$ can either be equal to one of $x$ and $z$, giving $d_D(x, z) = 1 \leq d_D(x, y) + d_D(y, z) = 1 + 0$, or $y$ can take a different value to both $x$ and $z$, giving $d_D(x, z) = 1 \leq d_D(x, y) + d_D(y, z) = 1 + 1 = 2$.

Thus the discrete metric, $d_D$, is a well defined metric.

Definition 3.4. A metric space $(X, d)$ is the pairing of a space $X$ with a distance measure/metric $d$. A metric space is said to be complete if every Cauchy sequence of terms in $X$ converges to a limit in $X$ with respect to the metric $d$ [15, p.18], where a Cauchy sequence is a sequence $(x_i)$ such that for any $\varepsilon > 0$ there exists some $n > 0$ with $d(x_i, x_j) < \varepsilon$ for all $i, j > n$.

Example 3.5. Is the space $X$ coupled with the discrete metric $(X, d_D)$ complete? Firstly let $(x_i)$ be a Cauchy sequence within this space, then for all $\varepsilon > 0$ there exists some $n$ with $d_D(x_i, x_j) < \varepsilon$ for all $i, j > n$. Consider $\varepsilon = \frac{1}{2}$. Under the discrete metric $d_D(x_i, x_j) < \frac{1}{2}$ if and only if $x_i = x_j$ and hence, since $(x_i)$ is a Cauchy sequence, there exists some $n$ after which $x_i = x_j$ for all $i, j > n$. Thus every Cauchy sequence with respect to the discrete metric $d_D$ is a constant sequence for large $n$, which of course converges to an element of $X$. The metric space $(X, d_D)$ is therefore complete.

With this understanding of contraction mappings and metric spaces we can now introduce

iterated function systems as follows:

Definition 3.6. An iterated function system (IFS) is a finite set of contraction mappings $\{T_i : X \to X \mid i = 1, .., n\}$, $n \in \mathbb{N}$, on a complete metric space $(X, d)$. A subset $F \subset X$ is invariant for the mappings $\{T_i\}$ if $F = \bigcup_{i=1}^{n} T_i(F)$. These invariant sets are often fractals.

Example 3.7. One simple example is the Cantor set as described in Example 2.3. Its mappings $T_1, T_2 : \mathbb{R} \to \mathbb{R}$ are given by $T_1(x) = \frac{1}{3}x$ and $T_2(x) = \frac{1}{3}x + \frac{2}{3}$. Thus $T_1(F)$ and $T_2(F)$ are just the left and right sections of $F$ and $F = T_1(F) \cup T_2(F)$. Hence $F$, the Cantor set, is invariant for the iterated function system $\{T_1, T_2\}$.

If the mappings $\{T_i\}$ are affine maps, they can also be written in matrix form. For example in two dimensions:

$$T_i(x) = T_i \begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = \begin{pmatrix} a_i & b_i \\ c_i & d_i \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \end{pmatrix} + \begin{pmatrix} e_i \\ f_i \end{pmatrix} \tag{3.1}$$

A collection of such mappings is then often conveyed as a table of the values $\{a_i\}$, $\{b_i\}$ etc. as seen in the next example.

Example 3.8. The table for the mappings which create the Sierpiński triangle with side length 1, see Section 4.1, is as follows [15, p.86]:

| $T$ | $a$ | $b$ | $c$ | $d$ | $e$ | $f$ |
|---|---|---|---|---|---|---|
| 1 | 0.5 | 0 | 0 | 0.5 | 0 | 0 |
| 2 | 0.5 | 0 | 0 | 0.5 | 0.5 | 0 |
| 3 | 0.5 | 0 | 0 | 0.5 | 0.25 | $\frac{\sqrt{3}}{4}$ |

Table 2: IFS for the Sierpiński triangle

In this case the matrix $\begin{pmatrix} a_i & b_i \\ c_i & d_i \end{pmatrix}$ is unchanged for each $T_i$ and represents scaling by a factor of 0.5, and the translations $\begin{pmatrix} e_i \\ f_i \end{pmatrix}$ change to position the three scaled copies of the triangle at each vertex. In Figure 6 you can see the locations that the larger triangle is mapped to by each $T_i$.

Figure 6: The 3 mappings for the Sierpiński Triangle IFS

As well as drawing mathematical objects, IFS can be used to model natural objects which

behave in a fractal manner such as ferns and trees [15, p.87].
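To show how a table of IFS coefficients is used in practice, here is a minimal Python sketch (not the sierpinski.m program from the appendix). It applies the three affine maps of the Sierpiński triangle, with coefficients $(a, b, c, d, e, f)$ as in equation (3.1), repeatedly to the three corner points of the unit-side triangle. The names `SIERPINSKI_IFS`, `apply_map` and `iterate_ifs` are my own, and starting from the corners is just one convenient choice of initial set.

```python
import math

# Coefficients (a, b, c, d, e, f) of each affine map, as in equation (3.1).
SIERPINSKI_IFS = [
    (0.5, 0.0, 0.0, 0.5, 0.0, 0.0),
    (0.5, 0.0, 0.0, 0.5, 0.5, 0.0),
    (0.5, 0.0, 0.0, 0.5, 0.25, math.sqrt(3) / 4),
]


def apply_map(coeffs, point):
    """Apply one affine map T(x) = A x + t to a point (x1, x2)."""
    a, b, c, d, e, f = coeffs
    x1, x2 = point
    return (a * x1 + b * x2 + e, c * x1 + d * x2 + f)


def iterate_ifs(maps, points, stages):
    """Replace the point set by the union of its images under every map, `stages` times."""
    for _ in range(stages):
        points = {apply_map(m, p) for m in maps for p in points}
    return points


# Corners of the equilateral triangle with side length 1.
corners = {(0.0, 0.0), (1.0, 0.0), (0.5, math.sqrt(3) / 2)}
stage3 = iterate_ifs(SIERPINSKI_IFS, corners, 3)
print(len(stage3), "points after 3 stages")
```

Plotting the resulting points for a larger number of stages gives an increasingly detailed picture of the invariant set; the point set is kept small here so the sketch runs instantly.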

3.2 Apollonian Gaskets

Apollonian gaskets are produced by removing circles of varying sizes from an area to

leave a gasket of points which have not been removed.

Below, in Figure 7, are a few

examples of Apollonian gaskets, which give an idea of the style of fractal produced. In

this section we'll consider their construction and the geometry behind it, as well as some

of the properties of Apollonian gaskets. The concepts are based on a number of journal

articles [16, 17, 18] and a chapter in Mandelbrot's book `The Fractal Geometry of Nature'

[1].

Figure 7: Three examples of Apollonian gaskets from http://en.wikipedia.org/wiki/Apollonian_gasket

To better understand this method of producing fractals, we'll need to learn a bit about the

geometry of circles, in particular, the relationship between the radii of mutually tangent

circles.

3.2.1 Soddy circles and Descartes' circle theorem

A lot of information about the geometry of circles can be found in 'A Treatise on the Circle and the Sphere' [19, pp.31-43]. This book was written in 1916 before the main theorem we will look at in this section, Theorem 3.11, was widely recognised but contains important definitions for the relationships between circles, such as tangency, and their characteristics.

Definition 3.9. Two circles with centres $(x_i, y_i)$ and radii $r_i$, for $i = 1, 2$, are mutually tangent if they intersect at a single point. A circle is internally tangent to another if it contains the other and externally tangent to it otherwise.

In Figure 8 we see some examples of both external and internal tangency of the white

circle to the black circle, on the left and right respectively.

Figure 8: Tangent circles http://mathworld.wolfram.com/TangentCircles.html

This definition prescribes a specific relationship between the centres and radii of the two circles, namely

$$(x_1 - x_2)^2 + (y_1 - y_2)^2 = (r_1 \pm r_2)^2 \tag{3.2}$$

where '+' refers to externally tangent circles and '−' to internally tangent ones. This relationship means that the distance between the centres of the two circles must either be equal to the sum or the difference of the two radii. As seen in Figure 9, tangent circles share a common tangent and the relationship follows directly from the diagram with the help of Pythagoras' Theorem.

Figure 9: The relationship between the radii and centres of tangent circles

Consider the following problem: You are given three mutually tangent circles $A$, $B$ and $C$ with radii $a$, $b$ and $c$ respectively and asked to draw a fourth circle which is also tangent to all three circles. This is a specific form of Apollonius' problem, where in the more generalised problem the three initial circles are not required to be mutually tangent.

In Figure 10 we can see two possible solutions to the problem, drawn in red. The larger red circle is internally tangent to each of the three initial circles and is said to be circumscribed to the circles $A$, $B$ and $C$. The smaller red circle is externally tangent to the initial circles and is said to be inscribed in the circles $A$, $B$ and $C$.

Figure 10: Two solutions to Apollonius' problem given the three initial black circles

As it turns out these are the only two solutions to the problem and are called the inner and outer Soddy circles [20]. The radii for these two circles can then be determined using a formula from Descartes. Before stating the formula, here is a definition which will help us to rearrange the formula and thus simplify the result.

Definition 3.10. The curvature (or bend) of a circle, $k$, is defined as plus or minus the reciprocal of its radius i.e. $k = \pm\frac{1}{r}$. The curvature of a circle is positive if it is externally tangent to all other circles and it is negative if it is internally tangent to all other circles. A straight line has curvature 0 as it can be thought of as a circle with infinite radius.

The statement of the theorem below is based upon its appearance in two articles `Beyond

the Descartes Circle Theorem' [17] and `A Tisket, a Tasket, an Apollonian Gasket' [16].

Figure 11 gives an example of four mutually tangent circles, to which you could apply

the theorem.

Figure 11: Four mutually tangent circles

Theorem 3.11. Descartes' Circle Theorem

If four mutually tangent circles have radii $r_i$, for $i = 1, .., 4$, then

$$\frac{1}{r_1^2} + \frac{1}{r_2^2} + \frac{1}{r_3^2} + \frac{1}{r_4^2} = \frac{1}{2} \left( \frac{1}{r_1} + \frac{1}{r_2} + \frac{1}{r_3} + \frac{1}{r_4} \right)^2 \tag{3.3}$$

Alternatively, considering the curvatures of the four circles, $k_i = \frac{1}{r_i}$ for $i = 1, .., 4$, the relationship

$$k_1^2 + k_2^2 + k_3^2 + k_4^2 = \frac{1}{2} (k_1 + k_2 + k_3 + k_4)^2 \tag{3.4}$$

holds. This can be rearranged to give

$$k_4 = k_1 + k_2 + k_3 \pm 2\sqrt{k_1 k_2 + k_2 k_3 + k_3 k_1} \tag{3.5}$$

where the '+' refers to the inner Soddy circle and the '-' refers to the outer one.
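Equation (3.5) is easy to evaluate numerically. The sketch below is in Python (not the project's MATLAB) and uses a function name of my own, `soddy_curvatures`; it assumes the three given circles are mutually externally tangent, so that their curvatures are positive. The example takes three circles of radius 1 and also checks the results against the symmetric form (3.4).

```python
import math


def soddy_curvatures(k1, k2, k3):
    """Return (inner, outer) Soddy curvatures from equation (3.5)."""
    root = math.sqrt(k1 * k2 + k2 * k3 + k3 * k1)
    total = k1 + k2 + k3
    return total + 2 * root, total - 2 * root


def descartes_residual(k1, k2, k3, k4):
    """Left side minus right side of (3.4); zero for four mutually tangent circles."""
    return k1**2 + k2**2 + k3**2 + k4**2 - 0.5 * (k1 + k2 + k3 + k4) ** 2


# Three mutually tangent circles of radius 1 (curvature 1 each).
inner, outer = soddy_curvatures(1.0, 1.0, 1.0)
print("inner radius:", 1 / inner)        # small circle in the central gap
print("outer radius:", 1 / abs(outer))   # negative curvature: it encloses the others
print(descartes_residual(1.0, 1.0, 1.0, inner), descartes_residual(1.0, 1.0, 1.0, outer))
```

For unit circles the centres form an equilateral triangle of side 2, whose circumradius is $\frac{2}{\sqrt{3}}$, so the inner and outer radii should be $\frac{2}{\sqrt{3}} - 1$ and $\frac{2}{\sqrt{3}} + 1$ respectively, which gives a geometric check on the formula.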

Information regarding the proof of this theorem can be found in the article `On a Theorem

in Geometry' [21] by Daniel Pedoe.


The circles, whose radii we can calculate using this theorem, were named after Soddy

after he rediscovered Descartes' theorem in 1936 and was so inspired by the beauty of

Apollonian circles that he published a poetic version of the theorem in the journal Nature

[22]. His poem was called `The Kiss Precise' and it reads as follows:

Four circles to the kissing come

The smaller the benter.

The bend is just the inverse of

The distance from the center.

Though their intrigue left Euclid dumb,

There's now no need for rule of thumb.

Since zero bend's a dead straight line,

And concave bends have minus sign,

The sum of the squares of all four bends

Is half the square of their sum.

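Soddy is also attributed with the reformulation of Descartes' theorem [18] which states that

$$r_4 = \frac{r_1 r_2 r_3}{r_1 r_2 + r_2 r_3 + r_3 r_1 \pm 2\sqrt{r_1 r_2 r_3 (r_1 + r_2 + r_3)}} \tag{3.6}$$

Proof. Using the relationship $k_i = \frac{1}{r_i}$ we will ensure that this formulation agrees with (3.5) of Descartes' theorem:

$$r_4 = \frac{r_1 r_2 r_3}{r_1 r_2 + r_2 r_3 + r_3 r_1 \pm 2\sqrt{r_1 r_2 r_3 (r_1 + r_2 + r_3)}}$$

For $r_i \neq 0$, $\forall i$, we have

$$\begin{aligned}
\frac{1}{k_4} &= \frac{\frac{1}{k_1 k_2 k_3}}{\frac{1}{k_1 k_2} + \frac{1}{k_2 k_3} + \frac{1}{k_3 k_1} \pm 2\sqrt{\frac{1}{k_1 k_2 k_3} \left( \frac{1}{k_1} + \frac{1}{k_2} + \frac{1}{k_3} \right)}} \\
k_4 &= \left( \frac{1}{k_1 k_2} + \frac{1}{k_2 k_3} + \frac{1}{k_3 k_1} \pm 2\sqrt{\frac{1}{k_1 k_2 k_3} \left( \frac{1}{k_1} + \frac{1}{k_2} + \frac{1}{k_3} \right)} \right) \cdot k_1 k_2 k_3 \\
k_4 &= k_3 + k_1 + k_2 \pm 2\sqrt{\frac{1}{k_1 k_2 k_3} \left( \frac{1}{k_1} + \frac{1}{k_2} + \frac{1}{k_3} \right)} \cdot k_1 k_2 k_3 \\
k_4 &= k_3 + k_1 + k_2 \pm 2\sqrt{k_2 k_3 + k_1 k_3 + k_1 k_2}
\end{aligned}$$

This is equivalent to (3.5).

With the radius of the fourth circle determined by (3.6) and the relationship between the centres and radii of mutually tangent circles given in (3.2) we can calculate the centre of the fourth circle $(x_4, y_4)$ and draw it.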

3.2.2 Construction of Apollonian gaskets

Apollonian gaskets can be constructed in three ways [23]:

the Mandelbrot algorithm,

the Soddy algorithm and an IFS algorithm created by Alexei Kravchenko and Dmitriy

Mekhontsev.

I am going to outline the Soddy algorithm as it uses what I have just

explained about mutually tangent circles, whereas Mandelbrot's algorithm relies on circle

inversion.

An Apollonian gasket starts at stage 0 with three circles $C_1$, $C_2$ and $C_3$, which are mutually tangent. The inner and outer Soddy circles are then added to the diagram and denoted $C_4$ and $C_5$ respectively. We now have 5 circles and can begin to take different triplets of circles, say $C_1$, $C_2$ and $C_4$, and look at the two Soddy circles for these three. This will give us one new circle $C_6$, corresponding to the inner Soddy circle, and one circle which we already have, in this case $C_3$. This procedure is carried out with every triplet of circles, adding 6 circles in total. This is then continued at each stage for all triplets of circles.

One can think of the process of adding inner Soddy circles as filling each approximately triangular curved void with the largest possible circle at each stage of the iteration. By adding a circle, three new triangular shaped voids are created, thus at each stage of the iteration $n > 0$ there are $2 \cdot 3^{n-1}$ circles added, since only two circles are added at stage 1. This gives a total number of circles of

$$3 + 2 + \sum_{i=2}^{n} 2 \cdot 3^{i-1} = 3 + 2 + 2 \left( \frac{1 - 3^n}{1 - 3} - 1 \right) = 2 + 3^n$$

after $n$ stages. This algorithm can be easily programmed on the computer using the formulae for the radii and centres of mutually tangent circles discussed in the previous section, whereas a computer has no intuitive way to fit the largest possible circle in a triangular void.

In Figure 12 we see an Apollonian gasket generated from three circles of equal radius, with the circles $C_i$ labelled for stages 0 (shaded pink), 1 (shaded purple) and 2 (shaded green).

Figure 12:

Apollonian gasket generated from 3 circles of equal radius (adapted from

http://en.wikipedia.org/wiki/File:Apollonian_gasket.svg)


3.2.3 Properties of Apollonian gaskets

Apollonian gaskets are a class of fractals which are not strictly self-similar [1, p.172].

Therefore to work out the fractal dimension of an Apollonian gasket we cannot use the

similarity dimension or Moran equation.

In this case the box-counting dimension is a practical method to estimate the fractal dimension and, for a more accurate result, I will once again use the MATLAB code boxcount.m, as introduced in Section 2.1.3 on Page 9. I will consider the fractal dimension of the Apollonian gasket constructed from three circles of equal radius as seen in Figure 12. MATLAB produces the plots seen in Figure 13 and estimates the fractal dimension to be $\dim_B = 1.4328 \pm 0.27301$, i.e. the interval $(1.15979, 1.70581)$. This interval is fairly large and does not really give us any information about the Apollonian gasket, other than confirming that it does indeed behave in a fractal manner. At present the exact dimension of this Apollonian gasket is still unknown but in 1973 it was proved that $1.300197 < \dim < 1.314534$ [24] and more recently it has been shown that $\dim \approx 1.305688$ [25]. Both of these estimates fall within our estimated interval.

Figure 13: MATLAB output for the Apollonian Gasket in Figure 12

One very interesting fact about the relationship between the bends (or curvatures) of Apollonian gasket circles is that, if the first four circles have integer bends, then so does every other circle in the gasket [16]. In Figure 14 we see an example where the bends of the first four circles are -1 (the outer circumscribing circle), 2, 2 and 3. All other circles are marked with their integer bends and naturally the smaller the circle the greater the bend.


Figure 14: The bends of an Apollonian gasket with initial bends {-1,2,2,3} from http://en.wikipedia.org/wiki/File:ApollonianGasket-1_2_2_3-Labels.png
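The integer-bend property can be illustrated with a short Python sketch (Python is used here for illustration, not the MATLAB of the appendices). It relies on a standard consequence of Descartes' theorem: if four mutually tangent circles have bends $k_1, k_2, k_3, k_4$, the other circle tangent to the first three has bend $2(k_1 + k_2 + k_3) - k_4$, so integers only ever produce integers. The function name `gasket_bends` is hypothetical.

```python
def gasket_bends(quad, depth):
    """Collect the bends produced from a quadruple of mutually tangent
    circles by repeatedly swapping one circle for the other solution of
    Descartes' theorem: k' = 2 * (sum of the other three) - k."""
    bends = list(quad)

    def recurse(q, skip, level):
        if level == 0:
            return
        for i in range(4):
            if i == skip:
                continue  # swapping this circle back would recreate its parent
            new = 2 * (sum(q) - q[i]) - q[i]
            bends.append(new)
            recurse(q[:i] + (new,) + q[i + 1:], i, level - 1)

    recurse(tuple(quad), None, depth)
    return bends

# Starting quadruple from Figure 14: every generated bend stays an integer.
print(sorted(gasket_bends((-1, 2, 2, 3), 1)))
```

With integer inputs the update uses only addition and multiplication by 2, so no rounding is involved at any depth.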

As far as applications go, randomised (see Section 5 on Page 26) Apollonian gaskets can

be used to model foams or powders [16].

4 Examples of regular fractals and their properties

In order to better understand fractals I will investigate some two-dimensional fractals: the Sierpiński triangle and the lesser known T-Square fractal. This will give me the chance to analyse fractal dimensions myself and study the behaviour of these particular fractals.

4.1 The Sierpiński Triangle

Figure 15: The formation of the Sierpiński triangle


The Sierpiński triangle, also known as the Sierpiński gasket, is created through an iterative algorithm. Starting with an equilateral triangle, the midpoints of each side are found and connected to form an inverted smaller triangle, which is then removed. The same procedure is then applied to each of the remaining triangles at each stage. The Sierpiński triangle is defined as the limit of this process and is strictly self-similar since each of the three sub-triangles is an exact half-scaled replica of the whole.

Figure 16: The Sierpiński Triangle, created from my MATLAB code sierpinski.m (see Appendix A.1)

Properties of the Sierpiński Triangle:

• It has zero area since each iteration $i$ has the area $A \cdot \left(\frac{3}{4}\right)^i$, assuming an area of $A$ at stage 0, and $\lim_{n \to \infty} A \cdot \left(\frac{3}{4}\right)^n = 0$.

• It has an infinite perimeter since each iteration $i$ has perimeter $P \cdot \left(\frac{3}{2}\right)^i$, assuming an initial perimeter of $P$, and $P \cdot \left(\frac{3}{2}\right)^n$ tends to infinity as $n \to \infty$.

• It has similarity dimension $\dim_S = \frac{\log 3}{\log 2} \approx 1.585$ because it is the union of three copies of itself scaled by one half. The dimension falling between 1 and 2 is appropriate since the Sierpiński Triangle can be seen as being `larger' than a 1-dimensional object, since it has infinite length, and `smaller' than a 2-dimensional object, since it has zero area.

• The IFS for the Sierpiński Triangle was seen in Example 3.8 on Page 14.

Another interesting property of the Sierpiński Triangle is its link to Pascal's Triangle [26]. It can be produced by taking Pascal's triangle modulo 2 and then placing a triangle over any entries which are 1 (mod 2), as demonstrated in Figure 17 on page 24.


Figure 17: How a Stage 2 Sierpiński Triangle can be created from Pascal's Triangle with 8 rows.
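The link to Pascal's triangle is easy to reproduce. Below is a minimal Python sketch (for illustration only, with hypothetical function names `pascal_mod2` and `render`) which builds the rows modulo 2 and prints a marker wherever the entry is odd.

```python
def pascal_mod2(rows):
    """Return the first `rows` rows of Pascal's triangle reduced modulo 2."""
    row = [1]
    result = []
    for _ in range(rows):
        result.append(row)
        # each inner entry is the sum of the two entries above it, mod 2
        row = [1] + [(row[i] + row[i + 1]) % 2 for i in range(len(row) - 1)] + [1]
    return result

def render(rows):
    """Text picture: '*' for odd entries, blank for even ones."""
    lines = []
    for n, row in enumerate(pascal_mod2(rows)):
        padding = " " * (rows - n - 1)
        lines.append((padding + " ".join("*" if v else " " for v in row)).rstrip())
    return "\n".join(lines)

print(render(8))
```

Only parities are stored, so the entries never grow and the same loop works for any number of rows.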

4.2 The T-Square Fractal

Figure 18: Construction of the T-Square fractal

The T-Square fractal is generated from a square. At each outward pointing corner a new square, scaled by a factor of $\frac{1}{2}$, is then overlaid, centred on the original corner. This process is then repeated at each stage for all outward pointing corners.

Figure 19: The T-Square Fractal after 5 iterations from my MATLAB code

tsquare.m

(see Appendix A.2)

The T-Square fractal is another example of a fractal with an infinite perimeter bounding a finite area, with the white area within the square tending to zero after many iterations. But what is its dimension? There are two aspects of this shape for which we can analyse the dimension: the enclosed area and the perimeter.

Looking at the enclosed area, $T_A$, we see that the area expands to fill the unit square, assuming the initial square at stage 0 has sides of length $\frac{1}{2}$. Thus when calculating the box-counting dimension of the area it behaves just as the unit square and each sub-square contains a part of the area i.e. $N_r(T_A) = r^{-2}$ where $r$ is the length of the sides of each sub-square. This behaviour can be seen in Figure 20.

Figure 20: Box-counting for the T-Square fractal

We therefore have a box-counting dimension

\[ \dim_B(T_A) = \lim_{r \to 0} \frac{\log N_r(T_A)}{-\log r} = \lim_{r \to 0} \frac{\log r^{-2}}{-\log r} = 2 \tag{4.1} \]

Looking at the boundary, $T_P$, we can use the definition of the similarity dimension (Definition 2.2) to find the fractal dimension.


Figure 21: Construction of self-similar corners in the T-Square fractal

In the above image we see that, at each new stage, each corner (shown in red) is transformed into three smaller corners (shown in green) which have been scaled by a factor of $\frac{1}{2}$. Thus, when the iterative process is carried out an infinite number of times, each corner will contain 3 smaller scaled replicas of itself. Therefore the similarity dimension of the T-Square fractal boundary is $\dim_S(T_P) = -\frac{\ln 3}{\ln \frac{1}{2}} = \frac{\ln 3}{\ln 2} \approx 1.585$. This is the same as the dimension of the Sierpiński Triangle, which means that the perimeter of the T-Square fractal is as complex and fills 2-dimensional space in the same way as the Sierpiński Triangle.

5 Randomised Fractals

So far I have only discussed deterministic fractals and their properties; however, these have very few applications in the real world as no natural/real world processes produce such perfectly symmetrical, predictable shapes. Benoît Mandelbrot himself commented

on the asymmetry of nature, observing that clouds are not spheres, mountains are not

cones, coastlines are not circles [1, p.1]. For regular fractals, once you know the algorithm

for producing them, anyone can construct them and get an identical result. Mandelbrot

therefore described regular fractals as nothing but appetizers [8, p.139].

I am going

to look at another set of fractals, randomised fractals, which provide a more realistic

way of modelling natural objects [5, p.459] and can produce a variety of structures due

to their random nature. Random fractals are a combination of deterministic generating

rules (such as IFS) and elements of random behaviour.

There are many applications for such randomised structures in the modelling of natural objects for experiments or even computer games, for example modelling the structure of the bronchioles in the lungs [13, p.51] or generating coastlines, trees and clouds for fictional landscapes in computer games.

In this section some examples of randomisation will be introduced along with their applications. The last part of the section focuses on a random algorithm which astoundingly

produces a regular fractal and how this is possible.


5.1 Randomising the Sierpiński Gasket

Here is an example of how a deterministic algorithm, which we've already seen, can be randomised to produce random fractals. Previously, the Sierpiński gasket has been constructed by finding the midpoint of each side of a triangle, dividing it into 4 equilateral triangles and removing the triangle in the centre. One way we can randomise the creation of this structure is to take a random point along each side instead of the midpoint [5, p.459]. We can then divide the triangle into four irregular triangles and remove the centre triangle as usual. This procedure requires three random numbers $r_1, r_2, r_3 \in (0, 1)$ for each triangle at each stage and then the points along the triangle edges are calculated as

\[ (x_{ij}, y_{ij}) = (r_i \cdot x_i + (1 - r_i) \cdot x_j,\; r_i \cdot y_i + (1 - r_i) \cdot y_j) \]

for $i, j \in \{1, 2, 3\}$.

This produces some rather nice images but does not really have any practical application

other than to demonstrate what is meant by a randomised fractal.

Figure 22: Two examples of randomised Sierpiński gaskets with 3 and 4 iterations respectively, created from my MATLAB code sierpinskiR.m (see Appendix A.3)
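The randomised subdivision can be sketched in a few lines of Python (used here for illustration; this is not the sierpinskiR.m code from the appendix, and the names `split_triangle` and `random_gasket` are hypothetical). Each edge receives its own random number, and the point on the edge is the corresponding convex combination of the two end vertices.

```python
import random

def split_triangle(triangle, rng):
    """Split one triangle at a random point on each edge and return the
    three corner triangles (the central one is discarded)."""
    p1, p2, p3 = triangle

    def point_between(p, q):
        r = rng.random()  # pseudorandom number in [0, 1)
        return (r * p[0] + (1 - r) * q[0], r * p[1] + (1 - r) * q[1])

    m12 = point_between(p1, p2)
    m23 = point_between(p2, p3)
    m31 = point_between(p3, p1)
    return [(p1, m12, m31), (m12, p2, m23), (m31, m23, p3)]

def random_gasket(triangle, stages, seed=0):
    """Apply the random split to every remaining triangle `stages` times."""
    rng = random.Random(seed)
    triangles = [triangle]
    for _ in range(stages):
        triangles = [t for parent in triangles for t in split_triangle(parent, rng)]
    return triangles

start = ((0.0, 0.0), (1.0, 0.0), (0.5, 3 ** 0.5 / 2))
print(len(random_gasket(start, 3)))
```

Fixing the seed makes a particular random gasket reproducible, which is convenient when comparing plots at different numbers of stages.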

5.2 The Koch curve and coastlines

The Koch curve is another example of a regular fractal which can be randomised. However, its randomised form has many uses in modelling irregular boundaries, such as coastlines and clouds. Particularly in computer games it is useful to be able to create complex

irregular structures, which require very little stored information for construction; the IFS

and instructions for randomisation will suffice. I will now introduce both the standard

and randomised Koch curves and how they can be constructed.

5.2.1 The standard Koch curve

The standard Koch curve is a regular fractal produced by an iterative algorithm, starting

from a straight line segment. At each stage all line segments from the previous stage are

divided into three equal pieces. An equilateral triangle is then drawn on with the middle

third as its base, pointing outwards. Finally, the base of the triangle (the middle segment

of the original line) is removed. In Figure 23 we can see the first 3 stages of the Koch

curve and in Figure 24 after many iterations.


Figure 23: The first 3 stages of the Koch curve, created from my MATLAB code koch.m (see Appendix A.4)

In order to program this algorithm in MATLAB, it is important to consider the coordinates of the points that are introduced for each line segment at each stage. Consider two points $A = (a_1, a_2)$ and $B = (b_1, b_2)$, two arbitrary points connected by a straight line at stage $i - 1$ of the curve. In the next stage of the iteration 3 new points will need to be introduced: 2 separating the line segment into 3 pieces, $C = (c_1, c_2)$ and $D = (d_1, d_2)$, and 1 marking the top of the equilateral triangle, $E = (e_1, e_2)$. Using the properties of straight lines and the angles of an equilateral triangle the coordinates can be worked out as follows:

\begin{align*}
C &= A + \frac{1}{3}(B - A) = (a_1, a_2) + \frac{1}{3}\left((b_1, b_2) - (a_1, a_2)\right) = \left(\frac{2a_1 + b_1}{3}, \frac{2a_2 + b_2}{3}\right) \\
D &= A + \frac{2}{3}(B - A) = (a_1, a_2) + \frac{2}{3}\left((b_1, b_2) - (a_1, a_2)\right) = \left(\frac{a_1 + 2b_1}{3}, \frac{a_2 + 2b_2}{3}\right) \\
E &= \left(\frac{1}{2}(a_1 + b_1) - \frac{\sqrt{3}}{2}(d_2 - c_2),\; \frac{1}{2}(a_2 + b_2) + \frac{\sqrt{3}}{2}(d_1 - c_1)\right)
\end{align*}
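As a check on the coordinates, here is a minimal Python sketch (for illustration; it is not the koch.m code from the appendix and the names `koch_points` and `koch_curve` are hypothetical). It assumes the apex $E$ sits above the midpoint of $AB$, offset perpendicular to the segment by the height of an equilateral triangle with base $CD$, on the left-hand side when travelling from $A$ to $B$.

```python
import math

def koch_points(A, B):
    """Return the three new points C, E, D for the segment from A to B."""
    a1, a2 = A
    b1, b2 = B
    C = ((2 * a1 + b1) / 3, (2 * a2 + b2) / 3)
    D = ((a1 + 2 * b1) / 3, (a2 + 2 * b2) / 3)
    # apex: midpoint of AB shifted perpendicular to CD by sqrt(3)/2 * |CD|
    h = math.sqrt(3) / 2
    E = ((a1 + b1) / 2 - h * (D[1] - C[1]), (a2 + b2) / 2 + h * (D[0] - C[0]))
    return C, E, D

def koch_curve(points, stages):
    """Replace every segment by four shorter ones, `stages` times."""
    for _ in range(stages):
        refined = [points[0]]
        for A, B in zip(points, points[1:]):
            C, E, D = koch_points(A, B)
            refined += [C, E, D, B]
        points = refined
    return points

print(koch_points((0.0, 0.0), (1.0, 0.0)))
print(len(koch_curve([(0.0, 0.0), (1.0, 0.0)], 3)))
```

For the unit segment on the x-axis the apex comes out at $(\frac{1}{2}, \frac{\sqrt{3}}{6})$, and after $n$ stages the list holds $4^n + 1$ points.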

If this algorithm is carried out ad infinitum we have a Koch curve, which has infinite length. The Koch curve is continuous everywhere but differentiable nowhere [27] since there are no breaks in the curve but there are also no smooth lines at any point. In Figure 24 we see an approximation to the Koch curve, after 10 iterations, as MATLAB cannot plot infinite detail.

Figure 24: The Koch Curve after 10 iterations, created from my MATLAB code koch.m

As a regular fractal we can also define an iterated function system which summarises the algorithm (as defined in Section 3.1). The table below shows the four transformations required to create the four smaller line segments from a line segment at each stage.

T     a      b        c        d      e      f
1     1/3    0        0        1/3    0      0
2     1/6    -√3/6    √3/6     1/6    1/3    0
3     1/6    √3/6     -√3/6    1/6    1/2    √3/6
4     1/3    0        0        1/3    2/3    0

$T_1$ and $T_4$ represent a shrinking of each line segment by a factor of $\frac{1}{3}$ and translation to either end of the original line, while $T_2$ and $T_3$ represent shrinking by $\frac{1}{3}$ as well as a rotation by $60^\circ$ and appropriate translation.
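The four transformations can be applied directly to a set of points. The Python sketch below is illustrative only and assumes the common affine convention $T(x, y) = (ax + by + e,\; cx + dy + f)$ for the six coefficients; if Section 3.1 orders the coefficients differently, the tuples would need rearranging. The names `KOCH_IFS`, `apply_map` and `iterate_ifs` are hypothetical.

```python
import math

S = math.sqrt(3) / 6

# (a, b, c, d, e, f) for T1..T4, assuming T(x, y) = (a*x + b*y + e, c*x + d*y + f)
KOCH_IFS = [
    (1 / 3, 0.0, 0.0, 1 / 3, 0.0, 0.0),
    (1 / 6, -S, S, 1 / 6, 1 / 3, 0.0),
    (1 / 6, S, -S, 1 / 6, 1 / 2, S),
    (1 / 3, 0.0, 0.0, 1 / 3, 2 / 3, 0.0),
]

def apply_map(coeffs, point):
    """Apply one affine map to a point (x, y)."""
    a, b, c, d, e, f = coeffs
    x, y = point
    return (a * x + b * y + e, c * x + d * y + f)

def iterate_ifs(points, stages):
    """Replace the point set by the union of its four images, `stages` times."""
    for _ in range(stages):
        points = [apply_map(t, p) for t in KOCH_IFS for p in points]
    return points

# Images of the end point (1, 0) of the unit segment under each map.
print([apply_map(t, (1.0, 0.0)) for t in KOCH_IFS])
```

Under this convention the image of the unit segment under $T_2$ ends exactly where its image under $T_3$ begins, at the apex $(\frac{1}{2}, \frac{\sqrt{3}}{6})$, so the four pieces join into one connected curve.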

5.2.2 The randomised Koch curve

The Koch curve can be randomised very simply by varying whether the equilateral triangle

added to each line segment points outwards or inwards [5, p.459]. The algorithm is almost

identical to that of the standard Koch curve except that for each line segment from the

previous stage a fair coin is tossed. If `heads' is tossed an equilateral triangle is attached

to the line segment pointing outwards and if `tails' is tossed an equilateral triangle is

attached to the line segment pointing inwards. Thus both possible outcomes for each line

segment have probability 0.5. In MATLAB I have simulated the coin toss by generating a pseudorandom number $r$ between 0 and 1 and defining `heads' as the event that $r < 0.5$.

The two possible outcomes can be seen below:

Figure 25: The two possible outcomes for each line segment in a randomised Koch curve
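The coin toss changes only the side on which the triangle is drawn. A minimal Python sketch of the idea follows (for illustration; it is not the kochR.m code from the appendix, the name `random_koch` is hypothetical, and treating `heads' as the left-hand side of the segment is an assumption).

```python
import math
import random

def random_koch(points, stages, seed=0):
    """Koch construction in which each segment's triangle points to a side
    chosen by a simulated fair coin toss."""
    rng = random.Random(seed)
    h = math.sqrt(3) / 6  # triangle height as a fraction of the segment length
    for _ in range(stages):
        refined = [points[0]]
        for (a1, a2), (b1, b2) in zip(points, points[1:]):
            # 'heads' (r < 0.5): one side; 'tails': the opposite side
            sign = 1.0 if rng.random() < 0.5 else -1.0
            C = ((2 * a1 + b1) / 3, (2 * a2 + b2) / 3)
            D = ((a1 + 2 * b1) / 3, (a2 + 2 * b2) / 3)
            E = ((a1 + b1) / 2 - sign * h * (b2 - a2),
                 (a2 + b2) / 2 + sign * h * (b1 - a1))
            refined += [C, E, D, (b1, b2)]
        points = refined
    return points

curve = random_koch([(0.0, 0.0), (1.0, 0.0)], 4, seed=1)
print(len(curve))
```

Whatever the outcome of the tosses, every segment at stage $n$ still has length $3^{-n}$, so the randomised curve has the same total length at each stage as the standard one.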

As a result of this element of randomness there are infinitely many possible randomised

Koch curves, if we carry out the algorithm ad infinitum. In Figure 26 there is an example

of one possible curve. The similarities between the curve and a generic coastline are clear

to the naked eye as what appear to be bays, headlands and even islands appear along the

curve.


Figure 26: A randomised Koch curve, created from my MATLAB code kochR.m (see Appendix A.5)

Mathematically, it is similar to coastlines in that it is said to have many length scales [28, p.63]. This means that if you were to measure the length of the curve you would get different measurements depending on the length of the ruler you used, with

longer lengths measured by shorter rulers. If the fractal process for the randomised Koch

curve is made more complicated, then the fractals produced can look as realistic as if

they'd been traced from a shipping chart [8, p.139].

5.3 The Chaos Game

The chaos game, a term coined by Michael Barnsley [15, p.2], is an astounding example of how a random algorithm can produce a regular fractal. There are many sets of rules for the chaos game, which each produce a different fractal shape, but I am going to look at a set which produces the Sierpiński Triangle, which I looked at earlier in Section 4.1. The game begins with an initial game point $(x_1, y_1)$ which can be chosen at random and this is set as our current game point. After this, using a randomised iterative process and the three vertices of an equilateral triangle, a sequence of game points is created and plotted. The resulting figure after approximately 10,000 steps is clearly the Sierpiński gasket.

5.3.1 The algorithm

Here is a more precisely defined set of rules for the algorithm [29, p.10]:

1. Start with an equilateral triangle with vertices $A$, $B$ and $C$. For example $A = (0, 0)$, $B = (1, 0)$ and $C = \left(\frac{1}{2}, \frac{\sqrt{3}}{2}\right)$.

2. Randomly select the initial game point $z_1 = (x_1, y_1)$. This can be from within the boundaries of the triangle but this is not compulsory.

3. Roll a fair die:

(a) If a 1 or 2 is rolled, plot the next game point $z_{n+1}$ at the midpoint between the current game point $z_n$ and $A$.

(b) If a 3 or 4 is rolled, plot the next game point $z_{n+1}$ at the midpoint between the current game point $z_n$ and $B$.

(c) If a 5 or 6 is rolled, plot the next game point $z_{n+1}$ at the midpoint between the current game point $z_n$ and $C$.

4. Repeat step 3 with the new game point as many times as desired.
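The rules above can be sketched in Python as follows (for illustration only; this is not the chaosgame.m code from the appendix and the names `VERTICES` and `chaos_game` are hypothetical). The die roll is mapped to a vertex in pairs, exactly as in step 3.

```python
import math
import random

# Vertices A, B, C of the equilateral triangle from step 1
VERTICES = [(0.0, 0.0), (1.0, 0.0), (0.5, math.sqrt(3) / 2)]

def chaos_game(start, steps, seed=0):
    """Return the sequence of game points, beginning with `start`."""
    rng = random.Random(seed)
    x, y = start
    points = [(x, y)]
    for _ in range(steps):
        roll = rng.randint(1, 6)              # fair die
        vx, vy = VERTICES[(roll - 1) // 2]    # 1-2 -> A, 3-4 -> B, 5-6 -> C
        x, y = (x + vx) / 2, (y + vy) / 2     # midpoint of current point and vertex
        points.append((x, y))
    return points

points = chaos_game((0.1, 0.1), 10000)
print(len(points))
```

Plotting `points` with any scatter-plot routine should show the gasket emerging once a few thousand game points have been generated.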

I have programmed this algorithm in MATLAB and carried out the procedure for various

numbers of steps to produce the following diagrams:

Figure 27: Plots of the Chaos Game after various numbers of game points with initial game point (0.1,0.1), created from my MATLAB code chaosgame.m (see Appendix A.6)

I used $(0.1, 0.1)$ as my initial game point and it is clear in the diagrams that this point is not part of the Sierpiński gasket; the sequence does, however, converge to points within the gasket.

5.3.2 Why does this work?

A full proof is outlined in `Chaos and Fractals' [5, pp.297-352] but I will provide a simpler

sketch of the proof.

Firstly we need to consider the locations of points within the gasket itself. At the $k$th level there are $3^k$ possible subtriangles that a point $P$ could sit in. Each of these subtriangles can be given an address comprising of the numbers $s_i \in \{1, 2, 3\}$ where 1 means the lower left triangle, 2 means the lower right triangle and 3 means the upper triangle. This method of labelling the subtriangles is best shown as a diagram (see Figure 28 below).

Figure 28: Labelling system for the subtriangles of the Sierpiński gasket

The probability that $P$ sits in a particular subtriangle $s_1 s_2 \ldots s_k$ is therefore $\frac{1}{3^k}$, one divided by the total number of subtriangles at that level, since each subtriangle has equal area.

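Now we will consider the 3 possible outcomes of step 3 of the algorithm in Section 5.3.1 to be contraction mappings $T_1$, $T_2$ and $T_3$, where $T_1$ relates to outcome (a), $T_2$ to (b) and $T_3$ to (c).

\begin{align*}
T_1(x_n, y_n) &= (x_n, y_n) + \tfrac{1}{2}\left((0, 0) - (x_n, y_n)\right) = \tfrac{1}{2}(x_n, y_n) \\
T_2(x_n, y_n) &= (x_n, y_n) + \tfrac{1}{2}\left((1, 0) - (x_n, y_n)\right) = \tfrac{1}{2}(x_n, y_n) + \left(\tfrac{1}{2}, 0\right) \\
T_3(x_n, y_n) &= (x_n, y_n) + \tfrac{1}{2}\left(\left(\tfrac{1}{2}, \tfrac{\sqrt{3}}{2}\right) - (x_n, y_n)\right) = \tfrac{1}{2}(x_n, y_n) + \left(\tfrac{1}{4}, \tfrac{\sqrt{3}}{4}\right)
\end{align*}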

We note that these are the very same contraction mappings that we saw for the Sierpiński triangle IFS in Example 3.8 on Page 14 and recall that a fractal is invariant under the IFS used in its construction. Hence we can conclude that, once a point $z_N$ coincides with a point of the gasket, all subsequent points $z_n$, $n > N$, also belong to the gasket. So once we have such a $z_N$ the sequence $(z_n)$, $n > N$, will converge to the Sierpiński gasket.

Now we show that from any initial game point $z_1$ the sequence will get arbitrarily close to a point of the gasket $P$ i.e. is at most $\epsilon > 0$ from $P$. Consider $P$ to be contained within a triangle $D$ in the $m$th stage, with a diameter $\frac{1}{2^m}$, then we can choose $m$ large enough that $\frac{1}{2^m} < \epsilon$. If $D$ has an address $s_1 s_2 \ldots s_m$, for example 132 as shown in Figure 28, where each $s_i \in \{1, 2, 3\}$, and the chaos game is left to run for long enough then we will eventually detect a sequence of mappings $T_{s_1}, T_{s_2}, \ldots, T_{s_m}$ and our point falls in $D$ so is within $\epsilon$ of $P$. This is guaranteed to happen as any sequence of mappings of length $m$ has probability $\frac{1}{3^m} > 0$ and the game can be left to run for an infinite length of time.

Thus we can get arbitrarily close to any point $P$ of the gasket, so will eventually converge to one of its points and once this happens, as stated above, all points which follow will also be on the gasket.


5.3.3 Can this be done for other fractals?

Other regular fractals can be constructed by changing the vertices originally taken into account and adapting the process of taking the midpoint to a more general compression ratio $r$. This means that the distance of a new point $z_{n+1}$ from the appropriate vertex is $\frac{1}{r}$ of the distance from the previous point $z_n$ to that vertex. So for the chaos game the compression ratio was $r = 2$. For example, taking your vertices as the four vertices of a square and the four midpoints of their sides and compression ratio 3, the Sierpiński carpet can be produced [30].

Figure 29: The Sierpiński carpet after 4 iterations
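A general compression ratio needs only a one-line change to the update rule. The following Python sketch is illustrative (the names `general_chaos_game` and `CARPET_VERTICES` are hypothetical, and the unit square is an assumed choice of coordinates).

```python
import random

# Four corners of the unit square and the four midpoints of its sides
CARPET_VERTICES = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (1.0, 0.5),
                   (1.0, 1.0), (0.5, 1.0), (0.0, 1.0), (0.0, 0.5)]

def general_chaos_game(vertices, ratio, start, steps, seed=0):
    """Chaos game in which the new point lies at 1/ratio of the distance
    from the chosen vertex to the previous point."""
    rng = random.Random(seed)
    x, y = start
    points = []
    for _ in range(steps):
        vx, vy = rng.choice(vertices)
        x = vx + (x - vx) / ratio
        y = vy + (y - vy) / ratio
        points.append((x, y))
    return points

carpet = general_chaos_game(CARPET_VERTICES, 3, (0.5, 0.5), 5000)
print(len(carpet))
```

With `ratio = 2` and the three triangle vertices this reduces to the midpoint rule of the original game; with `ratio = 3` and the eight points above, no game point lands in the open central square, which is the first hole of the carpet.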

6 An Application: Modelling stock prices through fractal time series

Fractal geometry and generated fractals have many applications in modelling natural phenomena. As in Figure 5 on Page 11, we have seen how fractal dimension can be used to study coastlines. It can be used in looking at the shapes of clouds [5, p.501] and to draw artificial landscapes and moonscapes [8, p.127]. I am going to focus on one specific example of how fractals can model time series.

There are a great many geometric similarities between the graph of a time series and charts of coastlines [13, p.51]. Most importantly, they are both infinitely detailed; the graph of a time series over the course of one day often looks very similar to the graph for the entire year [28, p.126][13, p.47] and as you zoom in on a coastline you will only discover more inlets and crevices. This property means that the length of a coastline or a time series graph cannot be measured as it will be infinite and that a fractal dimension will provide more useful information as to the nature of the shape. The set of time series that I will focus on are stock prices, though much of the theory that follows can be applied to examples of time series in other fields, such as meteorology (daily rainfall/temperature) and sociology (employment/crime figures).

The ultimate goal of studying stock prices with fractal techniques is to be able to comment

on the behaviour of actual stocks and how they might behave in the future.

We shall first consider how we can generate models for a stock price over time with a deterministic

algorithm, then move on to a simple randomised algorithm. The material in this section

is based upon the ideas outlined in `The (mis)Behaviour of Markets' [8], `Fractal Market

Analysis' [29] and online Yale classes written by Mandelbrot [31].

6.1 Introduction to Brownian Motion

Brownian motion was first used as a mathematical model for the stock market by Louis Bachelier [8, p.9]. The term is named after Robert Brown who first observed the motion when examining pollen grains in water and in mathematical physics is often called the Wiener process [28, p.140]. Brownian motion describes random movements and in one dimension it describes a random walk with stationary independent increments. As it behaves randomly, it is a good place to start when trying to model something unpredictable like stock prices. Here are some key properties of Brownian motion that we'll try to replicate in our modelling [32]:


Independent increments: Each change in price is independent of any previous price changes so there is no need to look at historical charts of the stock price.

Stationarity: The process generating the price increments remains the same over time. This means that if our stock price is modelled by $S(t)$ then for all $h > 0$ the distribution of the increment $S(t + h) - S(t)$ is independent of $t$.

Normal increments: Price changes $S(t + h) - S(t)$ follow a normal distribution with mean 0 and standard deviation $\sqrt{h}$ i.e. $[S(t + h) - S(t)] \sim N(0, h)$.

Self-affinity: The changes of stock price $S(t)$ scale proportional to $\sqrt{t}$. In other words, for any $u \in \mathbb{R}$ and any $s, t > 0$ the probability of an increment being less than $u$ is equal to the probability of the same increment, but with time scaled by $s$, being less than $\sqrt{s} \cdot u$ i.e. $P(S(t + h) - S(t) < u) = P(S(s \cdot (t + h)) - S(s \cdot t) < \sqrt{s} \cdot u)$.

In reality, stock price changes are more complicated than the Brownian motion model

so it is important to be aware that these are assumptions for our model, not underlying

properties of stock prices. In fact, as Mandelbrot points out, the assumption of normal

increments is contradicted by the facts i.e. actual stock price data do not demonstrate

this property [8, p.87].

6.2 Basic model of Brownian Motion

Now we will look at a process for modelling Brownian motion which is described in `The (mis)Behaviour of Markets' [8, p.119] and Mandelbrot's Yale classes. We begin with a general trend for our stock price over time (the initiator): a line connecting $(0, 0)$ to $(1, 1)$. A generator consisting of three line segments, with turning points $(a, b)$ and $(c, d)$, is then inserted along the straight trend line, as seen in Figure 30. The locations of these turning points can be determined if we consider the self-affinity of Brownian motion. If $dS_i$ represents the change in price over time $dt_i$ then we require $|dS_i| = \sqrt{dt_i}$ so that the scaling mirrors that of Brownian motion. Let $(a, b) = \left(\frac{4}{9}, \frac{2}{3}\right)$ and $(c, d) = \left(\frac{5}{9}, \frac{1}{3}\right)$ [8, p.172] then we have the required relationship for each $i = 1, 2, 3$ as seen in Table 3:

i    dt_i                dS_i                 Relationship
1    4/9 − 0 = 4/9       2/3 − 0 = 2/3        dS_1 = 2/3 = √dt_1 = √(4/9)
2    5/9 − 4/9 = 1/9     1/3 − 2/3 = −1/3     −dS_2 = 1/3 = √dt_2 = √(1/9)
3    1 − 5/9 = 4/9       1 − 1/3 = 2/3        dS_3 = 2/3 = √dt_3 = √(4/9)

Table 3: Ensuring the self-affinity of Brownian motion for our generator


Figure 30: The generator for our Brownian motion model

Once we have our generator, we replace each line segment with a scaled copy of the

generator and iterate the process to produce a model of a stock price path, as seen in

Figure 31.

Figure 31: The chart for a stock price after 2, 3 and 10 iterations of our model, created

from my MATLAB code

stockSim.m

(see Appendix A.7)
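The replacement step can be sketched in Python as follows (for illustration only; this is not the stockSim.m code from the appendix and the names `GENERATOR`, `refine` and `stock_path` are hypothetical). Each segment is replaced by a copy of the generator that is scaled in time by the segment's $dt$ and in price by its $dS$, so a downward segment receives a vertically flipped copy.

```python
import math

# Generator through (0,0), (4/9, 2/3), (5/9, 1/3), (1,1)
GENERATOR = [(0.0, 0.0), (4 / 9, 2 / 3), (5 / 9, 1 / 3), (1.0, 1.0)]

def refine(path):
    """Replace every segment of the path by a scaled copy of the generator."""
    refined = [path[0]]
    for (t0, s0), (t1, s1) in zip(path, path[1:]):
        dt, ds = t1 - t0, s1 - s0
        for gt, gs in GENERATOR[1:]:
            refined.append((t0 + gt * dt, s0 + gs * ds))
    return refined

def stock_path(iterations):
    """Start from the trend line (0,0)-(1,1) and refine it repeatedly."""
    path = [(0.0, 0.0), (1.0, 1.0)]
    for _ in range(iterations):
        path = refine(path)
    return path

path = stock_path(3)
# every segment should keep the square-root scaling |dS| = sqrt(dt)
print(all(abs(abs(s1 - s0) - math.sqrt(t1 - t0)) < 1e-9
          for (t0, s0), (t1, s1) in zip(path, path[1:])))
```

Scaling the two axes separately is what preserves the relationship $|dS_i| = \sqrt{dt_i}$ from one iteration to the next.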

This model, however, is far too regular to represent natural Brownian motion.

True

Brownian motion contains aspects of unpredictability and randomness, so we need to try

and introduce this to our model.


6.3 Randomised model of Brownian motion

One way to randomise this model is to change the order of the three line segments, which form the generator, based on a pseudorandom number given by a computer. From our basic model we will denote the three line segments $U_1$, the first upward slope, $D$, the downward slope, and $U_2$, the second upward slope. These 3 line segments allow for 6 permutations, but in our case $U_1$ and $U_2$ are identical in length and gradient (see Table 3), so we will simplify our model by introducing $U = U_1 = U_2$ and are left with only three possible permutations: $UDU$, $UUD$ and $DUU$. We can now adapt the algorithm by replacing a line segment with either $UDU$, $UUD$ or $DUU$, each with probability $\frac{1}{3}$, based on the pseudorandom number generated. This provides an infinite number of possible model stock paths connecting $(0, 0)$ and $(1, 1)$, each an example of Brownian motion with an upward trend, known as upward drift. This randomisation makes the stock price charts look more realistic [8, p.120]. In Figure 32 we can see an example of a chart produced with this randomised algorithm:

Figure 32: A randomised Brownian Motion stock price chart, created from my MATLAB

code

stockSimR.m

(see Appendix A.8)
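The randomised version differs from the deterministic one only in the order of the three pieces. A minimal Python sketch follows (for illustration; it is not the stockSimR.m code from the appendix and the names `ORDERS`, `random_refine` and `random_stock_path` are hypothetical).

```python
import random

U = (4 / 9, 2 / 3)    # upward piece: (time step, price step) of the generator
D = (1 / 9, -1 / 3)   # downward piece
ORDERS = [(U, D, U), (U, U, D), (D, U, U)]

def random_refine(path, rng):
    """Replace each segment by UDU, UUD or DUU, each with probability 1/3."""
    refined = [path[0]]
    for (t0, s0), (t1, s1) in zip(path, path[1:]):
        dt, ds = t1 - t0, s1 - s0
        t, s = t0, s0
        for piece_dt, piece_ds in rng.choice(ORDERS):
            t += piece_dt * dt
            s += piece_ds * ds
            refined.append((t, s))
    return refined

def random_stock_path(iterations, seed=0):
    """Randomised model path from (0,0) to (1,1)."""
    rng = random.Random(seed)
    path = [(0.0, 0.0), (1.0, 1.0)]
    for _ in range(iterations):
        path = random_refine(path, rng)
    return path

print(random_stock_path(2, seed=1)[:4])
```

Because the three pieces always sum to one unit of time and one unit of price, every path still ends at $(1, 1)$, which is the upward drift described above.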

Here I have specified the initial trend of the stock before randomising the fluctuations

in its price, but more complicated models with undetermined trends can be produced by

investors [8, p.120]. These models are run many times and allow the investor to predict

the potential gains and risks from his portfolio and to act (buy or sell stock) accordingly.

6.4 The Hurst Exponent and R/S Analysis

We are now going to move away from simulating stock prices and look into how real market data can be analysed. We will disregard the assumption that price changes are normally distributed as this is considered to be unrealistic and additionally we will not assume that the price changes are identically distributed. The implications of these changes to the model in the real world are that investors may wait until a trend is confirmed before reacting to market changes, rather than reacting immediately as Brownian motion would imply [13, p.61]. So, if stock price decreases, some investors will wait to confirm the trend before selling their stock, which will further decrease the stock price. By not assuming identically distributed price changes we allow investors to behave differently at different times. We move away from random walks to biased random walks, which Mandelbrot called fractional Brownian motions. This move from traditional Brownian motion to fractional Brownian motion and the statistics we can calculate to analyse the nature of stock price data are covered in chapter 4 of `Fractal Market Analysis' [29]. The main statistic we will need to consider is the Hurst exponent.

The Hurst exponent, $H$, is a statistic named after Harold Hurst and Ludwig Hölder [8, p.187] used to describe the bias of a biased random walk. Rescaled range analysis (or R/S analysis) defines $H$ through the power law [13, p.63]

\[ (R/S)_t = C \cdot t^H \tag{6.1} \]

where $C$ is a constant and $t$ is the number of observations being taken into account, $0 < t \leq N$ the total number of observations. $(R/S)_t$ is calculated as follows [33]:

1. For the time series $X = X_1, X_2, \ldots, X_N$ the mean value $\bar{X} = \frac{1}{N} \sum_{i=1}^{N} X_i$ is calculated.

2. The mean adjusted values $Y_i = X_i - \bar{X}$, $i = 1, \ldots, N$, are calculated.

3. A cumulative series of the mean adjusted values is calculated as $Z_t = \sum_{i=1}^{t} Y_i$, $t = 1, \ldots, N$.

4. The range series of the $Z$ values, $R_t = \max(Z_1, Z_2, \ldots, Z_t) - \min(Z_1, Z_2, \ldots, Z_t)$, $t = 1, \ldots, N$, is calculated.

5. The standard deviation series $S_t = \sqrt{\frac{1}{t} \sum_{i=1}^{t} (X_i - \mu)^2}$, $t = 1, \ldots, N$, is calculated, where $\mu$ is the mean value of $X_1$ to $X_t$.

6. Finally, the rescaled range series $(R/S)_t = R_t / S_t$ is formed for $t = 1, \ldots, N$.
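The six steps translate almost line by line into code. The Python sketch below is illustrative only (the function name `rescaled_range` is hypothetical); it skips any $t$ for which $S_t = 0$, such as $t = 1$, since the ratio is undefined there.

```python
import math

def rescaled_range(X):
    """Return a dict mapping t to (R/S)_t for a list of observations X."""
    N = len(X)
    mean = sum(X) / N                      # step 1: overall mean
    Y = [x - mean for x in X]              # step 2: mean adjusted values
    Z = []                                 # step 3: cumulative series
    running = 0.0
    for y in Y:
        running += y
        Z.append(running)
    result = {}
    for t in range(1, N + 1):
        R = max(Z[:t]) - min(Z[:t])        # step 4: range of Z_1..Z_t
        mu = sum(X[:t]) / t                # mean of X_1..X_t
        S = math.sqrt(sum((x - mu) ** 2 for x in X[:t]) / t)  # step 5
        if S > 0:
            result[t] = R / S              # step 6
    return result

print(rescaled_range([1.0, 2.0, 3.0, 4.0]))
```

The slices make this quadratic in the length of the series, which is fine for a sketch; a running maximum, minimum and sum of squares would be the natural refinement for long series.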

The value of the Hurst exponent shows the dependence of the next time series value on

the previous value. For investors, this describes the volatility of the stock, which means

how drastically the stock price is likely to change. The critical regions for $H$ are defined below.

Definition 6.1. The Hurst exponent $H \in [0, 1]$ describes the interdependence of intervals as follows:

• $H > \frac{1}{2}$ gives persistent fractional Brownian motion, which shows evidence of long term memory in the time series.

• $H = \frac{1}{2}$ gives standard Brownian motion as we saw in Section 6.1.

• $H < \frac{1}{2}$ gives anti-persistent fractional Brownian motion. Also referred to as mean-reverting, the series is likely to jump to the other side of the mean at the next time step.

Financial data suggest [13, p.65] that stock prices are persistent series.

This is intuitive as investors are likely to sell a stock if its value is decreasing, causing

a persistent downward trend.

An anti-persistent stock price series would imply that

investors were inclined to sell stock if its value looked to be increasing and then to buy

stock when it appears to be losing its value, which goes against common sense.


We can estimate the Hurst exponent by finding the slope of the log-log graph of $R/S$ against $t$, as taking logarithms of (6.1) gives:

\[ \log(R/S) = H \cdot \log t + \log C \tag{6.2} \]
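The slope can be estimated by ordinary least squares on the logged values. The Python sketch below is illustrative (the function name `hurst_from_rs` is hypothetical) and expects a mapping from $t$ to $(R/S)_t$ with positive values at two or more distinct $t$.

```python
import math

def hurst_from_rs(rs):
    """Least-squares fit of log(R/S) = H * log(t) + log(C).
    Returns the pair (H, C)."""
    pairs = [(math.log(t), math.log(v)) for t, v in rs.items() if v > 0]
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    H = sxy / sxx                      # slope of the log-log line
    C = math.exp(mean_y - H * mean_x)  # intercept gives log C
    return H, C

# Synthetic check: values that follow the power law exactly with H = 0.7, C = 2
synthetic = {t: 2.0 * t ** 0.7 for t in (2, 4, 8, 16, 32, 64)}
print(hurst_from_rs(synthetic))
```

On real data the points will scatter around the fitted line, so the quality of the fit is worth inspecting alongside the estimate of $H$.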

6.5 Do actual stocks behave in a fractal way?

To see how these concepts can be applied to real stock price charts, I'll consider stock

price data for BP (British Petroleum plc). I have sourced daily stock price data for BP

from 3rd January 1977 to 28th February 2011 from http://finance.yahoo.com/ [34].

Figure 33: Stock price charts for BP over different time periods

I produced charts for the stock price over 4 different periods: one month, one year, 5

years and the full 34 years. Using MATLAB I then calculated estimates for the fractal

dimensions of these charts.


From            To               Length of time period    Estimate for fractal dimension
3rd Jan 1977    31st Jan 1977    1 month                  1.12
3rd Jan 1977    30th Dec 1977    1 year                   1.19
3rd Jan 1977    31st Dec 1981    5 years                  1.26
3rd Jan 1977    28th Feb 2011    34 years                 1.33

Table 4: Fractal dimensions of BP stock price charts

The theory [13, p.47] suggests that these fractal dimensions should all be equal, as a stock price chart behaves the same over the period of a day as over several years. However, since I was only able to find historic stock price data with the stock price recorded once each day, the data is not infinitely detailed. By excluding any fluctuations that occur on a minute to minute basis, the fractal dimension of short time periods is lowered as the stock price data looks more like a straight line. This can be seen in Figure 33 in the first chart for stock prices in January 1977, where the straight line segments are clear and there are fewer fluctuations than one would expect if the data had been more detailed. This explains why the fractal dimension in the table appears to be increasing as the time period is increased. We can, however, confirm that this example exhibits fractal behaviour as all the dimensions calculated are greater than 1.

In order to look at the Hurst exponent of this real world data, I am going to use a piece of MATLAB code written by Bill Davidson [35]. The code takes a vector of time series values, $X$, and then calculates all the $Z_t$ for $t = 1, 2, 4, 8, \dots$, $t <$ length($X$). It then calculates $R/S$ and uses the polyfit function in MATLAB to fit a straight line for $\log(R/S)$ against $\log t$. The gradient of this straight line is then given as the estimated Hurst exponent of the time series. The BP data from 1977 to 2011 has a Hurst exponent $H = 0.96$ and is therefore clearly an example of a persistent time series, as the theory suggested a stock price chart would be.

In this section we have learnt about how stock price paths can be simulated for the future,

allowing investors or banks to model their potential gains and try to alleviate risk. The

Hurst exponent was also introduced and allows investors to steer clear of more volatile

stocks (those with a lower Hurst exponent) when building a portfolio. I will now return

to looking at fractals more generally and consider possible 3D fractals.


7 Producing my 3D shape

7.1 Choosing my shape

So far I have looked at many examples of 2-dimensional fractals.

We have seen the

Cantor Set, Apollonian Gasket, Sierpiński Gasket, T-Square fractal and Koch curve. The

algorithms for each of these 2D fractals could be adapted to produce a 3-dimensional

equivalent object. However, when considering producing a 3D object with the help of the

ZPrinter 650 there are a few extra constraints which I need to take into account:

1. The shape should be one connected mass, so that separate parts do not get lost.

2. The shape should be structurally sound and strong. Weak vertices should be avoided

as this could cause the shape to break after printing.

3. The shape should not contain very small holes as these cannot be coated properly

with glaze in the post-printing stage (described later in Section 7.4).

The Cantor Set and Sierpiński Gasket have well known 3-dimensional counterparts, the Cantor Dust and Sierpiński Sponge. As you can see in Figure 34, these would not be appropriate as a printed 3D model as the Cantor dust disregards condition 1 and the Sierpiński sponge contains many weak points where the tetrahedra join, which contradicts condition 2. Interestingly, the Sierpiński sponge is an example of a fractal object with an integer fractal dimension. As it is made up of 4 copies of itself scaled by a factor of $\frac{1}{2}$, it has similarity dimension

$$\dim_S = \frac{\log 4}{\log 2} = \frac{2 \cdot \log 2}{\log 2} = 2.$$
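The calculation above is an instance of the similarity dimension formula $\log N / \log(1/r)$ for a shape made of $N$ copies of itself, each scaled by a factor $r$. A short numerical check, with the hypothetical function name `similarity_dimension`, might look like this:

```python
import math


def similarity_dimension(copies, scale):
    """Similarity dimension log(N) / log(1/r) for N copies scaled by r."""
    return math.log(copies) / math.log(1.0 / scale)


# 4 tetrahedral copies at scale 1/2, as in the text above.
dim_tetrahedral = similarity_dimension(4, 0.5)

# For comparison: the 2D Sierpinski triangle has 3 copies at scale 1/2.
dim_triangle = similarity_dimension(3, 0.5)
```

The first value comes out as 2 up to floating-point rounding, while the triangle gives a non-integer value of about 1.585, which is why the integer result for the 3D shape is worth remarking on.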

Figure 34: Cantor Dust and Sierpiński Sponge, produced from my MATLAB code and

photographed in MeshLab