Matrices


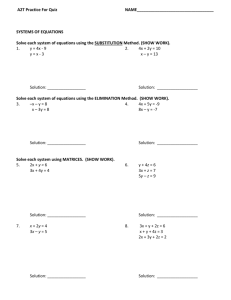

define matrix

We will use matrices to help us solve systems of equations. A matrix is a rectangular array of numbers enclosed in parentheses or brackets. In linear algebra, matrices are important because every matrix uniquely represents a linear transformation. Examples of matrices are:

$$\begin{pmatrix} 2 & 3 \\ -5 & 4 \end{pmatrix} \quad\text{and}\quad \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}.$$

The particular numbers constituting a matrix are called its entries or elements. To distinguish between elements, we identify their position in the matrix by row and column. E.g., in the matrix $\begin{pmatrix} 2 & 3 \\ -5 & 4 \end{pmatrix}$ the element in the first row and second column is 3 and is designated by $a_{12}$. The size or dimension of a matrix is specified by the number of rows and the number of columns, in that order. E.g., the matrix $\begin{pmatrix} 1 & -2 & 0 \\ 1 & 3 & -1 \end{pmatrix}$ is a $2 \times 3$ matrix.

used to describe systems of linear equations

Matrices are a natural choice to describe systems of linear equations. Consider the following system of linear equations in standard form:

$$\begin{aligned} 2x - 3y + 4z &= 14 \\ 3x - 2y + 2z &= 12 \\ 4x + 5y - 5z &= 16 \end{aligned}$$

The coefficient matrix of this system is the matrix:

$$\begin{pmatrix} 2 & -3 & 4 \\ 3 & -2 & 2 \\ 4 & 5 & -5 \end{pmatrix}$$

This matrix consists of the coefficients of the variables as they appear in the original system. The augmented matrix of this system is

$$\left(\begin{array}{rrrcr} 2 & -3 & 4 & : & 14 \\ 3 & -2 & 2 & : & 12 \\ 4 & 5 & -5 & : & 16 \end{array}\right)$$

The augmented matrix includes the constant terms. The colons separate the coefficients from the constants.

solving systems of linear equations with matrices

Matrices may be used to solve systems of linear equations. The operations that we perform to solve a system of linear equations correspond to similar elementary row operations for a matrix.
We'll solve the system

$$\begin{aligned} 2x - 3y + 4z &= 14 \\ 3x - 2y + 2z &= 12 \\ 4x + 5y - 5z &= 16 \end{aligned}$$

using Gaussian elimination (a modified version of the elimination or linear combination method used to solve systems of linear equations) and the corresponding row operations on matrices, side by side. First, we represent the system of linear equations with an augmented matrix.

$$\begin{aligned} 2x - 3y + 4z &= 14 \\ 3x - 2y + 2z &= 12 \\ 4x + 5y - 5z &= 16 \end{aligned} \qquad \left(\begin{array}{rrrcr} 2 & -3 & 4 & : & 14 \\ 3 & -2 & 2 & : & 12 \\ 4 & 5 & -5 & : & 16 \end{array}\right)$$

Our goal is to add multiples of the first row to the second and third rows to "eliminate" the x terms, i.e., to make their x coefficients zero. Before we do this, we want the coefficient of x in the first equation to be equal to 1. So, we multiply the first row by 1/2. We will use the shorthand notation $\tfrac{1}{2}R_1$ to indicate this.

$$\begin{aligned} x - \tfrac{3}{2}y + 2z &= 7 \\ 3x - 2y + 2z &= 12 \\ 4x + 5y - 5z &= 16 \end{aligned} \qquad \left(\begin{array}{rrrcr} 1 & -\tfrac{3}{2} & 2 & : & 7 \\ 3 & -2 & 2 & : & 12 \\ 4 & 5 & -5 & : & 16 \end{array}\right)$$

Next, we multiply the first row by –3 and add it to the second row, and we multiply the first row by –4 and add it to the third row: $-3R_1 + R_2$ and $-4R_1 + R_3$.

$$\begin{aligned} x - \tfrac{3}{2}y + 2z &= 7 \\ \tfrac{5}{2}y - 4z &= -9 \\ 11y - 13z &= -12 \end{aligned} \qquad \left(\begin{array}{rrrcr} 1 & -\tfrac{3}{2} & 2 & : & 7 \\ 0 & \tfrac{5}{2} & -4 & : & -9 \\ 0 & 11 & -13 & : & -12 \end{array}\right)$$

Now we want to add a multiple of the second row to the third row to eliminate the y term. But first we want the coefficient of the y term in the second row to be equal to 1, so we multiply the second row by 2/5: $\tfrac{2}{5}R_2$.

$$\begin{aligned} x - \tfrac{3}{2}y + 2z &= 7 \\ y - \tfrac{8}{5}z &= -\tfrac{18}{5} \\ 11y - 13z &= -12 \end{aligned} \qquad \left(\begin{array}{rrrcr} 1 & -\tfrac{3}{2} & 2 & : & 7 \\ 0 & 1 & -\tfrac{8}{5} & : & -\tfrac{18}{5} \\ 0 & 11 & -13 & : & -12 \end{array}\right)$$

Next, we multiply the second row by –11 and add it to the third row: $-11R_2 + R_3$.

$$\begin{aligned} x - \tfrac{3}{2}y + 2z &= 7 \\ y - \tfrac{8}{5}z &= -\tfrac{18}{5} \\ \tfrac{23}{5}z &= \tfrac{138}{5} \end{aligned} \qquad \left(\begin{array}{rrrcr} 1 & -\tfrac{3}{2} & 2 & : & 7 \\ 0 & 1 & -\tfrac{8}{5} & : & -\tfrac{18}{5} \\ 0 & 0 & \tfrac{23}{5} & : & \tfrac{138}{5} \end{array}\right)$$

We can solve for z (z = 6) and back substitute to solve for y (y = 6) and x (x = 4). The final matrix form is called row echelon form and corresponds to the upper triangular form of the system on the left.
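The elimination steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `row_echelon` and `back_substitute` are made up for this example, no row swaps are performed (every pivot is assumed nonzero, which holds for this system), and the last row is also scaled to a leading 1, one step further than the hand computation. Exact fractions are used so the intermediate rows match the ones shown.

```python
from fractions import Fraction


def row_echelon(aug):
    """Reduce an augmented matrix to row echelon form with leading 1s.

    Assumes each pivot is nonzero when it is reached (no row swaps).
    """
    m = [[Fraction(v) for v in row] for row in aug]
    n = len(m)
    for i in range(n):
        pivot = m[i][i]
        m[i] = [v / pivot for v in m[i]]  # scale the row, e.g. (1/2)R1
        for k in range(i + 1, n):
            factor = m[k][i]
            # subtract a multiple of row i from row k, e.g. -3R1 + R2
            m[k] = [a - factor * b for a, b in zip(m[k], m[i])]
    return m


def back_substitute(m):
    """Solve an echelon-form augmented matrix whose pivots are all 1."""
    n = len(m)
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        x[i] = m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))
    return x


augmented = [[2, -3, 4, 14], [3, -2, 2, 12], [4, 5, -5, 16]]
echelon = row_echelon(augmented)
solution = back_substitute(echelon)
print(solution)  # x, y, z as exact fractions
```
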
Gauss-Jordan elimination

Gauss-Jordan elimination is a continuation of Gaussian elimination. Our goal is to add multiples of the third row to the first two rows to eliminate the z terms. We want the coefficient of z to be equal to 1 in the third row, so we multiply the third row by 5/23: $\tfrac{5}{23}R_3$.

$$\begin{aligned} x - \tfrac{3}{2}y + 2z &= 7 \\ y - \tfrac{8}{5}z &= -\tfrac{18}{5} \\ z &= 6 \end{aligned} \qquad \left(\begin{array}{rrrcr} 1 & -\tfrac{3}{2} & 2 & : & 7 \\ 0 & 1 & -\tfrac{8}{5} & : & -\tfrac{18}{5} \\ 0 & 0 & 1 & : & 6 \end{array}\right)$$

Now we eliminate the z terms in the first two rows. We add $-2R_3 + R_1$ and $\tfrac{8}{5}R_3 + R_2$:

$$\begin{aligned} x - \tfrac{3}{2}y &= -5 \\ y &= 6 \\ z &= 6 \end{aligned} \qquad \left(\begin{array}{rrrcr} 1 & -\tfrac{3}{2} & 0 & : & -5 \\ 0 & 1 & 0 & : & 6 \\ 0 & 0 & 1 & : & 6 \end{array}\right)$$

Lastly, we add a multiple of the second row to the first row to eliminate the y term, namely $\tfrac{3}{2}R_2 + R_1$:

$$\begin{aligned} x &= 4 \\ y &= 6 \\ z &= 6 \end{aligned} \qquad \left(\begin{array}{rrrcr} 1 & 0 & 0 & : & 4 \\ 0 & 1 & 0 & : & 6 \\ 0 & 0 & 1 & : & 6 \end{array}\right)$$

This last matrix is called reduced row echelon form.

matrix algebra

Matrix algebra was developed to be consistent with the operations performed on systems of equations.

Two matrices are equal provided they have the same size and corresponding entries are equal. E.g.,

$$\begin{pmatrix} 1 & -2 & 0 \\ 1 & 3 & -1 \end{pmatrix} \neq \begin{pmatrix} 1 & 1 \\ -2 & 3 \\ 0 & -1 \end{pmatrix}.$$

To add (or subtract) two matrices, they must be of the same size and then you add (or subtract) corresponding entries. Let $A = \begin{pmatrix} 2 & 3 \\ -5 & 4 \end{pmatrix}$ and $B = \begin{pmatrix} 1 & -1 \\ 3 & 0 \end{pmatrix}$, then

$$A - B = \begin{pmatrix} 1 & 4 \\ -8 & 4 \end{pmatrix}.$$

A zero matrix, e.g., $\begin{pmatrix} 0 & 0 \\ 0 & 0 \end{pmatrix}$, denoted by 0, is any matrix with all its entries equal to zero. Zero matrices are additive identities, i.e., $A + 0 = 0 + A = A$ and $A - A = 0$.

To multiply a matrix by a scalar, multiply each entry by that scalar. E.g., if $A = \begin{pmatrix} 2 & 3 \\ -5 & 4 \end{pmatrix}$ and $B = \begin{pmatrix} 1 & -1 \\ 3 & 0 \end{pmatrix}$, then $2A + B = \begin{pmatrix} 5 & 5 \\ -7 & 8 \end{pmatrix}$.

The product of two matrices A and B, if it exists, is the matrix AB satisfying:
1. The number of columns in A is the same as the number of rows in B.
2. If A has a rows and B has b columns, then the size of AB is $a \times b$.
3. The entry in the ith row and the jth column of AB is the inner product of the ith row of A with the jth column of B.

The inner product is defined to be the number obtained by multiplying the corresponding entries and then adding the products. E.g., the product

$$AB = \begin{pmatrix} 2 & 3 \\ -1 & 4 \end{pmatrix}\begin{pmatrix} 1 & -1 \\ 3 & 0 \end{pmatrix} = \begin{pmatrix} 2 \cdot 1 + 3 \cdot 3 & 2 \cdot (-1) + 3 \cdot 0 \\ -1 \cdot 1 + 4 \cdot 3 & -1 \cdot (-1) + 4 \cdot 0 \end{pmatrix} = \begin{pmatrix} 11 & -2 \\ 11 & 1 \end{pmatrix}.$$

The product

$$BA = \begin{pmatrix} 1 & -1 \\ 3 & 0 \end{pmatrix}\begin{pmatrix} 2 & 3 \\ -1 & 4 \end{pmatrix} = \begin{pmatrix} 3 & -1 \\ 6 & 9 \end{pmatrix}.$$

Notice that in general $AB \neq BA$.

Often it is not possible to multiply two matrices together because of their sizes. E.g., let $A = \begin{pmatrix} 2 & 3 \\ -5 & 4 \end{pmatrix}$ and $B = \begin{pmatrix} 1 & 2 & 0 \\ 3 & 0 & 1 \end{pmatrix}$. Then the product AB exists, but BA does not!

Matrices of the form $\begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}$, where the main diagonal entries are all 1 and all other entries are 0, are multiplicative identities, i.e.,

$$\begin{pmatrix} 2 & 3 \\ -5 & 4 \end{pmatrix}\begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} = \begin{pmatrix} 2 & 3 \\ -5 & 4 \end{pmatrix}.$$

What is the product of the coefficient matrix of our system with the column of variables?

$$\begin{pmatrix} 2 & -3 & 4 \\ 3 & -2 & 2 \\ 4 & 5 & -5 \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix} = \begin{pmatrix} 2x - 3y + 4z \\ 3x - 2y + 2z \\ 4x + 5y - 5z \end{pmatrix}$$

Inverse matrices

inverse matrix

We define a multiplicative identity for square matrices as a matrix of the form $I_2 = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}$, i.e.,

$$\begin{pmatrix} a & b \\ c & d \end{pmatrix}\begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}\begin{pmatrix} a & b \\ c & d \end{pmatrix} = \begin{pmatrix} a & b \\ c & d \end{pmatrix}.$$

In the real numbers, multiplicative inverses are two real numbers a and b such that ab = 1. The same terminology applies to matrices, i.e., if A and B are $n \times n$ matrices such that $AB = I_n$ and $BA = I_n$, then A and B are said to be inverses of each other. An inverse matrix of A is denoted by $A^{-1}$.

Show that the inverse of $A = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}$ is $A^{-1} = \begin{pmatrix} -2 & 1 \\ \tfrac{3}{2} & -\tfrac{1}{2} \end{pmatrix}$.

We must show that

$$AA^{-1} = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}\begin{pmatrix} -2 & 1 \\ \tfrac{3}{2} & -\tfrac{1}{2} \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}.$$

And, we must show that

$$A^{-1}A = \begin{pmatrix} -2 & 1 \\ \tfrac{3}{2} & -\tfrac{1}{2} \end{pmatrix}\begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}.$$

finding inverse matrices

Not all matrices have inverses. First, a matrix must be square in order to have an inverse.
But even many square matrices do not have inverses. We will study two ways of finding inverse matrices of square matrices, if they exist.

Our first method solves a system of equations to find the inverse matrix of $A = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}$. We need to find numbers a, b, c, and d in an inverse matrix $A^{-1} = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$ such that the following two matrix equations are true:

$$\begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}\begin{pmatrix} a & b \\ c & d \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} \quad\text{and}\quad \begin{pmatrix} a & b \\ c & d \end{pmatrix}\begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}$$

The first matrix equation is equivalent to:

$$\begin{pmatrix} a + 2c & b + 2d \\ 3a + 4c & 3b + 4d \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}.$$

If these matrices are equal, then their entries are equal.

$$\begin{aligned} a + 2c &= 1 \\ 3a + 4c &= 0 \end{aligned} \qquad \begin{aligned} b + 2d &= 0 \\ 3b + 4d &= 1 \end{aligned}$$

This is equivalent to two $2 \times 2$ systems of linear equations. Solving the first system, we have a = -2 and c = 3/2. Solving the second system, we have b = 1 and d = -1/2. Therefore, $A^{-1} = \begin{pmatrix} -2 & 1 \\ \tfrac{3}{2} & -\tfrac{1}{2} \end{pmatrix}$.

If we had solved the second matrix equation, we would have the following systems of linear equations:

$$\begin{aligned} a + 3b &= 1 \\ 2a + 4b &= 0 \end{aligned} \qquad \begin{aligned} c + 3d &= 0 \\ 2c + 4d &= 1 \end{aligned}$$

You can verify that the solutions to these systems of equations are the same.

Our first method of finding inverse matrices solved systems of equations. Since we know that not all systems of equations have solutions, we may guess that not all matrices have inverses. And we'd be right! E.g., the matrix $A = \begin{pmatrix} 1 & 2 \\ 2 & 4 \end{pmatrix}$ does not have an inverse matrix, because one of the resulting systems of equations ($a + 2b = 1$ and $2a + 4b = 0$, from $A^{-1}A = I_2$) does not have a solution: doubling the first equation gives $2a + 4b = 2$, which contradicts the second. We call matrices that have inverses non-singular and square matrices that do not have inverses singular. Non-singular matrices must be square, i.e., they must have the same number of rows and columns.

Our second method of finding inverse matrices uses elementary row operations (Gauss-Jordan method) on a specially-augmented matrix. We augment our initial matrix $A = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}$ by writing $I_2$ next to A:

$$\left(\begin{array}{rrcrr} 1 & 2 & : & 1 & 0 \\ 3 & 4 & : & 0 & 1 \end{array}\right).$$
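Our goal now is to change the matrix A on the left into $I_2$; the resulting matrix on the right will be $A^{-1}$.

$$\left(\begin{array}{rrcrr} 1 & 2 & : & 1 & 0 \\ 3 & 4 & : & 0 & 1 \end{array}\right) \xrightarrow{-3R_1 + R_2} \left(\begin{array}{rrcrr} 1 & 2 & : & 1 & 0 \\ 0 & -2 & : & -3 & 1 \end{array}\right) \xrightarrow{-\frac{1}{2}R_2} \left(\begin{array}{rrcrr} 1 & 2 & : & 1 & 0 \\ 0 & 1 & : & \tfrac{3}{2} & -\tfrac{1}{2} \end{array}\right) \xrightarrow{-2R_2 + R_1} \left(\begin{array}{rrcrr} 1 & 0 & : & -2 & 1 \\ 0 & 1 & : & \tfrac{3}{2} & -\tfrac{1}{2} \end{array}\right)$$

Therefore, $A^{-1} = \begin{pmatrix} -2 & 1 \\ \tfrac{3}{2} & -\tfrac{1}{2} \end{pmatrix}$.

using inverse matrices to solve systems of linear equations

Let's now solve the following system of equations using $A^{-1}$:

$$\begin{aligned} x + 2y &= 8 \\ 3x + 4y &= 6 \end{aligned}$$

If $A = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}$, $X = \begin{pmatrix} x \\ y \end{pmatrix}$, and $B = \begin{pmatrix} 8 \\ 6 \end{pmatrix}$, then we can represent our system of equations by the matrix equation $AX = B$. If we multiply both sides of the equation on the left by $A^{-1}$, i.e., $A^{-1}AX = A^{-1}B$, we have $(A^{-1}A)X = I_2X = X = A^{-1}B$. Therefore, the matrix $A^{-1}B$ should give us our solutions for x and y.

$$A^{-1}B = \begin{pmatrix} -2 & 1 \\ \tfrac{3}{2} & -\tfrac{1}{2} \end{pmatrix}\begin{pmatrix} 8 \\ 6 \end{pmatrix} = \begin{pmatrix} -16 + 6 \\ 12 - 3 \end{pmatrix} = \begin{pmatrix} -10 \\ 9 \end{pmatrix}$$

Therefore, x = -10 and y = 9. Let's check our answer in the original system of equations.

$$\begin{aligned} -10 + 2(9) &= 8 \\ 3(-10) + 4(9) &= 6 \end{aligned}$$

In general, we can show for any $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$ with $ad - bc \neq 0$ that

$$A^{-1} = \frac{1}{ad - bc}\begin{pmatrix} d & -b \\ -c & a \end{pmatrix}.$$

Find $A^{-1}$ if $A = \begin{pmatrix} -1 & 2 \\ 4 & -9 \end{pmatrix}$.

$$A^{-1} = \frac{1}{9 - 8}\begin{pmatrix} -9 & -2 \\ -4 & -1 \end{pmatrix} = \begin{pmatrix} -9 & -2 \\ -4 & -1 \end{pmatrix}$$

Verify:

$$\begin{pmatrix} -9 & -2 \\ -4 & -1 \end{pmatrix}\begin{pmatrix} -1 & 2 \\ 4 & -9 \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}.$$

Solve the following system using inverse matrices:

$$\begin{aligned} 2x - 3y + 4z &= 14 \\ 3x - 2y + 2z &= 12 \\ 4x + 5y - 5z &= 16 \end{aligned}$$

First, we write the system of equations as the matrix equation $AX = B$, where

$$A = \begin{pmatrix} 2 & -3 & 4 \\ 3 & -2 & 2 \\ 4 & 5 & -5 \end{pmatrix}, \quad X = \begin{pmatrix} x \\ y \\ z \end{pmatrix}, \quad\text{and}\quad B = \begin{pmatrix} 14 \\ 12 \\ 16 \end{pmatrix}.$$

To find X, we must find $A^{-1}B$, where $A^{-1}$ is the inverse matrix of A. We can find $A^{-1}$ manually as we did earlier, or we can use technology (e.g., a graphing calculator) to find

$$A^{-1} = \begin{pmatrix} 0 & \tfrac{5}{23} & \tfrac{2}{23} \\ 1 & -\tfrac{26}{23} & \tfrac{8}{23} \\ 1 & -\tfrac{22}{23} & \tfrac{5}{23} \end{pmatrix}.$$

We can multiply

$$A^{-1}B = \begin{pmatrix} 0 & \tfrac{5}{23} & \tfrac{2}{23} \\ 1 & -\tfrac{26}{23} & \tfrac{8}{23} \\ 1 & -\tfrac{22}{23} & \tfrac{5}{23} \end{pmatrix}\begin{pmatrix} 14 \\ 12 \\ 16 \end{pmatrix} = \begin{pmatrix} 4 \\ 6 \\ 6 \end{pmatrix}.$$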
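The two inverse-matrix computations above can be checked by machine. The following is a minimal sketch in Python using exact fractions; the helper names `inverse_2x2` and `mat_vec` are made up for this illustration, and the 3 × 3 inverse is simply typed in from the text rather than computed.

```python
from fractions import Fraction


def inverse_2x2(m):
    """Invert a 2 x 2 matrix with the formula (1 / (ad - bc)) [[d, -b], [-c, a]]."""
    (a, b), (c, d) = m
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix has no inverse (ad - bc = 0)")
    return [[d / det, -b / det], [-c / det, a / det]]


def mat_vec(m, v):
    """Multiply a matrix by a column vector: one inner product per row."""
    return [sum(Fraction(a) * b for a, b in zip(row, v)) for row in m]


# 2 x 2 system: x + 2y = 8, 3x + 4y = 6
xy = mat_vec(inverse_2x2([[1, 2], [3, 4]]), [8, 6])

# 3 x 3 system, using the inverse quoted in the text
A = [[2, -3, 4], [3, -2, 2], [4, 5, -5]]
A_inv = [
    [0, Fraction(5, 23), Fraction(2, 23)],
    [1, Fraction(-26, 23), Fraction(8, 23)],
    [1, Fraction(-22, 23), Fraction(5, 23)],
]
B = [14, 12, 16]
xyz = mat_vec(A_inv, B)

print(xy, xyz)
print(mat_vec(A, xyz) == B)  # substituting back should reproduce B
```
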
Therefore, the solution of the system is x = 4, y = 6, and z = 6.

Determinants and Cramer's Rule

define determinant of a square matrix

As we have seen, inverses of some square matrices may be used to solve systems of linear equations. Can we determine a priori which square matrices have inverses? We can define a number, called the determinant, for any square matrix. We can think of the determinant as a function that takes a square matrix as input and returns a single number as output. If the determinant is equal to 0, then the square matrix does not have an inverse, i.e., it is singular. If the determinant is equal to any number but 0, then the square matrix does have an inverse and it is non-singular. We denote the determinant of a square matrix A using the notations $\det A$ or $|A|$.

determinant formula for 2 x 2 matrices

The determinant of a $2 \times 2$ matrix $\begin{pmatrix} a & b \\ c & d \end{pmatrix}$ is defined as

$$\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc.$$

We see that the formula subtracts the product of the minor diagonal from the product of the major diagonal.

Compute $\begin{vmatrix} -1 & 5 \\ -2 & 3 \end{vmatrix}$.

$$\begin{vmatrix} -1 & 5 \\ -2 & 3 \end{vmatrix} = (-1 \cdot 3) - (-2 \cdot 5) = -3 - (-10) = 7.$$

determinant formula for 3 x 3 matrices and larger

Computing determinants for larger square matrices becomes more cumbersome. For example, the determinant of a $3 \times 3$ matrix $\begin{pmatrix} a_1 & a_2 & a_3 \\ a_4 & a_5 & a_6 \\ a_7 & a_8 & a_9 \end{pmatrix}$ is defined as

$$\begin{vmatrix} a_1 & a_2 & a_3 \\ a_4 & a_5 & a_6 \\ a_7 & a_8 & a_9 \end{vmatrix} = a_1\begin{vmatrix} a_5 & a_6 \\ a_8 & a_9 \end{vmatrix} - a_2\begin{vmatrix} a_4 & a_6 \\ a_7 & a_9 \end{vmatrix} + a_3\begin{vmatrix} a_4 & a_5 \\ a_7 & a_8 \end{vmatrix}.$$

This sum of products of entries and determinants of smaller ($2 \times 2$) matrices is called the minor and co-factor expansion of the determinant along the first row. The minor of an entry is the determinant formed by deleting the row and column that contain the entry. The co-factor of an entry is its minor with a sign attached, and the signs alternate (+, −, +) along the row. Each entry is multiplied by its co-factor.

Compute $\begin{vmatrix} 1 & 2 & -1 \\ 2 & -1 & 1 \\ 4 & 0 & 2 \end{vmatrix}$.

$$\begin{aligned} \begin{vmatrix} 1 & 2 & -1 \\ 2 & -1 & 1 \\ 4 & 0 & 2 \end{vmatrix} &= 1\begin{vmatrix} -1 & 1 \\ 0 & 2 \end{vmatrix} - 2\begin{vmatrix} 2 & 1 \\ 4 & 2 \end{vmatrix} + (-1)\begin{vmatrix} 2 & -1 \\ 4 & 0 \end{vmatrix} \\ &= 1(-2 - 0) - 2(4 - 4) - 1(0 - (-4)) \\ &= 1(-2) - 2(0) - 1(4) = -2 - 0 - 4 = -6. \end{aligned}$$
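The first-row expansion above translates directly into a short recursive function. This is a sketch only: the names `minor_matrix` and `det` are chosen for this illustration, and the recursion is meant to mirror the hand method for small matrices, not to be an efficient way to compute large determinants.

```python
def minor_matrix(m, i, j):
    """Return m with row i and column j deleted."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]


def det(m):
    """Determinant by minor and co-factor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    if len(m) == 2:
        return m[0][0] * m[1][1] - m[0][1] * m[1][0]  # ad - bc
    # signs alternate +, -, +, ... across the first row
    return sum(
        (-1) ** j * m[0][j] * det(minor_matrix(m, 0, j))
        for j in range(len(m))
    )


print(det([[-1, 5], [-2, 3]]))
print(det([[1, 2, -1], [2, -1, 1], [4, 0, 2]]))
```
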
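We can choose any column or row to expand. Sometimes it is helpful to choose a row or column with a zero, e.g., row 3.

$$\begin{vmatrix} 1 & 2 & -1 \\ 2 & -1 & 1 \\ 4 & 0 & 2 \end{vmatrix} = 4\begin{vmatrix} 2 & -1 \\ -1 & 1 \end{vmatrix} - 0 + 2\begin{vmatrix} 1 & 2 \\ 2 & -1 \end{vmatrix} = 4(2 - 1) + 2(-1 - 4) = 4(1) + 2(-5) = 4 - 10 = -6.$$

rules for manipulating determinants

There are three rules for manipulating determinants that make computations easier. These rules are listed on p. 642.

Compute $\begin{vmatrix} 5 & 10 & 15 \\ 1 & 2 & 3 \\ -9 & 11 & 7 \end{vmatrix}$. We can factor a 5 out of the first row (Rule 1) to obtain

$$\begin{vmatrix} 5 & 10 & 15 \\ 1 & 2 & 3 \\ -9 & 11 & 7 \end{vmatrix} = 5\begin{vmatrix} 1 & 2 & 3 \\ 1 & 2 & 3 \\ -9 & 11 & 7 \end{vmatrix}.$$

We can subtract the first row from the second (Rule 2) to obtain

$$5\begin{vmatrix} 1 & 2 & 3 \\ 1 & 2 & 3 \\ -9 & 11 & 7 \end{vmatrix} = 5\begin{vmatrix} 1 & 2 & 3 \\ 0 & 0 & 0 \\ -9 & 11 & 7 \end{vmatrix}.$$

What would happen if we expanded on the second row? Every term in the expansion would be multiplied by 0, so the determinant would be equal to 0.

Cramer's rule for solving a system of linear equations

Cramer's rule allows us to solve some systems of linear equations using determinants. See Cramer's Rule on p. 645 and its proof on p. 646.

Use Cramer's Rule to solve the system of equations

$$\begin{aligned} 3x + 4y - z &= 5 \\ x - 3y + 2z &= 2 \\ 5x - 6z &= -7 \end{aligned}$$

First, we write

$$D = \begin{vmatrix} 3 & 4 & -1 \\ 1 & -3 & 2 \\ 5 & 0 & -6 \end{vmatrix}, \quad D_x = \begin{vmatrix} 5 & 4 & -1 \\ 2 & -3 & 2 \\ -7 & 0 & -6 \end{vmatrix}, \quad D_y = \begin{vmatrix} 3 & 5 & -1 \\ 1 & 2 & 2 \\ 5 & -7 & -6 \end{vmatrix}, \quad D_z = \begin{vmatrix} 3 & 4 & 5 \\ 1 & -3 & 2 \\ 5 & 0 & -7 \end{vmatrix}.$$

Here D is the determinant of the coefficient matrix, and $D_x$, $D_y$, $D_z$ are obtained from D by replacing the x, y, or z column with the column of constants. Then, we compute the values of each determinant: $D = 103$, $D_x = 103$, $D_y = 103$, $D_z = 206$. Lastly, we solve for x, y, and z:

$$x = \frac{D_x}{D} = \frac{103}{103} = 1, \quad y = \frac{D_y}{D} = \frac{103}{103} = 1, \quad\text{and}\quad z = \frac{D_z}{D} = \frac{206}{103} = 2.$$

Therefore, the solution is $(1, 1, 2)$. Verify that $(1, 1, 2)$ is a solution.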
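As a cross-check of the Cramer's Rule example, here is a minimal Python sketch for 3 × 3 systems. The function names `det3` and `cramer` are made up for this illustration; `det3` is just the first-row expansion written out, and the solver raises an error when D = 0, the case where Cramer's Rule does not apply.

```python
from fractions import Fraction


def det3(m):
    """3 x 3 determinant by expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def cramer(coeffs, consts):
    """Solve a 3 x 3 system with integer entries by Cramer's Rule."""
    d = det3(coeffs)
    if d == 0:
        raise ValueError("D = 0: Cramer's Rule does not apply")
    solution = []
    for col in range(3):
        # replace one column of the coefficient matrix with the constants
        replaced = [
            row[:col] + [consts[r]] + row[col + 1:]
            for r, row in enumerate(coeffs)
        ]
        solution.append(Fraction(det3(replaced), d))
    return solution


coeffs = [[3, 4, -1], [1, -3, 2], [5, 0, -6]]
consts = [5, 2, -7]
print(det3(coeffs))
print(cramer(coeffs, consts))
```
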