SET, MERGE, UPDATE, or MODIFY?

advertisement

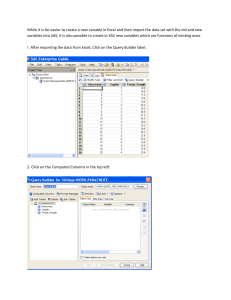

(And What About Indexing?)

Philip J. Weiss, Walsh America/PMSI

Abstract

Manipulating multiple data sets within the same DATA step is a common programming task. The recent introduction of new data manipulation techniques provides both increased flexibility and improved efficiency. Although these techniques are powerful and effective, their utility is weakened by potential misuse. Because the new statements process records using random access and dynamic WHERE processing, SAS programmers may shun the newer techniques and opt instead for more familiar and proven methods.

Consequently, tools are needed to help determine better solutions. The objective of this paper is to provide two such tools. More specifically, the paper 1) compares and contrasts the new techniques with those previously available, and 2) develops decision-making criteria for choosing an appropriate data manipulation technique, with efficiency as a guiding principle.

Introduction

Several new techniques are now available for manipulating data in the DATA step with Version 6.07. They consist of 1) a new SET option, specifically the KEY= option on the SET statement, 2) the MODIFY statement, and 3) the capability to create indexes in the DATA step. The addition of these techniques has introduced confusion as to which technique is best suited for a specific application.

The increase in flexibility has ultimately brought with it more complexity. The programmer now has to ask a more involved series of questions before choosing one of the available techniques over another. By not taking advantage of the new capabilities, and by performing data manipulation in old ways, the programmer risks degrading computer resource efficiency. It is also possible to misuse or misapply the new techniques in situations where alternate programming solutions are actually required.

Standard File Comparison Techniques

For the most part, older versions of SAS provided a smaller collection of statements for combining and comparing data sets than is now available. The basic DATA step tool kit, if it can be so defined, consisted primarily of the SET, MERGE, and UPDATE statements, which are used to combine SAS data sets in a variety of ways.

Under earlier versions of SAS, the SET statement had basically three uses. One could a) concatenate two or more data sets, b) interleave records from one data set with records from another data set, and c) combine records from different data sets by using more than one SET statement in the same DATA step. These capabilities still exist.

The MERGE statement has not changed syntactically from earlier to later SAS versions and is still used to join records from two or more SAS data sets into a single observation. A MERGE statement is usually accompanied by a BY statement to combine or interleave records in a specific order.

The UPDATE statement replaces variable values on a master (or primary) file with record values from what is called a transaction file. This occurs through a comparison or merging of records based on the variables named in the BY statement (a BY statement must be used). In addition, new records can be added (i.e., appended). One constraint is that the master data set cannot contain duplicate records with respect to the variables in the BY statement. A new SAS data set is always created as output.

Enhancements to Version 6.07

New to Version 6.07 are several techniques that significantly extend the capabilities of the DATA step. They are the MODIFY statement, the KEY= option on the SET statement, and the ability to create indexes during the DATA step with the INDEX= option. All of these features take advantage of the new ability to read and modify records using random, or direct, access to the data.

The MODIFY statement is new to Version 6.07 and can be used in a number of different ways.
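As a point of reference for the enhancements discussed next, the standard UPDATE technique described above can be sketched as follows. This is a minimal illustration and not code from the original paper: the data set names MASTER, TRANS, and NEWMAST, the variables ID and ZIP, and the sample values are all hypothetical.

```sas
/* Hypothetical master and transaction data (illustrative names and values). */
data master;
  input id $ zip $;
  datalines;
A01 10001
A02 10002
A03 10003
;
run;

data trans;
  input id $ zip $;
  datalines;
A02 20002
A04 20004
;
run;

/* UPDATE needs both inputs sorted by the BY variable, and the   */
/* master must not contain duplicate BY values.                  */
proc sort data=master; by id; run;
proc sort data=trans;  by id; run;

/* UPDATE always writes a new data set (here NEWMAST);           */
/* MASTER itself is not changed.                                 */
data newmast;
  update master trans;
  by id;
run;

proc print data=newmast;
run;
```

With these sample values, NEWMAST should hold A01 and A03 unchanged, A02 with the ZIP value taken from TRANS, and A04 added as a new record.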
As with the UPDATE statement, the general purpose of the MODIFY statement is to update or replace variable values in a master file with those in a transaction file. Unlike the UPDATE statement, however, the MODIFY statement 1) can be used without a BY statement, 2) can have duplicate values on the master data set, 3) does not require the data sets to be in sorted order if a BY statement is used, 4) allows the programmer to delete observations from, and append new observations to, the master data set, and 5) updates the SAS data set in place instead of making another copy, thus potentially saving disk or work space.

Processing differences are also apparent between the two statements. In the absence of an explicit WHERE clause, the UPDATE statement matches records based on the variables named in the BY statement; both data sets must be in sorted order.

In the past, it was fairly easy to determine which data manipulation technique to use when combining or comparing records from two different data sets.

The methods by which one can index a data set have changed dramatically as new versions of Base SAS have been introduced. From a machine/CPU efficiency perspective, the process of indexing a data set has become easier and more cost effective. Base SAS Version 6.06 allowed batch users to create indexes only through PROC DATASETS or PROC SQL. In addition to these methods, SAS Version 6.07 allows the programmer to index a data set as it is created from an input (or external) file in the DATA step, using the INDEX= data set option. Index creation is accomplished by including INDEX= within parentheses after the data set name. An examination of the INDEX= data set option shows that either simple or composite (complex) indexes can be created, the nature of which is determined by the number of variables that follow the INDEX= option (in separate parentheses; see Example 1).
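The INDEX= data set option described above can be sketched as follows. This is an illustrative sketch only: the data set names A1, A1C, and A1B, the index name SPECMENU, and the sample values are hypothetical, and in-stream data stand in for the external file used in the paper's example.

```sas
/* Simple index on one variable, built while the data set is written. */
data work.a1(index=(spec));
  input menum $ spec $ oldzip $;
  datalines;
M001 CAR 10001
M002 DER 10002
M003 NEU 10003
;
run;

/* Composite index: an index name, then the key variables in      */
/* their own set of parentheses.                                  */
data work.a1c(index=(specmenu=(spec menum)));
  set work.a1;
run;

/* Simple and composite indexes can be requested together. */
data work.a1b(index=(spec specmenu=(spec menum)));
  set work.a1;
run;

/* PROC CONTENTS reports which indexes exist on the data set. */
proc contents data=work.a1b;
run;
```

The simple form is enough for the single-variable KEY= lookups discussed in this paper; the composite form is shown only to illustrate the separate parentheses mentioned in the text.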
Once an index has been successfully created, it can be used to provide non-sequential access to observations in the indexed data set. Of greater importance, however, is the use of indexes for comparing or updating observations from one data set with matching observations of another. One new technique for data set comparison is available through the KEY= option on either the SET or MODIFY statement.

For both the SET and MODIFY statements, data are accessed randomly according to the value of the key (index) variable for that observation. Syntactically, either statement (SET or MODIFY) containing a KEY= option must be preceded by a SET statement. If no index has been created, or an index exists on a variable other than the one listed in the KEY= option, an error message is issued. Even though the SET and MODIFY statements appear similar to each other when the KEY= option is considered (see Example 1), several significant differences do exist.

The MODIFY statement, in contrast to UPDATE, offers identical record-matching functionality through the inclusion of a BY statement, but no preliminary sorting is necessary. While this process can be CPU intensive, either the master data set can be indexed or both the master and the transaction file can be sorted to reduce system processing overhead (see Beatrous and Armstrong, 1991, for indexing guidelines).

The increased processing flexibility provided by the MODIFY statement (when compared to the UPDATE statement) creates situations where there is a greater chance for the programmer to produce erroneous output. When two data sets are being compared using the MODIFY statement, it is imperative that the programmer have a thorough understanding of the relationship between the two SAS files to avoid potential problems. This includes knowing whether the transaction data set contains duplicates or observations not contained in the master data set, and whether the master file contains duplicate observations.
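The BY-group form of MODIFY described above can be sketched as follows. This is a minimal sketch under stated assumptions: the names MASTER, TRANS, ID, and ZIP and the sample values are hypothetical, neither data set is sorted or indexed, and every transaction record is assumed to have a matching master record.

```sas
/* Hypothetical, deliberately unsorted master data. */
data master;
  input id $ zip $;
  datalines;
A03 10003
A01 10001
A02 10002
;
run;

/* Hypothetical transactions, also unsorted; each ID exists in MASTER. */
data trans;
  input id $ zip $;
  datalines;
A02 20002
A01 20001
;
run;

/* MODIFY updates MASTER in place: no sort step and no second copy.   */
/* Without an index, each transaction is located in the master by     */
/* dynamic WHERE processing on the BY variable.                       */
data master;
  modify master trans;
  by id;
run;

proc print data=master;
run;
```

With these values, A01 and A02 should carry the transaction ZIP values, A03 should be unchanged, and the observations should remain in their original physical order. A production step would normally also test the automatic variable _IORC_ to handle transactions that have no master counterpart, which is exactly the kind of file relationship the text warns about.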
Apart from some of the enhancements listed above, what the MODIFY statement holds in common with other Version 6.07 DATA step enhancements is the ability to access data directly or randomly (i.e., not sequentially). Either dynamic WHERE processing can be used (with a BY statement) or record processing can be handled via an index key (with KEY=). The new capability of controlling how records are processed brings with it a new responsibility on the part of the programmer to know when indexing is appropriate. Therefore, what follows is a discussion of the new index creation method available in the DATA step and the use of indexes with the MODIFY and SET statements.

Indexing

EXAMPLE 1 - KEY= option used with the MODIFY and SET statements

MODIFY with KEY=:

    /* Master data set, indexed on SPEC as it is created */
    DATA A1(INDEX=(SPEC));
      INFILE FILE1;
      INPUT @1  MENUM  $10.
            @37 SPEC   $3.
            @42 OLDZIP $5.;
    /* Transaction data set */
    DATA A2;
      INFILE FILE2;
      INPUT @10 SPEC   $3.
            @15 NEWZIP $5.;
    /* Each A2 record supplies the key used to locate a record in A1 */
    DATA A1;
      SET A2;
      MODIFY A1 KEY=SPEC;
      OLDZIP=NEWZIP;
    RUN;

SET with KEY=:

    /* Lookup data set, indexed on SPEC as it is created */
    DATA A1(INDEX=(SPEC));
      INFILE FILE1;
      INPUT @1  MENUM  $10.
            @37 SPEC   $3.
            @42 NEWZIP $5.;
    /* Primary data set */
    DATA A2;
      INFILE FILE2;
      INPUT @10 SPEC   $3.
            @15 OLDZIP $5.;
    DATA A3;
      SET A2;
      /* Blank literals sized to match $10. and $5. so the lookup */
      /* variables are not truncated                              */
      MENUM='          ';
      NEWZIP='     ';
      SET A1 KEY=SPEC;
      IF NEWZIP NOT EQ ' ' THEN OLDZIP=NEWZIP;
    RUN;

In the MODIFY example, data in the first SET statement (A2) acts as the transaction data set, which is used to perform operations on the master data set (A1). In the SET example, the reverse is true. There, the first SET statement contains the data (A2, called the primary data set) that will be modified and hence is somewhat equivalent to the master data set in the MODIFY example. Data in the second SET statement (A1, called the lookup data set) is used to add variables to the output data set (A3) when a match is made. The new variables are initialized to blanks (after an observation in the primary data set has been retrieved) because lookup table values are otherwise retained from the previous iteration when no match is found for the primary data set.

Processing Considerations

Given that the SAS tool kit for comparing and updating data sets has expanded so dramatically, the issue becomes one of determining which technique should be used for a particular application. The question of which technique is more appropriate really begins by asking, 'What needs to be done with the files?'. In the chart that follows (Chart 1), several generic tasks are listed along with the techniques that support them. As with the rest of this paper, this list is confined to techniques where two SAS data sets are being compared. While the list is not intended to be exhaustive, it serves to illustrate some meaningful differences and similarities between the techniques shown in the chart.

The chart serves to highlight the fact that several techniques can be used to perform essentially the same function. This is where the confusion may arise. For example, if the programmer wishes to add new variables from one data set to another, then it is unclear whether an UPDATE, a MERGE, a SET, or a SET with a KEY= option is mandated. Likewise, if one wishes to update or replace specific observations on one data set with another, all the listed techniques support that operation. This leads to doubts about which method should be used.

Specifically, the chart shows that combining indexing with the MODIFY statement (specifically with a KEY= option) does not meaningfully extend its data manipulation capabilities. Efficiency may, however, be improved. The SET statement, on the other hand, functions very differently when used in combination with a KEY= option. The SET statement actually loses some of its functionality when used with the KEY= option, while becoming more efficient at processing records.
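A variation on the SET example in Example 1 tests the automatic variable _IORC_ after the keyed read instead of blanking the lookup variables beforehand. This is a hedged sketch rather than the paper's method: it assumes _IORC_ is available in the release being used, it uses in-stream data with hypothetical values in place of the external files, and it assumes the primary data set contains no repeated key values.

```sas
/* Lookup data set, indexed on SPEC (hypothetical values). */
data a1(index=(spec));
  input menum $ spec $ newzip $;
  datalines;
M001 CAR 20001
M002 DER 20002
;
run;

/* Primary data set; NEU has no counterpart in the lookup data set. */
data a2;
  input spec $ oldzip $;
  datalines;
CAR 10001
NEU 10003
DER 10002
;
run;

data a3;
  set a2;
  set a1 key=spec;
  if _iorc_ = 0 then oldzip = newzip;   /* match found in A1 */
  else do;
    /* No match: clear values left over from the previous lookup */
    /* and reset the error flag raised by the failed read.       */
    menum   = ' ';
    newzip  = ' ';
    _error_ = 0;
  end;
run;

proc print data=a3;
run;
```

With these values, the CAR and DER records should pick up the new ZIP codes, while NEU should keep its original OLDZIP with blank lookup variables. Testing the return code makes the match/no-match branch explicit, at the cost of one extra statement per lookup.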
CHART 1 - Some SAS data set comparison techniques and their functionality

Techniques (rows): Update; Modify; Modify w/ KEY=; Merge; Set; Set w/ KEY=
Actions (columns): Update/Replace Variable Values; Add New or Missing Variables; Add New Records; Delete Records; Append/Concatenate Records; Interleave Records

Decision Criteria

In an effort to make the selection of an appropriate technique easier, a flow diagram is constructed based on specific decision-making criteria. Rectangles are process or action steps, while diamonds indicate decision branching processes. The object of this methodology is to begin with a collection of techniques and gradually to refine that collection until a smaller subset (or one) exists. The only rule to follow in using the diagram is that once a technique is dropped from consideration, it should not be re-added at lower stages of the decision-making process. Unfortunately, the decision-making process is not strictly hierarchical in nature, but it is organizable in terms of stages. This specific ordering has value in the sense that it poses questions that are critical to processing strategy and statement selection.

Basically, there are three separate parts, or stages, to the decision-making process:

1) Accurately determining the relationship(s) between the files. This involves correctly identifying the task that must occur on the master or primary data set, as well as analyzing the relative size of the master versus the transaction data set. An example would be deciding whether one is going to update or add records.

2) Determining the repetitive nature of the specific application. The number of times a particular data set will be used in successive operations is a good predictor of the kind and amount of computer resources that may be required.

3) Assessing potential incongruities between the file and the technique(s) under consideration. This means asking questions about the file parameters that may affect the usefulness or success of a particular statement.

The first part of the decision tree (Stage 1) involves selecting the primary function of the programming task, namely, to add records, change variable values, etc.

One method of identifying whether indexing can be used is to determine whether one of the two files is significantly larger (in terms of number of observations) than the other. While size is not in and of itself a determinant of indexing efficiency, there is a moderate degree of correspondence. Large, when used in this context, can also mean that one file has less than 50% of records in common with the other based on one variable or a combination of variables. If one of the files can be judged to be larger than the other, then randomly accessing the data may avoid having to process a significant number of the records contained in one or more of the files. Still, there needs to be some kind of CPU investment to create the index, but reuse of the same file may diminish the initial cost.

When processing data non-sequentially, the investment in computer resources occurs not at the comparison stage (as in a sequential MERGE), but during the actual index creation for one of the files. The savings occur in being able to index one of the SAS data sets, save it as a permanent SAS data set, and then reuse that indexed data set after the initial investment of constructing the index. Success of this strategy is predicated on the fact that the indexed file does not change very often.

Even though Stage 2 deals with potential resource use, the question of whether to index or not remains a central one. The key to quantifying resource use is to ask oneself how many times a data set may be used to update or modify records, especially considering subsequent processing.
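The reuse strategy described above (index once, store permanently, apply transactions repeatedly) might look like the following sketch. Everything named here is an assumption made for illustration: the library reference PERM and its path, the data set and variable names, and the sample values. It also assumes _IORC_ is available and that the transaction file has no repeated key values.

```sas
/* Hypothetical library location; point this at a real directory. */
libname perm '/hypothetical/path/to/library';

/* One-time investment: create the permanent master with its index. */
data perm.master(index=(spec));
  input menum $ spec $ oldzip $;
  datalines;
M001 CAR 10001
M002 DER 10002
M003 NEU 10003
;
run;

/* Transaction file for one processing cycle (XYZ has no master record). */
data work.trans;
  input spec $ newzip $;
  datalines;
DER 20002
XYZ 29999
;
run;

/* Every later cycle reuses the stored index: nothing is sorted, */
/* re-indexed, or copied.                                        */
data perm.master;
  set work.trans;
  modify perm.master key=spec;
  if _iorc_ = 0 then do;
    oldzip = newzip;
    replace;
  end;
  else _error_ = 0;   /* no matching master record: skip it */
run;
```

Because MODIFY updates the data set in place, the index stays with the file between cycles. Recreating the master with a SET or MERGE step would write a new data set without the index, and the indexing cost would have to be paid again.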
The previous chart provides a starting point for identifying a set of manipulation techniques for consideration. One of the first questions should be whether or not the data set comparison process can be done with the aid of indexing; the relative size of the two files is one indicator.

Despite the fact that indexing can be done in the DATA step without passing the data to a PROC, the cost (or investment) required to index a data set will exceed the CPU necessary to process the data once through sequential methods (including sorting). Therefore, if CPU is a concern and file comparison will not occur on a repetitive basis, then sequential processing should be used.

The MODIFY statement, when accompanied by a BY statement, uses dynamic WHERE processing if no index has been created and will therefore use more CPU than sequential processing methods. This is especially true if a large number of observations are involved in the modification process. In those cases where MODIFY has to select only a small(er) portion of the overall data set (somewhere less than 50%), however, it can actually be more efficient. If more than 50% of the observations are to be modified, steps should be taken to reduce the amount of overhead by sorting the data sets prior to performing the merge, or by indexing the master data set.

If disk space or storage is restricted, then it is desirable to reduce the number of data sets being created in the program. The MODIFY statement works well in this scenario because it modifies the data set 'in place'; MERGE and SET steps can also be coded to overwrite one of the data sets they read.

The final stage (Stage 3) of the decision-making process involves determining whether the proposed task can be successfully processed using a particular technique. Various questions need to be posed regarding how that technique expects records to relate to one another between the files. Also important is how the proposed technique processes the records.

The MODIFY technique, for example, expects that transaction records have master file counterparts based on specific variable values. Transactions are defined literally (and logically) as successor records. Therefore, the SAS System treats transaction records as though they have a parent assignment to a master record. When two data sets are listed in a MODIFY statement and one is not a subset of the other, an error message is issued regarding the presumed inconsistency.

The issue of duplicates illustrates the last point. Integral to using one technique over another is understanding how that technique processes the records from each of the two files. In DATA step file comparison, duplicates are treated differently depending on whether sequential or non-sequential data access methods are used. Depending on the desired output and/or the processing that must occur, certain techniques can give unwanted or unanticipated results.

The final step, examining the remaining techniques, is left to programmer preference.

[Decision Flow Diagram: SAS Data Set Comparison, Stages 1 to 3]

Conclusions

From the comparison between standard and new techniques and from the decision-making analysis presented here, it is clear that the new collection of file comparison techniques has its greatest utility in specific applications. From a programming point of view, it behooves the programmer to know what those application scenarios are, as well as what constraints are imposed on certain techniques. By structuring and formalizing the decision-making process, the programmer is less apt to make simple errors and will spend less time trying to figure out exactly which technique to use. The previous analysis also gives the programmer a platform from which more detailed investigations into specific techniques can be launched. As refinements in one's understanding take place and unique situations are not only encountered but also accounted for, the decision-making process can be expanded. It might even be the case that repetition and/or experience ingrains these ideas so firmly upon oneself that diagrams and articles may never again be consulted. In the meantime....

References

SAS Institute Inc. (1990), SAS Language: Reference, Version 6, First Edition, Cary, N.C.: SAS Institute Inc.

SAS Institute Inc. (1991), The SAS System under MVS, Highlights of Release 6.07, Cary, N.C.: SAS Institute Inc.

SAS Institute Inc. (1992), SAS Technical Report P-222, Changes and Enhancements to Base SAS Software, Release 6.07, Cary, N.C.: SAS Institute Inc.

Beatrous, Steve and Karen Armstrong (1991), Effective Use of Indexes in the SAS System, Proceedings of the Sixteenth Annual SAS Users Group International Conference, 16, 605-614.

SAS is a registered trademark or trademark of SAS Institute Inc. in the USA and other countries. ® indicates USA registration.