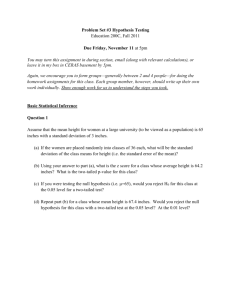

Study Guide
