Local Invariant Feature Detectors: A Survey

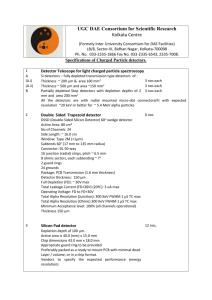
