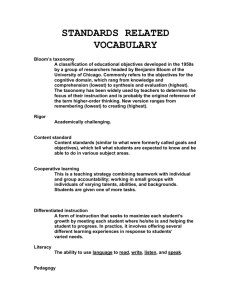

cognitive perspectives in psychology

advertisement