COGS 300 Lecture Notes 13W Term 2


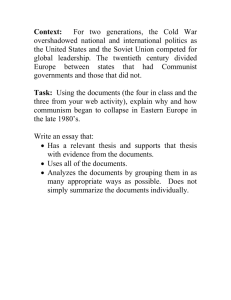

COGS 300 Notes
January 9, 2014

Research Methodology

Artificial Intelligence

Artificial Intelligence ... is the intelligent connection of perception to action

This definition of artificial intelligence is delightfully circular since the word “intelligence” appears twice. But this is OK. Biology is the study of living things. Biology advances even though there never has been (and probably never will be) an accepted medical, legal and ethical definition of what it means for something to be alive.

Consider the example of Helen Keller (1880–1968). In 1882, at 19 months of age, Keller caught a fever that was so fierce she nearly died. She survived but the fever left its mark: she could no longer see or hear. Because she could not hear she also found it very difficult to speak.

Her teacher was Anne Sullivan. Keller relied a great deal on Anne Sullivan, who accompanied her everywhere for almost fifty years. Without her faithful teacher Keller would probably have remained trapped within an isolated and confused world.

Given her life accomplishments, no one would suggest that Helen Keller was mentally disabled. But her perceptual disabilities made it very difficult for her to learn. That she did learn is a great credit to her and to Anne Sullivan.

It is fair to say that someone like Helen Keller is blind (i.e., unable to see), deaf (i.e., unable to hear) and mute (i.e., unable to speak). It is not fair to call her dumb. (The word “dumb” has a double meaning in English. According to Webster, it means “lacking the power of speech” and also “markedly lacking in intelligence: synonym stupid.”) Given this, referring to someone who cannot speak as “dumb” now is considered offensive. In the same way, I would argue that referring to machines that cannot see or hear as “dumb” also is offensive.

Intelligence requires both cognitive (i.e., mental) and perceptual abilities.
There are two main motivations for being interested in artificial intelligence, a scientific one and an engineering one.

Artificial Intelligence

[Diagram: Artificial Intelligence splits into two branches, “Understanding Intelligence” and “Making Machines Intelligent”]

Let’s look a bit deeper at computational perception, in this case computational vision. The following diagram helps to identify various sub-fields related to computational vision.

Computational Vision: Domains and Mappings

[Diagram: three domains, 3D WORLD, 2D IMAGE and PERCEPTION, linked by arrows numbered 1 to 4]

The four numbered arrows identify four sub-areas.

Arrow 1: Computer graphics deals with how the 3D world determines the 2D image. For objects of known shape, made of known materials, illuminated and viewed in a known way, computer graphics renders (i.e., synthesizes) the requisite image.

Arrow 2: Computer vision deals with the inverse problem, namely, given an image, what can we say about the shape and material composition of the objects in view and about the way they are illuminated and viewed?

Arrow 3: Is perception of the 3D world direct? Our diagram takes the view that visual perception of the 3D world is mediated by the 2D image. One can, of course, generate 2D images artificially that don’t correspond to any possible 3D scene and study how these images are perceived. This, indeed, is one of the things that perceptual psychologists do. Sometimes, “seeing is deceiving.”

Arrow 4: Different images can produce the identical perception. Examples of perceptual metamers are well known in areas such as lightness, colour, texture and motion. In general, arrow 4 deals with the question, “What is the equivalence class of images that give rise to identical perceptions?”

We end our brief discussion of research methodology with a look at five dimensions of R&D (applicable to any field). The distinctions we make are important, especially to a COGS student contemplating his or her future career.
R&D Dimensions

• pure / applied
• theoretical / experimental
• problem-driven / technique-driven
• analytic / synthetic
• science / engineering

Pendulum Analogy (in Computational Vision)

• image analysis: bottom up, statistical methods, model free
• scene analysis: top down, symbolic methods, model based

(Prefer) Spiral Analogy

[Diagram labels: knowledge pull, time, technology push, progress]

Bob’s Research

Bob’s research is in “embodied” computer vision. The goal is to provide computers with the ability to perceive the 3-D world visually and, in the context of robotics, the ability to act directly in the 3-D world in order to accomplish a given task or tasks.

Research Questions

For a specified task, the question becomes, “What information about the 3-D world is needed to accomplish the task?” This naturally leads to two further questions:

1. What limits our ability to acquire the needed information?
2. What could we do with the information if we had it?

Let’s look at a few research examples. We begin with (early) examples of work done in remote sensing for the St. Mary Lake, BC, forestry test site.

Given surface topography in the form of a Digital Terrain Model (DTM), one can produce a shaded relief map for any assumed direction of illumination. The standard used in cartography is illumination from the north west at an elevation of 45 degrees.

Question: Can you guess why this illumination direction is “standard”?

Example 1 shows the St. Mary Lake test site first under standard cartographic illumination.

Example 1: St. Mary Lake NW

Next, we show the St. Mary Lake test site rendered with illumination from the north east at an elevation of 45 degrees.

Example 1 (cont’d): St. Mary Lake NE

Note: In British Columbia, sun illumination never comes from the north west or the north east.

We can continue the fiction by producing a colour composite corresponding to a red sun from the north west and a cyan (i.e., blue+green) sun from the north east.

Example 1 (cont’d): St. Mary Lake NW (R) NE (BG)

NASA’s Landsat satellites are in sun-synchronous orbits. Their time of passage over most of British Columbia is approximately 9:30am PST. Thus, the direction of sun illumination is (roughly) constant, 2.5 hours before local solar noon, although the sun elevation does vary considerably between summer and winter.

Example 2 shows how comparison of images acquired at different times can be used for change detection. First, here is an actual image of St. Mary Lake acquired in September, 1974. The illumination direction is approximately from the south east.

Note: This illumination “from below” can result in a reversed perception of mountains and valleys.

Example 2: St. Mary Lake 1974

Now, here is an actual image of St. Mary Lake acquired in September, 1979. Again, the illumination direction is approximately from the south east.

Example 2 (cont’d): St. Mary Lake 1979

Change becomes apparent when we combine the two images into a colour composite.

Example 2 (cont’d): St. Mary Lake 1974(BG) 1979(R)

Fast forward now to recent work on sports broadcast video analysis.

Example 3: Okuma, Lu and Gupta

Here is a recent sample video sequence, processed using the combined thesis work of three LCI graduate students:

Video: 1000 frame broadcast hockey sequence
Credit: Kenji Okuma, Wei-Lwun Lu, Ankur Gupta
http://www.cs.ubc.ca/~little/mcGill_1k.mpg

Example 3 is a 1000 frame hockey broadcast video sequence processed using our (December, 2010) automated system. Ankur Gupta’s tool automatically registers each video frame to the (geometric) rink model. Kenji Okuma and Wei-Lwun Lu have an automatic tracker to detect players and to connect these detections into tracks.

The visualization tool maps the detected tracks onto the rink model shown in the upper left. Each player is a circle with a small track of the last n frames behind it. The rink model, including its circles, is also re-projected in red onto each video frame.
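The step of mapping tracked players onto the rink model can be sketched in a few lines. The notes do not say how the frame-to-rink registration is represented, so this sketch assumes it is available as a 3x3 planar homography `H` per frame; the names `frame_to_rink` and `PlayerTrail`, and all numeric values, are hypothetical and are not taken from the system described above.

```python
from collections import deque

import numpy as np


def frame_to_rink(H, points_xy):
    """Map Nx2 image points to rink coordinates with a 3x3 homography H."""
    pts = np.asarray(points_xy, dtype=float)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])  # shape (N, 3)
    mapped = homogeneous @ H.T
    return mapped[:, :2] / mapped[:, 2:3]  # divide out the projective scale


class PlayerTrail:
    """Rink-space positions of one player over the last n frames."""

    def __init__(self, n):
        # A bounded deque drops the oldest position automatically.
        self.positions = deque(maxlen=n)

    def update(self, H, image_xy):
        rink_xy = frame_to_rink(H, [image_xy])[0]
        self.positions.append(tuple(rink_xy))
        return rink_xy


# Assumed registration (illustration only): scale pixels by 0.1, shift by (5, 2).
H = np.array([[0.1, 0.0, 5.0],
              [0.0, 0.1, 2.0],
              [0.0, 0.0, 1.0]])

trail = PlayerTrail(n=3)
for image_xy in [(100, 200), (110, 200), (120, 210), (130, 220)]:
    trail.update(H, image_xy)

print(list(trail.positions))  # only the 3 most recent rink positions remain
```

With a broadcast camera that pans, tilts and zooms, `H` would differ from frame to frame, which is why `update` takes it as an argument rather than storing it once.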
Tsinko 2010

Egor Tsinko’s 2010 UBC M.Sc thesis, “Background Subtraction with Pan/Tilt Camera,” extended three standard background subtraction methods to accommodate a moving, pan/tilt camera.

Example 3: Background Subtraction with Pan/Tilt Camera

Egor Tsinko’s 2010 UBC M.Sc thesis explored background subtraction with a (moving) pan/tilt camera. See the web site http://www.cs.ubc.ca/nest/lci/thesis/etsinko/ for an on-line (PDF) copy of the thesis as well as for links demonstrating results applied to six different video sequences. (Details are described in the thesis.)

One test sequence is a hockey sequence we have used in other work:
http://www.cs.ubc.ca/nest/lci/thesis/etsinko/seq5_mog_image.mpg

Pixels tinted red are foreground. These correspond to moving objects and to newly seen parts of the scene not yet determined to be stationary and therefore part of the background.

Duan 2011

Example 4: Puck Location and Possession

Andrew Duan’s M.Sc thesis (August, 2011) integrates the determination of puck location and possession into our sports video analysis system.

Here’s what Andrew’s system does with the same hockey video sequence we saw before:

Video: 1000 frame broadcast hockey sequence
Credit: Xin Duan (Andrew)
http://www.cs.ubc.ca/~duanx/files/PuckTracking_Possession.wmv

Chen 2012

Picasso’s “Light Drawings”

Photo credit: Gjon Mili – Time & Life Pictures/Getty Images, 1949

Photographer Gjon Mili visited Pablo Picasso in the South of France in 1949. “Picasso gave Mili 15 minutes to try one experiment,” LIFE magazine wrote in its January 30, 1950, issue in which the centaur image above first appeared. Picasso was so fascinated by the results that he posed for five sessions. The photographs, known as Picasso’s “light drawings,” were made with a small electric light in a darkened room.
Read more at http://life.time.com/culture/picasso-drawing-with-light/#ixzz1ncwBwLex

Optical Motion Capture (Re-think Trade-offs)

Commercial optical motion capture systems:
• high speed, high resolution cameras
• short exposure times
• accurate shutter synchronization

Chen 2012, UBC Computer Science M.Sc thesis:
• inexpensive, consumer-grade cameras
• standard video rates
• long (i.e., constant) exposure times
• unsynchronized cameras

Marker positions become motion streaks (which simplifies the tracking problem even for fast object motion).

We are able to exploit the lack of camera synchronization to achieve temporal super-resolution.

Motion Streaks

One frame (left) and three successive frames (right), shown as RGB, from a spinning fan
Figure credit: Xing Chen

Demo: a 7-camera setup in ICCS X209
Figure credit: Xing Chen

Demo: Wired and Ready

A simplified human skeleton with 15 landmark points
Figure credit: Xing Chen

Demo: Human Motion Capture
• video: Arm motion
• video: Jumping in place (long streaks occur in this video)
• video: Marching forward and back

These videos play first at normal speed, then at 1/4 their original speed.

See the web page http://www.cs.ubc.ca/~woodham/ccd2013/ for links to an on-line (PDF) copy of Chen’s M.Sc thesis as well as links to a poster and supplementary videos presented at the 2nd IEEE International Workshop on Computational Cameras and Displays (CCD 2013), June 28, 2013.

Video credits: Xing Chen
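The remark about exploiting the lack of camera synchronization can be illustrated with a simple sampling argument. This is not Chen’s method, which these notes do not describe. It is a minimal sketch that treats every frame as an instantaneous sample (ignoring the long exposures) and assumes the cameras’ start offsets are known. The function name `merged_sample_times` and all numbers are hypothetical.

```python
import numpy as np


def merged_sample_times(frame_rate_hz, offsets_s, n_frames):
    """Return the sorted capture times pooled over all cameras."""
    period = 1.0 / frame_rate_hz
    frame_index = np.arange(n_frames)
    # Each camera samples at its own offset plus whole frame periods.
    times = [offset + frame_index * period for offset in offsets_s]
    return np.sort(np.concatenate(times))


# Assumed setup (illustration only): 3 cameras at 30 frames/s,
# each starting at a different, known offset in seconds.
frame_rate_hz = 30.0
offsets_s = [0.000, 0.011, 0.022]
times = merged_sample_times(frame_rate_hz, offsets_s, n_frames=4)

single_camera_gap = 1.0 / frame_rate_hz
largest_merged_gap = np.max(np.diff(times))
print(f"one camera:   a sample every {single_camera_gap * 1000:.1f} ms")
print(f"three merged: largest gap {largest_merged_gap * 1000:.1f} ms")
```

If the cameras were perfectly synchronized, all offsets would be equal and the pooled samples would be no denser in time than those of a single camera.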