BOSE-EINSTEIN CONDENSATION IN TWO-DIMENSIONAL TRAPS

A Dissertation Presented

by

JUAN PABLO FERNÁNDEZ

Submitted to the Graduate School of the

University of Massachusetts Amherst in partial fulfillment

of the requirements for the degree of

DOCTOR OF PHILOSOPHY

February 2004

Department of Physics

© Copyright by Juan Pablo Fernández 2004

All Rights Reserved

BOSE-EINSTEIN CONDENSATION IN TWO-DIMENSIONAL TRAPS

A Dissertation Presented

by

JUAN PABLO FERNÁNDEZ

Approved as to style and content by:

William J. Mullin, Chair

Suzan Edwards, Member

Robert B. Hallock, Member

Jonathan L. Machta, Member

Jonathan L. Machta, Department Chair

Department of Physics

To the memory of Gonzalo Cortés González,

César Pretol Castillo, and Jacob Ketchakeu

ACKNOWLEDGMENTS

In my very first semester at UMass I had the pleasure of attending Bill Mullin’s lectures on classical

mechanics, and from the beginning I noticed his excellence as a teacher, his willingness to never stop

learning, his kindness, and his modesty. That first impression was only strengthened during the

five years or so that we worked together. I will forever be grateful to Bill for always putting aside

whatever he was doing and listening to anything I had to say, for convincing me that my work was

valuable, and for teaching me a great deal of physics.

Having three outstanding scientists on one’s dissertation committee is an honor, but also, given

their example and their standards, an enormous challenge. My fear of disappointing Suzan Edwards,

Bob Hallock, and Jon Machta forced me to do my best, and for that alone they would deserve my

thanks. My debt to each of them, however, goes far beyond: Suzan introduced me to Matlab, the

programming language that I used to obtain the great majority of the results that I present here,

and Jon lent me the computers on which I ran those programs and wrote this thesis; Bob lent me

his support during difficult periods and gave me much valuable advice in his office across the hall.

David Hall made it possible for me to at last observe a condensate and witness the phenomenon

that I had been thinking about for so many years; besides, he generously provided me with the

very first figure of the thesis. Eugene Zaremba and Markus Holzmann kindly let me reproduce

figures from their published papers (Prof. Zaremba’s figure, regrettably, does not appear in this final

version, but can still be admired in Ref. 91); Prof. Holzmann, moreover, lent me the program he

wrote for Ref. 69, and by doing so helped me get seriously started on my research. I also benefited

greatly from conversations and correspondence with Panayotis Kevrekidis and Brandon van Zyl.

Werner Krauth wrote the Monte Carlo code that, with some adjustments, produced the results

that I report in the last chapter. Stefan Heinrichs taught me how to run, and extract information

from, Prof. Krauth’s program. Justin Herrmann and Tim Middelkoop allowed me to run the simulations on their computer cluster. The Department of Astronomy at UMass granted me access to

its computational facilities during the initial stages of this work.

Finally, I would like to thank my family, friends, and colleagues, in Amherst, in New Hampshire,

in Vermont, and in Colombia, for their warmth, their companionship, and their sense of humor.


ABSTRACT

BOSE-EINSTEIN CONDENSATION IN TWO-DIMENSIONAL TRAPS

FEBRUARY 2004

JUAN PABLO FERNÁNDEZ

Físico, UNIVERSIDAD DE LOS ANDES

Ph.D., UNIVERSITY OF MASSACHUSETTS AMHERST

Directed by: Professor William J. Mullin

The fact that two-dimensional interacting trapped systems do not undergo Bose-Einstein condensation (BEC) in the thermodynamic limit, though rigorously proved, is somewhat mysterious because

all relevant limiting cases (zero temperature, small atom numbers, noninteracting particles) suggest

otherwise. We study the possibility of condensation in finite trapped two-dimensional systems.

We first consider the ideal gas, which incorporates the inhomogeneity and finite size of experimental systems and can be solved exactly. A semiclassical self-consistent approximation gives us

a feel for the temperature scales; diagonalization of the one-body density matrix confirms that the

condensation is into a single state. We squeeze a three-dimensional system and see how it crosses

over into two dimensions.

Mean-field theory, our main tool for the study of interacting systems, prescribes coupled equations for the condensate and the thermal cloud: the condensate receives a full quantum-mechanical

treatment, while the noncondensate is described by different schemes of varying sophistication. We

analyze the T = 0 case and its approach to the thermodynamic limit, finding a criterion for the

dimensionality crossover and obtaining the coupling constant of the two-dimensional system that

results from squeezing a three-dimensional trap.

We next apply a semiclassical Hartree-Fock approximation to purely two-dimensional finite gases

and find that they can be described either with or without a condensate; this continues to be true

in the thermodynamic limit. The condensed solutions have a lower free energy at all temperatures


but neglect the presence of phonons within the system and cease to exist when we allow for this

possibility. The uncondensed solutions, in turn, are valid under a more rigorous scheme but have

consistency problems of their own.

Path-integral Monte Carlo simulations provide an essentially exact description of finite interacting

gases and enable us to study highly anisotropic systems at finite temperature. We find that our

two-dimensional Hartree-Fock solutions accurately mimic the surface density profile and predict

the condensate fraction of these systems; the equivalent interaction parameter is smaller than that

dictated by the T = 0 analysis.

We conclude that, in two-dimensional isotropic finite trapped systems and in highly compressed

three-dimensional gases, there is a phenomenon resembling a condensation into a single state.


TABLE OF CONTENTS

ACKNOWLEDGMENTS
ABSTRACT
LIST OF TABLES
LIST OF FIGURES

CHAPTER

1. INTRODUCTION
   1.1 The creation myth
   1.2 BEC, two dimensions, and traps
   1.3 This thesis

2. THE IDEAL TRAPPED BOSE GAS
   2.1 Introduction
   2.2 Exact results for the ideal gas
   2.3 The semiclassical approximation
   2.4 The off-diagonal elements of the density matrix
   2.5 Effects of anisotropy
   2.6 Summary

3. MEAN-FIELD THEORY OF INTERACTING SYSTEMS
   3.1 Introduction
   3.2 The effective interaction
   3.3 The Hartree-Fock-Bogoliubov equations
   3.4 The Gross-Pitaevskiĭ equation and the Thomas-Fermi limit
   3.5 Anisotropic systems at zero temperature
   3.6 Finite temperatures: The semiclassical approximation
   3.7 The interacting Bose gas in the Hartree-Fock approximation
   3.8 Summary

4. THE TWO-DIMENSIONAL BOSE-EINSTEIN CONDENSATE
   4.1 Introduction
   4.2 The model
      4.2.1 The Hartree-Fock-Bogoliubov equations
      4.2.2 The thermodynamic limit
      4.2.3 The free energy
   4.3 Numerical methods and results
   4.4 Conclusion
   4.5 Appendix: The Hartree-Fock excess energy
   4.6 Summary

5. PATH-INTEGRAL MONTE CARLO AND THE SQUEEZED INTERACTING BOSE GAS
   5.1 Introduction
   5.2 Path integrals in statistical mechanics
   5.3 The Monte Carlo method and the Metropolis algorithm
   5.4 An algorithm for PIMC simulations of trapped bosons
   5.5 The anisotropic interacting gas at finite temperature
   5.6 Summary

6. CONCLUSION

APPENDICES

A. MATHEMATICAL VADEMECUM
B. PERMUTATION CYCLES AND WICK’S THEOREM
C. SPECTRAL DIFFERENTIATION AND GAUSSIAN QUADRATURE
D. MATHEMATICAL DETAILS OF PIMC SIMULATIONS

BIBLIOGRAPHY


LIST OF TABLES

1.1 Summary of methods used and systems studied in this thesis
3.1 Typical temperatures and frequencies found in experiments


LIST OF FIGURES

1.1 Experimental realization of Bose-Einstein condensation
2.1 Density and number density of a condensed Bose gas
2.2 The growing condensate
2.3 Front view of Fig. 2.2
2.4 Condensate fraction of a two-dimensional ideal trapped Bose gas
2.5 Chemical potential of a two-dimensional ideal trapped Bose gas
2.6 Position of the noncondensate maximum
2.7 Condensate fraction of a two-dimensional ideal trapped Bose gas in the semiclassical approximation
2.8 Density and number density of a condensed 2D gas in the semiclassical approximation
2.9 Eigenvalues of the two-dimensional isotropic trapped-ideal-gas density matrix
2.10 The first seven radially symmetric eigenfunctions (including the ground state) of the two-dimensional isotropic harmonic oscillator
2.11 Condensate fraction of a three-dimensional ideal gas in a trap of increasing anisotropy
2.12 Surface density and surface number density of a three-dimensional ideal Bose gas in a trap of increasing anisotropy
3.1 Wavefunctions of two-dimensional isotropic trapped Bose gases at zero temperature
3.2 Typical forces exerted on an atom in a trap of increasing anisotropy
3.3 Condensate fraction of a three-dimensional Bose gas in the Hartree-Fock approximation
3.4 Failure of the Hartree-Fock approximation at high temperatures
3.5 Chemical potential of a three-dimensional interacting Bose gas in the Hartree-Fock approximation
3.6 Two views of the density of an interacting 3D trapped Bose gas at various temperatures
3.7 Number density of an interacting 3D trapped Bose gas at various temperatures
3.8 Densities and number densities of a two-dimensional isotropic ideal gas and the equivalent interacting system
3.9 Eigenvalues of the one-body density matrix of ideal and interacting two-dimensional trapped gases
3.10 Ground state and azimuthally symmetric excited eigenfunctions of a two-dimensional isotropic interacting trapped gas
4.1 Condensate and noncondensate density profiles of a two-dimensional Bose-Einstein gas
4.2 Total density profiles of a two-dimensional gas
4.3 Free energy per particle for the condensed and uncondensed solutions
5.1 The density matrix as a path integral
5.2 A simple game that illustrates the Metropolis algorithm
5.3 A variation on the game of Fig. 5.2 that shows the effect of choosing better a priori probabilities
5.4 Boxes and interactions
5.5 The two steps involved in moving part of a cycle
5.6 Moving a complete cycle
5.7 Interactions between particles of changing identity
5.8 Monte Carlo and exact number densities of an ideal two-dimensional trapped gas
5.9 Extracting the condensate number from a PIMC simulation
5.10 Surface number density of a condensed ideal Bose gas of varying anisotropy
5.11 Front view of the rightmost plot of Fig. 5.10
5.12 Finding the condensate fraction of an ideal Bose gas of varying anisotropy
5.13 Aspect ratios, obtained by Monte Carlo simulation, of condensed ideal and interacting Bose gases of increasing anisotropy
5.14 Monte Carlo and mean-field number densities of a three-dimensional trapped gas
5.15 Monte Carlo number surface densities of a three-dimensional trapped gas of increasing anisotropy
5.16 Monte Carlo number surface density and best-fit two-dimensional profile of an interacting three-dimensional Bose gas in a highly anisotropic trap
5.17 Condensate fraction of a quasi-2D interacting Bose gas
5.18 Occupation numbers and eigenfunctions of the one-body density matrix for a quasi-2D Bose gas
D.1 The Lévy construction


CHAPTER 1

INTRODUCTION

We were experienced enough to know that when results in experimental

physics seem too good to be true, they almost always are!

—E. A. Cornell and C. E. Wieman [1]

1.1 The creation myth

It happened, appropriately enough, in the early morning. At 6:04:29 a.m. on 29 September 1995, at

W. Ketterle’s laboratory in Building 26 of the Massachusetts Institute of Technology (MIT), postdoc

N. J. van Druten scrawled “BEC” at the very bottom of a page of a lab notebook and followed it

with an exclamation point that was taller than the acronym, and almost as wide. “BEC” stood for

Bose-Einstein condensation, the topic of this thesis, and Ketterle’s group had just created the first

condensate of sodium atoms [2,3].

For the preceding five years, Ketterle and his collaborators had been repeating and perfecting a

procedure that is now followed all over the world: they would i) produce a vapor of alkali atoms;

ii) load it into a magneto-optical trap (MOT); iii) cool the vapor to microkelvin temperatures using

lasers that, when tuned to an appropriate transition frequency, exerted a strong damping force on the

atoms; iv) transfer the resulting “optical molasses” into a magnetic trap; v) cool the molasses down

to nanokelvin temperatures by applying a radiofrequency field that, by inducing spin-flip transitions

into untrapped states, encouraged the most energetic atoms to evaporate and let the remaining

ones collide until they achieved equilibrium at a lower energy; and vi) turn off the trap and use a

CCD camera to photograph the expansion of the liberated cloud and capture it on a screen.¹

¹ This oversimplified account of the experiments does no justice to the ingenuity and effort required

to bring atoms to the densities and temperatures necessary for BEC by harnessing their complexity.

The interested reader may turn to Ref. 4, which includes a complete collection of references on the

experimental aspects of BEC, or to the Nobel lectures just cited. Our wording above is similar to

that used in a semipopular article [5], according to which “the possibility of seeing the long-sought

Bose-Einstein condensation” had been brought “tantalizingly close” by recent developments. The

article appeared three days after the first condensate was created.


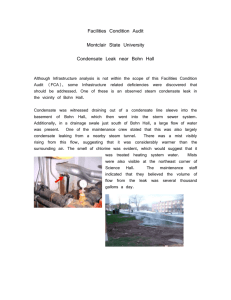

Figure 1.1. Experimental realization of Bose-Einstein condensation. Condensates like the one

shown here are routinely produced in D. S. Hall’s TOP trap at Amherst College. In Prof. Hall’s

words, “This particular condensate was released from our trap and allowed to expand for about

16 ms before we took the image, and probably contains somewhere around 300,000–400,000 atoms.

That image is 5 microns per pixel, and it came out of a cylindrically symmetric trap that was

90 Hz (axial) by 32 Hz (radial). The axial direction is up-down in the image—and is the direction

of greatest expansion upon release.”

Now Ketterle, van Druten, and the rest were seeing one of the pixellated, false-color images

that since that year have graced all sorts of science-related posters, calendars, and textbook covers:

out of a surface resembling a three-dimensional plot of a Gaussian there emerged a narrow, tall

peak located right at the center. Similar images taken at higher temperatures had shown only

the Gaussian, and lowering the temperature further yielded images where there was the peak and

little else. Moreover, the peak did not appear gradually, but, as hoped, emerged suddenly and

unambiguously at temperatures below a certain “critical” value Tc. The images obtained in the experiments rendered the momentum distribution of the expanding cloud of atoms; since the Fourier transform of a Gaussian is another Gaussian, they could also be interpreted as a depiction of the density of atoms in the cloud. The sharp, narrow peak showed that a high fraction of the

sodium atoms had very low momentum, or, equivalently, that a macroscopic number of atoms had

accumulated at the center of the trap; both of these interpretations were consistent with BEC having

taken place.

Van Druten’s exclamation point was followed, in turn, by a much smaller, timid question mark

enclosed in parentheses: it was of course possible that a faulty apparatus, experimental noise,


stress, lack of sleep, and wishful thinking were conspiring to deceive them, but Ketterle and his

collaborators had at least two reasons to be confident that what appeared on their screen was

indeed a condensate. The first one was trivial: they had already been beaten to the finish line.

On 5 June of that year a group led by E. A. Cornell and C. E. Wieman of the Joint Institute for

Laboratory Astrophysics (JILA) in Colorado had created a similar, though much smaller, condensate

of 2000 rubidium atoms [6] in a time-orbiting-potential (TOP) trap. (R. Hulet and his collaborators

at Rice University in Texas had also created a condensate using lithium [7], but their evidence was

inconclusive until months later.)

The second reason was a real one, and was based on the fact that the trapping potentials were,

in all cases, anisotropic: while a “classical” thermal cloud obeys the equipartition theorem and

expands isotropically when freed from an anisotropic trap, the Bose-Einstein condensate, despite

its macroscopic size, is a fully quantum-mechanical entity that obeys the uncertainty principle and,

when released, should expand faster along the direction in which it had been confined more tightly

(see Fig. 1.1). This is just what happened at MIT and had happened before at JILA.
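The uncertainty-principle argument can be made semi-quantitative in the simplest setting. The Python sketch below assumes an ideal (noninteracting) gas, the 90 Hz axial and 32 Hz radial frequencies quoted in the caption of Fig. 1.1, an ⁸⁷Rb mass, and a 200 nK thermal cloud; the mass and temperature are illustrative assumptions, not values reported with the figure. It compares the rms velocity of the oscillator ground state, $\sqrt{\hbar\omega/2m}$, along each axis with the equipartition value $\sqrt{k_B T/m}$ of a classical cloud. Because interactions dominate the expansion of real condensates, the absolute numbers are only indicative; what survives is the qualitative point that the ideal-condensate spread depends on the axis, with an axial-to-radial ratio $\sqrt{90/32} \approx 1.68$ independent of mass, while the thermal spread does not.

```python
import math

HBAR = 1.054571817e-34      # reduced Planck constant, J s
KB = 1.380649e-23           # Boltzmann constant, J/K
AMU = 1.66053906660e-27     # atomic mass unit, kg

def ground_state_momentum_width(mass, omega):
    """rms momentum of the 1D harmonic-oscillator ground state, sqrt(m * hbar * omega / 2)."""
    return math.sqrt(mass * HBAR * omega / 2.0)

def thermal_momentum_width(mass, temperature):
    """rms momentum of a classical cloud along any axis (equipartition), sqrt(m * kB * T)."""
    return math.sqrt(mass * KB * temperature)

# Assumptions for illustration only: trap frequencies from the caption of Fig. 1.1,
# an 87Rb atom, and a 200 nK thermal cloud. Interactions are ignored throughout.
mass = 86.909 * AMU
omegas = {"axial": 2 * math.pi * 90.0, "radial": 2 * math.pi * 32.0}
temperature = 200e-9

for axis, omega in omegas.items():
    # For a freely expanding ideal gas the momentum distribution is conserved,
    # so these rms velocities set the expansion rate along each axis.
    v_condensate = ground_state_momentum_width(mass, omega) / mass
    v_thermal = thermal_momentum_width(mass, temperature) / mass   # same for every axis
    print(f"{axis:>6}: ideal condensate {v_condensate * 1e3:.2f} mm/s, "
          f"thermal {v_thermal * 1e3:.2f} mm/s")

# Axial/radial ratio of the ideal condensate's momentum spread: sqrt(omega_axial / omega_radial).
aspect = (ground_state_momentum_width(mass, omegas["axial"])
          / ground_state_momentum_width(mass, omegas["radial"]))
print(f"axial/radial expansion ratio (ideal condensate): {aspect:.2f}")
```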

More than a year later, after Ketterle and his group had succeeded in imaging condensates nondestructively and observing them undergo the transition in situ, there came another breakthrough: the

group used a laser to gently cut a condensate in two, separated the pieces, put them back together,

and obtained a series of clean interference fringes [8] that showed that the atoms in the condensate

were all in the same quantum state, exactly as Einstein had predicted some 70 years before. There

remained no doubt that the Bose-Einstein condensate, until then a creature made out of ink, paper,

and mathematics, had finally been given physical form.

Some time before these events, but surely aware of their imminence, A. J. Leggett wrote a

paragraph that, in our view, goes a long way toward assessing their importance (the emphasis is

Leggett’s):

[T]he temperature, roughly 3 K, of the cosmic black-body radiation [ . . . ] is believed

to be the present temperature (in so far as one can be defined) of outer space, and

according to almost all cosmologies in current fashion the universe as a whole was never

cooler than this [ . . . ]. If this is correct, then when we cool bulk matter to temperatures

below the point marked we are in effect creating new physics which Nature herself has

never explored: indeed, if we exclude the possibility that on some planet of a distant star

alien life-forms are doing their own cryogenics, then the new phases of matter which we

create in the laboratory at low temperatures have never previously existed in the history

of the cosmos. [9]

What’s more, the JILA, MIT, and Rice experiments in a sense created a whole new field, removing

Bose-Einstein condensation from its niche somewhere between atomic physics and ⁴He studies and


putting it right at the forefront as a general-interest discipline that, in turn, has breathed life

into many new subfields and opened the possibility of illuminating some of the dark corners still

left in more-established ones. A macroscopic manifestation of quantum mechanics akin to laser

action, superfluidity, and superconductivity, BEC had been known for decades to be at least partly

responsible for these other phenomena; since 1995 it has been possible to extricate pure BEC behavior

from the other complications that characterize low-temperature systems and thus make further

progress toward their understanding.

Of great help in this endeavor is yet another astounding feature of the systems in which BEC was

finally created, namely their (in many respects) textbook-problem simplicity—for the most part, they

can be accurately described by ensembles of spinless point masses in perfect harmonic confinement

and affected by very simple interaction potentials—which makes them an excellent laboratory in

which to study the quantum-mechanical N-body problem using the methods that have been developed over time to treat other systems. Now, the particular characteristics of these systems—their

finite size, the Bose statistics they obey, and their inhomogeneity, among others—bring with them

a set of difficulties and subtleties that need to be dealt with.

This dissertation will use two standard many-body methods, mean-field theory and path-integral

Monte Carlo simulations, to approach and partially answer, in the context of these finite trapped

systems, a question almost as old as Einstein’s original prediction: can Bose-Einstein condensation

occur in two dimensions?

1.2 BEC, two dimensions, and traps

Predicted by Einstein [10,11] based on S. N. Bose’s [12] reinterpretation of Planck’s law of blackbody

radiation, Bose-Einstein condensation is a phase transition whereby, at the appropriate combination

of temperature and density, a system composed of bosons² will experience a sudden avalanche of

particles into its ground state, resulting in a macroscopic accumulation. This phase transition is a

consequence of the indistinguishability of the particles that compose the system, as can be seen from

the following simple model [13]: Suppose we have two balls that we can place in any of two boxes,

² Most textbooks and popular books and articles define bosons to be particles of integer spin. However, bosons were originally defined in terms of their statistical behavior, of which BEC is the fundamental characteristic at low temperatures. The two definitions agree by virtue of the spin-statistics theorem, which states that particles with half-integer spin cannot be bosons and particles with integer spin cannot be fermions. In any event, the integer-spin identification is adequate for our purposes, even though the “particles” that we will consider are far from elementary. For example, ⁸⁷Rb, in which BEC was first produced, consists of 124 spin-1/2 particles, an even number, so the atom as a whole has integer spin.


each of which can fit any number of balls (this last condition distinguishes Bose-Einstein statistics

from Fermi-Dirac statistics). If we initially make the balls distinguishable by painting one of them

white, we have four distinct ways

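$$|\bullet\circ\|\phantom{\bullet\circ}| \qquad |\circ\|\bullet| \qquad |\bullet\|\circ| \qquad |\phantom{\bullet\circ}\|\bullet\circ| \tag{1.1}$$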

of placing them in the boxes, of which two (or 50% of the total) have two balls in the same box. On

the other hand, if the balls are identical, we now have only three different ways

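$$|\bullet\bullet\|\phantom{\bullet\bullet}| \qquad |\bullet\|\bullet| \qquad |\phantom{\bullet\bullet}\|\bullet\bullet| \tag{1.2}$$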

of placing them in the boxes, of which two (or 67% of the total) have more than one ball in the same

box. The indistinguishability of the balls has enhanced their chances of occupying the same box. If

we identify the boxes with energy states and the balls with atoms, it follows that, as the temperature

of the system (and hence its average energy) decreases, the atoms will tend to congregate in the

state of minimum energy.
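The counting behind (1.1) and (1.2) is easy to automate. In the short Python sketch below (the function name is illustrative), every arrangement is given equal weight, which is the assumption underlying the argument; distinguishable balls are enumerated as ordered assignments of a box to each labeled ball, and identical balls as multisets of box labels. For two balls and two boxes it reproduces the 2-out-of-4 and 2-out-of-3 counts above; other arguments (for instance two balls in three boxes) show that the enhancement persists.

```python
from itertools import combinations_with_replacement, product

def crowded_arrangements(n_balls, n_boxes, identical):
    """Count equally weighted arrangements in which some box holds two or more balls.

    Returns the pair (crowded, total).
    """
    if identical:
        # Identical balls: an arrangement only records how many balls sit in each box,
        # i.e. it is a multiset of box labels.
        arrangements = list(combinations_with_replacement(range(n_boxes), n_balls))
    else:
        # Distinguishable balls: each labeled ball is assigned its own box.
        arrangements = list(product(range(n_boxes), repeat=n_balls))
    crowded = sum(1 for a in arrangements if len(set(a)) < n_balls)
    return crowded, len(arrangements)

for identical in (False, True):
    crowded, total = crowded_arrangements(2, 2, identical)
    label = "identical" if identical else "distinguishable"
    print(f"{label:>15}: {crowded}/{total} = {crowded / total:.0%}")
```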

As Bose and Einstein showed, this feature was implicit in the Bose-Einstein distribution function, a generalization of Planck’s blackbody distribution that prescribes the average number of

atoms, $\langle N_k\rangle$, that occupy a given energy state $E_k$ in a system at temperature $T$:

$$\langle N_k\rangle = \frac{1}{e^{\beta(E_k-\mu)}-1}; \tag{1.3}$$

here we have introduced the inverse temperature, $\beta \equiv 1/k_B T$, where $k_B$ is Boltzmann’s constant, and the chemical potential $\mu$, which ensures that the total number of particles $N$ is, on average, conserved:

$$\langle N\rangle = \sum_k \langle N_k\rangle. \tag{1.4}$$
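Equations (1.3) and (1.4) determine $\mu$ only implicitly, so in practice it is found numerically. The Python sketch below is an illustration under stated assumptions: the energy levels are taken to be those of a three-dimensional isotropic harmonic oscillator measured from the ground state, $E_n = n\hbar\omega$ with degeneracy $(n+1)(n+2)/2$, in units where $\hbar\omega = k_B = 1$, and the function names are hypothetical. Since all occupations must be positive, $\mu$ lies below $E_0 = 0$; there the total occupation grows monotonically with $\mu$ and diverges as $\mu \to E_0$, so a simple bisection suffices.

```python
import math

def total_number(mu, beta):
    """Eq. (1.4) with occupations (1.3) for oscillator shells E_n = n (units hbar*omega = kB = 1)."""
    total, n = 0.0, 0
    while True:
        x = beta * (n - mu)                   # beta * (E_n - mu) > 0 because mu < E_0 = 0
        if n > 0 and x > 60.0:                # remaining shells contribute negligibly
            break
        degeneracy = (n + 1) * (n + 2) // 2   # number of 3D oscillator states with energy n
        total += degeneracy / math.expm1(x)
        n += 1
    return total

def chemical_potential(n_particles, beta, iterations=200):
    """Bisection for the mu < 0 at which total_number(mu, beta) equals n_particles.

    Assumes n_particles is far below 1e12, the ground-state occupation at the upper bracket.
    """
    hi = -1e-12 / beta                        # just below E_0: ground-state term alone is ~1e12
    lo = -1.0 / beta
    while total_number(lo, beta) > n_particles:
        lo *= 2.0                             # total_number decreases as mu decreases
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if total_number(mid, beta) > n_particles:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

beta = 0.2                                    # kB T = 5 hbar*omega (illustrative choice)
mu = chemical_potential(1000, beta)
ground = 1.0 / math.expm1(-beta * mu)         # occupation of the ground state E_0 = 0
print(f"beta*mu = {beta * mu:.3e}, ground-state occupation = {ground:.1f}")
```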

Since the occupation numbers must all be positive, the chemical potential in (1.3) must be smaller than the ground-state energy: $\mu < E_0$. For a three-dimensional ideal gas in a box, where $E = p^2/2m$, we can in principle turn the sum (1.4) into an integral [14,15] to obtain

$$N = \frac{V}{(2\pi\hbar)^3}\int_0^\infty \frac{4\pi p^2\,dp}{e^{\beta(p^2/2m-\mu)}-1}, \tag{1.5}$$

in which case the chemical potential must always be negative, since the ground-state energy is now zero. The substitution $x \equiv \beta p^2/2m$ turns Eq. (1.5) into

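$$N = \frac{V}{(2\pi\hbar)^3}\,2\pi\left(\frac{2m}{\beta}\right)^{3/2}\int_0^\infty \frac{x^{1/2}\,dx}{e^{x-\beta\mu}-1} \equiv \frac{V}{\lambda_T^3}\,g_{3/2}(e^{\beta\mu}), \tag{1.6}$$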

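where we have used $\int_0^\infty x^{1/2}\,dx/(e^{x-\beta\mu}-1) = \Gamma(3/2)\,g_{3/2}(e^{\beta\mu})$, with $\Gamma(3/2) = \sqrt{\pi}/2$, and have introduced both the thermal de Broglie wavelength, $\lambda_T^2 \equiv 2\pi\hbar^2\beta/m \equiv 1/\Lambda_T$, and the Bose-Einstein integral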

$$g_{3/2}(x) \equiv \sum_{k=1}^{\infty} \frac{x^k}{k^{3/2}}, \tag{1.7}$$

whose definition and properties are reviewed in Appendix A. This implies the following general

relation to be obeyed by the number density, n ≡ N/V , of the Bose gas:

$$\lambda_T^3\, n = g_{3/2}(e^{\beta\mu}). \tag{1.8}$$
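The step from (1.6) to the series (1.7) rests on the identity $\int_0^\infty x^{1/2}\,dx/(z^{-1}e^{x}-1) = \Gamma(3/2)\,g_{3/2}(z)$ for the fugacity $z = e^{\beta\mu} < 1$; the factor $\Gamma(3/2) = \sqrt{\pi}/2$ is what combines with $2\pi(2m/\beta)^{3/2}/(2\pi\hbar)^3$ into $\lambda_T^{-3}$. The Python check below is a sketch, not production code (function names hypothetical): it compares the truncated series with a direct quadrature. The substitution $x = t^2$ removes the square-root behavior at the origin, and the plain trapezoidal rule is very accurate here because the resulting integrand is smooth, even in $t$, and decays like a Gaussian.

```python
import math

def bose_function(nu, z, terms=10_000):
    """g_nu(z) = sum_{k>=1} z^k / k^nu, Eq. (1.7); the series converges for 0 <= z < 1."""
    return sum(z**k / k**nu for k in range(1, terms + 1))

def occupation_integral(z, t_max=12.0, steps=24_000):
    """(2/sqrt(pi)) * int_0^inf x^(1/2) dx / (exp(x)/z - 1): the integral in (1.6) over Gamma(3/2).

    With x = t^2 the integrand becomes 2 t^2 / (exp(t^2)/z - 1), which is smooth and
    even in t, so the trapezoid rule converges very quickly.
    """
    h = t_max / steps
    total = 0.0
    for i in range(1, steps):          # both endpoint values are (numerically) zero
        t = i * h
        total += 2.0 * t * t / (math.exp(t * t) / z - 1.0)
    return 2.0 / math.sqrt(math.pi) * total * h

for z in (0.1, 0.5, 0.9):              # z = exp(beta*mu) is the fugacity
    print(f"z = {z}: series {bose_function(1.5, z):.10f}, "
          f"quadrature {occupation_integral(z):.10f}")
```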

As the temperature of the system decreases, β becomes larger, and so does the left-hand side of (1.8) at fixed density. The chemical potential must correspondingly increase to keep (1.8) satisfied, and eventually, at some temperature $T_c$, it becomes (infinitesimally smaller than) the ground-state energy of the system (zero in this case); it cannot increase any further without making the occupation numbers negative. The right-hand side of (1.8) then becomes $\zeta(3/2) \approx 2.612$, where $\zeta(z) = g_z(1) \equiv \sum_{k=1}^{\infty} k^{-z}$ is the Riemann zeta function, and we are left with Einstein’s criterion for condensation:

$$\frac{n_c}{(\Lambda_{T_c})^{3/2}} = \zeta(3/2) \approx 2.612. \tag{1.9}$$

An inconsistency then arises: the right-hand side of (1.8) can never exceed ζ(3/2), and yet there is nothing that prevents us in theory from fixing the temperature at a value lower than $T_c$ and increasing the density beyond $n_c$ by adding particles—especially since, with a vanishing chemical potential, it costs

no energy to do so. To resolve this inconsistency, Einstein postulated that any extra particles would

have to occupy the ground state, whose relative importance had been neglected when introducing

the continuum approximation (1.5), and force the system to condense at those lower temperatures;

BEC had been conceived.
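Einstein’s criterion (1.9) can be inverted to give the transition temperature of the homogeneous ideal gas, $k_B T_c = (2\pi\hbar^2/m)\,(n/\zeta(3/2))^{2/3}$. Below $T_c$ the excited states hold only their saturated population $V\zeta(3/2)/\lambda_T^3 \propto T^{3/2}$, so Einstein’s postulate gives a condensate fraction $N_0/N = 1 - (T/T_c)^{3/2}$. A minimal Python sketch; the ⁸⁷Rb mass and the density of $10^{20}\ \mathrm{m^{-3}}$ are illustrative assumptions, the constants are CODATA values, and the function names are hypothetical.

```python
import math

HBAR = 1.054571817e-34          # J s
KB = 1.380649e-23               # J/K
AMU = 1.66053906660e-27         # kg
ZETA_3_2 = 2.612375348685488    # Riemann zeta(3/2)

def thermal_wavelength(mass, temperature):
    """lambda_T = sqrt(2 pi hbar^2 / (m kB T))."""
    return math.sqrt(2 * math.pi * HBAR**2 / (mass * KB * temperature))

def critical_temperature(mass, density):
    """Solve lambda_Tc^3 * n = zeta(3/2), Eq. (1.9), for Tc."""
    return 2 * math.pi * HBAR**2 / (mass * KB) * (density / ZETA_3_2) ** (2 / 3)

def condensate_fraction(temperature, tc):
    """N0/N = 1 - (T/Tc)^(3/2) below Tc and zero above (thermodynamic limit)."""
    return max(0.0, 1.0 - (temperature / tc) ** 1.5)

mass = 86.909 * AMU             # assumed: 87Rb
density = 1e20                  # assumed: atoms per cubic metre
tc = critical_temperature(mass, density)
print(f"Tc = {tc * 1e9:.0f} nK")
for t_over_tc in (0.25, 0.5, 0.75, 1.0, 1.25):
    print(t_over_tc, round(condensate_fraction(t_over_tc * tc, tc), 3))
```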

The exact same analysis in two dimensions yields

$$\frac{n_c}{\Lambda_{T_c}} = \zeta(1), \tag{1.10}$$

and here we meet our dissertation topic: ζ(1), also known as the harmonic series, diverges, and

as a consequence this two-dimensional homogeneous ideal system can accommodate any number of

particles at any temperature without the need to invoke a condensation: there is no Bose-Einstein

condensation in two dimensions. (Or is there?)
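In two dimensions the integral can be done in closed form: the 2D analogue of (1.8) is $\lambda_T^2 n = g_1(e^{\beta\mu}) = -\ln(1 - e^{\beta\mu})$, which can be solved for the chemical potential at any density, $\beta\mu = \ln(1 - e^{-\lambda_T^2 n})$. This is finite and negative for every finite $n$, so nothing forces particles into the ground state. A minimal Python sketch (function name hypothetical), written in terms of the dimensionless phase-space density $\lambda_T^2 n$:

```python
import math

def beta_mu_2d(phase_space_density):
    """beta*mu of a homogeneous 2D ideal Bose gas, from lambda_T^2 n = -ln(1 - exp(beta*mu)).

    log1p keeps the result accurate even when exp(-lambda_T^2 n) is tiny.
    """
    return math.log1p(-math.exp(-phase_space_density))

for d in (0.1, 1.0, 10.0, 30.0, 100.0):
    # beta*mu approaches zero from below as the density grows, but never reaches it
    print(f"lambda_T^2 n = {d:6.1f}  ->  beta*mu = {beta_mu_2d(d):.3e}")
```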


Einstein accompanied his 1924 prediction of a condensation with a proposal of three systems

where it could be detected [16]: hydrogen, helium, and the electron gas. The third was ruled

out the next year with the introduction of Fermi-Dirac statistics.3 F. London’s observation [19]

that the λ point of 4 He occurs at a temperature close to Einstein’s Tc alerted physicists to the

importance of BEC in low-temperature systems and started a line of research that continues to

this day; however, the particular characteristics of helium—the low mass of its atoms and their

complicated interactions—make it a difficult system in which to study BEC directly.4

Research into the first system received a boost when it was realized [21] that atomic hydrogen

(kept that way by spin polarization) should remain in the gaseous phase at all temperatures. By

the mid-1980s, two rival groups, one led by D. Kleppner, T. J. Greytak, and D. E. Pritchard at MIT

and the other led by I. F. Silvera at Harvard University, had managed to stabilize and cool down

spin-polarized hydrogen (in a container, using standard low-temperature methods) to a combination

of density and temperature not far from the BEC régime [22]. These efforts literally ran into a

wall, however, when it was found that the atoms stuck to the walls of the container and recombined

into molecules; this was initially suppressed by heating the walls, but the improvement in density

thus attained turned out to be short-lived. As a solution the experimenters decided to enclose the

hydrogen atoms in magnetic traps.

Now, when we put an ideal Bose-Einstein gas in a (harmonic isotropic) trap, a similar analysis

to the one above leads to

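$$N = \frac{1}{(2\pi\hbar)^3}\int d^3x \int_0^\infty \frac{4\pi p^2\,dp}{e^{\beta(p^2/2m + m\omega^2 r^2/2 - \mu)} - 1} = \left(\frac{k_B T}{\hbar\omega}\right)^3 g_3(e^{\beta\mu}). \tag{1.11}$$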

The density now depends on the position:

$$\lambda_T^3\, n(r) = g_{3/2}\!\left(e^{\beta(\mu - m\omega^2 r^2/2)}\right); \tag{1.12}$$

if we evaluate this density at r = 0 we recover Einstein’s criterion (1.9) (to quote from Ref. 23, “the chief effect of the trap is merely to concentrate the particles to the density at which BEC commences”), and below the (higher) Tc that results any additional atoms pile up in the oscillator ground state.

3 There has been, however, an active search for BEC in a similar system, the exciton gas in semiconductors [17,18].

4 In early 2001, BEC was observed in a dilute gas of ⁴He in the 2³S₁ metastable state [20].

More interesting for our purposes is the result we obtain when we repeat the analysis

in two dimensions; we obtain

$$N = \left(\frac{k_B T}{\hbar\omega}\right)^2 g_2(e^{\beta\mu}), \tag{1.13}$$

and $g_2(x)$, like $g_{3/2}(x)$, is bounded in the limit µ → 0, which leaves open the possibility of a condensation in this system below a temperature $k_B T_c = \hbar\omega\sqrt{6N/\pi^2}$ [24]. (Note, however, that the system density once again involves $g_1(x)$, which diverges in this limit.) This possibility became even more attractive when it was realized that the critical temperature was proportional to √N in two dimensions, while in three it went as $N^{1/3}$ and was thus much lower; this fact will show up repeatedly in this thesis.
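A quick way to see the √N versus $N^{1/3}$ scaling is to set µ = 0 in (1.13) and (1.11) and solve for Tc. This uses $g_2(1) = \zeta(2) = \pi^2/6$ and $g_3(1) = \zeta(3)$. The Python sketch below does this in units of ħω/k_B. It is only the ideal-gas, large-N estimate and ignores finite-size corrections. The function names are hypothetical.

```python
import math

ZETA2 = math.pi ** 2 / 6          # g_2(1)
ZETA3 = 1.2020569031595942        # g_3(1), Apery's constant

def tc_2d(N):
    """k_B T_c/(hbar*omega) from Eq. (1.13) at mu = 0: N = (k_B T_c/hbar w)^2 zeta(2)."""
    return math.sqrt(N / ZETA2)

def tc_3d(N):
    """k_B T_c/(hbar*omega) from Eq. (1.11) at mu = 0: N = (k_B T_c/hbar w)^3 zeta(3)."""
    return (N / ZETA3) ** (1.0 / 3.0)

for N in (10**3, 10**4, 10**5):
    print(f"N = {N:>6}:  2D {tc_2d(N):8.2f}   3D {tc_3d(N):6.2f}")
```

Quadrupling N doubles the 2D estimate, whereas the 3D estimate needs an eightfold increase to double.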

The quest for Bose-Einstein condensation in hydrogen at MIT—which, at one point or another,

involved Cornell, Hulet, Ketterle, and Wieman [3]—continued to be beset by difficulties, this time

at the evaporative-cooling stage, but eventually came to a happy conclusion in 1998 [25]. We

cannot help but close this section with Kleppner’s account [26] of the night when the first hydrogen

condensate was created:

Late one night last June, a phone call from the lab implored me to come quickly. I had

a pretty good idea of what was up because BEC in hydrogen had seemed imminent for

more than a week. As I drove in the deep night down Belmont Hill toward Cambridge,

still dopey with sleep, the blackness of the sky suddenly gave way to a golden glow. I was

not surprised because I had a premonition that the heavens would glow when BEC first

occurred in hydrogen. Abruptly, streamers of Bose-Einstein condensates shot across the

sky, shining with the deep red of rubidium and brilliant yellow of sodium. Small balls of

lithium condensates flared and imploded with a brilliant red pop. Stripes of interference

fringes criss-crossed the zenith; vortices grew and disappeared; blimp-shaped condensates

drifted by, swaying in enormous arabesques. The spectacle was exhilarating but totally

baffling until I realized what was happening: The first Bose-Einstein condensates were

welcoming hydrogen into the family!

1.3 This thesis

We have just seen that, while Bose-Einstein condensation does not occur in a two-dimensional ideal

homogeneous gas, the introduction of a trap changes the character of the system and allows the

transition to happen. However, when one restores the interactions between atoms their ability to

condense is lost once again, at least in the thermodynamic limit; this result, though rigorously proved

(by P. C. Hohenberg in 1967 [27], and adapted to the trapped case by W. J. Mullin in 1997 [28]),

continues to be somewhat of a mystery, since every relevant limiting case (not just the ideal gas)

seems to suggest otherwise: the equations of many-body theory consistently predict the existence of


BEC long-range order at zero temperature; for small numbers of particles, both experiments [29,30]

and Monte Carlo simulations [31–33] show results consistent with the presence of BEC.

On the other hand, these results may be due to other processes (or combinations thereof) that

have been predicted to happen in other two-dimensional systems. The Kosterlitz-Thouless transition [34], for example, has been proved to take place [35] in two-dimensional systems that are

free in one direction and harmonically confined in the other. There is the possibility of a transition

into a “smeared” BEC [36–38], in which a band of states becomes populated in such a way that

their total population is macroscopic. Finally, there is the possibility of a quasicondensation: the

presence of coherence over a region that, though finite, might still be larger than that prescribed by

the characteristic length of the system [39–41].

In this dissertation we will look into a few different questions regarding the existence of Bose-Einstein condensation in two-dimensional traps. First of all, is it possible to find an approximation

scheme that predicts the existence of a BEC in a finite system? If that is the case, what will this

condensed system look like? Moreover, is this condensation into a single state? In other words, how

large is the population of the condensate (that is, the ground state of the system) when compared to

those of the low-lying states? Finally, the standard way of creating a two-dimensional condensate in

the laboratory [30] consists of taking a three-dimensional system and squeezing it in one direction,

creating in effect a pancake-shaped trap. In that case, how well can the resulting quasi-2D condensate

be described by our two-dimensional scheme? Do the density profiles match? Are the condensate

fractions consistent? How does the ground-state population compare, once again, to those of the

excited states?

Our scope is narrow: we will restrict ourselves to the depiction and analysis of density profiles,

the calculation of condensate fractions, and the comparison of ground-state occupations to those

of a few excited states. Except in the case of the ideal gas, we will avoid the transition itself,

mainly because most of the methods we use are expected to break down—and indeed do—before

the temperature reaches its critical value. We will not consider the dynamics of the system at all,

though experiments are going in that direction. In particular, and being aware of the enormity of

our omission, we will shy away from discussing superfluidity; the Kosterlitz-Thouless transition will

receive little more than a passing mention. The gas will always be assumed to be at temperatures

so low that it is at least partially condensed. It will also be invariably assumed to be in equilibrium;

in particular, whenever we speak of raising or lowering the temperature of a system, we will assume

that this is done slowly enough so that equilibrium is not disturbed. (And even then we leave aside

the problem of fluctuations about equilibrium values.)


We have tried to be as thorough as possible within this restricted range, and to make our

presentation self-contained. Though we have tried to relegate the goriest details to the appendices,

the main text is still full of mathematics. We have striven to fill in the steps that, though taken for

granted in research papers, are far from trivial. The reader will also note that our restricted subject

in fact ramifies into a maze of subdivisions and special cases; moreover, these subtopics can be and

have been addressed using one or more—and even combinations—of the methods we present.

As we said above, the systems under study are finite, dilute, harmonically trapped Bose-Einstein

gases below their transition temperatures. They will be either ideal or interacting, and either at

absolute zero or at finite temperature. The traps that confine them will be of three types: three-dimensional and isotropic, two-dimensional and isotropic, or three-dimensional and isotropic in the

x- and y-directions, with a variable confinement along the z-direction always greater than those

along the other two. (Table 1.1 contains a partial listing of the systems that

we have studied, the methods we have used to study them, and the results we have obtained.)

We begin by studying the ideal gas in Chapter 2. Though hardly a realistic system, the ideal

gas has been an important reference and starting point from the very beginning. For our purposes,

the ideal trapped gas is important because it can be treated exactly at every temperature, for any

(finite) number of atoms, and in traps of any dimensionality or degree of anisotropy. This exact

method will allow us to look at the transition in some detail, to define and exhibit the quantities

that we will be calculating later, and to introduce a semiclassical approximation that will recur,

with similar successes and limitations, in the rest of the thesis; we do note that this semiclassical

approximation will be used to describe only the noncondensate; the condensate itself will receive the

same fully quantum-mechanical treatment as in the exact theory. We will display various results

that will enable us to get a feel for the temperature scales and occupation numbers involved and will

acquaint us with the typical shapes of the density profiles and of the wavefunctions that characterize

the various states in which the atoms can be.

In Chapter 3 we begin our study of interacting gases using mean-field theory. We develop

the standard Gross-Pitaevskiı̆ theory (also known as the Hartree-Fock-Bogoliubov equations) in

as general and detailed a way as we can; immediately afterwards we go on to introduce diverse

approximations of increasing crudity, settling eventually upon, and extracting results from, two of

the simplest:

While it is perhaps the least sophisticated approach to the study of BEC, being easier to solve than even the ideal gas, the zero-temperature Thomas-Fermi limit nonetheless yields predictions remarkably close to reality and is a standard tool for fitting experimental data. Though the assumptions that lead to it lose validity when the system becomes very anisotropic, it is still possible to slightly modify the model in order to accommodate the necessary changes, and as a result we get an important connection between a squeezed 3D gas and a 2D gas.

Table 1.1. Summary of methods used and systems studied in this thesis. Each entry gives the outcome for the 3D / 2D / compressed 3D trap, in that order.

Ideal gas
  ◦ Exact solution: Works always / Works always / Works always
  ◦ Semiclassical approx.: Fails at high T / Fails at high T / Fails at high T
Mean-field theory
  ◦ T = 0
    ◦ Gross-Pitaevskiı̆ Eq.: Works always / Works always / Not tested enough
    ◦ Thomas-Fermi limit: Works always / Works always / Fails at high λ¹ but can be adapted for that case
  ◦ T ≠ 0
    ◦ HFB + GP²: Yields results similar to those of the HF approximation or else fails / Always fails / Not tested enough
    ◦ HF + GP³: Fails at high T / Fails at high T / Not tested enough
    ◦ HFB + TF⁴: Not tested enough / Always fails / Not tested enough
    ◦ Thermodynamic limit
      ◦ HF + TF⁵: Works where the HF approximation works / Works where the HF approximation works / Not tested enough
    ◦ Uncondensed solution: Always fails / Works always but has a high free energy / Not expected to work
PIMC simulation
  ◦ Ideal gas: Works always / Works always / Works always
  ◦ Interacting gas: Works always / Works but disagrees with the compressed-3D results / Described well at high λ by the 2D HF approximation

¹ Compression ratio or anisotropy parameter, defined by $\lambda \equiv \omega_z/\omega$, where $\omega \equiv \omega_x = \omega_y$.
² Semiclassical Hartree-Fock-Bogoliubov approximation for the thermal cloud combined with the Gross-Pitaevskiı̆ equation for the condensate.
³ Semiclassical Hartree-Fock approximation for the thermal cloud combined with the Gross-Pitaevskiı̆ equation for the condensate.
⁴ Semiclassical Hartree-Fock-Bogoliubov approximation for the thermal cloud combined with the Thomas-Fermi limit for the condensate.
⁵ Semiclassical Hartree-Fock approximation for the thermal cloud combined with the Thomas-Fermi limit for the condensate.

The Gross-Pitaevskiı̆ theory models three-dimensional interactions by considering two-body collisions, and the relevant parameter that describes them, the s-wave scattering length, is an experimentally measurable quantity. The two-dimensional version of the theory is quite different, and,

even if we try to model 2D collisions using 3D methods, we still have to deal with the fact that the

corresponding interaction parameter has different dimensions, and, consequently, cannot be readily

expressed in terms of the standard three-dimensional coupling constant. Still, we do not expect

the interactions in the two-dimensional gas to depend too strongly on parameters other than the

scattering length and, when the 2D system has been created by compressing a three-dimensional trap in one direction, the ratio of the characteristic length in that direction to those in the others. The Thomas-Fermi limit provides that missing link.

The other simplified scheme will be our main workhorse when we turn to the study of finite-temperature systems in the rest of the thesis: this method combines an exact description of the

condensate by means of the Gross-Pitaevskiı̆ equation (exact in the sense that it is identical to that

prescribed by more-rigorous theories) with a semiclassical, Hartree-Fock treatment of the noncondensate.

After presenting all the theory, we proceed to discuss the numerical methods we used to solve

the Hartree-Fock equations and show some of the results we obtained. We initially study one of

the standard systems in the literature: a 10⁴-atom 3D isotropic gas with ⁸⁷Rb parameters. This

will give us the chance to display the condensate fraction, the density, and the chemical potential of

an interacting gas; in particular, we will verify that interactions affect the transition temperature,

deplete the condensate, and cause a noticeable increase in the size of the system. This study will

also allow us to see some limitations of the semiclassical approximation—notably its failure at high

temperatures. After that, we go back to the 2D gas: repeating what we did in Chapter 2 for the ideal

case, we will set up the full (off-diagonal) one-body density matrix in the semiclassical Hartree-Fock approximation and diagonalize it; the resulting eigenvalues and eigenvectors will show us the effects of interactions on the ground- and excited-state populations of the system and on the shapes of the

corresponding wavefunctions.

The study of the strictly two-dimensional interacting trapped gas is taken up in more detail in

Chapter 4. At that point we will be confronted with another interesting fact: the mean-field equations derived and used in Chapter 3 can also be solved for a 2D interacting finite trapped system


without having to invoke the presence of a condensate [42]. The approximation scheme that allows

these “uncondensed” solutions is in principle more rigorous than the one we have been using, since,

while still semiclassical, it does not make use of the Hartree-Fock approximation. When we try to

solve this model in the presence of a condensate, we encounter phononlike quasiparticles at the low

end of the energy spectrum; on the other hand, if we momentarily consider high temperatures and

artificially remove the condensate, the phonons disappear from the spectrum—and uncondensed

solutions can be found all the way down to T = 0. This would imply that phonons destroy the

long-range order of the system and prevent it from condensing, in agreement with previous explanations [43]. However, these uncondensed solutions have consistency problems of their own (in

particular, they do not reduce to the correct limit as T → 0) and are found to have a higher free

energy than the Hartree-Fock condensed ones. This renews our faith in the validity of the Hartree-Fock solutions, along with the infrared energy cutoff they impose, and encourages us to put them

through one last test.

Chapter 5 looks at the shape and condensate fraction of a quasi-2D interacting system created by

deforming a three-dimensional trap into the shape of a pancake. Our aim is to see how well the surface

density profile and condensate fraction of that squeezed system can be described using the density

profile and condensate fraction predicted for a pure 2D gas by the Hartree-Fock approximation. As

a guide, and in the absence of experimental results, we will introduce and use another, different,

method of analysis. Path-integral Monte Carlo simulations [44] are, for interacting gases, the closest

we have to an exact method like the one we use in Chapter 2 to treat the ideal gas [45]; we will spend

a good fraction of Chapter 5 justifying this last statement, explaining the method, and describing

its implementation for the specific case of a trapped Bose gas. After checking the correctness of our

simulations by reproducing results obtained in earlier chapters, we will proceed to display the results

we obtained for squeezed interacting systems and compare them to our mean-field predictions.

Chapter 6 will wrap up our considerations and suggest some directions for future work.


CHAPTER 2

THE IDEAL TRAPPED BOSE GAS

The weakly interacting Bose gas can be treated using the mean-field

approximation, though at the low densities likely to be of experimental

interest, the corrections are not expected to be important.

—V. Bagnato and D. Kleppner [24]

2.1 Introduction

As it turns out, corrections due to interactions are much more important than Bagnato and Kleppner

expected. When interpreting their data, experimentalists use the zero-temperature Thomas-Fermi

limit (to be discussed later), not the ideal gas, as a first approximation. It has even been argued that,

even though the phenomenon was discovered in the ideal gas, the very existence of a condensation is a

consequence of interactions.1 There are, however, many reasons why the ideal gas is worth studying,

and they are not restricted to its excellence as a pedagogical tool. The condensates created in the

laboratory occur in systems that are both decidedly finite and very inhomogeneous; the ideal gas

can be adapted to incorporate these nontrivial effects, many of which carry over to the interacting

case without modification. Moreover, the noninteracting gas is becoming less of an idealization with

every passing day: recent work in the study of Feshbach resonances has enabled experimentalists

to tune the strength of interatomic interactions, and the possibility of creating an essentially ideal

trapped gas is becoming progressively plausible.

We begin the chapter by introducing the one-body density matrix as the quantity that includes

all the information that can be gleaned about a finite system of trapped noninteracting bosons.

After discussing some exact quantum results where the inhomogeneity and finite size are already

present, we introduce a semiclassical approximation that enables us to set a temperature scale for the system and that will reappear in a more sophisticated form when we treat the interacting gas in the following chapters. This semiclassical approximation does not include the condensate, which has to be added by hand; this limitation will provide us with a first opportunity to perform a self-consistent calculation.

1 Consider for example the following remarks by P. Nozières [38] (the emphasis is his): “[D]o the condensate particles accumulate in a single state? Why don’t they share between several states that are degenerate, or at least so close in energy that it makes no difference in the thermodynamic limit? The answer is non-trivial: it is the exchange interaction energy that makes condensate fragmentation costly. Genuine Bose-Einstein condensation is not an ideal gas effect: it implies interacting particles!”

By explicitly diagonalizing the one-body density matrix of a gas in an isotropic 2D trap we

can obtain the populations of the ground state of the system and of its first few excited states.

We perform the diagonalization for distinguishable particles and for bosons and find that in the

distinguishable case the ground state is not preferentially populated, while in the Bose case the

occupation of the ground state is identical to that found by the more direct method. As we would

expect, the eigenvectors for both cases are the same and correspond to the eigenfunctions of the

harmonic oscillator.

We also squeeze a three-dimensional system by increasing one of the trapping frequencies; this

contributes to an enhancement of the density at the center of the trap and thus encourages the

system to condense. Indeed, we will see that we can take a system at a temperature above the

transition temperature and cause it to condense merely by confining it more tightly.

2.2 Exact results for the ideal gas

We start by studying a system of N distinguishable noninteracting atoms, each of mass m, trapped

by a σ-dimensional isotropic harmonic-oscillator potential characterized by an angular frequency ω

(we will consider anisotropic traps in later sections). Since there are no interactions, each atom moves

independently of the others, and we can consequently visualize the ensemble as being composed of

N one-body systems. Let us restrict ourselves momentarily to one of these. In an isotropic trap,

the motion of a single atom is described by the one-body Hamiltonian

$$H_1 = \frac{p^2}{2m} + \frac{1}{2}m\omega^2 r^2, \tag{2.1}$$

where p and r are the momentum and position operators. The harmonic-oscillator potential sets the

length and energy scales of the system, and it is in terms of these that we will henceforth express

all physical quantities. As is well known [46], a single quantum-mechanical point mass confined

by a one-dimensional harmonic trap has a minimum energy, given by ħω/2, that corresponds to a ground state of Gaussian shape characterized by a length $x_0$ such that $x_0^2 = \hbar/m\omega$. Introducing dimensionless coördinates $x = r/x_0$ and momenta $\kappa = x_0 p/\hbar$, we can rewrite (2.1) as

$$H_1 \equiv \tfrac{1}{2}\hbar\omega\,\tilde H_1 = \tfrac{1}{2}\hbar\omega\,(\kappa^2 + x^2), \tag{2.2}$$

whose eigenenergies in σ dimensions are given in our dimensionless units by

$$\epsilon_{n_1\cdots n_\sigma} \equiv \frac{2}{\hbar\omega}E_{n_1\cdots n_\sigma} = 2n_1 + \cdots + 2n_\sigma + \sigma, \tag{2.3}$$

where $n_1, \ldots, n_\sigma$ are nonnegative integers. (This is for Cartesian coördinates; the eigenfunctions and eigenvalues in other coördinate systems are displayed in Appendix A.)

When the system is at a temperature T, quantum statistics postulates that all relevant information is contained in the canonical one-body density matrix, $\rho_1 = e^{-\beta H_1}/Z_1$, where $\beta \equiv 1/k_B T$, $k_B$ is Boltzmann’s constant, and the one-body partition function $Z_1 \equiv \operatorname{Tr} e^{-\beta H_1}$ is a normalization factor that ensures $\operatorname{Tr}\rho_1 = 1$. When we evaluate the diagonal elements of the (unnormalized) one-body density matrix in real space we obtain

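$$\langle x|e^{-\tilde\beta\tilde H_1}|x\rangle = \frac{1}{(2\pi)^{\sigma/2}}\operatorname{csch}^{\sigma/2}(2\tilde\beta)\; e^{-x^2\tanh\tilde\beta}, \tag{2.4}$$

where we have introduced the dimensionless inverse temperature $\tilde\beta \equiv t^{-1} \equiv \tfrac{1}{2}\hbar\omega\beta$. This expression,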

first found by F. Bloch, can be derived by explicitly summing over Hermite polynomials [47], by

using Feynman path integrals, by solving the Bloch equation $\tilde H_1\rho_1 = -\partial\rho_1/\partial\tilde\beta$ [15], or by purely

algebraic methods [48]. Appendix A sketches a proof for σ = 2 using Laguerre polynomials.

The one-body partition function, $Z_1 = (2\sinh\tilde\beta)^{-\sigma}$, can be found by integrating (2.4) with respect to x or by evaluating the trace in its energy eigenbasis. If we divide Eq. (2.4) by $Z_1$ we obtain the normalized diagonal density matrix,

$$\tilde n(x) = \frac{1}{\pi^{\sigma/2}}\tanh^{\sigma/2}\tilde\beta\; e^{-x^2\tanh\tilde\beta}; \tag{2.5}$$

we shall henceforth use the notation $\tilde n \equiv x_0^\sigma n$ to refer to densities in real space.
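Both routes to $Z_1$ mentioned above are easy to check numerically. The Python sketch below uses function names of our own choosing. It integrates the σ = 1 form of (2.4) with a midpoint rule. It also sums the Boltzmann factors of the one-dimensional levels 2n + 1 from (2.3). Both results are compared with $(2\sinh\tilde\beta)^{-\sigma}$. Because the isotropic oscillator is separable, the σ-dimensional value is taken to be the one-dimensional one raised to the power σ.

```python
import math

def bloch_diag_1d(x, bt):
    """Eq. (2.4) for sigma = 1: <x| exp(-bt*H1) |x> in oscillator units."""
    return math.exp(-x * x * math.tanh(bt)) / math.sqrt(2.0 * math.pi * math.sinh(2.0 * bt))

def z1_by_integration(bt, sigma=1, n=20000):
    # Midpoint rule over many Gaussian widths; the sigma-dimensional
    # integral factorizes into sigma identical 1D integrals.
    half_width = 12.0 / math.sqrt(math.tanh(bt))
    h = 2.0 * half_width / n
    one_d = h * math.fsum(bloch_diag_1d(-half_width + (k + 0.5) * h, bt) for k in range(n))
    return one_d ** sigma

def z1_by_levels(bt, sigma=1, n_max=2000):
    # Trace in the energy eigenbasis, using the 1D levels eps_n = 2n + 1.
    one_d = math.fsum(math.exp(-bt * (2 * k + 1)) for k in range(n_max + 1))
    return one_d ** sigma

def z1_closed(bt, sigma=1):
    return (2.0 * math.sinh(bt)) ** (-sigma)

for bt, sigma in ((0.1, 2), (1.0, 3)):
    print(bt, sigma, z1_by_integration(bt, sigma), z1_by_levels(bt, sigma), z1_closed(bt, sigma))
```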

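When we have N atoms, the Hamiltonian becomes $\tilde H = \sum_{j=1}^{N}\tilde H_{1,j}$, and the system will be described by a density matrix

$$\rho = \prod_{j=1}^{N}\frac{e^{-\tilde\beta\tilde H_{1,j}}}{Z_1} = \frac{1}{Z_N}\, e^{-\tilde\beta\sum_j\tilde H_{1,j}}, \tag{2.6}$$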

where we used the fact that, in the absence of interactions, $Z_N = (Z_1)^N$. The corresponding reduced

one-body density matrix is defined by [49]

$$\rho_1 = N\operatorname{Tr}_{2:N}\rho = N\,\frac{e^{-\tilde\beta\tilde H_{1,1}}}{Z_1} \tag{2.7}$$

and is identical to (2.5) but normalized to N:

$$\tilde n(x) = \frac{N}{\pi^{\sigma/2}}\tanh^{\sigma/2}\tilde\beta\; e^{-x^2\tanh\tilde\beta}. \tag{2.8}$$

Up to now, the atoms, though identical, have been taken to be distinguishable: we have used

labels to tag them and even selected the “first” one when we calculated the reduced one-body

density matrix. When the system is composed of indistinguishable bosons, the situation is much

more involved (see Appendix B and Ref. 50 for some details), but the result is transparent and

intuitive. If we invoke the grand canonical ensemble, it is possible to show that the reduced one-body density matrix adopts a form that mimics the Bose-Einstein distribution:2

$$\rho_1 \equiv \frac{1}{e^{\tilde\beta(\tilde H_1 - \tilde\mu)} - 1} = \sum_{\ell=1}^{\infty} e^{-\ell\tilde\beta(\tilde H_1 - \tilde\mu)}; \tag{2.9}$$

the chemical potential µ̃ is determined by the subsidiary condition $N = \operatorname{Tr}\rho_1$. The fact that we can express Eq. (2.9) as a sum over ℓ will be of enormous convenience throughout this work, but its

importance reaches far beyond; as Appendix B shows, it is a manifestation of Bose symmetry at its

deepest level, the closest we will get to being able to define what we mean by bosons. The real-space

diagonal density now becomes

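$$\langle x|\rho_1|x\rangle \equiv \tilde n(x) = \frac{1}{(2\pi)^{\sigma/2}}\sum_{\ell=1}^{\infty} e^{\ell\tilde\beta\tilde\mu}\operatorname{csch}^{\sigma/2}(2\ell\tilde\beta)\, e^{-x^2\tanh\ell\tilde\beta} = \frac{1}{\pi^{\sigma/2}}\sum_{\ell=1}^{\infty}\frac{e^{\ell\tilde\beta(\tilde\mu-\sigma)}}{(1 - e^{-4\ell\tilde\beta})^{\sigma/2}}\, e^{-x^2\tanh\ell\tilde\beta}. \tag{2.10}$$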

It is worth emphasizing that it is the difference µ̃ − σ, and not µ̃ by itself, that tends to zero below

the transition in the trapped case. This can be clarified further by noticing that, if we were to add

another particle to the system, it would have to have an energy of at least ħωσ/2, the ground-state energy of the oscillator, and the energy of the system would increase by that amount.

2 Note that we use exactly the same notation for distinguishable and Bose-Einstein density matrices. In the few instances where this could lead to confusion, in Chapter 5 especially, we will use the suffix B to denote quantities that obey Bose statistics.

We can readily integrate any of the expressions above with respect to x to obtain [51,52]

$$N = \frac{1}{2^\sigma}\sum_{\ell=1}^{\infty}\frac{e^{\ell\tilde\beta\tilde\mu}}{\sinh^\sigma\ell\tilde\beta} = \sum_{\ell=1}^{\infty}\frac{e^{\ell\tilde\beta(\tilde\mu-\sigma)}}{(1 - e^{-2\ell\tilde\beta})^\sigma}. \tag{2.11}$$

The first expression is especially interesting because it gives us the total particle number in terms

of the canonical one-body partition function; proofs of this result appear in Appendix B and in

Ref. 53. Equations (2.10) and (2.11) provide a complete description of the system, and, as Fig. 2.1

illustrates, they predict the occurrence of a condensation below a certain temperature.
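The two forms of (2.11) can also be checked against the more familiar sum over energy levels. That sum is $N = \sum_n d_\sigma(n)/(e^{\tilde\beta(2n+\sigma-\tilde\mu)} - 1)$, where $d_\sigma(n) = \binom{n+\sigma-1}{\sigma-1}$ is the number of ways to write $n = n_1 + \cdots + n_\sigma$ in (2.3). Expanding each Bose factor as in (2.9) and summing the generating function $\sum_n d_\sigma(n) q^n = (1-q)^{-\sigma}$ turns the level sum into the ℓ-sums. The Python sketch below evaluates all three for µ̃ < σ. The helper names are hypothetical, and the truncation limits are simply chosen large enough for the test values.

```python
import math

def n_sinh_form(mu, bt, sigma, ell_max=400):
    """First form of Eq. (2.11); fine for moderate l*bt (very large l*bt would overflow)."""
    return math.fsum(math.exp(l * bt * mu) / (2.0 * math.sinh(l * bt)) ** sigma
                     for l in range(1, ell_max + 1))

def n_exp_form(mu, bt, sigma, ell_max=400):
    """Second form of Eq. (2.11); written with mu - sigma, so no overflow for mu < sigma."""
    return math.fsum(math.exp(l * bt * (mu - sigma)) / (-math.expm1(-2.0 * l * bt)) ** sigma
                     for l in range(1, ell_max + 1))

def n_level_sum(mu, bt, sigma, n_max=400):
    """Bose occupations of the levels 2n + sigma, weighted by their degeneracy."""
    return math.fsum(math.comb(n + sigma - 1, sigma - 1) / math.expm1(bt * (2 * n + sigma - mu))
                     for n in range(n_max + 1))

mu, bt, sigma = 1.5, 0.3, 2
print(n_sinh_form(mu, bt, sigma), n_exp_form(mu, bt, sigma), n_level_sum(mu, bt, sigma))
```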

The Bose-Einstein condensate is a pure (albeit macroscopic) system that can be described by

a single wavefunction. In the trapped ideal case, this wavefunction is the purely real ground state

of the harmonic oscillator. The condensate density is the square of this wavefunction, and as a

consequence the condensate density profile is the same at all temperatures:

$$\tilde n_0 = \frac{N_0}{\pi^{\sigma/2}}\, e^{-x^2}; \tag{2.12}$$

only its normalization constant, which corresponds to the condensate number, varies. To calculate the condensate number we plug the ground-state energy $\epsilon_0 = \sigma$ into the Bose-Einstein distribution function:

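$$N_0 = \frac{1}{e^{\tilde\beta(\epsilon_0 - \tilde\mu)} - 1} = \frac{1}{e^{\tilde\beta(\sigma - \tilde\mu)} - 1} = \sum_{\ell=1}^{\infty} e^{\ell\tilde\beta(\tilde\mu - \sigma)}. \tag{2.13}$$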

We can then use (2.12) and (2.13) to resolve the total density (2.10) into its condensate and noncondensate components at any temperature and for any number of atoms. Figures 2.2 and 2.3

display the rapid growth of the condensate density with decreasing temperature and show that the

thermal density attains a maximum at a point away from the origin; we will later see that this

“delocalization” effect is enhanced by interactions.
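Solving (2.11) for µ̃ and then evaluating (2.13) is a one-dimensional root-finding problem. The Python sketch below shows one way to do it; it is not necessarily the procedure behind Fig. 2.4. It writes y = β̃(σ − µ̃) > 0 and uses the closed form of (2.13) for N₀. It then subtracts the series (2.13) term by term from the second form of (2.11), so the remaining noncondensate sum converges like $e^{-2\ell\tilde\beta}$ even when µ̃ is extremely close to σ. Since N decreases strictly with y, plain bisection in log y is enough. The function names, the truncation rule, and the bracketing interval are our own assumptions.

```python
import math
import numpy as np

def noncondensate_number(y, bt, sigma):
    """N - N0 from Eqs. (2.11) and (2.13), with y = bt*(sigma - mu) > 0 and bt = beta-tilde.

    Term by term: sum_l e^{-l y} [ (1 - e^{-2 l bt})^{-sigma} - 1 ].
    """
    ell_max = int(math.ceil(60.0 / (2.0 * bt + y))) + 10   # neglected tail below ~e^{-60}
    ell = np.arange(1, ell_max + 1, dtype=float)
    bracket = (-np.expm1(-2.0 * ell * bt)) ** (-sigma) - 1.0
    return float(np.sum(np.exp(-ell * y) * bracket))

def solve_ideal_gas(N, t, sigma=2, iterations=200):
    """Return (mu_tilde, N0/N) for N ideal bosons at t = 1/bt = 2 k_B T/(hbar*omega)."""
    bt = 1.0 / t

    def excess(log_y):
        y = math.exp(log_y)
        return 1.0 / math.expm1(y) + noncondensate_number(y, bt, sigma) - N

    lo, hi = math.log(1e-14), math.log(60.0)   # excess(lo) > 0 > excess(hi) for N >= 1
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if excess(mid) > 0.0:                  # too many atoms: raise y, i.e. lower mu
            lo = mid
        else:
            hi = mid
    y = math.exp(0.5 * (lo + hi))
    return sigma - y / bt, 1.0 / (N * math.expm1(y))

# 2D example: N = 1000 at half the estimate k_B T_c = hbar*omega*sqrt(6N/pi^2)
N = 1000
t_c = 2.0 * math.sqrt(6.0 * N) / math.pi
mu, frac = solve_ideal_gas(N, 0.5 * t_c)
print(mu, frac)
```

The same µ̃ can be inserted into (2.10) and (2.12) to split the density into its two components.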

The Bose-Einstein transition is characterized by abrupt changes in various quantities. Figure 2.4 shows the temperature dependence of the condensate fraction for different values of N. The onset of condensation, as one would expect, becomes more pronounced as N grows. It is also evident that the system is completely condensed at zero temperature. Indeed, in the limit $\tilde\beta \to \infty$ we can neglect the exponential in the denominator of Eq. (2.11) and once again obtain the sum in (2.13), so that $N_0 = N$. This holds provided we assume $\lim_{\tilde\beta\to\infty}\tilde\beta(\tilde\mu - \sigma)$ to be constant, which confirms that $\tilde\mu \approx \sigma$ at low temperatures.

The chemical potential stays essentially fixed at this value until the transition temperature, at which

point it experiences a sharp decrease and quickly becomes negative (see Fig. 2.5). The location of the thermal maximum also changes abruptly at the transition: as Fig. 2.6 illustrates, the “hump” is suddenly shifted towards the origin, signalling that a sizable fraction of the particles have been displaced to the most localized state available.

[Figure 2.1: top panel, ñ(x)/N versus x and T/Tc (%); bottom panel, N(x)/N versus x and T/Tc (%).]

Figure 2.1. Density and number density of a condensed Bose gas. Throughout this work we will depict the density of a Bose system below the transition temperature using one of these two modes of description. In this case, we have a two-dimensional ideal gas of N = 1000 atoms confined by an isotropic trap. The plot at top shows the actual density of the gas, ñ(x), that has appeared throughout our discussion; the bottom panel shows the “number density” N(x) defined by $N = \int_0^\infty dx\, N(x) = \int d^\sigma x\, \tilde n(x)$. In two dimensions, $N(x) = 2\pi x\,\tilde n(x)$; this is the quantity yielded directly by the Monte Carlo simulations of Chapter 5. In each case, the total density (solid line) has been resolved into its condensed (dotted) and thermal (dashed) components and has been divided by N.

[Figure 2.2: ñ(x)/N versus x and T/Tc (%).]

Figure 2.2. The growing condensate. This “closeup” view of Fig. 2.1 shows (when read from right to left) the fast growth of the condensate in the region just below the critical temperature.

[Figure 2.3: four panels of ñ(x)/N versus x.]

Figure 2.3. Front view of Fig. 2.2. Note that the noncondensate (dash-dotted line) has a much larger spatial extent than the condensate (dashed line). The delocalization hump is clearly visible.

[Figure 2.4: N0/N versus T/Tc and N.]

Figure 2.4. Condensate fraction of a two-dimensional ideal trapped Bose gas. The solid lines are the exact results obtained from solving Eq. (2.11) for µ̃ and then using (2.13) to extract $N_0$; the dashed lines show the infinite-N limit given by Eq. (2.17) below. We can see that already for $N = 10^4$ the infinite-N limit is quite accurate.

2.3 The semiclassical approximation

As written, the density matrix (2.10) gives an exact description of a finite trapped Bose gas of any

dimensionality at any temperature. It is difficult, however, to extract relevant quantities from it

in analytic form—the transition temperature is a case in point—and in order to do that we will

introduce a semiclassical approximation that we will also be using for the interacting gas.

The semiclassical approximation assumes that the temperature is high when compared to the spacing between energy levels (which is microscopically small for a standard trap); if $k_B T \gg \hbar\omega$, then $\tilde\beta \equiv \hbar\omega/2k_B T$ can be treated as a small parameter, and this allows us to expand the exponential in the second expression of Eq. (2.11). However, since ℓ is unbounded, by performing that expansion we are neglecting the sizable contribution of the large-ℓ terms to the sum; we will soon see that these


[Figure: µ̃ versus T/Tc.]

Figure 2.5. Chemical potential of a two-dimensional ideal trapped Bose gas. The values of µ̃ shown correspond to N = 100, 1000, and 10000 (solid, dashed, and dotted lines, respectively), as in Fig. 2.4.

[Figure: xmax versus T/Tc (%).]

Figure 2.6. Position of the noncondensate maximum, located at the hump shown in Fig. 2.3. In this case we have N = 5 × 10⁴, 10⁵, and 1.5 × 10⁵ (dash-dotted, dashed, and solid lines, respectively). The inward shift caused by the temperature drop is more pronounced for higher N (compare with the behavior of the chemical potential in Fig. 2.5).


[Figure: N0/N versus T/Tc.]

Figure 2.7. Condensate fraction of a two-dimensional ideal trapped Bose gas in the semiclassical approximation. The figure shows the exact result for N = 1000 (solid line) along with the infinite-N limit (2.17) (dotted line) and the first-order condensate fraction predicted by the self-consistent procedure sketched in (2.19) (dashed line). The last two are virtually indistinguishable for most temperatures and clearly overestimate the condensate. Also shown is the finite-N approximation (2.22) (dash-dotted line), which underestimates the condensate in 2D.

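correspond to N0, and thus it is necessary to insert the condensate number by hand [28]. The expansion then yields

$$
N \approx N_0 + \frac{1}{(2\tilde\beta)^{\sigma}} \sum_{\ell=1}^{\infty} \frac{e^{\ell\tilde\beta(\tilde\mu-\sigma)}}{\ell^{\sigma}\,(1-\sigma\ell\tilde\beta)}
\approx N_0 + \frac{1}{(2\tilde\beta)^{\sigma}} \left[ g_\sigma\!\left(e^{\tilde\beta(\tilde\mu-\sigma)}\right) + \tilde\beta\sigma\, g_{\sigma-1}\!\left(e^{\tilde\beta(\tilde\mu-\sigma)}\right) \right] \tag{2.14}
$$

for the total number of atoms; $g_\sigma(x) = \sum_{k=1}^{\infty} x^k/k^\sigma$ is a Bose-Einstein integral, already introduced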

in Chapter 1, whose definition and properties are summarized in Appendix A. Equation (2.14)

can also be obtained directly from the assumption that the harmonic-oscillator energy levels are so

closely spaced that they may be taken to form a continuum; the subsequent replacement of sums by

integrals helps elucidate the identification of the neglected terms with the condensate fraction [53,54],

exactly as in the homogeneous gas [14,15].
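Since the Bose-Einstein integrals $g_\sigma(z)$ recur throughout what follows, here is a minimal Python sketch of how one might evaluate them for $0 \le z \le 1$. The function name `bose_einstein_g`, the tolerance, and the cutoff `K = 1000` are illustrative choices, not part of the original work. For $z < 1$ the series is summed until a geometric bound on the remainder drops below the tolerance; at $z = 1$, where $g_\sigma(1) = \zeta(\sigma)$ converges slowly, the tail is estimated with the Euler-Maclaurin formula, and $\sigma \le 1$ is rejected because the series then diverges.

```python
import math

def bose_einstein_g(sigma, z, tol=1e-12):
    """Bose-Einstein integral g_sigma(z) = sum_{k>=1} z**k / k**sigma for 0 <= z <= 1."""
    if not 0.0 <= z <= 1.0:
        raise ValueError("z must lie in [0, 1]")
    if z == 1.0:
        if sigma <= 1:
            raise ValueError("g_sigma(1) = zeta(sigma) diverges for sigma <= 1")
        # zeta(sigma): explicit head plus an Euler-Maclaurin estimate of the tail k > K.
        K = 1000
        head = sum(k ** -sigma for k in range(1, K + 1))
        tail = (K ** (1 - sigma) / (sigma - 1)
                - 0.5 * K ** -sigma
                + sigma * K ** (-sigma - 1) / 12.0)
        return head + tail
    total, zk, k = 0.0, z, 1
    while True:
        term = zk / k ** sigma
        total += term
        # Each later term is at most z times the previous one, so the
        # remainder is bounded by term * z / (1 - z).
        if term * z / (1.0 - z) < tol:
            return total
        zk *= z
        k += 1

print(bose_einstein_g(2, 1.0), math.pi ** 2 / 6)   # g_2(1) = zeta(2)
print(bose_einstein_g(1, 0.5), math.log(2.0))      # g_1(z) = -log(1 - z)
```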

As we remarked right below Eq. (2.10) on page 17, the transition can be characterized by the

vanishing of the quantity µ̃ − σ; when that happens, we have


$$
N \approx N_0 + \frac{\zeta(\sigma)}{(2\tilde\beta)^{\sigma}} + \frac{\sigma\,\zeta(\sigma-1)}{2\,(2\tilde\beta)^{\sigma-1}}, \tag{2.15}
$$

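where ζ(x) is the Riemann zeta function, or, upon introducing the “critical” temperature defined by $t_c^{(\sigma)} \equiv 2\,(N/\zeta(\sigma))^{1/\sigma}$,

$$
\frac{N_0}{N} = 1 - \left(\frac{t}{t_c^{(\sigma)}}\right)^{\sigma} - \frac{\sigma N^{-1/\sigma}\,\zeta(\sigma-1)}{2\,(\zeta(\sigma))^{(\sigma-1)/\sigma}} \left(\frac{t}{t_c^{(\sigma)}}\right)^{\sigma-1}. \tag{2.16}
$$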
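To make the scaling in (2.16) concrete, the Python sketch below evaluates $t_c^{(\sigma)}$, the leading term $1 - (t/t_c^{(\sigma)})^\sigma$, and the full expression including the finite-size term. It is an illustration only; the function names are hypothetical. Because $\zeta(\sigma - 1) = \zeta(1)$ diverges when $\sigma = 2$, the finite-size term of (2.16) cannot be evaluated as written in two dimensions, so the sketch uses $\sigma = 3$ for that term and refuses $\sigma = 2$. The leading term is set to zero above $t_c^{(\sigma)}$, where the condensate vanishes.

```python
import math

# Riemann zeta values needed below; zeta(1) is absent because it diverges.
ZETA = {2: math.pi ** 2 / 6, 3: 1.2020569031595942}

def t_c(N, sigma):
    """Critical temperature t_c^(sigma) = 2 * (N / zeta(sigma))**(1/sigma)."""
    return 2.0 * (N / ZETA[sigma]) ** (1.0 / sigma)

def fraction_first_order(t, N, sigma):
    """Leading term of (2.16): N0/N = 1 - (t/t_c)**sigma, set to zero above t_c."""
    return max(0.0, 1.0 - (t / t_c(N, sigma)) ** sigma)

def fraction_with_finite_size(t, N, sigma):
    """Both terms of (2.16); requires a finite zeta(sigma - 1)."""
    if sigma - 1 not in ZETA:
        raise ValueError("zeta(sigma - 1) diverges or is not tabulated here")
    r = t / t_c(N, sigma)
    coeff = (sigma * N ** (-1.0 / sigma) * ZETA[sigma - 1]
             / (2.0 * ZETA[sigma] ** ((sigma - 1.0) / sigma)))
    return 1.0 - r ** sigma - coeff * r ** (sigma - 1)

N = 1000
print(t_c(N, 2))                                       # 2D critical temperature
print(fraction_first_order(0.5 * t_c(N, 2), N, 2))     # 0.75
print(fraction_first_order(0.5 * t_c(N, 3), N, 3),
      fraction_with_finite_size(0.5 * t_c(N, 3), N, 3))
```

In this 3D example the finite-size term lowers the condensate fraction below the leading-order value, and it shrinks as $N^{-1/\sigma}$.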

If we keep the expansion to first order, we obtain

$$
\frac{N_0}{N} = 1 - \left(\frac{t}{t_c^{(\sigma)}}\right)^{\sigma} = 1 - \left(\frac{T}{T_c^{(\sigma)}}\right)^{\sigma}, \tag{2.17}
$$

which justifies in hindsight our definition of the critical temperature. For $t > t_c^{(\sigma)}$, the condensate

number vanishes: the ground state has an occupation no different than that of any other state.

Below the critical temperature, the condensate fraction obeys a simple power law in this limit,

and increases with decreasing temperature until it includes every particle in the system. To find

the corresponding approximation for the density we expand the exponential in the denominator of

Eq. (2.10) and obtain

$$
\tilde n(x) \approx \tilde n_0(x) + \left(\frac{t}{4\pi}\right)^{\sigma/2} g_{\sigma/2}\!\left(e^{\tilde\beta(\tilde\mu-\sigma-x^2)}\right), \tag{2.18}
$$

where ñ0 is given once again by (2.12) but with N0 given by (2.17). By integrating both sides

of (2.18) over x we obtain the first two terms of Eq. (2.14); to this order, then, the number of

atoms is conserved and the approximation is consistent. As a result, Eqs. (2.18), (2.13), and (2.12)

combined provide in principle a complete specification of the system like that discussed on page 18.
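The statement that integrating (2.18) reproduces the first two terms of (2.14) can be checked numerically. The Python sketch below does so for the thermal part in two dimensions, where $g_{\sigma/2} = g_1(y) = -\log(1-y)$ has a closed form. It assumes $t = 1/\tilde\beta$, which is what the definition of $t_c^{(\sigma)}$ together with Eqs. (2.15) and (2.16) implies, and treats $z \equiv e^{\tilde\beta(\tilde\mu-\sigma)}$ as a free input; the values of t and z and all function names are hypothetical. The condensate term ñ0 is left out. The integral over $2\pi x\,dx$ should match $(t/2)^2 g_2(z) = g_2(z)/(2\tilde\beta)^2$.

```python
import math

def g1(y):
    """g_1(y) = -log(1 - y) for 0 <= y < 1."""
    return -math.log1p(-y)

def g2(z, tol=1e-14):
    """g_2(z) = sum_k z**k / k**2 for 0 <= z < 1."""
    total, zk, k = 0.0, z, 1
    while True:
        term = zk / (k * k)
        total += term
        if term * z / (1.0 - z) < tol:   # bound on the remaining terms
            return total
        zk *= z
        k += 1

def thermal_density_2d(x, t, z):
    """Thermal part of (2.18) for sigma = 2, assuming beta~ = 1/t:
    (t / 4 pi) * g_1(z * exp(-x**2 / t)), with z = exp(beta~ (mu~ - sigma))."""
    return t / (4.0 * math.pi) * g1(z * math.exp(-x * x / t))

def thermal_number_2d(t, z, steps=50000):
    """Integrate 2 pi x * thermal density over 0 < x < 12 sqrt(t) (midpoint rule)."""
    xmax = 12.0 * math.sqrt(t)
    h = xmax / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h
        total += 2.0 * math.pi * x * thermal_density_2d(x, t, z)
    return h * total

t, z = 20.0, 0.9   # illustrative values, not taken from the text
print(thermal_number_2d(t, z), (t / 2.0) ** 2 * g2(z))
```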

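The only unknown is the chemical potential, which we can calculate self-consistently: we initially take N0 = N, µ̃ = σ and loop

$$
\tilde\mu = t \log\frac{N_0}{N_0+1} + \sigma, \qquad
N_0 = N - \left(\frac{t}{2}\right)^{\sigma} g_\sigma\!\left(e^{\tilde\beta(\tilde\mu-\sigma)}\right) \tag{2.19}
$$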

until µ̃ and N0 stop changing. Some results of this procedure are shown in Figs. 2.7 and 2.8.
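A minimal Python sketch of the self-consistent procedure (2.19) for σ = 2 follows; it is an illustration under stated assumptions, not the program used for Figs. 2.7 and 2.8. Rather than iterating the loop literally, it assumes $t = 1/\tilde\beta$ (as implied by the definition of $t_c^{(\sigma)}$), so that the first line of (2.19) gives $e^{\tilde\beta(\tilde\mu-\sigma)} = N_0/(N_0+1)$. Substituting this into the second line leaves the single equation $N_0 + (t/2)^\sigma g_\sigma(N_0/(N_0+1)) = N$, whose left side increases monotonically with $N_0$, and which the sketch solves by bisection on $(0, N)$. Swapping bisection in for plain fixed-point iteration is our choice: starting from $N_0 = N$ above the transition, the literal loop produces a negative $N_0$, for which the logarithm is undefined. The function names are hypothetical.

```python
import math

def g_sigma(z, sigma, tol=1e-12):
    """Bose-Einstein integral g_sigma(z) = sum_k z**k / k**sigma for 0 <= z < 1."""
    total, zk, k = 0.0, z, 1
    while True:
        term = zk / k ** sigma
        total += term
        if term * z / (1.0 - z) < tol:   # bound on the remaining terms
            return total
        zk *= z
        k += 1

def solve_semiclassical(N, t, sigma=2, iters=50):
    """Return (N0, mu~) satisfying both lines of (2.19), assuming beta~ = 1/t.

    With beta~ t = 1 the first line gives exp(beta~ (mu~ - sigma)) = N0 / (N0 + 1),
    so the second line becomes F(N0) = N0 + (t/2)**sigma g_sigma(N0/(N0+1)) - N = 0.
    F increases with N0, F(0) = -N < 0 and F(N) > 0, so bisection on (0, N) converges.
    """
    A = (t / 2.0) ** sigma
    lo, hi = 0.0, float(N)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mid + A * g_sigma(mid / (mid + 1.0), sigma) > N:
            hi = mid
        else:
            lo = mid
    n0 = 0.5 * (lo + hi)
    mu = sigma + t * math.log(n0 / (n0 + 1.0))
    return n0, mu

N = 1000
t_c = 2.0 * math.sqrt(N / (math.pi ** 2 / 6))   # t_c^(2) = 2 (N / zeta(2))**(1/2)
for ratio in (0.5, 0.9, 1.2):
    n0, mu = solve_semiclassical(N, ratio * t_c)
    print(ratio, n0 / N, mu)
```

Below $t_c$ the resulting fraction sits slightly above the infinite-N value $1 - (t/t_c)^2$; above $t_c$ only a small residual ground-state occupation survives.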

The second term in (2.16) is a correction [28,55,56] that takes into account the finite size of the system and vanishes as N → ∞, in what is called the thermodynamic limit (TDL).³ The Riemann

³ It has been remarked [28,43,57] that, just as the true TDL of a homogeneous system is taken by simultaneously letting N → ∞ and V → ∞ so that the density remains finite, when taking


[Figure: panels showing ñ0(x)/N and N0(x)/N versus x.]