Robust Image Obfuscation for Privacy Protection in Web 2.0 Applications

Andreas Poller, Martin Steinebach and Huajian Liu

Fraunhofer Institute for Secure Information Technology, Darmstadt, Germany

ABSTRACT

We present two approaches to robust image obfuscation based on permutation of image regions and channel intensity modulation. The proposed concept of robust image obfuscation is a step towards end-to-end security in Web 2.0 applications. It helps to protect the privacy of users against threats caused by internet bots and web applications that extract biometric and other features from images for data-linkage purposes. The approaches described in this paper take into account that images uploaded to Web 2.0 applications undergo several transformations, such as scaling and JPEG compression, before the receiver downloads them. In contrast to existing approaches, our focus is on usability; therefore, the primary goal is not maximum security but an acceptable trade-off between security and resulting image quality.

1. MOTIVATION AND CHALLENGE

Images that users upload to Web 2.0 services (e.g., online social networks) raise many privacy questions. They are often snapshots of non-public figures in private settings and reveal sensitive private information about the depicted persons. Photos tell viewers where and when a certain event took place, who participated in particular activities, and which relationships exist among the persons. Moreover, some images may be offensive or embarrassing. As a consequence, users have to be careful about what they and their contacts share. To protect their privacy, users have to control the image distribution inside and among these Web 2.0 services, and they have to trust the service operators.

Current developments in the industry intensify the users’ privacy problem: biometric software is making its way into Web 2.0 applications, enabling new ways to link personal data. A first step is facial recognition, introduced, e.g., by the social network Facebook in June 2011.1 With facial recognition, a service provider can automatically link uploaded images with other personal information of the identified persons. Pioneers of Web 2.0 facial recognition software such as Polar Rose and face.com offered similar web services for social networks and other Web 2.0 platforms several years ago.

Facial recognition will spread into more and more applications. For instance, it will become part of augmented reality software on mobile devices, allowing device owners to instantly access personal data of persons captured with the device camera, even of unknown persons they meet in public places.2 In addition, the performance of biometric systems improves with the steadily increasing quality of captured digital images. These systems will also benefit from 3-D imaging in consumer electronics, which enables the software to extract new biometric features, e.g., from facial contours.

The concept of robust image obfuscation that we propose in this article helps to tackle these privacy issues. Designed for use in end-to-end security mechanisms, robust image obfuscation is, in our view, a key concept that allows users to take privacy protection into their own hands when distributing images through Web 2.0 services.

By an end-to-end security mechanism for images we mean that a sender obfuscates images with robust image

obfuscation, before uploading them to Web 2.0 services such as online social networks. With the aid of a key

distribution scheme, the sender defines who is able to de-obfuscate the image and thus belongs to the group

of intended receivers. The obfuscation prevents particular malicious image processing like the extraction of

Authors’ contact information:

Andreas Poller: andreas.poller@sit.fraunhofer.de

Martin Steinebach: martin.steinebach@sit.fraunhofer.de

Huajian Liu: huajian.liu@sit.fraunhofer.de

(a)

(b)

(c)

(d)

(e)

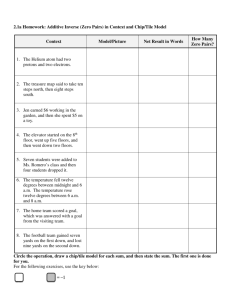

Figure 1: Robust image encryption of Li and Yu applied to JPEG: (a) original image, (b) encrypted image, (c)

decrypted image after applying JPEG compression with quality factor 90 (PSNR: 26 db), (d) with quality factor

50 (PSNR: 25 db), (e) scaling down to 90% of the original size (PSNR: 23 db)

biometric features or other misuse by Web 2.0 service providers and third parties. In this paper, we focus on the

challenges of the image obfuscation part. For key distribution, several approaches already exist, as we describe

in 2.

For Web 2.0 service providers, image obfuscation should be transparent: from a technical point of view, processing an obfuscated image must not differ from processing a non-obfuscated image. The obfuscation should not interfere with web application functions such as commenting on images or drawing notes in an overlay image.

On the other hand, we have to assume that an image undergoes considerable transformations on its way from the sender to the receiver through a Web 2.0 service mashup. Consequently, the applied image obfuscation has to be robust against these transformations, at least against scaling and JPEG compression with changing quality factors. Current image encryption techniques cannot achieve this robustness to a sufficient degree, as we explain in Section 2, although they provide a high level of protection for the content.

Robust image obfuscation weighs security against robustness. While it cannot achieve the security level of current image encryption algorithms, it offers a certain level of security and robustness at the same time. This property is necessary for its use in current Web 2.0 applications and services.

In our research, we investigate obfuscation algorithms that are robust towards scaling and JPEG compression. A single algorithm alone may not deliver a satisfying level of security, but it can serve as a primitive that we can combine with others to improve the protection of the image content.

In this paper, we present our first results on robust image obfuscation algorithms: permutation in the spatial domain (Section 5) and channel intensity modulation (Section 6).

2. RELATED WORK

Marko Hassinen and Petteri Mussalo suggested a concept for client-to-client security in web applications.3 They described a scenario in which an applet in the user’s web browser encrypts textual data in HTML input fields before transmitting it to a web server. If another web browser later receives the data, the applet automatically decrypts it on the client, provided that a suitable key is available to the user. “Scramble!” is a similar proposal for transparent end-to-end encryption of textual data in social networks.4 The software was developed in the EU-funded PrimeLife project. We want to extend this end-to-end encryption of textual data to end-to-end protection of image data.

Several approaches exist for image encryption, such as the discrete parametric cosine transform proposed by Zhou et al.5 or selective bitplane encryption proposed by Podesser et al.6 and Yekkala et al.7 Even though these approaches can establish a considerable security level for the protection of image content, our research showed that they lack sufficient robustness towards image transformations: the discrete parametric cosine transform is not robust towards scaling and lossy JPEG compression, and bitplane encryption can handle JPEG compression with higher quality factors, but decryption fails when the image is scaled.

Figure 2: Image with a size of 1024 by 683 pixels, used to illustrate image transformations in the online social network Facebook

Similar problems apply to the work of Li and Yu,8 who propose a robust image encryption scheme based on permutation of DCT (discrete cosine transform) coefficients among JPEG tiles. They claim that their approach is robust towards particular image transformations such as “noising, smoothing, compressing, and even print-scan processing”, while providing strong encryption at the same time. However, in the field of application we discuss in Section 3, compression with substantial changes in the JPEG quality factor, or scaling, considerably degrades image quality (see Figure 1).

The robustness towards scaling is especially limited, since scaling changes the alignment between the tiles used to permute DCT coefficients and the 8 × 8 pixel tiles used for lossy JPEG compression. Consequently, when the image is scaled, sharp gradations along the borders of JPEG tiles in the encrypted but not yet transformed image move inside JPEG tiles. The result is blurring, which impacts the quality of the decrypted image. Using a bicubic algorithm for scaling aggravates this issue. For our test in Figure 1, the peak signal-to-noise ratio (PSNR) already drops severely, to approx. 23 dB, when the image is scaled down by a small step to 90% of the original size.
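To make the alignment argument concrete, the following Python sketch (our own illustration, not part of Li and Yu’s scheme) maps the 8-pixel block boundaries of an image through a uniform downscaling and reports which of them still coincide with the 8 × 8 grid that a subsequent JPEG compression would use. It assumes an idealized linear coordinate mapping and ignores the resampling filter; the function name is hypothetical.

```python
from fractions import Fraction

BLOCK = 8  # JPEG DCT block edge length in pixels


def aligned_boundaries(width: int, scale: Fraction) -> list[int]:
    """Return the original block boundaries (multiples of 8) that still land
    exactly on the 8-pixel JPEG grid after scaling the width by `scale`.

    Assumes an ideal linear coordinate mapping x -> x * scale (no filter effects).
    """
    aligned = []
    for x in range(0, width + 1, BLOCK):
        x_scaled = x * scale  # exact rational position after scaling
        if x_scaled.denominator == 1 and x_scaled.numerator % BLOCK == 0:
            aligned.append(x)
    return aligned


if __name__ == "__main__":
    width = 1024
    hits = aligned_boundaries(width, Fraction(9, 10))
    total = width // BLOCK + 1
    print(f"{len(hits)} of {total} block boundaries stay aligned at 90%")
```

For a 1024-pixel-wide image scaled to 90%, only the boundaries at multiples of 80 pixels (13 of 129) remain on the grid; every other sharp block edge of the encrypted image ends up inside a JPEG block, where quantization blurs it.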

The work of Gschwandtner et al.9 comes closest to our approach. They introduce mapping functions for secure and robust image encryption, with the main focus on image encryption that is robust to transmission errors in a network. In their approach, they use the Cat map and the Baker map with various numbers of iterations to encrypt an image. The encrypted image can be decrypted again with a certain quality loss after transmission errors and lossy compression. This quality loss depends on the use of additional functions that improve the security of the encryption. For lossy compression, only unmodified map functions can be applied, as otherwise robustness is entirely lost. The authors also stress the fragility of their approach with respect to desynchronisation, e.g., through pixel loss. This suggests that the approach is not robust to scaling either, an operation commonly applied in the environments we target.

Li et al.10 analyzed known- and chosen-plaintext attacks against permutation algorithms. The goal of our work is not to present a strong cryptographic algorithm but, e.g., to significantly hinder the automatic extraction of features from images for the linkage of personally identifiable information.

3. A BRIEF TOUR OF IMAGE DISTRIBUTION IN FACEBOOK

To frame the problem we discuss in this paper, we first take a quick look at how real-world Web 2.0 applications transform and distribute images uploaded by their users. To this end, we follow a fictional user, whom we call Alice, who uses the online social network Facebook to communicate with her friends and to share media content.

At some point, Alice decides to upload a new profile picture. Facebook displays this picture whenever, e.g., Alice posts to news feeds, when she appears in the friend lists of other users, or in the search function for finding friends and contacts. We assume that Alice uses the image shown in Figure 2, with a size of 1024 by 683 pixels, stored with a JPEG quality factor of 85.∗

Figure 4: Alice’s new profile image as it is shown in Facebook on the home screen (a) and on the profile page (b)

Figure 5: Alice posts the image to her personal news feed: (a) preview image as it is shown in the news feed, (b) larger version that is displayed when a user clicks the preview

After Alice has uploaded the image, it appears on her home screen next to her name. Facebook has cropped the image to a square and shrunk it to a size of 50 by 50 pixels. If she now clicks the image, Facebook shows her profile page, which contains a larger version of the picture with a size of 180 by 119 pixels and a JPEG quality factor of approx. 80. Figure 4 shows both altered versions of the original image embedded in Alice’s Facebook profile.

Now Alice decides to share her new profile image with her friends by posting it to her personal news feed (Facebook calls this feature “the wall”). To this end, she uploads the original image from Figure 2 again while creating a new post. After she has submitted the post, other users can see the image in her news feed. To present an image preview, Facebook shrinks the original image to a size of 320 by 213 pixels and recompresses it with a JPEG quality factor of approx. 75. When other users click the preview image, Facebook shows a larger version with a size of 960 by 640 pixels and a JPEG quality factor of approx. 80. Figure 5 shows the preview image in the news feed and the larger version displayed after the user clicks the preview image.

Like many other Facebook members, Alice also uses third-party applications with her Facebook account. One of these applications has access to her uploaded images to allow automatic tagging of faces. This application also loads her new profile image and displays it in the application’s user interface. The third-party application changes the size to 720 by 480 pixels and applies JPEG compression with a quality factor of approx. 75.

To sum up, images Alice uploads to Facebook are (a) used for several functions in the social network, (b) to this end, undergo image transformations such as recompression with changing quality factors, resizing and cropping, and (c) are also submitted to third-party applications that additionally transform the images on their side. Our small experiment shows that even when only a few functions of the online social network are used, the image appears in four different sizes, undergoes JPEG recompression with quality factors varying between 75 and 85, and is even cropped in one case.

∗ We use a picture of an animal (© Alexander Klink, CC-BY 3.0) instead of a portrait shot due to privacy and copyright issues.

Now we go one step further and assume that Alice wants to achieve end-to-end security for the images she uploads to Facebook. In one case, she would like to share private pictures with her friend Bob, but she does not want the provider of the social network to be able to see the content of the images. In Section 4, we give several reasons why Alice’s wish is reasonable in certain situations.

To achieve this end-to-end security, Alice applies some kind of encryption or obfuscation to her private images before she uploads them to the online social network or other Web 2.0 applications. Bob’s web browser receives the protected images while he visits Alice’s page on Facebook. However, the images Bob’s browser receives differ from the images Alice uploaded due to the transformations performed by Facebook and possibly by involved third-party applications. If the image encryption or obfuscation that Alice applied is not robust towards these transformations, Bob will be unable to decrypt or de-obfuscate the images without a considerable loss of quality. None of the existing approaches to image encryption delivers satisfying robustness, as explained in Section 2.

4. PRIVACY RISKS OF IMAGE SHARING IN WEB 2.0 APPLICATIONS

In Section 3, we explained why robustness is necessary for end-to-end image encryption or obfuscation in Web 2.0 applications. In this section, we give three examples of current privacy risks in Web 2.0 applications that call for end-to-end protection mechanisms for users’ images.

4.1 Disclosure of Private Content due to Unreliable Privacy Settings

Web 2.0 applications, and in particular online social networks, allow their users to restrict the distribution of private content. To this end, they provide privacy settings that allow users to choose a specific audience for their private data. However, there are two major problems with privacy settings:

First, privacy settings are often very complex. For example, a Facebook user has to deal with more than fifty individual controls to adjust how private data is shared. Moreover, there are additional controls for selected data objects and data collections such as photo albums. The default settings are often very permissive, and the user has to explicitly opt out of sharing data with all users of the social network.

Second, how trustworthy these privacy settings actually are depends on the reliability and security awareness of the provider of the Web 2.0 application. In the case of Facebook, the platform provider has in the past unexpectedly changed the privacy settings so that private content of the users became visible to a broader audience. The U.S. Federal Trade Commission recently required Facebook to enter into a privacy agreement as a result of its unreliability in questions of privacy.11

Furthermore, privacy settings may become ineffective due to bugs and security weaknesses. For example, in December 2011, a security weakness in the online social network Facebook allowed unauthorized persons to access certain private images of its users.12

To conclude, privacy settings in principle enable users of Web 2.0 applications to control how private data is shared with others, but they are often complex and thus difficult to handle, and their enforcement relies solely on the platform provider. Wrong configurations, technical issues including security weaknesses, and unexpected changes of privacy policies by the platform provider can cause an undesired disclosure of users’ private images, and such disclosures have already happened in the past.

If users could use end-to-end security mechanisms, such security glitches would have less dramatic consequences for their privacy. Persons who gain unauthorized access to images are not able to de-obfuscate them as long as they do not possess the correct key. Configuring the intended group of receivers for obfuscation key distribution can be done with a single, much simpler user interface compared to the current service- and function-specific privacy settings.

Figure 6: Picture in an article in the print version of the German magazine DER SPIEGEL that could be the target of a re-identification attack with robust image hashes

4.2 Extraction of Biometric Features and Face Recognition in Web 2.0 Applications

An emerging privacy issue is the extraction of biometric features from images in Web 2.0 applications in order to identify depicted persons: in 2011, the online social networks Facebook and Google+ introduced face recognition techniques that automatically tag faces in uploaded images. To this end, the providers of the online social networks extract biometric features of their users and collect them in biometric databases. These features are later fed into the tagging algorithms. Such databases pose serious privacy risks, since they may allow persons in images to be identified against their will, either by the owner of the database or by attackers who gain unauthorized access.

At the same time, the extraction of biometric features keeps improving because of continuous technical advances in digital photography. The increasing resolution and quality of digital images lead to more accurate results of extraction algorithms. Emerging three-dimensional photography makes it possible to extract biometric features from the three-dimensional shape of the face, further improving the performance of face recognition.

4.3 Image Re-Identification with Robust Image Hashes

Today, a number of robust hashing or image fingerprinting techniques are known that can identify matching images even after serious degradation of the images’ quality.13 Depending on the applied algorithm, this includes cropping, scaling, lossy compression and rotation.

For the privacy of depicted persons, these techniques can have serious consequences. In some cases, persons are anonymized by blurring their facial regions. With an image hash that is robust against blurring, matching the image to the original would still be possible. As a consequence, an attacker could crawl social networks to build a database of robust image hashes together with metadata belonging to the images, in particular the identities of the depicted persons. If a newspaper publishes a blurred version of an image from a social network, the attacker can identify the person by calculating the hash of the blurred image, matching it to the original image and retrieving the associated metadata.
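The matching step can be illustrated with a toy “average hash”, a simple, widely known perceptual hash that is far weaker than the techniques surveyed in reference 13. The Python sketch below is an illustration under explicit assumptions only: the synthetic test images, the box blur standing in for face blurring, and all function names are hypothetical.

```python
def average_hash(img: list[list[float]], size: int = 8) -> int:
    """Toy perceptual hash: average the image over a size x size grid of blocks
    and emit one bit per block (1 if brighter than the mean of all blocks).
    Assumes width and height are multiples of `size`."""
    h, w = len(img), len(img[0])
    bh, bw = h // size, w // size
    cells = []
    for by in range(size):
        for bx in range(size):
            block = [img[y][x]
                     for y in range(by * bh, (by + 1) * bh)
                     for x in range(bx * bw, (bx + 1) * bw)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for c in cells:
        bits = (bits << 1) | (c > mean)  # append one bit per block
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def box_blur(img: list[list[float]], radius: int = 1) -> list[list[float]]:
    """Mean filter with clamped borders, standing in for the blurring of a face."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                    for dy in range(-radius, radius + 1)
                    for dx in range(-radius, radius + 1)]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


if __name__ == "__main__":
    photo = [[255 if x >= 32 else 0 for x in range(64)] for y in range(64)]
    other = [[255 if y < 32 else 0 for x in range(64)] for y in range(64)]
    print(hamming(average_hash(photo), average_hash(box_blur(photo))))  # 0
    print(hamming(average_hash(photo), average_hash(other)))            # 32
```

In this toy setting, the blurred copy produces the same 64-bit hash as the original, while an unrelated image differs in half of the bits; a database lookup by Hamming distance would therefore still link the blurred image to its source.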

As an example, Figure 6 shows a blurred image of a suicide victim in a German magazine. As the source attribution indicates, the editor took the image from the online social network Facebook. An attacker may be able to find the image on Facebook by means of a database of robust image hashes and thus de-anonymize the suicide victim.

5. OBFUSCATION BY PERMUTATION IN SPATIAL DOMAIN

5.1 Overview

One well-known approach to robust image obfuscation is the permutation of image regions in the spatial domain. The main idea is to split the image into parts of equal shape (tiles) and to rearrange these parts in a way that is only known to the sender and receiver of the image. The resulting obfuscated image resembles a jigsaw puzzle. Such a permutation algorithm can be constructed as shown in pseudocode in Algorithm 1.

Figure 7: Result of conventional permutation in the spatial domain: (a) original image, (b) image after permutation of image regions, (c) de-permuted image with blurred tile borders. The de-permutation took place after applying lossy JPEG compression with a quality factor of 45 to the permuted image. (Picture: Public Domain, by Jon Sullivan)

Algorithm 1 Puzzling a gray-scale image
Input: Gray-scale image G with width w > 0 and height h > 0
Input: Tile length l which satisfies 0 < l < min(w, h); for simplicity, we assume w ≡ h ≡ 0 (mod l)
Input: Permutation function p_k dependent on secret key k
Output: Gray-scale image Ĝ with width w and height h
for u = 1 to w/l do
    for v = 1 to h/l do
        (û, v̂) ← p_k((u, v))   {With the bijective permutation function p_k, calculate the tile position (û, v̂) in the output image from the tile position (u, v) in the input image.}
        for m = 1 to l do
            x ← (u − 1) · l + m   {Calculate column x in input image}
            x̂ ← (û − 1) · l + m   {Calculate column x̂ in output image}
            for n = 1 to l do
                y ← (v − 1) · l + n   {Calculate row y in input image}
                ŷ ← (v̂ − 1) · l + n   {Calculate row ŷ in output image}
                Ĝ(x̂, ŷ) ← G(x, y)   {Copy single pixel gray value from input to output image}
            end for
        end for
    end for
end for
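A runnable Python sketch of Algorithm 1, including the inverse step used for de-obfuscation, is given below. It uses 0-based, row-major tile indices instead of the (u, v) pairs of the pseudocode, and the key-dependent permutation p_k is a hypothetical stand-in built on Python’s random.Random, which is not cryptographically secure; a real system would derive the permutation from the key with a cryptographic primitive.

```python
import random


def key_permutation(n_tiles: int, key: int) -> list[int]:
    """Hypothetical stand-in for p_k: a key-dependent bijection on tile indices.

    random.Random is NOT cryptographically secure; it only illustrates the idea.
    """
    perm = list(range(n_tiles))
    random.Random(key).shuffle(perm)
    return perm


def puzzle(img: list[list[int]], l: int, key: int, inverse: bool = False) -> list[list[int]]:
    """Permute the l x l tiles of a gray-scale image given as a list of rows.

    inverse=False obfuscates (Algorithm 1); inverse=True undoes the permutation.
    Tiles are numbered row-major: index = v * cols + u with 0-based u, v.
    """
    h, w = len(img), len(img[0])
    if w % l or h % l:
        raise ValueError("width and height must be multiples of the tile length l")
    cols, rows = w // l, h // l
    perm = key_permutation(cols * rows, key)
    out = [[0] * w for _ in range(h)]
    for t in range(cols * rows):
        # forward: input tile t goes to output slot perm[t]; inverse swaps the roles
        src, dst = (perm[t], t) if inverse else (t, perm[t])
        u, v = src % cols, src // cols      # tile column/row in the input image
        uh, vh = dst % cols, dst // cols    # tile column/row in the output image
        for m in range(l):                  # column offset inside the tile
            for n in range(l):              # row offset inside the tile
                out[vh * l + n][uh * l + m] = img[v * l + n][u * l + m]
    return out


if __name__ == "__main__":
    image = [[(x + 7 * y) % 256 for x in range(12)] for y in range(8)]
    scrambled = puzzle(image, l=4, key=1234)
    assert puzzle(scrambled, l=4, key=1234, inverse=True) == image
```

Inverting only requires swapping the roles of source and target tile, because p_k is a bijection.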

First, without modifications to the algorithm, permutation of image regions is not very robust towards image transformations. The reason is that the rearrangement of the tiles results in new sharp gradations at the borders of the permuted image regions. As a consequence, the image gains energy particularly in the high frequencies of its spectrum. This causes blurring of the borders when the image is compressed with JPEG, which acts similarly to a low-pass filter. Figure 7 shows an example.

Second, an unmodified permutation algorithm is vulnerable to image reconstruction algorithms. These algorithms use heuristics that can significantly reduce the effort of solving the jigsaw puzzle, a problem that is NP-complete in general, compared to checking all possible combinations of the pieces. The recent work of Cho et al. provides a good overview of current techniques for solving jigsaw puzzles.14

From the state of the art, we can conclude, in simplified terms, that jigsaw puzzle solvers are currently able to handle puzzles with fewer than 500 pieces with reasonable effort. In practice, the solutions provided by jigsaw puzzle solvers vary in completeness. However, this variance is not important for our discussion, since we assume that these algorithms always yield the complete solution, which is the most unfavorable case for us.

Figure 8: How a tile is enlarged by adding margins and filling them with the pixel values of border pixels. Panels: the original l × l tile with corner pixels (1,1), (l,1), (1,l) and (l,l); the enlarged tile with margin width m, whose core, created by directly copying the original tile, spans from (m+1, m+1) to (m+l, m+l), and whose corners are (1,1), (l̂,1), (1,l̂) and (l̂,l̂).

In Sections 5.2 and 5.3, we propose solutions to both problems.

5.2 Reducing Border Blurring

The permutation described above can be modified so that the defect rate declines steeply. To this end, each tile is supplemented with a margin that “intercepts” the defects. This margin is removed when the image is de-obfuscated. The margin is filled by copying the values of the tile’s border pixels. This step is necessary to prevent defects in the de-obfuscated image that result from inaccurate alignment between the image content and the calculated tile borders.

Algorithm 2 shows in pseudocode how to create the margin for a single gray-scale tile. The algorithm consists of three parts:

1. All gray values of the original tile are copied to the inner region of the enlarged tile. We call this inner region the core of the enlarged tile.

2. Gray values of the original tile’s border pixels are copied to the margin bars above, below, left and right of the core.

3. Gray values of the original tile’s corner pixels fill the corners of the enlarged tile.

Figure 8 illustrates how to create a margin of width 2 pixels for a tile with edge length 3. The illustration uses the notation from Algorithm 2.

Figure 10 shows a practical example of enlarged tiles in an obfuscated image: the original image from Figure 7 is split into nine tiles, the tiles are shuffled, and an additional tile margin of 6 pixels is added. Of course, adding margins increases the size of the image (cf. Section 5.3.2). In practice, however, rather thin margins suffice. The diagrams in Figure 11 show the relation between margin width and the PSNR of the de-obfuscated image with respect to the original image, in this case for the image in Figure 7. It turns out that if we only consider robustness towards recompression with lower JPEG quality factors, a margin width of 2 pixels is already sufficient. Regarding image scaling, a margin of 2 pixels tolerates downscaling to 30 percent of the original size. For lower scaling factors, the margin needs to be increased to prevent image degradation.

Figure 12 demonstrates the robustness against JPEG compression with decreasing quality factors and against scaling.
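Since the quality results above are stated as PSNR, the following sketch shows the standard definition for 8-bit gray-scale images, PSNR = 10 · log10(255² / MSE), where MSE is the mean squared pixel difference. It is a minimal illustration, not the evaluation code behind Figures 11 and 12, and it does not reflect how color channels were handled in those measurements.

```python
import math


def psnr(original: list[list[float]], distorted: list[list[float]],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized gray-scale images."""
    squared_errors = [(a - b) ** 2
                      for row_a, row_b in zip(original, distorted)
                      for a, b in zip(row_a, row_b)]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ref = [[100] * 16 for _ in range(16)]
    off_by_one = [[101] * 16 for _ in range(16)]
    print(round(psnr(ref, off_by_one), 2))  # 48.13
```

A uniform error of one gray level gives 20 · log10(255) ≈ 48.13 dB; larger errors lower the value logarithmically.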

Algorithm 2 Creating an enlarged tile with margin
Input: Tile T representing a square region of a gray-scale image with edge length l > 0
Input: Margin width m > 0
Output: Enlarged square tile T̂ with edge length l̂ = l + 2m that contains the tile T as its core and additional surrounding margins of width m to improve robustness towards JPEG compression and scaling
{Create tile core}
for u = 1 to l do
    for v = 1 to l do
        x ← m + u   {Column index in enlarged tile is shifted by margin width}
        y ← m + v   {Row index in enlarged tile is shifted by margin width}
        T̂(x, y) ← T(u, v)   {Copy single pixel gray value from input tile to the core of the output tile}
    end for
end for
{Create upper, lower, left and right margins}
for u = 1 to l do
    for v = 1 to m do
        x̂ ← m + u
        ŷ ← m + 1 − v
        T̂(x̂, ŷ) ← T(u, 1)   {Copy single pixel gray value from input tile to the upper margin of the output tile}
        T̂(ŷ, x̂) ← T(1, u)   {Copy single pixel gray value from input tile to the left margin of the output tile}
        ŷ ← m + l + v
        T̂(x̂, ŷ) ← T(u, l)   {Copy single pixel gray value from input tile to the lower margin of the output tile}
        T̂(ŷ, x̂) ← T(l, u)   {Copy single pixel gray value from input tile to the right margin of the output tile}
    end for
end for
{Create corners}
l̂ ← l + 2m
for u = 1 to m do
    for v = 1 to m do
        x̂ ← l̂ − u + 1
        ŷ ← l̂ − v + 1
        T̂(u, v) ← T(1, 1)   {Copy single pixel gray value from input tile to the top left corner of the output tile}
        T̂(x̂, v) ← T(l, 1)   {Copy single pixel gray value from input tile to the top right corner of the output tile}
        T̂(u, ŷ) ← T(1, l)   {Copy single pixel gray value from input tile to the bottom left corner of the output tile}
        T̂(x̂, ŷ) ← T(l, l)   {Copy single pixel gray value from input tile to the bottom right corner of the output tile}
    end for
end for
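The three loops of Algorithm 2 amount to clamping every coordinate of the enlarged tile back into the original tile. The Python sketch below (function names hypothetical) uses this observation and also shows the inverse step that removes the margin during de-obfuscation. NumPy users could obtain the same enlarged tile with numpy.pad(tile, m, mode="edge").

```python
def enlarge_tile(tile: list[list[int]], m: int) -> list[list[int]]:
    """Return the (l + 2m) x (l + 2m) enlarged tile of Algorithm 2.

    Clamping each enlarged-tile coordinate back into 0..l-1 copies the core
    unchanged, replicates border pixels into the four margin bars, and fills
    the four m x m corners with the corresponding corner pixel.
    """
    l = len(tile)
    size = l + 2 * m
    return [[tile[min(max(y - m, 0), l - 1)][min(max(x - m, 0), l - 1)]
             for x in range(size)]
            for y in range(size)]


def crop_margin(enlarged: list[list[int]], m: int) -> list[list[int]]:
    """De-obfuscation step: discard the margin and keep only the l x l core."""
    return [row[m:len(row) - m] for row in enlarged[m:len(enlarged) - m]]


if __name__ == "__main__":
    tile = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]  # l = 3, as in Figure 8
    big = enlarge_tile(tile, m=2)
    for row in big:
        print(row)
    assert crop_margin(big, 2) == tile
```

For the 3 × 3 example with m = 2, the middle row of the enlarged tile reads [4, 4, 4, 5, 6, 6, 6]: the core is untouched and each margin pixel repeats its nearest border pixel.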

Figure 10: Adding margins to tiles: (a) original image from Figure 7 with image regions permuted using nine tiles and a margin of 6 pixels, (b) the same image with the margins marked by a checkerboard pattern for the purpose of illustration

Figure 11: Impact of recompression with decreasing JPEG quality factor and of downscaling on the quality of the de-obfuscated test image from Figure 10: (a) relation between JPEG quality factor and PSNR for different margin widths, (b) relation between scale ratio and PSNR for different margin widths at a JPEG quality factor of 75

Figure 12: Obfuscating an example image with 104 tiles and a margin width of 2 pixels: (a) obfuscated image, (b) de-obfuscated images obtained from the obfuscated image after JPEG compression with several quality factors; the corresponding part of the original, uncompressed image is shown on the left, (c) de-obfuscated images obtained from the obfuscated image after JPEG compression (quality factor 80) and scaling with several scaling rates

5.3 Countering Jigsaw Solvers

In general, there are several ways to obstruct jigsaw solver algorithms:

• Increasing the number of possible permutations, which enlarges the search space of the solver algorithms.

• Reducing or transforming the information contained in the permuted objects, which provides the solver heuristics with fewer clues. Consequently, the solver algorithms have to explore a larger part of the solution space.

• Adding redundant information to the permuted objects that misleads solver algorithms but can be removed during de-obfuscation. As far as possible, the redundant information should be indistinguishable from the original information.

In the following, we discuss approaches that implement these principles.

5.3.1 Rotating and Mirroring Tiles

A low-hanging fruit for increasing the number of available permutations is to mirror the tiles about the horizontal or vertical axis and to rotate them in steps of 90°. The secret obfuscation key k can be used to determine the mirror and rotation transformations applied to a given tile and to undo them during de-obfuscation.
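The eight tile orientations (four quarter turns, each with or without a mirror) and their inverses can be sketched in Python as follows. A mirror about the horizontal axis equals a mirror about the vertical axis followed by a 180° rotation, so mirroring about one axis already covers both. The key schedule orientations_from_key is a hypothetical, non-cryptographic placeholder.

```python
import random


def rotate90(tile):
    """Rotate a square tile (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*tile[::-1])]


def mirror(tile):
    """Mirror a tile about its vertical axis (left-right flip)."""
    return [row[::-1] for row in tile]


def orient(tile, o: int):
    """Apply orientation o in 0..7: (o % 4) clockwise quarter turns, then a mirror if o >= 4."""
    for _ in range(o % 4):
        tile = rotate90(tile)
    return mirror(tile) if o >= 4 else tile


def unorient(tile, o: int):
    """Invert orient(): undo the mirror first, then complete the full turn."""
    if o >= 4:
        tile = mirror(tile)
    for _ in range((4 - o % 4) % 4):
        tile = rotate90(tile)
    return tile


def orientations_from_key(n_tiles: int, key: int) -> list[int]:
    """Hypothetical key schedule: one orientation per tile (not cryptographically secure)."""
    rng = random.Random(key)
    return [rng.randrange(8) for _ in range(n_tiles)]
```

Because every orientation is a lossless rearrangement of pixels, unorient restores the tile exactly, which matches the statement that rotation and mirroring do not affect the quality of the de-obfuscated image.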

Figure 13: Relation between the increase in image size caused by obfuscation and the tile size, for different margin widths

The total number of permutations for the conventional image permutation algorithm described in Section 5.1 is $|T|!$, where $T$ is the set of all image tiles. Since each tile can additionally take one of 8 distinct orientations (four rotations, each with or without mirroring), the proposed rotating and mirroring of tiles increases this total number to $|T|! \cdot 8^{|T|}$. Rotating and mirroring tiles does not impact the quality of the de-obfuscated image.
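The size of this permutation space is easy to compute; the Python sketch below returns both the exact count and its base-2 logarithm (function names hypothetical). As the discussion of jigsaw solvers above makes clear, this count only bounds a brute-force search; solvers exploit image content and may need far less effort.

```python
import math


def permutation_count(n_tiles: int, with_orientation: bool = True) -> int:
    """Number of tile arrangements: |T|!, times 8^|T| if tiles may also be rotated/mirrored."""
    count = math.factorial(n_tiles)
    return count * 8 ** n_tiles if with_orientation else count


def keyspace_bits(n_tiles: int, with_orientation: bool = True) -> float:
    """log2 of permutation_count(), via lgamma so large tile counts stay cheap."""
    bits = math.lgamma(n_tiles + 1) / math.log(2)           # log2(|T|!)
    return bits + 3 * n_tiles if with_orientation else bits  # log2(8^|T|) = 3|T|


if __name__ == "__main__":
    for n in (9, 768):
        print(n, "tiles:", round(keyspace_bits(n, False), 1), "bits without orientation,",
              round(keyspace_bits(n), 1), "bits with orientation")
```

For the nine tiles of Figure 10, this gives 9! = 362,880 arrangements without and 362,880 · 8⁹ = 48,704,929,136,640 (about 2^45.5) with rotation and mirroring.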

5.3.2 Decreasing Tile Size

Decreasing the tile size is another way to increase the number of available permutations and, at the same time, to reduce the information contained in a single tile. However, the width of the tile margins is determined only by the desired robustness, not by the tile size. Thus, the ratio between the size of a tile and the size of its margins changes when the tile size decreases; consequently, image width and height increase more during obfuscation. Figure 13 shows this relation.

As described in Section 5.2, a margin width of 2 pixels is sufficient to achieve robustness towards compression with lower JPEG quality factors and scaling down by a factor of 5. In this case, each tile of edge length l grows to l + 4 pixels, so limiting the increase in image width and height to at most 20% requires a tile edge length of at least 20 pixels. However, even then, a small image with a size of 640 by 480 pixels is divided into 768 tiles.
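The trade-off can be checked with a few lines of arithmetic: every tile of edge length l grows to l + 2m, so width and height grow by the factor 1 + 2m/l. The Python sketch below (function names hypothetical) reproduces the numbers above.

```python
def size_increase(tile_edge: int, margin: int) -> float:
    """Relative growth of width (and height): every l-pixel tile becomes l + 2m pixels."""
    return 2 * margin / tile_edge


def min_tile_edge(margin: int, max_increase: float) -> int:
    """Smallest integer tile edge l whose per-dimension growth 2m / l stays <= max_increase."""
    l = 1
    while size_increase(l, margin) > max_increase:
        l += 1
    return l


def tile_count(width: int, height: int, tile_edge: int) -> int:
    """Number of tiles, assuming width and height are multiples of the tile edge."""
    return (width // tile_edge) * (height // tile_edge)


if __name__ == "__main__":
    l = min_tile_edge(margin=2, max_increase=0.2)
    print("tile edge:", l)
    print("tiles in a 640 x 480 image:", tile_count(640, 480, l))
    print("pixel-count growth:", round((1 + size_increase(l, 2)) ** 2 - 1, 2))
```

With m = 2 and a 20% limit, this yields l = 20 and 32 · 24 = 768 tiles for a 640 × 480 image. Because both dimensions grow, the number of pixels grows by 1.2² − 1 = 44%.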

5.3.3 Combination with Channel Intensity Modulation

Permutation in the spatial domain can be combined with the channel intensity modulation described in Section 6. In this case, the tiles are not only moved to different positions in the image, but a pseudo-random pattern is additionally added to the color channel values of the tiles’ pixels. As a result, solver algorithms cannot use, e.g., the average color values of tiles to find neighboring ones. In other words, the combination of both methods reduces the amount of information in tiles that provides clues for jigsaw solvers.

6. OBFUSCATION BY CHANNEL INTENSITY MODULATION

6.1 General concept

This approach is based on adding a pseudo-random pattern to one or more color channels. The level of obfuscation

depends on various factors:

• Strength of the pattern relative to the image
• Use of cyclic addition
• Pattern resolution

The strength of the pattern is defined by the range of its individual values. Image color channel values typically range from 0 to 255 when the full contrast is used. The pattern can cover the same range, but this requires a strategy for handling overflows when the color channel and the pattern are added. An alternative is to limit the range of both the image channel and the pattern. If both are reduced to a range of 0 to 127, their sum never exceeds 254, so overflows cannot occur; on the other hand, this results in a significant loss of contrast.

The pattern resolution must be high enough for the pattern to interfere effectively with the structure of the image to be obfuscated. On the other hand, a smaller pattern is cheaper to compute. Patterns with an edge length of 40% to 50% of the image edge length show sufficient masking characteristics. An image of 150 × 150 pixels can therefore be masked by a pattern of 64 × 64 pixels.

Figure 14: Artifacts in a de-obfuscated image (right) caused by cyclic addition overflows; the original image is shown on the left.

When cyclic addition is applied, overflows are ignored and the addition of two values a and b results in (a + b) mod 256. While this is simple to implement, the risk of visible artifacts in the resulting images is high for values close to 0 or 255: if a = 255 and b = 5, the resulting value is 4. If this value is increased, e.g. by lossy compression, to 5 or more, subtracting b to recover the original value of a yields 0 or more, which is far from 255. This produces visible artifacts in the form of pixels that differ significantly from their surroundings, see Figure 14.
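The worked example above, written out as a short Python snippet: a single level of compression error on the obfuscated value turns into an error of 255 after cyclic subtraction.

```python
def cyclic_add(a: int, b: int) -> int:
    """Addition modulo 256: overflows wrap around instead of saturating."""
    return (a + b) % 256


def cyclic_sub(a: int, b: int) -> int:
    """Inverse of cyclic_add."""
    return (a - b) % 256


a, b = 255, 5
o = cyclic_add(a, b)                # 260 mod 256 = 4
print(o, cyclic_sub(o, b))          # 4 255  -> exact recovery
o_compressed = o + 1                # lossy compression nudges 4 up to 5
print(cyclic_sub(o_compressed, b))  # 0      -> off by 255 instead of by 1
```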

A less drastic strategy than limiting both the image and the mask avoids the negative effect of overflows. Overflow artifacts only occur when image values are close to the borders 0 or 255 and subsequent modifications move the recovered values across these borders. If the image is limited to a range sufficiently far from these borders, artifacts do not occur. Our evaluation identified 5 to 250 as a suitable range, causing a small quality loss and no artifacts. Figure 15 shows the results for the “Lena” image.
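Why a guard band helps can be checked exhaustively with a small NumPy sketch. It models compression as additive per-pixel noise of at most `max_err` levels on the obfuscated value, clipped to 0..255 as a decoder would; this is a simplifying assumption, since real JPEG errors are spatially correlated and can exceed a few levels at sharp edges. Under this model, limiting the image to 5..250 keeps every recovery error within ±5, whereas the full range allows errors of up to 255.

```python
import numpy as np


def recovery_error_range(lo: int, hi: int, max_err: int) -> tuple[int, int]:
    """Min and max of (recovered - original) over all image values in [lo, hi],
    all pattern values 0..255 and all compression errors in [-max_err, max_err]."""
    i = np.arange(lo, hi + 1).reshape(-1, 1, 1)           # image values
    p = np.arange(256).reshape(1, -1, 1)                  # pattern values
    e = np.arange(-max_err, max_err + 1).reshape(1, 1, -1)
    o = (i + p) % 256                                     # cyclic addition
    o_noisy = np.clip(o + e, 0, 255)                      # decoded pixels are clipped, not wrapped
    recovered = (o_noisy - p) % 256                       # cyclic subtraction
    diff = recovered - i
    return int(diff.min()), int(diff.max())


print(recovery_error_range(5, 250, 5))  # (-5, 5): no wrap-around
print(recovery_error_range(0, 255, 5))  # (-255, 255): wrap-around possible
```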

6.2 Algorithm Description

The following pseudo code describes the complete approach.

To create an obfuscated copy of an image:

1. Load image I of size x, y and value range 0 to 255.
2. Create pattern P of size n and value range 0 to 255 with secret key k.
3. Resize P to size x, y; the result is P′.
4. Limit I to the range 5 to 250; the result is I′.
5. Create the obfuscated image O by adding P′ to I′ using cyclic addition (modulo 256).

The user can now save O with lossy compression; the result is O′. To undo the obfuscation, a recipient needs O or a copy O′ as well as k:

1. Load O or O′ of size x, y.
2. Create pattern P of size n and value range 0 to 255 with secret key k.
3. Resize P to size x, y; the result is P′.
4. Create image I″ by subtracting P′ from O or O′ using cyclic subtraction (modulo 256).

The result I″ should have a quality comparable to that of I after passing through a similar distribution channel.
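The two step lists can be sketched in Python with NumPy for a single grayscale channel. Several details are assumptions not fixed by the text: the pattern comes from NumPy's seeded PCG64 generator (a stand-in for a one-time pad or chaotic sequence, and not cryptographically secure), P is enlarged to P′ by nearest-neighbour interpolation, and "limit" is implemented as clipping.

```python
import numpy as np


def make_pattern(key: int, n: int) -> np.ndarray:
    """Step 2: n x n pattern P with values 0..255 derived from the key.
    Assumption: a seeded NumPy PRNG replaces a secure pattern source."""
    rng = np.random.default_rng(key)
    return rng.integers(0, 256, size=(n, n), dtype=np.int64)


def resize_nearest(p: np.ndarray, height: int, width: int) -> np.ndarray:
    """Step 3: enlarge P to the image size (nearest-neighbour, assumed)."""
    rows = np.arange(height) * p.shape[0] // height
    cols = np.arange(width) * p.shape[1] // width
    return p[np.ix_(rows, cols)]


def obfuscate(image: np.ndarray, key: int, n: int) -> np.ndarray:
    h, w = image.shape
    p_prime = resize_nearest(make_pattern(key, n), h, w)  # P'
    i_prime = np.clip(image.astype(np.int64), 5, 250)     # step 4: I' (clipping assumed)
    return ((i_prime + p_prime) % 256).astype(np.uint8)   # step 5: cyclic addition


def deobfuscate(obf: np.ndarray, key: int, n: int) -> np.ndarray:
    h, w = obf.shape
    p_prime = resize_nearest(make_pattern(key, n), h, w)  # same P' from the same key
    return ((obf.astype(np.int64) - p_prime) % 256).astype(np.uint8)  # I''


img = np.full((150, 150), 128, dtype=np.uint8)
obf = obfuscate(img, key=2012, n=64)  # 64 x 64 pattern for a 150 x 150 image
rec = deobfuscate(obf, key=2012, n=64)
```

Without any channel distortion, I″ equals the limited image I′ exactly; the only loss is the range limiting itself. For color images, the same functions would be applied per channel.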

6.3 Security Features

To create a pseudo-random pattern for obfuscation, a secret key k is necessary. This key must be available both to the obfuscating party and to the viewer of the image; the security of the key exchange is not discussed in this work. Both one-time pads and chaotic sequences can serve as the basis of the pattern. Sometimes only a limited level of obfuscation is required. In this case, both pattern size and pattern strength can be reduced. Small values result in an obfuscated image whose content remains perceivable while details are masked: robots are prevented from applying image recognition and extracting biometric features, while human viewers can still estimate the content.
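As an illustration of a chaotic-sequence pattern, the sketch below uses the logistic map $x_{k+1} = r\,x_k(1 - x_k)$, a common chaotic map; the text does not say which map is used, so this choice is an assumption, as are treating $(x_0, r)$ as the secret key and the burn-in length. The `strength` parameter scales the value range, and a smaller `n` gives a coarser pattern, corresponding to the weaker obfuscation described above.

```python
import numpy as np


def logistic_pattern(x0: float, r: float, n: int, strength: int = 255, burn_in: int = 1000) -> np.ndarray:
    """n x n pattern with values 0..strength from a logistic-map sequence.
    (x0, r) act as the key (assumption); r close to 4 gives chaotic behaviour."""
    if not (0.0 < x0 < 1.0 and 0.0 < r <= 4.0):
        raise ValueError("x0 must lie in (0, 1) and r in (0, 4]")
    x = x0
    for _ in range(burn_in):  # discard the transient part of the sequence
        x = r * x * (1.0 - x)
    values = np.empty(n * n)
    for k in range(n * n):
        x = r * x * (1.0 - x)
        values[k] = x
    # Map (0, 1) to 0..strength; smaller strength means weaker obfuscation
    scaled = np.floor(values * (strength + 1)).clip(0, strength)
    return scaled.astype(np.int64).reshape(n, n)


strong = logistic_pattern(0.3141, 3.99, 64)             # full range, fine pattern
weak = logistic_pattern(0.3141, 3.99, 16, strength=63)  # reduced size and strength
```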

Figure 15: The “Lena” image after channel limiting, obfuscation with a pattern of half the image size, and de-obfuscation; the last image shows the obfuscation result with a 16×16-pixel pattern. (Panels: original, limited, pattern, obfuscated, de-obfuscated, obfuscated with 16×16 pattern.)

Figure 17: Dependence of PSNR (in dB) on JPEG quality factor (a) and scaling rate in % (b); each diagram compares obfuscated and non-obfuscated images.

7. TESTS AND RESULTS

7.1 Permutation of Image Regions

We tested the robustness of the image region permutation by obfuscating and de-obfuscating the images of the Corel 1000 database for CBIR (http://wang.ist.psu.edu/docs/related/) with different parameterizations. The quality of the de-obfuscation result is measured with the peak signal-to-noise ratio (PSNR). For all tests, a tile edge length of 15 pixels and a margin width of 2 pixels were used.
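For reference, a minimal PSNR computation for 8-bit images, using the standard definition $\mathrm{PSNR} = 10 \log_{10}(255^2 / \mathrm{MSE})$ in dB. The exact implementation used for the evaluation is not given in the text.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    mse = np.mean((reference.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))
```

Since PSNR is logarithmic in the MSE, a gap of 2 dB between obfuscated and non-obfuscated images corresponds to an MSE about 10^0.2 ≈ 1.58 times larger, and a gap of 4 dB to about 2.51 times larger.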

The left diagram (a) in Figure 17 shows the PSNR for different JPEG quality factors without resizing the images. The right diagram (b) shows the PSNR for image resizing with different scaling rates; the aspect ratio was not changed and the JPEG quality factor was 80. For comparison, the PSNR of non-obfuscated images that passed through the same transformations is also plotted in both diagrams.

For changes of the quality factor, the PSNR difference between obfuscated and non-obfuscated images is stable at approx. 2 dB. Scaling behaves differently: the PSNR difference increases with decreasing image size and reaches approx. 4 dB at a scaling rate of 20%.

However, a scaling rate of 20% means that the de-obfuscated image has only 4% (0.2²) of the pixels of the original image, which is a considerable quality loss caused by the transformation itself.

7.2 Channel Intensity Modulation

To evaluate the behavior of our masking approach with respect to lossy JPEG compression, we executed the following test:

1. Create a masked copy of Lena with an image size of 400 × 400 pixels, a mask size of 200 × 200 pixels and a masking range of 255.
2. Compress the masked image with JPEG quality factors from 100 down to 10 in steps of 10, and finally with quality factor 1.
3. Unmask the compressed image.
4. Calculate the average pixel difference between the original and the copy that was masked, compressed and unmasked.

Figure 18: (a) Dependence of pixel difference (average and maximum) on JPEG quality factor, (b) average pixel difference for the Waterloo images
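The test procedure can be sketched as a small harness. The masking, unmasking and JPEG round-trip steps are passed in as callables; `jpeg_roundtrip(image, quality)` is a hypothetical function standing in for encoding and decoding with any JPEG implementation, and the maximum difference is recorded alongside the average because Figure 18 (a) reports both.

```python
from typing import Callable

import numpy as np

Transform = Callable[[np.ndarray], np.ndarray]
Codec = Callable[[np.ndarray, int], np.ndarray]  # hypothetical (image, quality) -> decoded image


def jpeg_sweep(original: np.ndarray, mask: Transform, unmask: Transform,
               jpeg_roundtrip: Codec) -> dict[int, tuple[float, int]]:
    """Mask once, then compress, unmask and compare for each quality factor.
    Returns {quality: (average pixel difference, maximum pixel difference)}."""
    qualities = list(range(100, 0, -10)) + [1]  # 100, 90, ..., 10, then 1
    masked = mask(original)
    results = {}
    for q in qualities:
        restored = unmask(jpeg_roundtrip(masked, q))
        diff = np.abs(restored.astype(np.int64) - original.astype(np.int64))
        results[q] = (float(diff.mean()), int(diff.max()))
    return results
```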

The left diagram in Figure 18 shows a linear increase of the pixel difference, as expected. This increase is caused both by JPEG lossy compression and by unmasking errors due to the changes JPEG introduces. It must also be noted that the maximum pixel difference in the Lena example is 70. A cyclic overflow would produce differences close to 255, so this indicates that overflow errors causing high local differences were avoided in this example.

We applied our masking algorithm to the full set of images of the Waterloo image repository (http://links.uwaterloo.ca/Repository.html), grayscale set 2, to evaluate its behavior with different photos. Here we used a JPEG quality factor of 65, a strong but still acceptable compression. As an evaluation criterion, we again calculated the average pixel difference. The right diagram in Figure 18 shows that this difference is low for all examples, but that it depends on image characteristics. The worst result occurs for “library”: plain white areas in the photo trigger overflow errors during unmasking that are not avoided by our standard choice of limiting pixel values to between 5 and 250. The same holds, to a much lower extent, for “peppers2”, “france”, “frog” and “mountain”, while the other images show no overflow errors. This first evaluation suggests that the approach is especially suitable for human portraits, which makes it well suited for privacy protection.

8. CONCLUSION AND OUTLOOK

In this work, we show that image obfuscation that is robust to lossy compression and scaling is possible. These two operations can be assumed to be the most typical in our target application domain of Web 2.0 community platforms. With this obfuscation, a third-party platform can be used as a distribution channel for images without giving this platform access to their content. At the same time, the platform can apply its common content processing methods without preventing de-obfuscation. This is the core difference from conventional cryptography, which does not allow processing in the encrypted state.

The first results are promising. Both permutation and masking are robust to common JPEG compression. For permutation, robustness to scaling has also been demonstrated by adding margins to the permuted tiles. Both approaches feature parameters to control the degree of obfuscation and security. Therefore, the same algorithms can achieve both strong obfuscation similar to encryption and weak obfuscation that disables automatic face recognition or similar methods.

As this work is at an early stage, a number of open questions remain for future work. More detailed testing of how the algorithms depend on image characteristics is necessary. The behavior of a combination of permutation and masking must also be evaluated. To counter artifacts caused by the algorithm, additional image processing may be applied at the de-obfuscation stage; for example, cyclic overflows may be countered by local low-pass filtering. Another approach to enhance the security of the permutation may be to split the image in the frequency domain into a high-pass and a low-pass image, permute these two images independently in the spatial domain, and join them again in the frequency domain to generate the obfuscated image.
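The suggestion of countering cyclic overflows with local low-pass filtering could look roughly like the sketch below. Assumptions: an overflow pixel is detected as one that differs from the mean of its 8 neighbours by more than 128 levels (a wrap-around shifts a value by roughly 256), and only such pixels are replaced by that neighbour mean, so the rest of the image is left untouched.

```python
import numpy as np


def repair_overflows(img: np.ndarray, threshold: int = 128) -> np.ndarray:
    """Replace likely cyclic-overflow pixels by the mean of their 8 neighbours."""
    f = img.astype(np.float64)
    padded = np.pad(f, 1, mode="edge")  # replicate borders so every pixel has 8 neighbours
    h, w = f.shape
    neighbour_sum = np.zeros_like(f)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy or dx:  # skip the centre pixel itself
                neighbour_sum += padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
    local_mean = neighbour_sum / 8.0  # 3x3 low-pass estimate without the centre
    outliers = np.abs(f - local_mean) > threshold
    out = f.copy()
    out[outliers] = local_mean[outliers]
    return np.rint(out).clip(0, 255).astype(np.uint8)
```

A fixed threshold will also flag genuine high-contrast single-pixel details, so a practical version would probably need to take the image content into account.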

Another issue we want to tackle in further work is the vulnerability to chosen- or known-plaintext attacks. One possible solution is to salt the obfuscation key with a randomly chosen nonce before obfuscation takes place. However, we then have to find ways to store the non-confidential nonce in the image, e.g. in the tile margins or by means of image watermarking.
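A minimal sketch of key salting with Python's standard library. Using HMAC-SHA256 as the key derivation function is an assumption and not a choice made in the text; storing the nonce in the image is left open here, as it is above.

```python
import hashlib
import hmac
import secrets


def new_nonce(size: int = 16) -> bytes:
    """Random, non-confidential per-image nonce."""
    return secrets.token_bytes(size)


def salted_key(k: bytes, nonce: bytes) -> bytes:
    """Per-image obfuscation key derived from the shared secret k and the nonce
    (HMAC-SHA256 is an assumed derivation, not the paper's method)."""
    return hmac.new(k, nonce, hashlib.sha256).digest()


k = b"shared secret k"
nonce = new_nonce()
per_image = salted_key(k, nonce)
seed = int.from_bytes(per_image[:8], "big")  # e.g. a seed for a pattern or permutation generator
```

Because each image gets a fresh nonce, two images obfuscated with the same k no longer share a pattern or permutation, which is what makes known-plaintext pairs from one image useless for another.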

REFERENCES

[1] J. Mitchell, “Making photo tagging easier,” December 2010. [Online]. Available: http://blog.facebook.com/blog.php?post=467145887130

[2] H. Geser and C. Stross, “Augmenting things, establishments and human beings ‘blended reality’ in a psycho-sociological perspective,” 2010.

[3] M. Hassinen and P. Mussalo, “Client controlled security for web applications,” in LCN. IEEE Computer Society, 2005, pp. 810–816.

[4] PrimeLife, “Scramble!” September 2010. [Online]. Available: http://www.primelife.eu/results/opensource/65-scramble

[5] Y. Zhou, K. Panetta, and S. Agaian, “Image encryption using discrete parametric cosine transform,” in Proceedings of the 43rd Asilomar Conference on Signals, Systems and Computers, ser. Asilomar’09. Piscataway, NJ, USA: IEEE Press, 2009, pp. 395–399.

[6] M. Podesser, H.-P. Schmidt, and A. Uhl, “Selective bitplane encryption for secure transmission of image data in mobile environments,” in 5th Nordic Signal Processing Symposium, 2002, pp. 10–37.

[7] A. Yekkala and C. E. V. Madhavan, “Bit plane encoding and encryption,” in Proceedings of the 2nd International Conference on Pattern Recognition and Machine Intelligence, ser. PReMI’07. Berlin, Heidelberg: Springer-Verlag, 2007, pp. 103–110.

[8] W. Li and N. Yu, “A robust chaos-based image encryption scheme,” in Proceedings of the 2009 IEEE International Conference on Multimedia and Expo, ser. ICME’09. Piscataway, NJ, USA: IEEE Press, 2009, pp. 1034–1037.

[9] M. Gschwandtner, A. Uhl, and P. Wild, “Transmission error and compression robustness of 2D chaotic map image encryption schemes,” EURASIP J. Inf. Secur., vol. 2007, pp. 21:1–21:13, January 2007.

[10] S. Li, C. Li, G. Chen, D. Zhang, and N. G. Bourbakis, “A general cryptanalysis of permutation-only multimedia encryption algorithms,” 2004.

[11] J. Leibowitz, J. T. Rosch, E. Ramirez, and J. Brill, “In the Matter of Facebook, Inc., a corporation - Complaint,” November 2011. [Online]. Available: http://www.ftc.gov/os/caselist/0923184/111129facebookcmpt.pdf

[12] The Wall Street Journal, “Facebook Flaw Exposes Its CEO,” December 2011. [Online]. Available: http://online.wsj.com/article/SB10001424052970204083204577082732651078156.html

[13] S. Katzenbeisser, H. Liu, and M. Steinebach, “Challenges and solutions in multimedia document authentication,” in Handbook of Research on Computational Forensics, Digital Crime, and Investigation, C.-T. Li, Ed. IGI Global, 2010, pp. 155–175.

[14] T. S. Cho, S. Avidan, and W. T. Freeman, “A probabilistic image jigsaw puzzle solver,” in CVPR. IEEE, 2010, pp. 183–190.