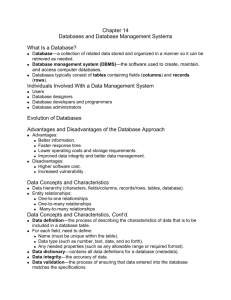

Managing Oilfield Data Management
