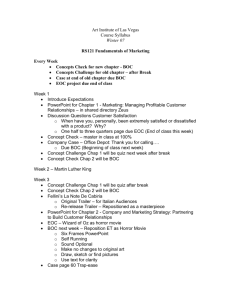

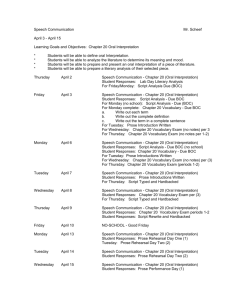

Review of ABC & BOC 1
