Taxonomy-Based Risk Identification

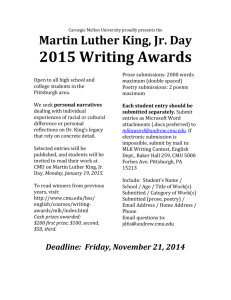
