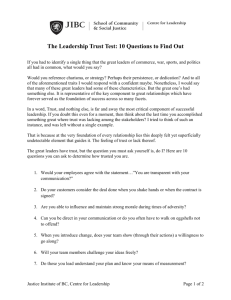

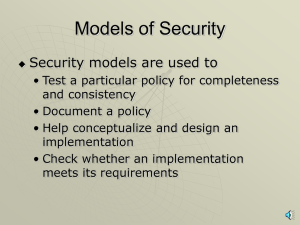

Practical Language-Based Security From The Ground Up
“Verify Everything, Every Time & Prove It Remotely”
Prof. Dr. Michael Franz
University of California, Irvine
Tokyo, Japan, June 2005

Today’s OSs: Highly Vulnerable
• the “Slammer” worm (January 2003)
  • consisted of just 376 bytes of code
  • infected nearly 90% of all vulnerable hosts worldwide in under ten minutes
  • the number of infected hosts doubled almost every eight seconds
  • attack exploited a buffer overflow vulnerability in Microsoft’s SQL Server database product
• astonishing facts:
  • this particular vulnerability had been made public six months prior to the attack, and a patch had been released even earlier
  • a number of Microsoft’s own servers were affected by the attack
  • “Slammer’s” spread was stopped not by human intervention but by the network outages it had caused itself

Today’s OSs: Rotten Foundations
• too large and too fluid to be audited properly
• “old” software is usually more reliable than “new” software
• but almost everything in a modern operating system is “new”
• device drivers, dynamic loading etc. thwart even static analysis techniques
• current approach to overcoming known vulnerabilities is “patching”, yet evidence shows that patching compliance is spotty
• unknown how many OS vulnerabilities are bona-fide programming errors and how many are actually “back doors” placed by agents of foreign nation states

Who Cares?
• from Microsoft’s (Cisco’s, Oracle’s, ...) perspective, “an error is an error is an error”
• they don’t care whether a foreign agent put it in or whether it is a genuine error (it is a liability/reputation issue)
• but some of Microsoft’s (Cisco’s, Oracle’s, ...) products have become essential facilities, so we all should care!
• in the event of an international conflict, an adversary might be able to remotely tamper with our infrastructure in unexpected ways

Compiler Research To The Rescue
“the results of mobile code research are much broader than expected”
“apply these ideas to [operating] systems safety in general, not just for mobile code”

Solution: Don’t Trust The OS!
[Figure: two layer stacks, listed top to bottom.
 traditional: Application, Safe Code Platform, Operating System, Hardware.
 foundational safety: Application, Operating System, Trusted Safe Code Foundation, Hardware.]
• invert the traditional relationship between compiler and operating system
• use recent results from mobile-code domain to obtain safety everywhere

Foundational Safety
[Figure: layer stack, as listed on the slide: Application Code; Virtual Machine Abstraction Layer; Virtual Machine / Dynamic Translator; Operating System; Typed Hardware Abstraction Layer; Core Safe Code Verifier / Dynamic Translator; Hardware. Annotation: Compilers!]

Sorry, Java Won’t Cut It...
• Java just-in-time compilation has enjoyed spectacular success, but won’t support an OS on top...
• worse, need to verify everything in our model, which would pose a problem with Java
• Java verification time essentially depends on the longest path of control flow edges along which type information has to flow, in the forward direction of the analysis
• could use the verification cost itself as a denial of service attack

Java Bytecode Verification
• the JVM uses an iterative data-flow algorithm to check each method for consistent typing
• the average case performance is good (1-5 iterations), but the worst case complexity is quadratic
  • some poor implementations are even O(n^4)

Pathologic Cases
• Java verification time essentially depends on the longest path of control flow edges along which type information has to flow, in the forward direction of the analysis
• all JVM verifiers we have seen simply process each method from beginning to end, and then repeat if anything has changed
  • every backward jump requires an additional iteration!
• can use this knowledge to construct a denial-of-service exploit
  • a JVM program that will verify eventually, but that will take an inordinate time to do so

[Table: the pathologic method, with one column per verifier iteration (1 to 5). The instruction listing is reproduced below; the per-iteration cells (values I, A and !) did not survive extraction.]
   1      iconst_0; istore_1
   3      goto L0
   4  L3: return
   5  L2: iconst_0; ifeq L3
   7      aconst_null; astore_1
   9      goto L2
  10  L1: iconst_0; ifeq L2
  12      aconst_null; astore_1
  14      goto L1
  15  L0: iconst_0; ifeq L1
  17      aconst_null; astore_1
  19      goto L0

Verification Time
• verification time also depends on the specific implementation of the verifier
• Sun’s verifier is very slow when dealing with subroutine invocations
• this is a constant overhead, but can be exploited to make the DoS attack worse
• on the next slide, we show the time to verify a single method with worst-case dataflow on a 2.53GHz P4 under Sun HotSpot VM 1.41

[Figure: time to verify method (s, 0 to 35) versus method size (bytes, 0 to 60000). Series: worst case data flow; worst case data flow with subroutine calls; ax^2+bx. Annotation: N=3000.]

Obfuscating the Exploit
• to make matters worse, pathologic code as shown here can be compressed very well
• a 700-byte JAR file (one classfile with a single pathologic method) takes 5s to verify on a high-end P4 workstation (2.5 GHz)
• a 3500-byte JAR file (one classfile with many identical pathologic methods) takes 15 minutes to verify
[Figure: time to verify (s, 0 to 5) versus jar file size (bytes, 550 to 750).]
demo: http://nil.ics.uci.edu/exploit

Note: .NET CLR Does Better...
• since the stack is empty upon jumps, and there are no subroutines, the problem is less severe in .NET
• however, other complexity-based denial-of-service attacks may exist
  • e.g., knowing the implementation of the register allocator, I might be able to send it a particularly nasty graph-coloring puzzle...
  • we have a little research project going on, trying to find some more

Attacking the Compilation Pipeline
• why stop at the verifier?
• whenever the worst-case performance of an algorithm is known, a particular hard-to-solve puzzle can be constructed
  • verification
  • register allocation (graph coloring, known to be NP-complete)
  • instruction scheduling (optimal scheduling is NP-complete)
  • etc...

A New Security Paradigm
• currently, just-in-time compiler pipelines are optimized for the average case
• in mobile code environments, we should optimize for the worst case
• this applies to every step along the code pipeline
  • apparently, nobody does it (yet)

Open-Source Development
• this is the one situation where open source is actually bad
• there may be some truth to the claim that “a thousand eyes spot a programming error faster than two”, but this class of attacks is based on the algorithmic complexity of the underlying algorithm and not on any implementation error
• errors are easily fixed but algorithms are not easily replaced
• for this type of attack, security by obscurity may be the best defense!

Foundational Safety
[Figure: the layer stack from the earlier Foundational Safety slide, shown again.]

Foundational Safety
• need a small and efficient safe code foundation
  • small enough to be verified manually
  • efficient enough to support an OS running on top (!)
• current options all fall short
  • virtual machines / interpreters: too slow
  • virtual machines / just-in-time compilers: too big when good
  • proof-carrying code: proofs are huge and code is non-portable
• solution: new hybrid approach using a software-defined architecture for PCC, optimized simultaneously for
  • minimizing the complexity of proofs
  • fast and good dynamic translation into the actual machine code
  • small size of the trusted code base

Research Question
• is there a middle ground such that
  • the architecture is concrete enough that most optimizations are possible
  • while semantics are high-level enough so that the complexity of proofs is reasonable

Hybrid Approach: PCC-VM
[Figure: three compilation pipelines side by side.
 PCC: HLL, static compilation, proof generation, machine code.
 our work: HLL, proof generation, VM, dynamic compilation, machine code.
 JVM: HLL, JVM, dynamic compilation, machine code.]

Foundational Safety
[Figure: the layer stack from the earlier Foundational Safety slide, shown again.]

Trusted Computing On Steroids
• trusted computing with “deeply embedded” virtual machines is much more powerful than current approaches
• trust = integrity + authenticity
  • integrity: the program/system has not been changed or tampered with, is in a known state
  • authenticity: the program/system has presented credentials that can be verified
  • trusted computing: add a hardware-based crypto component (TPM) as a root of trust

Integrity: Secure Boot Process
• goal: make sure that system is executing only trusted software
• build a chain of trust
• at every level:
  • compute a binary hash for the executable of the next level to be launched
  • compare with known signatures of valid executables
  • transfer control only if match
(figure by Arbaugh et al)

Authenticity: Remote Attestation
• goal: a remote party wants to make sure I am running “approved” software before it will talk to me
  • e.g., media company wants to make sure that my video player doesn’t capture information streamed to me
  • it asks me for my “integrity metrics”
  • the TPM on my computer signs my integrity metrics, which I send to the remote party
  • the remote party verifies the digital signature
  • in current trusted computing solutions, the integrity metrics are signed hashes of the binary executable images

Problems With Remote Attestation
• says nothing about program behavior
  • certifies that a particular binary is running
  • an attested program may still contain bugs
• static, inexpressive, and inflexible
• versioning problem: mutually independent patches and upgrades (BIOS version X, OS version Y, Driver Z, ...)
  • upgrades and patches may lead to an exponential blowup of the version space
  • creates problems at both ends of the network
    • servers need to manage intractable lists of approved software
    • clients may need to hold off on upgrades/patches
• heterogeneity of devices/platforms
  • specific binaries are certified (good for Microsoft...)
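
The secure boot chain and hash-based attestation described on the preceding slides can be sketched in a few lines. This is only an illustration under stated assumptions: the function names (`measure`, `secure_boot`, `sign_metrics`, `remote_party_accepts`) are hypothetical, SHA-256 is an arbitrary choice of hash, and an HMAC with a shared key stands in for the TPM's digital signature, which a real TPM would produce with an asymmetric key.

```python
import hashlib
import hmac


def measure(image: bytes) -> str:
    """Return the SHA-256 digest of an executable image as a hex string."""
    return hashlib.sha256(image).hexdigest()


def secure_boot(stages, approved):
    """Walk the boot chain and launch a stage only if its hash is approved.

    stages   -- list of (name, image_bytes) in launch order
    approved -- dict mapping stage name to a set of approved digests
    Returns the integrity metrics (digests) of the stages actually launched.
    """
    metrics = []
    for name, image in stages:
        digest = measure(image)
        if digest not in approved.get(name, set()):
            break  # chain of trust is broken: do not transfer control
        metrics.append(digest)
    return metrics


def sign_metrics(metrics, tpm_key: bytes) -> str:
    """Stand-in for the TPM signing the integrity metrics (HMAC, not a real TPM call)."""
    return hmac.new(tpm_key, "".join(metrics).encode(), hashlib.sha256).hexdigest()


def remote_party_accepts(metrics, signature, tpm_key, approved_configs):
    """Challenger side: verify the signature, then look up the exact configuration."""
    expected = sign_metrics(metrics, tpm_key)
    return hmac.compare_digest(expected, signature) and tuple(metrics) in approved_configs
```

Note that `approved_configs` has to enumerate every accepted combination of stage hashes. With independently patched BIOS, OS, and driver versions, that set grows multiplicatively, which is the versioning problem named on the slide.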

Enter: VM-Based Attestation
• VMs that execute high-level code have access to metainformation (class hierarchy, ...) and to the internals of program state
• code runs under complete control of the virtual machine
• can build a remote attestation mechanism that attests properties of individual “objects” with the richness of a higher programming language
• => “semantic” remote attestation
• reconcile security and trust

Framework For Semantic Remote Attestation
[Figure: a “Prover” / Client and a “Challenger” / Server. Labeled components: Enforcement Mechanism, TrustedVM, Attestation Service, Attestation Requester, client application, server application.]

Semantic RA: Examples
• peer-to-peer networks
  • depend on clients respecting the rules of the protocol
  • with SRA, can explicitly check requirements
• distributed computation
  • want to test a platform’s capabilities before handing over a computation bundle
  • evaluate quality of service metrics for application service providers
• agent-based computing
  • e.g., an “electronic marketplace” can attest that it will not compromise an autonomous shopping agent that visits it (otherwise exposed to reverse engineering, replay attacks, etc.)
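
To make the contrast with hash-based attestation concrete, here is a minimal sketch of a VM answering a property challenge instead of reporting a binary hash. Everything in it is hypothetical and not taken from the slides: the `TrustedVM` class shape, the two challenge kinds (`subclass_of`, `lacks_capability`), the capability strings, and the use of an HMAC as a stand-in for a signature rooted in trusted hardware. It also assumes the class hierarchy is acyclic.

```python
import hashlib
import hmac
import json


class TrustedVM:
    """Hypothetical VM that keeps metadata about the classes it has loaded."""

    def __init__(self, key: bytes):
        self._key = key
        self.classes = {}  # class name -> {"bases": [...], "capabilities": [...]}

    def load_class(self, name, bases=(), capabilities=()):
        self.classes[name] = {"bases": list(bases), "capabilities": sorted(capabilities)}

    def is_subclass(self, name, ancestor):
        """True if `name` is `ancestor` or inherits from it (acyclic hierarchy assumed)."""
        if name == ancestor:
            return True
        bases = self.classes.get(name, {}).get("bases", [])
        return any(self.is_subclass(base, ancestor) for base in bases)

    def attest(self, challenge: dict) -> dict:
        """Evaluate a property challenge against VM state and sign the answer."""
        if challenge["kind"] == "subclass_of":
            result = self.is_subclass(challenge["cls"], challenge["ancestor"])
        elif challenge["kind"] == "lacks_capability":
            result = all(challenge["capability"] not in meta["capabilities"]
                         for meta in self.classes.values())
        else:
            raise ValueError("unknown challenge kind")
        body = json.dumps({"challenge": challenge, "result": result}, sort_keys=True)
        signature = hmac.new(self._key, body.encode(), hashlib.sha256).hexdigest()
        return {"body": body, "signature": signature}


def check_answer(answer: dict, key: bytes) -> bool:
    """Challenger side: accept only a correctly signed answer whose result is True."""
    expected = hmac.new(key, answer["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, answer["signature"]):
        return False
    return json.loads(answer["body"])["result"]
```

In the media-player example from the earlier slide, a challenger could send `{"kind": "lacks_capability", "capability": "capture"}` and accept any player implementation for which the signed answer is true, rather than one specific binary.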

Advantages
• certify program behavior, not a specific binary version
• allow multiple implementations, as long as they satisfy required criteria
• dynamic & flexible: can attest wide range of runtime properties
• trust relationships between nodes are made explicit
  • these are actually checked and enforced
• finer-grained trust
  • traditional attestation is all-or-nothing
  • semantic RA permits dynamically adjusting trust relationships

Ongoing Research @ UCI
• working on ways to specify remote attestation challenges at a programming-language level
• techniques to reduce the parts of a VM that actually need to be trusted (minimize the trusted computing base)
• working on ways to attest information flow, both statically and dynamically
• have an experimental virtual machine that tracks mandatory access control (MAC) tags inside the virtual machine
  • can enforce information-flow security at the “data item level” even if the program manipulating the data is defective or malicious
  • requires aggressive dynamic compiler optimization to make performance overhead acceptable

Closing Comments & Viewpoint
• existing “trusted computing” projects are mostly not much more than yet another stopgap measure for security
  • code signing, digital rights management are the main focus
  • but tamper-proof hardware is an important first step
• real breakthrough will only come with true verification of the code itself
  • but I am confident that this will happen sooner than many expect
• virtual-machine based solutions will enable important additional capabilities, such as “semantic” remote attestation

Summary & Outlook
• a hot topic: many “trusted systems” projects
  • Trusted BSD
  • Trusted Linux (Debian)
  • Security-Enhanced Linux (the-agency-that-is-not-to-be-named)
• confluence of factors
  • a real threat
  • recent research results from mobile-code community
  • hardware technology to sustain dynamic translation
  • politics: nobody but Microsoft has anything to lose
• hence, “trusted foundations” will be the next big thing
  • look forward to the biggest platform war ever, defining the next 25 years of computing

Thank You
contact information:
mailto: franz@uci.edu
googlefor: Michael+Franz

Team Members
• Matthew Beers, BS in Information and Computer Science, 1999, University of California, Irvine
• Deepak Chandra, BS in Computer Science, 2001, Birla Institute of Technology, India
• Andreas Gal, BS cum laude in Computer Information Systems, 2000, University of Wisconsin & Diplom-Informatiker summa cum laude, 2001, Universität Magdeburg, Germany
• Vivek Haldar, B.Tech in Computer Science, 2000, Indian Institute of Technology, Delhi
• Jeffery Von Ronne, Honors BS in Computer Science summa cum laude, Oregon State University, 1999 & MS in Computer Science, 2002, University of California, Irvine
• Christian Stork, Diplom-Mathematiker summa cum laude, 1998, Heinrich-Heine-Universität Düsseldorf, Germany
• Vasanth Venkatachalam, BS with High Honors in Mathematics and Philosophy, 1998, Wesleyan University & MS in Information and Computer Science, 2002, University of California, Irvine
• Efe Yardimci, BS in Electrical and Electronics Engineering, 1998, Middle East Technical University, Turkey & MS in Computer Engineering, 2001, Northeastern University, Boston
• Lei Wang, BS in Electrical Engineering, 1994, North China Electrical Power University & MS in Electrical Engineering, 1997, Tsinghua University, China
• Ning Wang, BA in Physics, Shandong University, China, 1995 & MS in Computer Engineering, 2000, University of California, Irvine
• Prof. Michael Franz, dipl. Informatikingenieur, 1989 & Dr. sc. techn. (advisor: Niklaus Wirth), 1994, ETH Zürich, Switzerland