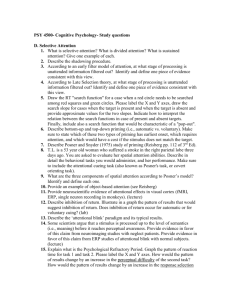

Attention - Psychology
