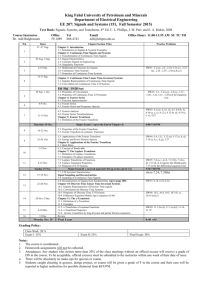

Class

advertisement

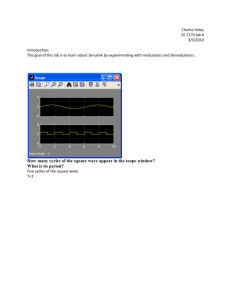

Contents

1 Introduction to Signals and Systems

1.1 Introduction . . . . . . . . . . . . . . . . . . .

1.2 Continuous and Discrete Time Signals . . . .

1.3 Signal Properties . . . . . . . . . . . . . . . .

1.3.1 Signal Energy and Power . . . . . . .

1.3.2 Periodic Signals . . . . . . . . . . . . .

1.3.3 Even and Odd Symmetry Signals . . .

1.3.4 Elementary Signal Transformations . .

1.4 Some Important Signals . . . . . . . . . . . .

1.4.1 The Delta Function . . . . . . . . . .

1.4.2 The Unit-Step Function . . . . . . . .

1.4.3 Piecewise Constant Functions . . . . .

1.4.4 Exponential Signals . . . . . . . . . .

1.5 Continuous-Time and Discrete-Time Systems

1.5.1 System Properties . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

2 Linear, Time-Invariant (LTI) Systems

2.1 The Impulse Response and the Convolution Integral . . . . . . . . . .

2.1.1 The Continuous-Time Case . . . . . . . . . . . . . . . . . . . .

2.1.2 The Discrete-Time Case . . . . . . . . . . . . . . . . . . . . . .

2.2 The Laplace Transform and its Use to Study LTI Systems . . . . . . .

2.2.1 Properties of the Laplace Transform . . . . . . . . . . . . . . .

2.2.2 The Laplace Transform of Some Useful Functions . . . . . . . .

2.2.3 Inversion by Partial Fraction Expansion . . . . . . . . . . . . .

2.2.4 Solution of Differential Equations Using the Laplace Transform

2.2.5 Interconnection of Linear Systems . . . . . . . . . . . . . . . .

2.2.6 The Sinusoidal Steady-State Response of a LTI System . . . .

3 The Fourier Series

3.1 Signal Space . . . . . . . . . . . . . . . . . . . . .

3.1.1 Signal Energy . . . . . . . . . . . . . . . .

3.1.2 Signal Orthogonality . . . . . . . . . . . .

3.1.3 The Euclidean Distance . . . . . . . . . .

3.2 The Complex Fourier Series . . . . . . . . . . . .

3.2.1 Complex Fourier Series Examples . . . . .

3.2.2 An Alternative Form of the Fourier Series

3.2.3 Average Power Of Periodic Signals . . . .

1

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

5

5

6

7

7

9

10

11

12

12

14

16

16

17

18

.

.

.

.

.

.

.

.

.

.

26

26

26

29

30

33

37

38

41

41

44

.

.

.

.

.

.

.

.

46

46

47

47

47

48

50

52

57

2

CONTENTS

3.3

4 The

4.1

4.2

4.3

4.4

3.2.4 Parseval’s Theorem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

3.2.5 The Root-Mean-Square (RMS) Value of a Periodic Signal . . . . . . . . . . . 60

Steady-State Response of a LTI System to Periodic Inputs . . . . . . . . . . . . . . . 61

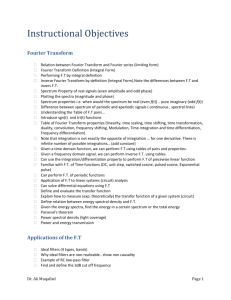

Fourier Transform

Properties of the Fourier Transform . . . . . . . . .

Fourier Transforms of Selected Functions . . . . . . .

Application of Fourier Transform to Communication

4.3.1 Baseband vs Passband Signals . . . . . . . .

4.3.2 Amplitude Modulation . . . . . . . . . . . . .

Sampling and the Sampling Theorem . . . . . . . . .

4.4.1 Ideal (Impulse) Sampling . . . . . . . . . . .

4.4.2 Natural Sampling . . . . . . . . . . . . . . . .

4.4.3 Zero-Order Hold Sampling . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

65

66

73

75

76

76

78

78

81

82

List of Figures

1.1 Examples of audio signals.
1.2 The Dow Jones daily average starting January 5, 2009.
1.3 The Dow Jones Industrial average over a 50 day period starting on January 5, 2009.
1.4 Example finite energy signal.
1.5 A continuous-time periodic signal.
1.6 A discrete-time periodic signal.
1.7 Example of time-shift: original signal x(t), delayed signal x(t − 3) and advanced signal x(t + 3).
1.8 The continuous-time and discrete-time delta functions.
1.9 A unit-area rectangular pulse.
1.10 Illustration for proof of the sifting property of ∆(t).
1.11 Illustration of f(t)δ(t − a) = f(a)δ(t − a).
1.12 The continuous and discrete-time unit-step functions.
1.13 A piecewise constant function and its derivative.
1.14 A system with one input and one output.
1.15 A system with one input and one output.
1.16 Illustration of the linearity property.
1.17 Illustration of the commutation property of linear systems.
1.18 Illustration of the time-invariance property.

2.1 Illustration of convolution for Example 1.
2.2 Illustration of convolution for Example 2.
2.3 Illustration of discrete convolution for Example 1.
2.4 Illustration of discrete convolution for Example 2.
2.5 Illustration of ROC for $e^{j\omega_0 t}$.
2.6 A parallel interconnection of two systems.
2.7 A series interconnection of two systems.
2.8 A feedback interconnection of two systems.
2.9 Illustration of equalization.
2.10 Circuit for Example 4.

3.1 Illustration of signal-space concepts.
3.2 Rectangular pulse periodic signal with period T.
3.3 Amplitude spectrum of the periodic signal in Figure 3.2.
3.4 Rectangular pulse periodic signal with period T.
3.5 Spectrum of periodic signal in Figure 3.4.
3.6 Half-wave rectified signal with period T.
3.7 Spectrum of periodic signal in Figure 3.6.
3.8 Half-wave rectified signal with period T.
3.9 Spectrum of periodic signal in Figure 3.6.
3.10 Fourier series using 10 (red) and 50 terms (green).
3.11 Fourier series using 5 (red) and 10 terms (green).
3.12 Simple low-pass filter for extracting the dc component.
3.13 Rectangular pulse periodic signal with period T = 4.
3.14 The magnitude of the Fourier coefficients of x(t) and y(t).

4.1 Rectangular pulse.
4.2 The Fourier transform of a rectangular pulse as a function of normalized frequency fT.
4.3 The frequency response of an ideal low-pass filter.
4.4 (a) The Fourier transform of a baseband signal x(t); (b) the Fourier transform of x(t) modulated by a pure sinusoid; (c) the upper sideband; (d) the lower sideband.
4.5 The response of a LTI system to an input in the time-domain and frequency domains.
4.6 Triangular and rectangular pulses.
4.7 The sign function.
4.8 The Fourier transform of a pure cosine carrier.
4.9 Amplitude modulation (DSB-SC).
4.10 Demodulation of DSB-SC.
4.11 Envelope demodulation of AM radio signals.
4.12 The sampling function h(t).
4.13 Illustration of the sampled signal $x_s(t)$.
4.14 Illustration of sampling above and below the Nyquist rate.
4.15 The low-pass filter G(f) and its impulse response g(t).
4.16 The pulse approximation to an impulse.
4.17 The sample-and-hold signal.
4.18 Reconstructing the original signal from zero-order hold samples.
4.19 Generating 1/P(f).

Chapter 1

Introduction to Signals and Systems

1.1 Introduction

In general, a signal is any function of one or more independent variables that describes some physical behavior. In the one-variable case, the independent variable is often time. For example, the speech signal at the output of a microphone, viewed as a function of time, is an audio signal. Two such signals are shown in Figure 1.1.

Figure 1.1: Examples of audio signals.

An example of a signal which is a function of two independent variables is an image, which

presents light intensity and possibly color as a function of two spatial variables, say x and y.

A system in general is any device or entity that processes signals (inputs) and produces other signals (outputs) in response to them. There are numerous examples of systems that we design to manipulate signals in order to produce some desirable outcome. For example, an audio amplifier receives as input a weak audio signal produced, say, by a microphone, amplifies it and possibly shapes its frequency content (more on this later), and produces a higher-power signal that can drive a speaker. Another system is the stock market, where a large number of variables (inputs) affect the daily value of the Dow Jones Industrial Average index (and indeed the value of all stocks). The value of the index as a function of time is itself a signal, in this case an output of the underlying financial system. The Dow Jones Industrial Average closing values over a 100-day period starting on January 5, 2009 are shown in Figure 1.2.

Figure 1.2: The Dow Jones daily average starting January 5, 2009 (closing value versus time in days).

1.2 Continuous and Discrete Time Signals

We encounter a variety of signals and systems in everyday life. In particular, signals can be continuous-time or discrete-time. Continuous-time signals, as the name implies, take values on a continuum in time, whereas discrete-time signals are defined only over a discrete set of (normally equally spaced) times. Examples of continuous-time signals are the audio signals at the output of a condenser (electrostatic) microphone, such as those shown in Figure 1.1. An example of a discrete-time signal is the Dow Jones daily average shown in Figure 1.2, which takes values only at discrete times, i.e. at the end of each day. In Figure 1.2 the discrete-time signal is plotted as a continuous-time signal by connecting the points with straight lines; in general, however, discrete-time signals are plotted as shown in Figure 1.3, which plots the Dow Jones daily average over a 50-day period.

Figure 1.3: The Dow Jones Industrial average over a 50 day period starting on January 5, 2009 (horizontal axis: time in days).

We will use the continuous variable t to denote time when dealing with continuous-time signals and the discrete variable n when dealing with discrete-time signals. For example, we will write a continuous-time signal as x(t) and a discrete-time one as x[n].

Note that both the continuous-time and discrete-time signals described above have amplitudes that take values on a continuum (in other words, the real line). Although we will focus only on these two classes of signals in this course, there are two more signal classes that are very important, and indeed necessary, when signals are processed digitally (by DSPs or computers). Computers have a finite word length (although quite large currently), which can describe only a finite number of amplitudes. Therefore, for signals to be processed by digital signal processors, one must forgo the infinity of possible amplitudes and consider only a finite set of amplitudes, i.e. discretize the amplitude axis as well. The process of discretizing the amplitude axis is referred to as quantization. The process of discretizing the time axis, when the original signal is a continuous-time one, is referred to as sampling. We will consider sampling later in the course and show that, if it is done appropriately (the sampling theorem), there need not be any information loss; in other words, one can in this case reconstruct the original continuous-time signal from its samples. In contrast, quantization always entails information loss (infinitely many amplitudes are mapped into one), and all we can do is limit it. The two additional classes of signals are thus derived from the continuous-time and discrete-time signals described above by discretizing the amplitude axis.
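The two discretization steps can be illustrated with a small Python sketch. It is only an illustration under stated assumptions: the 1 Hz sinusoid, the sampling interval of 0.125 s, and the uniform quantizer with step 0.25 are hypothetical choices and do not come from the notes.

```python
import math

def sample(x, Ts, n_samples):
    """Sample the continuous-time signal x(t) at t = n*Ts, n = 0..n_samples-1."""
    return [x(n * Ts) for n in range(n_samples)]

def quantize(value, step):
    """Uniform quantizer (an assumed design): nearest multiple of `step`."""
    return step * round(value / step)

# Hypothetical test signal: a 1 Hz sinusoid sampled every 0.125 s.
x = lambda t: math.sin(2 * math.pi * t)
samples = sample(x, 0.125, 8)                     # discretize the time axis
quantized = [quantize(v, 0.25) for v in samples]  # discretize the amplitude axis

# Quantization error: never more than half a step, but generally not zero.
max_error = max(abs(q - v) for q, v in zip(quantized, samples))
print(quantized, max_error)
```

The samples keep their exact amplitudes, whereas the quantized values do not, which is the information loss referred to above.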

1.3 Signal Properties

In practice, signals can be very diverse in nature and shape, but they are often characterized by some "bulk" properties that give signal users information without the need for a complete description of the signal.

1.3.1 Signal Energy and Power

Consider a circuit in which the voltage across a resistor of resistance $R$ is $v(t)$ and the current through it is $i(t)$. Then the instantaneous power delivered to the resistor is

$$p(t) = i(t)v(t) = \frac{v^2(t)}{R} = i^2(t)R.$$

If the current $i(t)$ flows through the resistor for $(t_2 - t_1)$ seconds, from some time $t_1$ to another time $t_2$, then the energy expended is:

$$E = \int_{t_1}^{t_2} p(t)\,dt = \frac{1}{R}\int_{t_1}^{t_2} v^2(t)\,dt = R\int_{t_1}^{t_2} i^2(t)\,dt.$$

We also define the average power dissipated in the resistor over the interval of $T = t_2 - t_1$ seconds by the time-average:

$$P = \frac{E}{t_2 - t_1} = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} R\,i^2(t)\,dt = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} \frac{1}{R}\,v^2(t)\,dt.$$

By analogy with the above definition of energy, but dropping the dependence on the resistance $R$, we define the energy of any signal $x(t)$ (in general complex, and not just a current or a voltage) over the interval from $t_1$ to $t_2$ to be:

$$E = \int_{t_1}^{t_2} |x(t)|^2\,dt,$$

where $|x(t)|$ is the magnitude of the (possibly) complex signal $x(t)$. Similarly, the average power of any signal is defined by

$$P = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} |x(t)|^2\,dt.$$

In a similar way, the energy and average power of a discrete-time signal $x[n]$ are defined as

$$E = \sum_{n=n_1}^{n_2} |x[n]|^2,$$

$$P = \frac{1}{n_2 - n_1 + 1}\sum_{n=n_1}^{n_2} |x[n]|^2.$$
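The discrete-time definitions translate directly into a few lines of Python. The sketch below is illustrative only; the sample values are made up, and the list is assumed to hold x[n] for n from n1 to n2, so its length is n2 − n1 + 1.

```python
def energy(x):
    """Energy of a finite sequence: sum of |x[n]|^2 over the given samples."""
    return sum(abs(v) ** 2 for v in x)

def average_power(x):
    """Average power: energy divided by the number of samples, n2 - n1 + 1."""
    return energy(x) / len(x)

# Illustrative (made-up) samples x[n1], ..., x[n2]; one of them is complex.
x = [1, -2, 3 + 4j, 0]
E = energy(x)          # 1 + 4 + 25 + 0
P = average_power(x)   # E / 4
print(E, P)
```

Using `abs` rather than squaring directly keeps the definition valid for complex samples, since the definition involves the magnitude |x[n]|.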

We are often interested in the energy and power of signals defined over all time, i.e. for $-\infty < t < \infty$ in the continuous-time case and for $-\infty < n < \infty$ in the discrete-time case. Thus, for continuous-time signals we are interested in the limits:

$$E_\infty = \lim_{T\to\infty}\int_{-T}^{T} |x(t)|^2\,dt,$$

$$P_\infty = \lim_{T\to\infty}\frac{1}{2T}\int_{-T}^{T} |x(t)|^2\,dt.$$

Similarly, for discrete-time signals, we have:

$$E_\infty = \lim_{N\to\infty}\sum_{n=-N}^{N} |x[n]|^2,$$

$$P_\infty = \lim_{N\to\infty}\frac{1}{2N+1}\sum_{n=-N}^{N} |x[n]|^2.$$

We distinguish classes of signals according to whether their total energy and total average power are finite or infinite. This binary classification would normally give 4 classes of signals, i.e.:

1. Signals with finite energy and finite power;

2. Signals with finite energy and infinite power;

3. Signals with infinite energy and finite power; and

4. Signals with infinite energy and infinite power.

However, the second of the four classes above, i.e. finite energy and infinite power, cannot exist, since a signal with finite energy must necessarily have finite (in fact zero) average power. This is easily shown. Consider a signal with finite energy $A$, i.e.,

$$E_\infty = \lim_{T\to\infty}\int_{-T}^{T} |x(t)|^2\,dt = \int_{-\infty}^{\infty} |x(t)|^2\,dt = A.$$

Since the integrand cannot be negative, the integral cannot decrease as we enlarge the region of integration, and so for every $T$ we can write:

$$\int_{-T}^{T} |x(t)|^2\,dt \le A.$$

Thus,

$$P_\infty = \lim_{T\to\infty}\frac{1}{2T}\int_{-T}^{T} |x(t)|^2\,dt \le \lim_{T\to\infty}\frac{A}{2T} = 0.$$

Since $P_\infty$ cannot be negative, it must equal zero.

Figure 1.4: Example finite energy signal (a rectangular pulse x(t); the plot marks amplitude 1 and time 1).

For example, consider the signal x(t) in the form of the rectangular pulse shown in Figure 1.4. The energy in this signal is clearly $E_\infty = 1$, so this is a finite-energy signal. Its total average power is thus zero. In general, every finite-energy signal has zero, and hence finite, average power.

An example of a signal that has infinite energy but finite power is x(t) = 1. Clearly,

$$E_\infty = \int_{-\infty}^{\infty} 1^2\,dt = \infty.$$

However,

$$P_\infty = \lim_{T\to\infty}\frac{1}{2T}\int_{-T}^{T} 1^2\,dt = \lim_{T\to\infty}\frac{2T}{2T} = 1.$$

An example of a signal with infinite energy and infinite power is x(t) = t. We have

$$E_\infty = \lim_{T\to\infty}\int_{-T}^{T} t^2\,dt = \lim_{T\to\infty}\frac{2T^3}{3} = \infty,$$

$$P_\infty = \lim_{T\to\infty}\frac{1}{2T}\int_{-T}^{T} t^2\,dt = \lim_{T\to\infty}\frac{2T^3}{6T} = \infty.$$

Similar examples can be found in the discrete-time case.
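The three cases can be explored numerically by evaluating the energy and average power over a window [−T, T] for growing T. The sketch below uses a simple midpoint rule. The pulse is assumed to occupy 0 ≤ t ≤ 1 with unit height (a guess consistent with the unit energy stated for Figure 1.4), and the step count is an arbitrary choice.

```python
def integrate(f, a, b, steps=20000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (k + 0.5) * h) for k in range(steps))

def energy_and_power(x, T):
    """Energy and average power of x(t) over the window [-T, T]."""
    E = integrate(lambda t: abs(x(t)) ** 2, -T, T)
    return E, E / (2 * T)

pulse = lambda t: 1.0 if 0 <= t <= 1 else 0.0   # assumed unit pulse on [0, 1]
constant = lambda t: 1.0                        # x(t) = 1
ramp = lambda t: t                              # x(t) = t

for T in (10, 100, 1000):
    print(T, energy_and_power(pulse, T),
          energy_and_power(constant, T), energy_and_power(ramp, T))
```

As T grows, the pulse keeps energy near 1 while its power shrinks toward zero, the constant keeps power near 1 while its energy grows, and both quantities grow without bound for the ramp.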

1.3.2 Periodic Signals

A continuous-time signal is periodic with period $T$ if for all values of $t$

$$x(t) = x(t + T).$$

In the discrete-time case, a periodic signal with period $N$ is defined similarly as one for which, for all $n$,

$$x[n] = x[n + N].$$

Perhaps the most prevalent class of periodic signals is the class of sinusoidal signals. For example, $x(t) = \sin(2\pi t/T)$ is clearly periodic with period $T$. To see this, we have:

$$x(t + T) = \sin\left(2\pi\frac{t+T}{T}\right) = \sin\left(2\pi\frac{t}{T} + 2\pi\right) = \sin\left(2\pi\frac{t}{T}\right) = x(t).$$

Another example of a periodic signal is shown in Figure 1.5. An example of a discrete-time periodic signal is given in Figure 1.6.

Periodic signals are a special class of signals and we will deal with them in more detail later.

Figure 1.5: A continuous-time periodic signal.

Figure 1.6: A discrete-time periodic signal.

1.3.3 Even and Odd Symmetry Signals

A signal x(t) is said to have even symmetry when

$$x(t) = x(-t)$$

and to have odd symmetry if

$$x(t) = -x(-t).$$

Examples of signals with even symmetry are $x(t) = e^{-|t|}$, $x(t) = \cos(t)$ and $x(t) = t^2$. Examples of signals with odd symmetry are $x(t) = t$ and $x(t) = \sin(t)$.

Similar definitions apply to discrete-time signals. In other words, a discrete-time signal has even symmetry if

$$x[n] = x[-n]$$

and odd symmetry if

$$x[n] = -x[-n].$$

Any signal can be decomposed into the sum of an even signal and an odd signal. To show this, consider an arbitrary signal x(t) that need not have any symmetry. We can write:

$$x(t) = x(t) + \frac{x(-t) - x(-t)}{2} = \underbrace{\frac{x(t) + x(-t)}{2}}_{x_e(t):\ \text{Even}} + \underbrace{\frac{x(t) - x(-t)}{2}}_{x_o(t):\ \text{Odd}}$$

To show that $x_e(t)$ is even, we have:

$$x_e(-t) = \frac{x(-t) + x(t)}{2} = x_e(t).$$

To show that $x_o(t)$ is odd, we have:

$$x_o(-t) = \frac{x(-t) - x(t)}{2} = -\frac{x(t) - x(-t)}{2} = -x_o(t).$$
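The decomposition is easy to check numerically. The following Python sketch builds the even and odd parts of a signal as functions; the choice of $e^{t}$ as the test signal is only an illustration (its even and odd parts happen to be cosh and sinh).

```python
import math

def even_part(x):
    """Return x_e(t) = [x(t) + x(-t)] / 2."""
    return lambda t: (x(t) + x(-t)) / 2

def odd_part(x):
    """Return x_o(t) = [x(t) - x(-t)] / 2."""
    return lambda t: (x(t) - x(-t)) / 2

x = lambda t: math.exp(t)      # illustrative signal with no symmetry
xe, xo = even_part(x), odd_part(x)

for t in (-2.0, -0.5, 0.0, 1.3):
    # Columns: t, x_e(t), x_e(-t), x_o(t), x_o(-t), x_e(t) + x_o(t), x(t)
    print(t, xe(t), xe(-t), xo(t), xo(-t), xe(t) + xo(t), x(t))
```

In each printed row the second and third columns agree, the fourth and fifth differ only in sign, and the last two agree, as the derivation above predicts.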

1.3.4 Elementary Signal Transformations

We often need to transform signals in ways that better allow us to extract information from them or that make them more useful in implementing a given task. Signal processing is a huge field of research and spans numerous everyday applications. Indeed, as humans we do signal processing continuously by interpreting audio and optical (visual) signals and taking action in response to them.

In this section we will deal only with the most basic operations, which will also be useful in our later development of the material. The transformations we consider involve only the independent variable, which in many cases is time. We will illustrate the concepts using mainly continuous-time signals, but the principles are exactly the same for discrete-time signals.

Time Shift

Let x(t) be a continuous-time signal. Then $x(t - t_0)$ is a time-shifted version of x(t) that is identical in shape to x(t) but is translated in time by $t_0$ seconds (delayed by $t_0$ seconds if $t_0 > 0$, or advanced by $|t_0|$ seconds if $t_0 < 0$). Similarly, if x[n] is a discrete-time signal, then $x[n - n_0]$ is a translated version of x[n]. Figure 1.7 shows a signal x(t), a version of it that is delayed by 3 seconds, and one that is advanced by 3 seconds.

Figure 1.7: Example of time-shift: original signal x(t), delayed signal x(t − 3) and advanced signal x(t + 3).

Time Reversal

Let x(t) be a continuous-time signal. Then x(−t) is a time-reversed version of x(t). Similarly, if

x[n] is a discrete-time signal, then x[−n] is its time-reversed version. The time reversed signals

look the same as their originals, but they are reflected around t = 0 and n = 0 for continuous-time

and discrete-time signals, respectively.

Time Scaling

Time scaling is another transformation of the time axis often encountered in practice. If x(t) is a continuous-time signal, then x(at) is a time-scaled version of it. If |a| > 1, the signal is time-compressed, and if |a| < 1 it is time-stretched. For example, if x(t) represents a recorded audio signal of duration T seconds, x(2t) will be of duration T/2 and will sound high pitched (it is equivalent to recording at some speed and playing back at twice that speed). Similarly, x(t/2) will last 2T seconds and is equivalent to playing back at half the speed.

The following Matlab script loads a music signal (Handel’s classical piece) into the variable y, together with its sampling rate Fs = 8,192 samples/s. The Matlab sound command is used to listen to the signal at the original sampling rate, at twice that rate, and at half that rate. The script also stores y[−n] in the variable z and plays it, which corresponds to playing the music piece backwards.

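    % Run the sound commands one at a time to hear each version separately.
    load handel;            % loads the signal y and its sampling rate Fs
    sound(y, Fs);           % original speed
    sound(y, 2*Fs);         % twice the speed (time-compressed)
    sound(y, Fs/2);         % half the speed (time-stretched)
    z = y(length(y):-1:1);  % time-reversed copy of y
    sound(z, Fs);           % plays the piece backwards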

In general, time-reversal, time-translation and scaling can be combined to produce the signal

x(at + b). See examples in the text.
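As a rough illustration of the combined transformation, the Python sketch below represents a signal as a function and returns x(at + b) as a new function. The triangular test pulse and the helper name `transform` are illustrative assumptions, not part of the notes.

```python
def transform(x, a, b):
    """Return the signal y(t) = x(a*t + b)."""
    return lambda t: x(a * t + b)

# Illustrative signal: a triangular pulse, nonzero only for 0 < t < 2,
# with its peak value 1 at t = 1.
x = lambda t: max(0.0, 1.0 - abs(t - 1.0))

delayed = transform(x, 1, -3)     # x(t - 3): delayed by 3 seconds
reversed_ = transform(x, -1, 0)   # x(-t): reflected about t = 0
compressed = transform(x, 2, 0)   # x(2t): compressed by a factor of 2

# The peak moves from t = 1 to t = 4, t = -1 and t = 0.5, respectively.
print(delayed(4.0), reversed_(-1.0), compressed(0.5))
```

The peak of y(t) = x(at + b) sits where at + b = 1, i.e. at t = (1 − b)/a, which is a convenient way to check where a transformed signal ends up.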

1.4 Some Important Signals

In this section we define some basic signals which will be used later on as we analyze systems.

1.4.1 The Delta Function

The “delta” or impulse function is a mathematically defined function that can never arise exactly in nature but is extremely useful in characterizing the response of (linear) systems. The delta function is denoted by δ(t) in the continuous-time case and by δ[n] in the discrete-time case. In the latter case, the delta function is defined by:

$$\delta[n] = \begin{cases} 1, & n = 0, \\ 0, & n \neq 0. \end{cases}$$

In the continuous-time case, the delta function is defined by its two important properties:

1. Unit area under the function:

$$\int_{-\infty}^{\infty} \delta(t)\,dt = 1 \tag{1.1}$$

2. The sifting property:

$$\int_{-\infty}^{\infty} x(a)\delta(t - a)\,da = x(t). \tag{1.2}$$

A similar sifting property (but easier to see) holds for the discrete-time delta function. Let x[n] be a discrete-time function (signal). Then, it is easy to see the following equation holds:

$$x[n] = \sum_{m=-\infty}^{\infty} x[m]\delta[n - m] \tag{1.3}$$

since δ[n − m] will be zero for all m in the sum except for m = n, for which it will be equal to one. Also, it is easy to see that

$$\sum_{m=-\infty}^{\infty} \delta[m] = 1.$$
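The discrete-time sifting property in (1.3) can be checked directly. In the Python sketch below, the infinite sum is replaced by a finite window of m values, which is an assumption that holds as long as the window contains n; the test sequence is arbitrary.

```python
def delta(n):
    """Discrete-time delta function."""
    return 1 if n == 0 else 0

def sift(x, n, m_range):
    """Evaluate the sum over m in m_range of x[m] * delta[n - m]."""
    return sum(x(m) * delta(n - m) for m in m_range)

x = lambda n: n * n - 3 * n     # illustrative sequence
m_range = range(-50, 51)        # finite window standing in for the infinite sum

recovered = [sift(x, n, m_range) for n in range(-5, 6)]
print(recovered)
print([x(n) for n in range(-5, 6)])
```

Only the m = n term survives in each sum, so the two printed lists coincide.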

Both the continuous-time and discrete-time delta functions are shown in Figure 1.8.

Figure 1.8: The continuous-time and discrete-time delta functions.

The unit impulse functions can be scaled to yield impulses of any value. For example, cδ(t) will yield c when integrated over all time. Similarly, cδ[n] sums to c over all n.

In the continuous-time case, there is no naturally occurring signal that has unit area over a support interval of zero width (clearly, in this case the amplitude must be infinite). Continuous-time delta functions can instead be constructed as limiting cases of other functions. For example, consider the rectangular pulse function

$$\Delta(t) = \begin{cases} \dfrac{1}{2T}, & -T \le t \le T, \\ 0, & \text{otherwise.} \end{cases}$$

This function is shown in Figure 1.9.

Figure 1.9: A unit-area rectangular pulse (height 1/2T, extending from −T to T).

Clearly, the area under this rectangular pulse is one, irrespective of T. A delta function can be defined as a limiting case of ∆(t) as T → 0, i.e.,

$$\delta(t) = \lim_{T\to 0} \Delta(t).$$

To verify that ∆(t) satisfies the sifting property in the limit as T → 0, let f(t) be an arbitrary function and F(t) its indefinite integral, i.e.

$$\frac{dF(t)}{dt} = f(t).$$

Let us now consider the integral

$$\int_{-\infty}^{\infty} f(a)\Delta(t - a)\,da.$$

We need to show that in the limit as T → 0 this integral is f(t), and thus that in that limit ∆(t) has both defining properties of a delta function. Since ∆(t − a) equals 1/(2T) for t − T ≤ a ≤ t + T and is zero otherwise, we have:

$$\int_{-\infty}^{\infty} f(a)\Delta(t - a)\,da = \frac{1}{2T}\int_{t-T}^{t+T} f(a)\,da = \frac{1}{2T}\left[F(t + T) - F(t - T)\right].$$

Thus,

$$\lim_{T\to 0}\int_{-\infty}^{\infty} f(a)\Delta(t - a)\,da = \lim_{T\to 0}\frac{1}{2T}\left[F(t + T) - F(t - T)\right] = f(t),$$

since the limit on the right can be seen as the definition of the derivative of F(t) at time t, which must be f(t) since F(t) was defined as the indefinite integral of f(t). The scenario is illustrated in Figure 1.10.

Figure 1.10: Illustration for proof of the sifting property of ∆(t).
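The limiting argument can also be observed numerically: the integral of f(a)∆(t − a) is simply the average of f over [t − T, t + T], which should approach f(t) as T shrinks. The Python sketch below uses a midpoint rule; the test function cos, the point t = 1 and the step count are arbitrary illustrative choices.

```python
import math

def pulse_average(f, t, T, steps=2000):
    """Approximate (1/2T) * integral of f(a) over [t-T, t+T] (midpoint rule)."""
    h = 2 * T / steps
    total = sum(f(t - T + (k + 0.5) * h) for k in range(steps))
    return total * h / (2 * T)

f = math.cos   # illustrative test function
t = 1.0

for T in (1.0, 0.1, 0.01):
    # The second value approaches f(t) as the pulse narrows.
    print(T, pulse_average(f, t, T), f(t))
```

For this particular f the exact value of the average is cos(t) sin(T)/T, so the gap to f(t) shrinks roughly like T squared.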

It can be shown that the delta function is an even function, i.e. δ(t) = δ(−t) and δ[n] = δ[−n]. Thus, in the continuous-time case, for example,

$$\int_{-\infty}^{\infty} f(a)\delta(a - t)\,da = \int_{-\infty}^{\infty} f(a)\delta(t - a)\,da = f(t).$$

Also, since the delta function is zero everywhere except where it is located, we have:

$$\int_{-\infty}^{\infty} f(a)\delta(t - a)\,da = \int_{t^-}^{t^+} f(a)\delta(t - a)\,da = f(t),$$

where $t^-$ is the time just before t and $t^+$ is the time just after t, and t is the location of the delta function δ(t − a) on the a-axis. Indeed, the above integrals will evaluate to the same result f(t) for any limits that include the delta function within their scope.

Since the delta function is non-zero only at the value of the horizontal axis where it is located, we can write:

$$f(t)\delta(t - a) = f(a)\delta(t - a).$$

This is illustrated in Figure 1.11. In other words, when a function is multiplied by a delta function, the result is to “sample” the function at the time where the delta function is located: the product of a delta function located at some time a on the t-axis and a function f(t) is a delta function of height (area) f(a).

Figure 1.11: Illustration of f(t)δ(t − a) = f(a)δ(t − a).

1.4.2 The Unit-Step Function

The continuous-time unit-step function is defined in terms of the delta function as:

$$u(t) = \int_{-\infty}^{t} \delta(a)\,da.$$

Clearly, the integral above is zero for all t < 0 and it is one for t > 0, i.e.,

$$u(t) = \begin{cases} 1, & t > 0, \\ 0, & t < 0. \end{cases}$$

The unit-step function is plotted in Figure 1.12. Clearly, the step function is discontinuous at t = 0.

Figure 1.12: The continuous and discrete-time unit-step functions.

In view of the definition of the unit-step function in terms of the delta function, we can also write

$$\delta(t) = \frac{du(t)}{dt}.$$

Another expression for the unit-step function can be obtained by making use of the sifting property of the delta function in (1.2). Thus, we can write

$$u(t) = \int_{-\infty}^{\infty} u(a)\delta(t - a)\,da.$$

Since u(a) = 0 for a < 0 and 1 for a > 0, we have

$$u(t) = \int_{0}^{\infty} \delta(t - a)\,da.$$

The discrete-time unit-step is defined similarly in terms of the discrete-time delta function:

$$u[n] = \sum_{m=-\infty}^{n} \delta[m].$$

Another expression can be derived by using (1.3):

$$u[n] = \sum_{m=-\infty}^{\infty} u[m]\delta[n - m] = \sum_{m=0}^{\infty} \delta[n - m].$$
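Both expressions for u[n] can be evaluated directly in a short Python sketch. The infinite limits are replaced by finite bounds (`lower` and `upper` are assumed stand-ins for −∞ and ∞), which is valid for the small range of n tested here.

```python
def delta(n):
    """Discrete-time delta function."""
    return 1 if n == 0 else 0

def step_running_sum(n, lower=-100):
    """u[n] as the running sum of delta[m] for m = lower, ..., n."""
    return sum(delta(m) for m in range(lower, n + 1))

def step_shifted_sum(n, upper=100):
    """u[n] as the sum of delta[n - m] for m = 0, ..., upper."""
    return sum(delta(n - m) for m in range(0, upper + 1))

# Each tuple holds (n, running-sum form, shifted-sum form).
values = [(n, step_running_sum(n), step_shifted_sum(n)) for n in range(-3, 4)]
print(values)
```

Both forms give 0 for negative n and 1 for n ≥ 0: the running sum picks up the single nonzero sample δ[0] once n reaches 0, and the shifted sum contains a delta located at m = n only when n is nonnegative.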

16

CHAPTER 1. INTRODUCTION TO SIGNALS AND SYSTEMS

1.4.3

Piecewise Constant Functions

The unit-step can be used to describe any piecewise constant function. For example, the function

described in Figure 1.13 can be represented mathematically in terms of step functions as:

x(t) = 2u(t) − u(t − 1) − 2u(t − 3) + u(t − 4).

The derivative of x(t) is also shown in the figure and mathematically it is

$$\frac{dx(t)}{dt} = 2\delta(t) - \delta(t-1) - 2\delta(t-3) + \delta(t-4).$$

Figure 1.13: A piecewise constant function and its derivative.
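As a quick check of the step-function representation, the sketch below (Python, added for illustration) evaluates x(t) on each constant piece and measures the jump at each breakpoint; each jump equals the area of the corresponding delta function in dx(t)/dt. The convention u(0) = 1 and the helper names are assumptions.

```python
def u(t: float) -> float:
    """Unit step; the value at t = 0 is taken as 1 here (a convention)."""
    return 1.0 if t >= 0 else 0.0


def x(t: float) -> float:
    """x(t) = 2u(t) - u(t-1) - 2u(t-3) + u(t-4)."""
    return 2 * u(t) - u(t - 1) - 2 * u(t - 3) + u(t - 4)


def jump(t: float, eps: float = 1e-9) -> float:
    """Size of the discontinuity of x at time t."""
    return x(t + eps) - x(t - eps)


# (location, area) of each delta function in dx/dt, read off the formula.
deltas = [(0.0, 2.0), (1.0, -1.0), (3.0, -2.0), (4.0, 1.0)]

for t0, area in deltas:
    print(f"t = {t0}: jump = {jump(t0)}, delta area = {area}")
```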

1.4.4 Exponential Signals

The class of exponential signals is another important class of signals often encountered in the

analysis of systems. The general form of such signals in the continuous-time case is:

$$x(t) = c\,e^{at},$$

where both c and a can in general be complex. If both c and a are real, then x(t) is of course a real exponential. When a = jω0 is purely imaginary and c is real, x(t) is a complex exponential:

$$x(t) = c\,e^{at} = c\,e^{j\omega_0 t} = c[\cos(\omega_0 t) + j\sin(\omega_0 t)].$$

This signal is periodic. To find its period, T, we have:

$$x(t+T) = c\,e^{j\omega_0 (t+T)} = c\,e^{j\omega_0 t}e^{j\omega_0 T} = x(t)\,e^{j\omega_0 T}.$$

Thus, for x(t + T) to equal x(t) for all t, as required for a periodic signal, it must be that

$$e^{j\omega_0 T} = 1,$$

which means that ω0 T = 2mπ for some integer m. Thus, T = 2mπ/ω0 and the period (the smallest positive T for which x(t + T) = x(t)) is

$$T = \frac{2\pi}{|\omega_0|}.$$

Here ω0 > 0 is the fundamental frequency and it has the units of radians/s. We also define the fundamental frequency in hertz (Hz), f0, through ω0 = 2πf0, which implies

$$f_0 = \frac{1}{T}.$$


Clearly the complex exponential relates closely to the sinusoidal signals in view of Euler’s

identity. In other words,

$$A\cos(2\pi f_0 t) = \mathrm{Re}\{A e^{j2\pi f_0 t}\},$$

$$A\sin(2\pi f_0 t) = \mathrm{Im}\{A e^{j2\pi f_0 t}\}.$$

As in the complex exponential case, each sinusoidal signal above has fundamental frequency

f0 Hz and period T = 1/f0 . Note that the period decreases monotonically as f0 increases.

Similar definitions apply to the discrete-time case, although there are some very important and

distinct differences to the continuous-time case. The discrete-time complex exponential is

$$x[n] = e^{j\omega_0 n} = e^{j2\pi f_0 n}.$$

Unlike the continuous-time case, though, where the signal period decreases monotonically as the frequency increases, only values of ω0 in a 2π interval result in distinct signals. This can be seen easily since (k is any integer)

$$e^{j(\omega_0 + 2k\pi)n} = e^{j\omega_0 n}\underbrace{e^{j2k\pi n}}_{1} = e^{j\omega_0 n}.$$

In other words one might say the discrete-time complex exponential is periodic with period 2π in

its frequency variable ω0 for any fixed time n.

Thus, in the discrete-time case we only need to consider the frequency ω0 to be within a 2π

interval, usually taken either from 0 ≤ ω0 ≤ 2π or −π ≤ ω0 ≤ π.

Now let us determine the period of a discrete-time complex exponential. We have

$$x[n+N] = e^{j\omega_0 (n+N)} = e^{j\omega_0 n}e^{j\omega_0 N},$$

and for the right-hand side to equal x[n] we must have (for some integer m) ω0 N = 2mπ or, equivalently,

$$\frac{\omega_0}{2\pi} = \frac{m}{N}.$$

In other words, for x[n] = e^{jω0 n} to be periodic, ω0/2π must be rational. The period in this case is N if m and N do not have common factors.
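The rationality condition translates directly into a computation: write ω0/2π = m/N in lowest terms and read off N. The sketch below is an illustration in Python, not part of the original notes; it relies on `fractions.Fraction` reducing to lowest terms automatically, and the example value 3/8 is an arbitrary choice.

```python
import cmath
from fractions import Fraction


def fundamental_period(f0: Fraction) -> int:
    """Period N of x[n] = exp(j*2*pi*f0*n) for rational f0 = omega0/(2*pi).

    Fraction keeps m/N in lowest terms, so the denominator is the period.
    """
    return Fraction(f0).denominator


def x(n: int, f0: Fraction) -> complex:
    """Sample n of the discrete-time complex exponential."""
    return cmath.exp(2j * cmath.pi * float(f0) * n)


f0 = Fraction(3, 8)          # omega0 = 3*pi/4
N = fundamental_period(f0)
print("period:", N)

# x[n + N] should equal x[n] for every n (up to rounding).
print(max(abs(x(n + N, f0) - x(n, f0)) for n in range(20)))
```

Note that `Fraction(2, 8)` reduces to 1/4, so the period is 4 rather than 8, which is the "no common factors" requirement in the text.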

1.5 Continuous-Time and Discrete-Time Systems

In general a system is any device that processes signals (inputs) to produce other signals (outputs),

as illustrated abstractly in Figure 1.14 below. In the figure, x(t) (or x[n] in the discrete-time case) represents the input to the system, labeled by S, and y(t) (or y[n] in the discrete-time case) the corresponding output. We say the system S operates on the input x(t) to produce the output y(t) and write mathematically

$$y(t) = S[x(t)].$$

Figure 1.14: A system with one input and one output.


Similarly, in the discrete-time case we write

y[n] = S [x[n]] .

As an example of an (electrical) system, consider the circuit in Figure 1.15. In this electrical system the input is a voltage and so is the output. The output relates to the input through a differential equation. If we let the current through the loop be i(t), then

$$i(t) = C\frac{dy(t)}{dt}.$$

Then, writing a single loop equation, we have:

$$x(t) = Ri(t) + y(t).$$

Figure 1.15: A system with one input and one output.

Thus,

$$x(t) = RC\frac{dy(t)}{dt} + y(t).$$

For an input signal x(t), we can compute the output y(t) by solving the above first-order, linear differential equation.

In general, continuous and discrete-time systems can be deterministic or stochastic, linear or non-linear, time-variant or time-invariant. Stochastic systems are ones which contain components whose behavior cannot be deterministically described for a given input signal. An example of such a system is a circuit which contains a thermistor, whose resistance is a function of temperature. Since the temperature as a function of time cannot be exactly predicted in advance, one can only assume the resistance provided by the thermistor is random, and, thus, any system that contains it will be stochastic. Stochastic systems are studied using statistical signal processing techniques. We will not deal with stochastic systems in this class but rather we will only study deterministic systems. In fact, we will focus only on linear, time-invariant (LTI) systems. We define linearity and time-invariance, as well as other system properties, next.
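Before moving on, the RC circuit equation x(t) = RC dy(t)/dt + y(t) can be solved numerically for a simple input. The following Python sketch is an illustration only: it uses the forward-Euler method (a choice made here, not a method from the notes), takes x(t) = u(t) with y(0) = 0, and assumes the value RC = 1 s. The result is compared with the standard closed-form step response 1 − e^{−t/RC}.

```python
import math


def rc_step_response(rc: float, t_end: float, dt: float) -> list:
    """Forward-Euler solution of x(t) = RC*dy/dt + y(t), x(t) = u(t), y(0) = 0."""
    steps = int(round(t_end / dt))
    y = 0.0
    samples = [y]
    for _ in range(steps):
        # dy/dt = (x - y) / RC with x = 1 for t >= 0
        y += dt * (1.0 - y) / rc
        samples.append(y)
    return samples


rc = 1.0      # assumed time constant R*C in seconds
dt = 1e-3     # integration step (assumption)
y_num = rc_step_response(rc, t_end=5.0, dt=dt)
y_exact = [1.0 - math.exp(-k * dt / rc) for k in range(len(y_num))]
max_err = max(abs(a - b) for a, b in zip(y_num, y_exact))
print("largest deviation from 1 - exp(-t/RC):", max_err)
```

The deviation shrinks roughly in proportion to `dt`, as expected for a first-order integration scheme.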

1.5.1 System Properties

Linearity

Consider the single-input, single-output system in Figure 1.14 and two inputs x1 (t) and x2 (t) (in

the continuous-time case). Let y1 (t) and y2 (t) be the corresponding system outputs, i.e.,

y1 (t) = S[x1 (t)],

y2 (t) = S[x2 (t)].


Then if the system is linear, the following holds:

S[c1 x1 (t) + c2 x2 (t)] = c1 S[x1 (t)] + c2 S[x2 (t)] = c1 y1 (t) + c2 y2 (t),

for any pair of real numbers c1 and c2 . The definition for discrete-time systems is exactly the same.

Thus, a discrete-time system S is linear if

y1 [n] = S [x1 [n]] ,

y2 [n] = S [x2 [n]] ,

then,

S [c1 x1 [n] + c2 x2 [n]] = c1 S [x1 [n]] + c2 S [x2 [n]] = c1 y1 [n] + c2 y2 [n].

In particular, linear systems possess the scaling (or homogeneity) property. I.e., if, for example,

c2 = 0, then

S[c1 x1 (t)] = c1 S[x1 (t)] = c1 y1 (t).

Thus, when the input is scaled by a certain amount, the output corresponding to it is scaled by

the same amount.

They also have the superposition property. I.e., if c1 = c2 = 1,

S[x1 (t) + x2 (t)] = S[x1 (t)] + S[x2 (t)] = y1 (t) + y2 (t).

The linearity property is obviously very important as it allows us to easily compute the response

of a linear system to an infinite variety of inputs, i.e. to any linear combination of inputs for which

the outputs are known. The corresponding output is the same linear combination applied to the

outputs.

The linearity concept is illustrated in Figure 1.16 below.


Figure 1.16: Illustration of the linearity property.

A very important property of the linear, time-invariant operators considered in this course (which describe systems operating on their inputs to produce outputs) is that they commute; linearity alone does not guarantee this in general. In other words, if S1 and S2 are two such operators (systems) and x(t) is an input, we have

S2 [S1 [x(t)]] = S1 [S2 [x(t)]] .

(1.4)

This concept is illustrated in Figure 1.17. Thus, if the input to the concatenated system is x(t), the output y(t) will be the same whether S1 acts on the input first (top configuration in the figure) or S2 acts on the input first (bottom configuration).

Figure 1.17: Illustration of the commutation property of linear systems.

There are some very important implications of this property. For example, let the response of a linear system to a unit-step u(t) (i.e. the step response) be y(t). Then the response of the system to a unit impulse δ(t), say h(t), referred to as the system impulse response, is the derivative of the step response, i.e.,

$$h(t) = \frac{dy(t)}{dt}.$$

This can be seen mathematically as follows. Let the system be defined by the linear operator S.

Then,

y(t) = S[u(t)].

Now, we know that the delta function is the derivative of the unit-step and that the derivative

operator is linear. Thus,

$$\delta(t) = \frac{du(t)}{dt}.$$

We are interested in the impulse response, h(t), of the system; we have:

$$h(t) = S[\delta(t)] = S\left[\frac{du(t)}{dt}\right] = \frac{d}{dt}S[u(t)] = \frac{dy(t)}{dt},$$

where the third equality above is because both the derivative operator and the system are linear

and we exchanged their order.

In general, let S be a linear system and L be a linear operator. Let y(t) be the output of a

system when the input is x(t), i.e. y(t) = S[x(t)]. Now let z(t) = L[x(t)] be another signal obtained

after the linear operator L operates on x(t). Then

S[z(t)] = S [L[x(t)]] = L [S[x(t)]] = L[y(t)].

The above result will be used in the next chapter to obtain an important result in computing

the response of linear systems.

Examples of Linear Systems

1. y(t) = 2x(t). Clearly this is a linear system since when the input is c1 x1 (t) + c2 x2 (t) the

output is

2[c1 x1 (t) + c2 x2 (t)] = c1 [2x1 (t)] + c2 [2x2 (t)] = c1 y1 (t) + c2 y2 (t).

2. Consider the system in which the output relates to the input as:

$$y(t) = \int_{-\infty}^{t} x(a)\,da.$$

This system is also linear since:

$$\int_{-\infty}^{t} [c_1 x_1(a) + c_2 x_2(a)]\,da = c_1\int_{-\infty}^{t} x_1(a)\,da + c_2\int_{-\infty}^{t} x_2(a)\,da = c_1 y_1(t) + c_2 y_2(t).$$

3. $$y[n] = \sum_{m=n-N}^{n+N} x[m].$$

This is again easily shown using the definition of linearity:

$$\sum_{m=n-N}^{n+N} (c_1 x_1[m] + c_2 x_2[m]) = c_1\sum_{m=n-N}^{n+N} x_1[m] + c_2\sum_{m=n-N}^{n+N} x_2[m] = c_1 y_1[n] + c_2 y_2[n].$$

4. The current i(t) through a resistor with resistance R relates to the voltage across it through

v(t) = Ri(t).

If the input is v(t) and the output is i(t) this is clearly a linear system.

Similarly, the voltage v(t) across an inductor of inductance L relates to the current i(t)

through it through

$$v(t) = L\frac{di(t)}{dt}.$$

If we consider the input to be i(t) and the output to be v(t), one can easily show (using the

properties of derivatives) that this is a linear system as well. However, if we consider the

input to be the voltage v(t) across the inductor and the output to be the current i(t) through

it, we have

$$i(t) = i_0 + \frac{1}{L}\int_{-\infty}^{t} v(a)\,da,$$

where i0 is some initial current through the inductor. As is shown below, unless i0 = 0, the

system is not linear.

Similar conclusions hold if one considers the voltage v(t) across a capacitor of capacitance C

as the input and the current through it as the output. We have in this case,

$$i(t) = C\frac{dv(t)}{dt},$$

and the system is clearly linear. If we instead consider the input to be i(t) and the output to

be v(t), we have

$$v(t) = v_0 + \frac{1}{C}\int_{-\infty}^{t} i(a)\,da,$$

and the system is not linear unless the initial voltage v0 across the capacitor is zero.

In general, an electrical system that consists of resistors, inductors and capacitors is a linear

system provided the initial state (initial currents through inductors and initial voltages across

capacitors) is zero (i.e., we are only considering the zero-state response of the system and not the total response).


Examples of Non-linear Systems

1. y(t) = x^2(t). To show that a system is not linear, it suffices to show the scaling or superposition property does not hold for some specific case. In the above example, if the input signal is scaled by a factor of 2, for example, then the output will be

$$[2x(t)]^2 = 4x^2(t) \neq 2x^2(t) = 2y(t).$$

2. y[n] = c + x[n], i.e. the output is the input plus a fixed constant c. One might be tempted

to say this is a linear system, but it is not in general. For example, let us again see what

happens when we scale the input x[n] by a factor of 2; we have:

$$S[2x[n]] = c + 2x[n] \neq 2[c + x[n]] = 2y[n].$$

One can actually show that for a linear system, when the input is zero, the output MUST

be zero as well. The above system clearly does not satisfy this property since the output is c

when the input is zero (unless of course c = 0). To show that for a linear system the output

must be zero when the input is zero, we argue as follows: If the system is linear, it must be

that:

S[c1 x1 (t) + c2 x2 (t)] = c1 S[x1 (t)] + c2 S[x2 (t)],

for any pair of real numbers c1 and c2 . If we choose c1 = c2 = 0, then, if the system is linear,

in which case the above equation must hold, we have:

S[0] = 0.

3. y(t) = u(x(t)), where u(·) is the unit-step function. Since the unit-step function takes only 2

possible values, 0 or 1, the above system cannot possibly be linear since the scaling property,

for example, cannot possibly hold.
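The linearity definition can also be probed numerically for memoryless systems such as the examples above. The sketch below, in Python, is an illustration only: it tests S[c1 x1 + c2 x2] = c1 S[x1] + c2 S[x2] on random sample values. The helper name, the sampling range, and the choice c = 3 for the offset system are assumptions. A failed check proves non-linearity, whereas a passed check is only evidence, not a proof.

```python
import random


def is_linear_on_samples(system, trials: int = 200, tol: float = 1e-9, seed: int = 0) -> bool:
    """Check S[c1*x1 + c2*x2] == c1*S[x1] + c2*S[x2] on random scalar samples.

    Only applicable to memoryless systems, where S acts on a single sample.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        x1, x2, c1, c2 = (rng.uniform(-5.0, 5.0) for _ in range(4))
        lhs = system(c1 * x1 + c2 * x2)
        rhs = c1 * system(x1) + c2 * system(x2)
        if abs(lhs - rhs) > tol:
            return False
    return True


def gain(x):            # y = 2x
    return 2 * x


def square(x):          # y = x^2
    return x ** 2


def offset(x):          # y = c + x with c = 3 (illustrative value)
    return 3 + x


def hard_limiter(x):    # y = u(x)
    return 1.0 if x >= 0 else 0.0


for name, s in [("2x", gain), ("x^2", square), ("c + x", offset), ("u(x)", hard_limiter)]:
    print(name, is_linear_on_samples(s))
```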

Time Invariance

Let y(t) = S[x(t)] be the response of a system S to an input x(t). Then the system is time-invariant

if:

S[x(t − t0 )] = y(t − t0 ).

In other words, the output of a time-invariant system to an input x(t) shifted in time by t0 has

exactly the same shape as the output when the input is x(t) but it is also shifted by the same

amount t0 as the input. The concept is illustrated in Figure 1.18 below.


Figure 1.18: Illustration of the time-invariance property.

Examples of time-invariant systems include the following:

1. The discrete-time system

$$y[n] = \sum_{m=-\infty}^{n} x[m]$$

is time-invariant. To show this, we have

$$S[x[n-n_0]] = \sum_{m=-\infty}^{n} x[m-n_0].$$

Changing variables in the summation by letting k = m − n0, we have:

$$S[x[n-n_0]] = \sum_{k=-\infty}^{n-n_0} x[k] = y[n-n_0],$$

and therefore the system is time-invariant.

2. y(t) = u (x(t)) , where u(·) is the unit-step function. We have:

S[x(t − t0 )] = u (x(t − t0 )) = y(t − t0 ),

and therefore the system is time-invariant.

3. y[n] = x^2[n]. To show it is time-invariant, we have:

$$S[x[n-n_0]] = x^2[n-n_0] = y[n-n_0].$$

Examples of systems that are not time-invariant include the following:

1. y(t) = S[x(t)] = x(2t). Let x1 (t) = x(t − t0 ) be a time-shifted version of x(t). Then,

$$S[x_1(t)] = x_1(2t) = x(2t - t_0) \neq x(2(t - t_0)) = y(t - t_0),$$

and therefore the system is not time-invariant.

2. y(t) = f (t)x(t), for some arbitrary function f (t). In other words the system multiplies the

input by the function f (t) to produce the output. We have

$$S[x(t - t_0)] = f(t)x(t - t_0) \neq y(t - t_0) = f(t - t_0)x(t - t_0),$$

and thus the system is not time-invariant in general, unless of course f (t) = c for some

constant c.

3. y[n] = S [x[n]] = f [n] + x[n], for some arbitrary function f [n]. We have

$$S[x[n - n_0]] = f[n] + x[n - n_0] \neq y[n - n_0] = f[n - n_0] + x[n - n_0],$$

and therefore the system is not time-invariant.
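The time-invariance test S[x[n − n0]] = y[n − n0] can be sketched in code by treating signals as functions of the integer n and systems as maps from signals to signals. This Python example is an illustration, not part of the original notes; the discrete-time multiplier y[n] = f[n]x[n], the choice f[n] = n, and the test input are all assumptions made here.

```python
def shift(x, n0):
    """Return the delayed signal n -> x[n - n0]."""
    return lambda n: x(n - n0)


def is_time_invariant(system, x, n0, ns) -> bool:
    """Compare S[x[n - n0]] with y[n - n0] at the sample times in ns."""
    y = system(x)
    y_from_shifted_input = system(shift(x, n0))
    return all(abs(y_from_shifted_input(n) - y(n - n0)) < 1e-12 for n in ns)


def square(x):        # y[n] = x^2[n]
    return lambda n: x(n) ** 2


def modulate(x):      # y[n] = f[n] x[n] with f[n] = n (illustrative choice)
    return lambda n: n * x(n)


def add_ramp(x):      # y[n] = f[n] + x[n] with f[n] = n (illustrative choice)
    return lambda n: n + x(n)


def x_test(n):        # test input: (1/2)^n u[n]
    return 0.5 ** n if n >= 0 else 0.0


ns = range(-5, 10)
for name, s in [("x^2[n]", square), ("n x[n]", modulate), ("n + x[n]", add_ramp)]:
    print(name, is_time_invariant(s, x_test, n0=3, ns=ns))
```

As with any sampled check, agreement for one input and one shift does not prove time-invariance; a single mismatch does disprove it.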


Memory

In continuous-time, a system is memoryless when the output y(t) at time t is only a function of

the input, x(t), at time t. In discrete-time, the definition is the same: a discrete-time system is memoryless when the output y[n] at some time n is only a function of the input at time n. For example, the systems described by

$$y[n] = n\,x^2[n], \qquad y(t) = x(t) - 2x^2(t)$$

are memoryless, but the ones described by

$$y(t) = x(t) - x(t-1), \qquad y[n] = \sum_{m=-\infty}^{n} x[m]$$

are not.

Causality

A system (continuous-time or discrete-time) is causal if the output at any time t (or n) is possibly

a function of the input at times from −∞ up to time t (or n) but not a function of the input for

times τ > t (or m > n). Thus, the systems described by

$$y[n] = \sum_{m=-\infty}^{n} x[m], \qquad y(t) = x(t)e^{-(t+1)}$$

are causal, but the systems described by

$$y(t) = x(t/2), \qquad y[n] = \sum_{m=-\infty}^{n+1} x[m]$$

are not. Clearly, any memoryless system, such as the second causal system above, must by definition be causal. The first non-causal system above is seen to be non-causal by considering t < 0, for which t/2 > t, so the output depends on a future value of the input. So, one must be careful to make sure the condition for causality holds for all times.

Invertibility

A system is invertible if the input signal at any time can be uniquely determined from the output

signal. In other words, distinct inputs yield distinct outputs. For example, the system

$$y[n] = \sum_{m=-\infty}^{n} x[m]$$

is invertible since x[n] = y[n] − y[n − 1], but the system

y(t) = |x(t)|

is not since it is impossible to determine the sign of the input from the output.
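The two invertibility examples can be illustrated with finite sequences. In the Python sketch below (an illustration, not part of the original notes) the input is assumed to be zero for n < 0, so the running sum starts at n = 0 and y[−1] = 0; the function names are assumptions.

```python
from itertools import accumulate


def accumulator(x: list) -> list:
    """y[n] = sum of x[m] for m <= n, assuming x[n] = 0 for n < 0."""
    return list(accumulate(x))


def first_difference(y: list) -> list:
    """Inverse system x[n] = y[n] - y[n-1], with y[-1] = 0."""
    if not y:
        return []
    return [y[0]] + [y[n] - y[n - 1] for n in range(1, len(y))]


x = [3, -1, 4, 1, -5]
y = accumulator(x)
print(y, first_difference(y))   # the second list reproduces x

# y(t) = |x(t)| is not invertible: two distinct inputs, same output.
x_a, x_b = [1, -2, 3], [-1, 2, -3]
print([abs(v) for v in x_a] == [abs(v) for v in x_b])
```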


Stability

A stable system is one in which an input which has an amplitude that is bounded (it is less than

infinity), necessarily results in an output that is bounded. For example, the system

y(t) = cos (x(t)) ,

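is stable since the magnitude of the output can never exceed 1. The linear, time-invariant system

$$y[n] = \sum_{m=-\infty}^{\infty} h[m]\,x[n-m]$$

is stable under some conditions on h[n]. Let the input be bounded, |x[n]| ≤ A < ∞. Then we must find a condition under which the corresponding output |y[n]| is bounded. We have

$$\begin{aligned}
|y[n]| &= \left|\sum_{m=-\infty}^{\infty} h[m]\,x[n-m]\right| \\
&\le \sum_{m=-\infty}^{\infty} |h[m]\,x[n-m]| \\
&= \sum_{m=-\infty}^{\infty} |h[m]|\,|x[n-m]| \\
&\le A\sum_{m=-\infty}^{\infty} |h[m]|,
\end{aligned}$$

which is finite provided the sum on the right is finite. Thus, a sufficient condition for a stable system is that

$$\sum_{m=-\infty}^{\infty} |h[m]| < \infty.$$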

Examples of systems that are not stable are:

y(t) = tx(t),

y[n] = x[n]/n.
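The bound |y[n]| ≤ A Σ|h[m]| derived above can be illustrated numerically. The Python sketch below is an assumption-laden example, not part of the original notes: it picks h[n] = a^n u[n] with a = 1/2, truncates it to 60 terms, and uses two bounded inputs with A = 2.

```python
def lti_output(h: list, x, n: int) -> float:
    """y[n] = sum over m of h[m] x[n - m] for a causal h stored as h[0..M-1]."""
    return sum(h[m] * x(n - m) for m in range(len(h)))


a, A = 0.5, 2.0
h = [a ** m for m in range(60)]            # truncated h[n] = a^n u[n]
bound = A * sum(abs(v) for v in h)         # A * sum |h[m]|


def alternating(n: int) -> float:          # bounded input, |x[n]| = A
    return A if n % 2 == 0 else -A


def constant(n: int) -> float:             # bounded input, x[n] = A
    return A


worst_alternating = max(abs(lti_output(h, alternating, n)) for n in range(-20, 21))
worst_constant = max(abs(lti_output(h, constant, n)) for n in range(-20, 21))
print(bound, worst_alternating, worst_constant)
```

Because this h[n] is non-negative, the constant input attains the bound, while the alternating input stays well below it.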

Chapter 2

Linear, Time-Invariant (LTI) Systems

The rest of the course will focus almost solely on causal, linear, time-invariant systems. Linear, time-invariant systems are described by linear differential equations with constant coefficients. Invariably,

to compute the response of such systems to a specific input, one must solve a differential equation.

As we saw above during the discussion of linearity, such systems are linear only if the initial state

of the system is zero (i.e. there is no initial energy in the system), or, in general, one only considers

the zero-state response of the system to an input. For example, consider the system described by

the circuit in Figure 1.15 with input-output described by the first-order differential equation:

$$x(t) = RC\frac{dy(t)}{dt} + y(t). \qquad (2.1)$$

The output of this system is the voltage across the capacitor and it is assumed to be zero at the

time the input is applied (which we consider to be t = 0). Thus, y(0) = 0. In general, for an n-th

order system described by an n-th order differential equation, the state of the system is determined

by y(t) and its first (n − 1) derivatives and for a zero state, they should all be zero, i.e.,

$$y(0) = \left.\frac{dy(t)}{dt}\right|_{t=0} = \cdots = \left.\frac{d^{n-1}y(t)}{dt^{n-1}}\right|_{t=0} = 0.$$

There are various ways to solve the above differential equation, but a strong mathematical

tool in this regard is the Laplace transform. We will thus focus on the Laplace transform and its

properties next. Before doing so, though, we derive an important result in linear system theory.

2.1 The Impulse Response and the Convolution Integral

2.1.1 The Continuous-Time Case

As stated earlier, the response of an LTI system to a unit impulse, δ(t), is the impulse response of

the system. We will see next that this response is important in that using it we can write a general

expression for the output of the system to any input.

We know from the definition of the delta function earlier that it possesses the sifting property,

defined by

$$x(t) = \int_{-\infty}^{\infty} x(a)\,\delta(t-a)\,da,$$

for an arbitrary signal x(t). Let the impulse-response of a linear, time-invariant system S be h(t).

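We are interested in the response of the system when the input is the arbitrary signal x(t), i.e., y(t) = S[x(t)]. We have

$$\begin{aligned}
y(t) &= S[x(t)] \\
&= S\left[\int_{-\infty}^{\infty} x(a)\,\delta(t-a)\,da\right] \\
&= \int_{-\infty}^{\infty} x(a)\,S[\delta(t-a)]\,da \\
&= \int_{-\infty}^{\infty} x(a)\,h(t-a)\,da, \qquad (2.2)
\end{aligned}$$

where the third line uses the linearity of S and the last line uses time-invariance, S[δ(t − a)] = h(t − a).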

The integral in the equation (2.2) above is known as the convolution integral. Thus, the output

of a linear system with impulse response h(t) to an arbitrary input x(t) is obtained by convolving

x(t) with h(t). Mathematically, we write

$$y(t) = x(t) * h(t) = \int_{-\infty}^{\infty} x(a)\,h(t-a)\,da.$$

It is easy to show that x(t) ∗ h(t) = h(t) ∗ x(t). To show this, we have:

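$$\begin{aligned}
x(t) * h(t) &= \int_{-\infty}^{\infty} x(a)\,h(t-a)\,da \\
&= -\int_{\infty}^{-\infty} x(t-b)\,h(b)\,db \\
&= \int_{-\infty}^{\infty} x(t-b)\,h(b)\,db \\
&= h(t) * x(t),
\end{aligned}$$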

where the second equality above was obtained through a change of variables t − a = b.

If the system is causal, then the impulse response h(t) must be zero for t < 0. In this case

h(−a) will be zero for a > 0 and h(t − a) will be zero for a > t. Thus, the convolution integral can be written in this case as

$$y(t) = \int_{-\infty}^{t} x(a)\,h(t-a)\,da.$$

If in addition x(t) = 0 for t < 0, then clearly y(t) = 0 for t < 0 and for t ≥ 0 we have

$$y(t) = \int_{0}^{t} x(a)\,h(t-a)\,da. \qquad (2.3)$$

However, the expression in (2.2) is the most general one and will always hold.
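The causal form (2.3) lends itself to a simple numerical approximation. The following Python sketch is an illustration under stated assumptions: it uses a midpoint Riemann sum (a choice made here), takes x(t) = u(t) and h(t) = e^{−t}u(t) as sample signals, and compares the result with the closed form 1 − e^{−t} that follows from integrating e^{−a} from 0 to t. The function names and the step size are hypothetical.

```python
import math


def u(t: float) -> float:
    """Unit step, with u(0) taken as 1 (a convention)."""
    return 1.0 if t >= 0 else 0.0


def x_in(t: float) -> float:
    return u(t)


def h_imp(t: float) -> float:
    return math.exp(-t) * u(t)


def convolve_causal(x, h, t: float, da: float = 1e-3) -> float:
    """Midpoint Riemann sum for y(t) = integral from 0 to t of x(a) h(t - a) da."""
    if t <= 0:
        return 0.0
    n = max(1, int(round(t / da)))
    width = t / n
    total = 0.0
    for k in range(n):
        a = (k + 0.5) * width          # midpoint of the k-th subinterval
        total += x(a) * h(t - a)
    return total * width


for t in (0.5, 1.0, 3.0):
    print(t, convolve_causal(x_in, h_imp, t), 1.0 - math.exp(-t))
```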

Let us now consider some examples of convolving continuous-time signals.

Continuous-Time Convolution Examples

1. Let x(t) = u(t) and h(t) = e^{−t}u(t). We wish to compute y(t) = x(t) ∗ h(t) = h(t) ∗ x(t). Let us use

$$y(t) = h(t) * x(t) = \int_{-\infty}^{\infty} h(a)\,x(t-a)\,da = \int_{0}^{t} h(a)\,x(t-a)\,da.$$

(Note here that a is the variable in the convolution integral in Equation (2.2) and t is fixed.) Since both x(t) and h(t) are zero for t < 0, so will be y(t), so we only need consider t > 0, as illustrated in Figure 2.1. For t > 0, we have

$$y(t) = \int_{0}^{t} h(a)\,u(t-a)\,da = \int_{0}^{t} e^{-a}\,da = 1 - e^{-t}.$$

Thus, the convolution between x(t) and h(t) over all time is

$$y(t) = \left(1 - e^{-t}\right)u(t).$$


Figure 2.1: Illustration of convolution for Example 1.

2. Let x(t) = u(t) − u(t − 1) be a rectangular pulse. Let’s compute

$$y(t) = x(t) * x(t) = \int_{-\infty}^{\infty} x(a)\,x(t-a)\,da.$$

As illustrated in Figure 2.2, we have 4 regions of the time axis to consider: t < 0, 0 ≤ t ≤ 1, 1 ≤ t ≤ 2 and t > 2. Clearly, y(t) = 0 for t < 0 and for t > 2, as seen from the figure. For 0 ≤ t ≤ 1, we have

$$y(t) = \int_{0}^{t} da = t,$$

and for 1 ≤ t ≤ 2,

$$y(t) = \int_{t-1}^{1} da = 2 - t.$$

The final convolution result is the triangular pulse at the bottom of Figure 2.2. Mathematically, in terms of the ramp function,

$$y(t) = r(t) - 2r(t-1) + r(t-2),$$


Figure 2.2: Illustration of convolution for Example 2.

or, explicitly,

$$y(t) = \begin{cases} 0, & t < 0 \\ t, & 0 \le t \le 1 \\ 2 - t, & 1 \le t \le 2 \\ 0, & t > 2. \end{cases}$$

2.1.2 The Discrete-Time Case

The same results corresponding to the convolution integral in equation (2.2) in the continuous-time

case can be derived for the discrete-time case by using equation (1.3). Thus, if the response of a

linear, time-invariant system to a unit impulse is h[n], then the output of the system to an arbitrary

input x[n] is

$$y[n] = \sum_{m=-\infty}^{\infty} x[m]\,h[n-m] = \sum_{m=-\infty}^{\infty} h[m]\,x[n-m], \qquad (2.4)$$

where the second equality above can be easily established, as for the continuous-time case, by a

change of variable. If both functions x[n] and h[n] are zero for negative time, then the convolution reduces to

$$y[n] = \sum_{m=0}^{n} x[m]\,h[n-m],$$

but be careful using this expression; you must make sure that both functions are zero for n < 0.
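The general sum (2.4) is straightforward to evaluate for finite-length signals. The Python sketch below is an illustration, not part of the original notes: signals are stored as dictionaries mapping the time index n to the sample value (an assumed representation that allows negative indices), and the test signal is a length-5 rectangular pulse centered at n = 0, chosen here as an example.

```python
def convolve(x: dict, h: dict) -> dict:
    """y[n] = sum over m of x[m] h[n - m] for finite-support signals.

    Signals are dicts {n: value}; samples not listed are zero.
    """
    y = {}
    for m, xm in x.items():
        for k, hk in h.items():
            # x[m] h[k] contributes to the output sample n = m + k
            y[m + k] = y.get(m + k, 0) + xm * hk
    return y


# Rectangular pulse u[n + 2] - u[n - 3]: ones for -2 <= n <= 2.
pulse = {n: 1 for n in range(-2, 3)}
triangle = convolve(pulse, pulse)
print([triangle[n] for n in sorted(triangle)])   # rises to 5 at n = 0, then falls

# Finite pulse through a truncated geometric impulse response a^n u[n].
a, L = 0.5, 5
x = {n: 1 for n in range(L)}
h = {m: a ** m for m in range(40)}               # truncation at 40 terms (assumption)
y = convolve(x, h)
print(y[2], (1 - a ** 3) / (1 - a))
```

Because indices are carried explicitly, no assumption that the signals vanish for n < 0 is needed, unlike the reduced sum from m = 0 to n.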

Discrete-Time Convolution Examples

1. Let x[n] = u[n+2]−u[n−3] and h[n] = x[n]. Figure 2.3 shows graphically the process and the

different time regions when there is a change in the functional dependence. We distinguish

4 different regions: n < −4 and n > 4, where y[n] = 0, −4 ≤ n ≤ 0 and 0 ≤ n ≤ 4. For −4 ≤ n ≤ 0, we have:

$$y[n] = \sum_{m=-\infty}^{\infty} x[m]\,x[n-m] = \sum_{m=-2}^{n+2} 1 = n + 5.$$

For 0 ≤ n ≤ 4, we have

$$y[n] = \sum_{m=-\infty}^{\infty} x[m]\,x[n-m] = \sum_{m=n-2}^{2} 1 = 5 - n.$$

The result is illustrated in the last plot in Figure 2.3.

2. Let x[n] = u[n] − u[n − L] and h[n] = a^n u[n] where 0 ≤ a < 1. Then

$$y[n] = \sum_{m=-\infty}^{\infty} h[m]\,x[n-m] = \sum_{m=-\infty}^{\infty} a^m u[m]\,\big[u[n-m] - u[n-m-L]\big].$$

The convolution is illustrated in Figure 2.4 for a = 1/2 and L = 5. We distinguish 3 regions: n < 0, 0 ≤ n < L and n ≥ L. For n < 0, clearly y[n] = 0. For 0 ≤ n < L, we have:

$$y[n] = \sum_{m=0}^{n} a^m = \frac{1 - a^{n+1}}{1 - a}.$$

For n ≥ L we have:

$$y[n] = \sum_{m=n-L+1}^{n} a^m = \frac{a^{n+1}\left(a^{-L} - 1\right)}{1 - a}.$$

2.2 The Laplace Transform and its Use to Study LTI Systems

The Laplace transform is an extremely useful mathematical tool in the analysis and design of

continuous-time, linear, time-invariant systems. A parallel transform in the discrete-time case,

the z-transform, is as powerful and important in analyzing and designing discrete-time systems

and has similar properties to the Laplace transform. The power of the Laplace transform is in

converting linear differential equations with constant coefficients into algebraic equations which are

easier to solve. It does so by moving the design from the time-domain (where input-output relations

are described by differential equations) to the frequency-domain, where input-output relations are

described by algebraic equations. Once a solution is obtained in the frequency domain, we can use the inverse Laplace transform to obtain the solution in the time-domain. In this course, we will not deal extensively with the mathematical subtleties inherent in the study of the Laplace transform but rather focus on its properties and how they can be used in the context of LTI systems.

Figure 2.3: Illustration of discrete convolution for Example 1.

The Laplace transform is an operator, L, which transforms a time-domain signal into the

frequency domain. It is customary to designate the Laplace transform of a signal x(t) by X(s)

where s = σ + jω is the complex frequency variable (with real part σ and imaginary part ω.)

The Laplace transform of a function (signal) x(t) is defined by

$$X(s) = L[x(t)] = \int_{-\infty}^{\infty} x(t)\,e^{-st}\,dt. \qquad (2.5)$$

Not all functions possess a Laplace transform, and for those that do, the transform often exists only in a region of the so-called s-plane (defined by the real axis σ versus the imaginary axis jω), referred to as the region of convergence (ROC).

Figure 2.4: Illustration of discrete convolution for Example 2.

As an example, let us compute the Laplace transform of x(t) = e^{jω0 t}u(t). We have:

$$\begin{aligned}
X(s) &= \int_{-\infty}^{\infty} x(t)\,e^{-st}\,dt \\
&= \int_{0}^{\infty} e^{j\omega_0 t}e^{-st}\,dt \\
&= \int_{0}^{\infty} e^{-(s - j\omega_0)t}\,dt \\
&= \frac{1}{s - j\omega_0} - \lim_{t\to\infty}\frac{e^{-(s - j\omega_0)t}}{s - j\omega_0} \\
&= \frac{1}{s - j\omega_0} - \lim_{t\to\infty}\frac{e^{-\sigma t}e^{-j(\omega - \omega_0)t}}{s - j\omega_0}.
\end{aligned}$$

Clearly, if σ > 0, the second term above goes to zero, and thus,

$$L[e^{j\omega_0 t}u(t)] = \frac{1}{s - j\omega_0},$$

and the region of convergence is the right-hand s-plane (σ > 0), as indicated by the shaded region in Figure 2.5.

Figure 2.5: Illustration of ROC for e^{jω0 t}.
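The transform of the complex exponential can be checked by evaluating the defining integral (2.5) numerically at a point inside the region of convergence. The Python sketch below is an illustration only: the midpoint rule, the truncation of the upper limit at `t_end`, and the values ω0 = 2 and s = 1 + 3j are all assumptions chosen for the example.

```python
import cmath


def laplace_numeric(x, s: complex, t_end: float = 40.0, dt: float = 1e-3) -> complex:
    """Midpoint-rule approximation of the integral of x(t) e^{-st} over [0, t_end].

    The lower limit is 0 because x(t) is assumed zero for t < 0; the upper
    limit is truncated, which is acceptable only when Re(s) lies in the ROC.
    """
    n = int(round(t_end / dt))
    total = 0j
    for k in range(n):
        t = (k + 0.5) * dt
        total += x(t) * cmath.exp(-s * t)
    return total * dt


w0 = 2.0                       # assumed value of omega_0


def x(t: float) -> complex:    # e^{j w0 t} for t >= 0
    return cmath.exp(1j * w0 * t)


s = 1.0 + 3.0j                 # sigma = 1 > 0, inside the ROC
approx = laplace_numeric(x, s)
exact = 1.0 / (s - 1j * w0)
print(approx, exact)
```

Choosing s with σ ≤ 0 would make the truncated integral fail to settle as `t_end` grows, which is the numerical counterpart of leaving the ROC.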

The Laplace transform is invertible; the inverse Laplace transform of X(s) is

$$x(t) = \frac{1}{2\pi j}\int_{\sigma - j\infty}^{\sigma + j\infty} X(s)\,e^{st}\,ds. \qquad (2.6)$$

Because of the specific nature of the Laplace transform of linear, constant coefficient differential

equations describing linear time-invariant systems, the Laplace transforms encountered are in the

form of rational functions (ratios of polynomials in s). In these cases we have an easier way of

inverting without having to resort to the contour integral in (2.6).

2.2.1 Properties of the Laplace Transform

Next, we will study several very important properties of the Laplace transform that allow us to

compute Laplace transforms of functions often without resorting to (2.5). Some of these properties

also provide a fundamental insight into the analysis and design of LTI systems.

Linearity

The Laplace transform is linear. To see this, let

$$X_1(s) = L[x_1(t)] = \int_{-\infty}^{\infty} x_1(t)\,e^{-st}\,dt,$$

and

$$X_2(s) = L[x_2(t)] = \int_{-\infty}^{\infty} x_2(t)\,e^{-st}\,dt.$$

Then, the Laplace transform of z(t) = c1 x1(t) + c2 x2(t) is:

$$\begin{aligned}
Z(s) &= L[z(t)] \\
&= \int_{-\infty}^{\infty} [c_1 x_1(t) + c_2 x_2(t)]\,e^{-st}\,dt \\
&= c_1\int_{-\infty}^{\infty} x_1(t)\,e^{-st}\,dt + c_2\int_{-\infty}^{\infty} x_2(t)\,e^{-st}\,dt \\
&= c_1 L[x_1(t)] + c_2 L[x_2(t)],
\end{aligned}$$

and, thus, linearity is established.

Clearly the linearity property is very important as it can be used to break up the Laplace

transform of the linear combination of several functions to a linear combination of the Laplace

transforms of the individual functions. The ROC for the composite function includes at least the intersection of the ROCs for the individual functions.

Time Shift

Let X(s) = L[x(t)]. Then the Laplace transform of x(t − t0 ) is

$$L[x(t - t_0)] = e^{-st_0}X(s).$$

The proof is easily obtained from the definition of the Laplace transform and a simple change

of variables; thus, we have:

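$$\begin{aligned}
L[x(t - t_0)] &= \int_{-\infty}^{\infty} x(t - t_0)\,e^{-st}\,dt \\
&= \int_{-\infty}^{\infty} x(a)\,e^{-s(a + t_0)}\,da \\
&= e^{-st_0}\int_{-\infty}^{\infty} x(a)\,e^{-sa}\,da \\
&= e^{-st_0}X(s),
\end{aligned}$$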

where the second equality is obtained by a change of variables a = t − t0 .

Frequency Shift

Multiplication by e^{at} in the time-domain corresponds to a frequency shift in the frequency domain:

$$L[e^{at}x(t)] = X(s - a).$$

The proof is also easy:

$$\begin{aligned}
L[e^{at}x(t)] &= \int_{-\infty}^{\infty} e^{at}x(t)\,e^{-st}\,dt \\
&= \int_{-\infty}^{\infty} x(t)\,e^{-(s-a)t}\,dt \\
&= X(s - a).
\end{aligned}$$

Time-domain Differentiation

Differentiation in the time-domain corresponds to multiplication by s in the frequency domain:

$$L\left[\frac{dx(t)}{dt}\right] = sX(s).$$

To show this we use (2.6). We have:

$$x(t) = \frac{1}{2\pi j}\int_{\sigma - j\infty}^{\sigma + j\infty} X(s)\,e^{st}\,ds.$$

Taking derivatives with respect to t on both sides, we obtain:

$$\frac{dx(t)}{dt} = \frac{1}{2\pi j}\int_{\sigma - j\infty}^{\sigma + j\infty} sX(s)\,e^{st}\,ds,$$

which indicates that the Laplace transform of dx(t)/dt is sX(s). By inductive reasoning, we conclude that

$$L\left[\frac{d^n x(t)}{dt^n}\right] = s^n X(s).$$

Frequency-domain Differentiation

Multiplication by t in the time-domain corresponds to differentiation in the frequency-domain (with

negation):

$$L[t\,x(t)] = -\frac{dX(s)}{ds}.$$

This is easily seen from the definition of the Laplace transform:

$$X(s) = \int_{-\infty}^{\infty} x(t)\,e^{-st}\,dt.$$

Taking derivatives with respect to s on both sides, we have:

$$\frac{dX(s)}{ds} = -\int_{-\infty}^{\infty} t\,x(t)\,e^{-st}\,dt,$$

from which the property follows.

By induction we can generalize this to

$$L[t^n x(t)] = (-1)^n\frac{d^n X(s)}{ds^n}.$$

Frequency-Domain Integration

$$L\left[\frac{x(t)}{t}\right] = \int_{s}^{\infty} X(a)\,da.$$

To show this property, we have:

L

x(t)

t