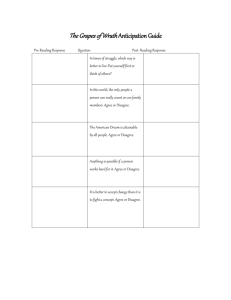

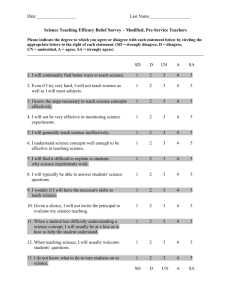

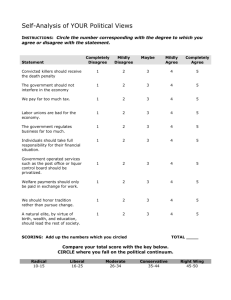

Comparing Questions with Agree/Disagree Response Options to
