INTERDEPENDENCE, INFORMATION PROCESSING

AND ORGANIZATION DESIGN: AN EPISTEMIC PERSPECTIVE

Phanish Puranam

ppuranam@london.edu

London Business School

University of London

Marlo Goetting

mgoetting.phd2007@london.edu

London Business School

University of London

Thorbjorn Knudsen

tok@sam.sdu.dk

Strategic Organization Design Unit

University of S. Denmark

This Draft: Jan 13, 2010

Abstract¹

We develop a novel analytical framework to study epistemic dependence: “who needs

to know what about whom” as a basis for understanding information processing requirements

in organizations, and the resulting implications for organization design. The framework we

develop helps to describe and compare the nature of the underlying coordination problems

generated by different patterns of interdependence, and the resulting knowledge requirements

for the design and implementation of appropriate organizational structures. The framework

offers a formal language that may prove useful for parsimoniously integrating what we know,

as well as for building new theory.

1

The authors thank Yang Fan, Mike Ryall, Mihaela Stan and Bart Vanneste for helpful comments on

earlier drafts. Puranam acknowledges funding from the European Research Council under Grant #

241132 for “The Foundations of Organization Design” project.

1. INTRODUCTION

An extensive literature treats the design and structure of organizations as a response to

the information processing needs generated by interdependence between its constituent

agents (e.g. Simon, 1945; March and Simon, 1958; Lawrence and Lorsch, 1967; Thompson,

1967; Van de Ven, Delbecq, and Koenig, 1976; Galbraith, 1977; Tushman and Nadler, 1978;

Burton and Obel, 1984). The goal of this paper is to offer a novel analytical framework that

can potentially refine and elaborate on the foundational concept of “interdependence” in this

literature. Specifically, we develop an approach to systematically analyze the epistemic

implications of different patterns of interdependence. We explicitly describe the nature of the

underlying coordination problem (Schelling, 1960) generated by different patterns of

interdependence, and the resulting knowledge requirements for the design and

implementation of appropriate organizational structures.² In doing so, we develop a formal

language that may prove useful for parsimoniously integrating what we know, as well as for

building new theory.

Interdependence is a concept that applies at multiple levels of analysis (between

individuals, groups and organizations) but always refers to a relationship between two

decision-making entities. Given two tasks undertaken by actors A and B, there is dependence

of A on B if A’s outcomes depend partly on the actions taken by B. Interdependence exists

when A’s outcomes depend on B’s actions and vice versa. This conceptualization is common

across widely varied literatures. It can be found implicitly in Thompson’s pioneering work on

2

Coordination failures occur when interacting individuals are unable to anticipate each other’s actions and

adjust their own accordingly (Schelling, 1960); in organizations, coordination failures are often manifested as

delay, misunderstanding, poor synchronization and ineffective communication. In contrast, cooperation failures

occur when interdependent individuals are not motivated to achieve the optimal collective outcome because of

conflicting incentives. Coordination failures can occur quite independent of cooperation failures – even when

incentives are fully aligned (Simon, 1947; March and Simon, 1958; Schelling, 1960; Camerer, 2003; Heath and

Staudenmayer, 2000; Grant, 1996; Holmstrom and Roberts, 1998). Cooperation and coordination failures are

therefore individually sufficient reasons for the failure of collaboration.

interdependence between those executing related tasks (Thompson 1967), in the literature on

groups (Kelley and Thibaut, 1978) and relationships between organizational units (Van de

Ven et al., 1976). The same analytical idea also underlies formal models of complementarities

in production functions (Milgrom and Roberts 1990), and epistatic interactions in models of

organizational adaptation on fitness landscapes (Levinthal 1997), though in many

applications in these domains the focus is on interdependent tasks undertaken by a single

decision maker.

Interdependence is a natural foundational construct for analyzing organization design,

which involves two complementary problems: the division of labor and the integration of

effort (March and Simon, 1958; Lawrence and Lorsch, 1967; Burton and Obel, 1984).

Interdependence is the inevitable consequence of the division of labor as it arises between

those who carry out the divided labor. Consequently, much of the literature on formal

organization design rests on the premise that specific patterns of the division of labor give

rise to specific patterns of interdependence, and that efficient organizational forms “solve”

the problems of cooperation and coordination that arise when integrating the efforts of

interdependent actors (Simon, 1945; Thompson, 1967; Lawrence and Lorsch, 1967). For this

idea to have theoretical and empirical traction, the analysis of organization designs must build

on a clear understanding of why certain patterns of interdependence are harder to organize

around than others.

An influential set of answers to this question is rooted in the notion of bounded

rationality (Simon, 1945), with a focus on the costly information processing requirements

generated by interdependence. These are the costs of “communication and decision making”

(Thompson, 1967: 57) or the “gathering, interpreting and synthesis of information in the

context of organizational decision making” (Tushman and Nadler, 1978: 614).³ In this

perspective, distinct patterns of interdependence give rise to different information processing

requirements (Thompson, 1967), and “variations in organizing modes are actually variations

in the capacity of organizations to process information” (Galbraith, 1977: 79). Our approach

lies squarely within this perspective. Our key thesis is that different patterns of division of

labor generate corresponding patterns of interdependence, which in turn imply a particular

distribution of knowledge across actors that is necessary for them to achieve coordinated

action. Thus the nature of interdependence shapes epistemic dependence: “who needs to

know what about whom”. We claim that taking into account the epistemic consequences of

interdependence allows us to establish an improved mapping from types of interdependence

to types of organizational structures, which is the fundamental mapping on which most

descriptive as well as prescriptive principles of organization design in the information

processing perspective are based.

We acknowledge an important alternative tradition that focuses on the challenge of

aligning incentives to explain why certain patterns of interdependence are harder to organize

around. In this perspective, managing interdependence can be complicated when the initial

(pre-design) distribution of gains makes it unlikely that optimal actions will be taken - for

instance, because of gains from free riding, hold-up, or other forms of rent seeking behavior.

This approach to the analysis of interdependence characterizes the extensive literature on

social dilemmas (Kollock, 1998), reward interdependence within teams (Kelley and Thibaut,

1978), principal-agent models with multiple agents (Holmstrom, 1982), and hold-up

problems in transaction cost economics as well as the property rights perspective

(Williamson, 1985; Grossman and Hart, 1986). These diverse accounts from social

3

The term “coordination costs” is also sometimes used to describe these costs. However, this is ambiguous as it

could refer either to the costs of the information processing necessary to generate coordinated action, or the

(opportunity) costs that arise when coordination failures occur.

psychology and economics have in common the notion that the central organizational design

problem involves using incentives (formal or informal) to change the distribution of gains

from managing interdependence in a manner that motivates optimal (if not efficient) actions.

While incentive structure does play an important part in this paper, we are largely

agnostic to the problem of the distribution of gains from managing interdependence, and

focus instead on the epistemic and information processing consequences of interdependence.

A fuller integration between the incentive and information perspectives is doubtless desirable,

but beyond the scope of this paper.

The rest of this paper is organized as follows: The next section discusses Thompson’s

highly influential conceptualization of interdependence and implications for information

processing and organization design (Thompson, 1967), and notes ambiguities within this

framework. We then develop a formal definition of epistemic dependence in section 3, and

discuss its application to deriving implications of any pattern of the division of labor, and the

consequent role of the organization designer in sections 4 and 5. In the discussion section we

show how our approach accommodates a number of traditional organization design topics,

and summarize our key contributions in the conclusion.

2. PRIOR CONCEPTUALIZATIONS OF THE LINKS BETWEEN

INTERDEPENDENCE & INFORMATION PROCESSING

An early conceptualization of the mapping from interdependence to information

processing requirements was presented by Thompson (1967). Thompson’s framework is still

the dominant one for studying interdependence as a basis for understanding organizational

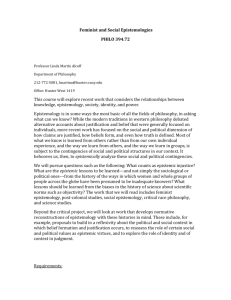

structures. Figure 1 provides some data on the number of papers published by year in five

important management journals (Strategic Management Journal, Administrative Science

Quarterly, Organization Science, Academy of Management Journal and Academy of

Management Review) that (a) cite Thompson’s 1967 book, (b) contain the word

“interdependence”, and (c) contain the words “organization(al) structure” or “organization(al) design”, as a fraction of

the number of papers that satisfied (b) and (c) alone. Given its wide usage and utility, we use

Thompson’s approach to the analysis of interdependence to briefly illustrate what we see as

distinctive about our approach.

---------------------------------
Insert Figure 1 about here
---------------------------------

The central idea that arises from Thompson’s work on interdependence is that

complex forms of interdependence require high levels of costly information processing

activities such as decision making and communication (Galbraith, 1977; Tushman and

Nadler, 1978). In Thompson’s classic typology of interdependence complexity (1967),

interdependence is “pooled” when tasks depend on each other in a simple additive manner;

each task renders a discrete contribution to the whole, and each is supported by the whole.

Interdependence is “sequential” when the outputs of one task form the inputs of the other.

Finally, interdependence is “reciprocal” when the outputs of each task become the inputs of

the other.

Influential contributions by Galbraith, Tushman and Nadler elaborated on the

mapping from increasing interdependence complexity to increasing need for information

processing activities (Galbraith, 1973; Tushman and Nadler, 1978). We note that both

Galbraith’s and Tushman and Nadler’s analyses were clearly epistemic in spirit, because their

emphasis lay in outlining the conditions under which more or less knowledge was to be made

available to interdependent agents through information processing activities. In this respect,

our work directly builds on and extends their contributions. However, we also argue that

Thompson’s interdependence framework is a problematic basis on which to construct a

mapping from the nature of interdependence to the extent of information processing

necessary to manage it. Specifically, we see two important ways in which the framework is

underspecified, which result in ambiguities in its application.

First, Thompson’s framework does not distinguish between the logical sequence of

actions and their temporal sequence. Pooled interdependence involves a situation where each

action makes an independent contribution to the whole; no task provides an input to another.

However, this situation can accommodate variation in the timings of the actions themselves,

which may be sequential or simultaneous. Actions may be taken sequentially, even though

the results of the actions themselves are pooled to create a final output. As we will show, the

timing of actions has critical implications for the epistemic conditions that must hold for

actions to be coordinated. Furthermore, if the time it takes for tasks to be executed is greater

than zero, then a distinct category for “reciprocal interdependence” is unnecessary, as it can

be expressed as a repeated cycle of temporally sequential tasks.

Second, Thompson’s framework is silent about the role of incentives, and therefore

does not account for the entire class of interdependencies induced by incentive structures

(Kelley and Thibaut, 1978; Wageman, 1995). It is worthwhile elaborating on this critique in

some detail to delimit its scope precisely: Thompson’s work, like much of the literature in

classical organization theory (and in contrast to much work in economics) focused on issues

of information and coordination rather than issues of incentive conflict. Our aim is not to

point out that his approach privileges information over incentives; tractability is necessary for

theorizing, and indeed the converse critique could apply to the extensive literature that has

emphasized the incentive consequences of interdependence over coordination issues (also see

Dosi, Levinthal, and Marengo, 2003; Kretschmer and Puranam, 2008). Rather, our point is

that incentive structures themselves can critically impact the epistemic implications of

various patterns of interdependence. For instance, what is required to be known for

coordinated action by two individuals who are rewarded on joint output is not the same as

when they are rewarded on individual output. The complexities of managing the different

patterns of interdependence Thompson analyses could vary vastly as a function of the

incentive structure in place, and it is this missing “moderator” role of incentives that we point

to in this critique.

As will become clear from the next two sections, without knowing the nature of the

incentive structure (individual or joint rewards) and the sequence of actions (sequential or

simultaneous), it is difficult to give a precise specification of the underlying coordination

problem (also see Weber (2004) for an attempt to model each of Thompson’s

interdependence types as a distinct coordination game). Without knowing the nature of the

coordination problem associated with a particular pattern of interdependence, or a means to

compare these underlying coordination problems, it is in turn difficult to rank them in terms

of the need for information processing. Thus, the ambiguities in Thompson’s framework raise

doubts about the ordering of his interdependence categories in terms of the need for

information processing. The approach we introduce in the next two sections avoids these

ambiguities. We are able to specify the underlying coordination problems generated by any

pattern of division of labor, and also compare them in terms of the knowledge requirements

imposed on the relevant actors, using the concept of epistemic dependence.

3. DEFINING EPISTEMIC DEPENDENCE

To define epistemic dependence, consider a simple organization comprising multiple agents,

in which each agent i undertakes costly action $a_i$ and receives performance-based

compensation. The agent’s utility is given by $u_i = \pi_i(a_i, \psi_i) - c_i(a_i, \xi_i)$, where $c_i$ is the

agent’s cost of action, $\pi_i$ is compensation, and $\psi_i$ and $\xi_i$ are vectors of exogenous

parameters. Total utility for all agents in the system is thus given by $U = \sum_i u_i$. The

functional form of the agent’s objective function is defined as $o_i = [\pi_i(a_i, \psi_i) - c_i(a_i, \xi_i);\ \Omega_i]$,

where $\Omega_i$ represents any endogenous constraints faced by the agent as part of his objective

function. These could include internalized norms or habits of behavior, for instance.

Assumption 1: For total utility of the organization to be maximized, for every agent i

it is necessary that $K_i\{o_i\}$.

The “knows that” operator $K_i\{\cdot\}$ from modal (epistemic) logic can be read as “agent i knows

$\{\cdot\}$”. This assumption makes explicit the notion that each agent is a decision maker (rather

than an automaton), who acts on the basis of her own objective function, which may include

constraints in the form of habits or norms for behavior in a particular context.

Assumption 2: For agent i to accurately predict agent j’s actions, it is necessary that

$K_i\{o_j\}$.

The second assumption is not innocuous. It rules out, for instance, the use of a history of prior

observations that may enable an accurate prediction of another’s actions (or at least a history

that would be sufficient to generate an accurate prediction). It also rules out the existence of

signals that allow infallible predictions about actions. This leaves knowledge of an agent’s

objective function as the only means by which an accurate prediction of that agent’s actions

can be made.

Definition: Given assumptions 1 & 2, if agent i maximizes his own utility only by

acting on the basis of an accurate prediction of agent j’s action, then i is

epistemically dependent on j.

We say there is epistemic dependence between agent i and j under these conditions because it

follows automatically that under assumptions 1 & 2 for total utility to be maximized, it is

necessary that $K_{i,j}\{o_j\}$, i.e. agent j’s objective function must be shared knowledge between

agent i and j. To see this, consider that for total utility to be maximized, agent j must know

her own objective function, $K_j\{o_j\}$ (Assumption 1). If i maximizes his own utility by acting

on the basis of an accurate prediction of j’s action then it is necessary that $K_i\{o_j\}$

(Assumption 2). Therefore for total utility to be maximized, it is necessary

that $K_i\{o_j\} \wedge K_j\{o_j\} \equiv K_{i,j}\{o_j\}$.

The notion of epistemic dependence helps to sharpen our discussion of coordination

problems in two important ways. First, we may say that whenever one agent’s utility is

maximized by acting on an accurate prediction of another agent’s actions, there is a

(potential) coordination problem.⁴ More generally, coordination problems in “real time”

settings (e.g. surgical teams, fire-fighters) as well as in “realistic time” settings (new product

development, strategic alliances) are fundamentally identical. Any setting in which actions

are unobservable (because they take place simultaneously, because of

communication or information transmission constraints, or because they have not

happened yet) but must be predicted can be modeled as a coordination problem.

Communication itself can be seen as a coordination problem, as indeed the modern view of

linguistics sees it: when communicating, I need to predict which among several possible

meanings you chose to attach to the word you use (e.g. Clark, 1996).

A coordination failure thus is fundamentally a failure to predict the actions of another

in situations where such a prediction is essential for optimal action by oneself. It also follows

that whenever there is epistemic dependence, there must be a coordination problem, but the

converse is not true. For instance, the need to predict others’ actions in order to take one’s

own optimal actions may exist even in situations where Assumption 2 is not valid, where

norms, precedents, conventions and signals exist to help make this prediction. Since there is

no epistemic dependence unless assumption 2 holds, it is clear that an interesting set of

4

Thus any game where at least one agent does not have a dominant strategy features a coordination problem.

questions arise about the circumstances under which prior history can serve as a basis for

predicting actions, or a system of signals can arise that makes such predictions possible.

However, we defer these issues for future work and focus on situations in which assumption

2 does hold.

Second, we note that there is bound to be a coordination failure if epistemic

dependence exists and the shared knowledge condition (i.e. the objective function of the

agent whose action is being predicted is known to itself as well as the predictor) is not met.

On the other hand, because the shared knowledge condition is necessary but not sufficient, one

could also have a coordination failure when (given epistemic dependence) the shared

knowledge condition is met, but higher order shared knowledge is not present, that is, common

knowledge may not exist (see Appendix A for a formal definition of shared knowledge of any

order).

For instance, consider the case of epistemic interdependence, which is a small

conceptual step from epistemic dependence: If agent i is epistemically dependent on agent j,

and agent j is epistemically dependent on agent i, then there is epistemic interdependence

between agents i and j. Therefore each must know whatever the other must know in order to

act, so that the objective function of each must be shared knowledge between them. However,

shared knowledge of first order (both know it) may not be sufficient: unless both know that

both know, and both know that both know that both know (and so on), there may be residual

doubt in the mind of each agent about the action of the other. This residual doubt is in

principle fully eliminated only with shared knowledge of infinite order, which is common

knowledge. If we assume that residual doubt must disappear for coordinated action to take

place, then common knowledge is necessary; if some residual doubt is tolerable (because of

the relative costs of foregone coordination opportunities vis-à-vis failed attempts at

coordination), then only shared knowledge of finite order may be necessary. However, in

either case, shared knowledge of first order is always necessary.
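
The hierarchy of shared knowledge described above can be written compactly with the standard iterated "everyone knows" construction from epistemic logic. This is a sketch only: the superscript notation $K^{(k)}_{i,j}$ and the symbol $C_{i,j}$ are our assumptions for illustration and may differ from the formal definition given in Appendix A. Here $\varphi$ stands for any knowledge element, such as $o_j$.

```latex
\begin{align*}
K^{(1)}_{i,j}\{\varphi\}   &\equiv K_i\{\varphi\} \wedge K_j\{\varphi\}
  && \text{(first order: both know } \varphi\text{)} \\
K^{(k+1)}_{i,j}\{\varphi\} &\equiv K^{(1)}_{i,j}\bigl\{ K^{(k)}_{i,j}\{\varphi\} \bigr\}, \quad k \geq 1
  && \text{(both know that order } k \text{ holds)} \\
C_{i,j}\{\varphi\}         &\equiv \bigwedge_{k \geq 1} K^{(k)}_{i,j}\{\varphi\}
  && \text{(common knowledge: every finite order holds)}
\end{align*}
```

Under this notation, $K^{(1)}_{i,j}\{o_j\}$ coincides with the shared knowledge condition $K_{i,j}\{o_j\}$ derived in section 3, and tolerating some residual doubt corresponds to requiring $K^{(k)}_{i,j}$ only up to some finite $k$.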

4. THE DIVISION OF LABOR AND EPISTEMIC DEPENDENCE

Having defined epistemic dependence, we next provide a framework within which to

map different ways to divide labor to the resulting epistemic implications. To represent the

division of labor, we utilize the notion of a Task Architecture (denoted by A): a configuration

of tasks performed by agents who are measured and rewarded in certain ways. Task

Architectures capture the nature of the division of labor, which we define as the result of a

process of decomposing a single task performed by a single agent, into multiple tasks

performed by multiple agents. A task may be thought of as a production technology: it is a

transformation of inputs into outputs in a finite (non-zero) time period. The inputs are broadly

of two kinds: 1) actions taken by agents (always necessary: if there is no action as an input to

a task, only the output of a previous task, then the task is indistinguishable from the previous

task), and 2) the outputs of other tasks.⁵ In the basic formulation of our framework, each

agent is assigned a single task, though it is easy to relax this assumption.

The fundamental building block of a Task Architecture is the task dyad – a pair of

tasks i and j conducted by a pair of agents. More complicated structures are built through

scaling (e.g. from dyad to triad), delegation (hierarchical decomposition of tasks) and

recursion (e.g. a dyad in which each task is itself a dyad of tasks; see Appendix B).

4.1 Dimensions of Task Architecture

5

One may also consider Resources to be another kind of input. A Resource is an input that cannot itself be

viewed as the output of any other task within the Task Architecture being analysed.

Task architecture can be described along two basic dimensions, which capture the

nature of 1) task sequencing and 2) incentive breadth.

1. Task sequencing (S): This refers to the timing of actions by the agents. For instance, in a dyad of tasks, the two agents may act sequentially (q) or simultaneously (u). Note that “simultaneous” need not actually mean synchronized actions by the agents; as long as each did not know the actions/outputs of the other agent when they acted, their actions are effectively simultaneous. Conversely, sequential action is not restricted to cases where the output of the first task is strictly necessary for the second task, but refers more generally to the case of one agent acting only after the action/output of the other agent has become visible.

2. Incentive Breadth ($\pi$): In a dyad, narrow incentives correspond to individual incentives, whereas broad incentives correspond to dyad-level incentives (e.g. Kretschmer and Puranam, 2008). More generally, the incentives for unit i (where i may be an individual agent, group of agents, group of groups, etc.) are narrow if performance is measured at the same level of aggregation as i, and broad if performance is measured at the next higher level of aggregation.

To illustrate our notation for capturing the nature of a simple Task Architecture

comprising a single task dyad, we could write $A: \{S(q) \mid \pi_1, \pi_2\}$ to denote sequential actions,

with narrow incentives for agent 1 and agent 2.

We take the perspective of a Designer who can make choices about one or both

dimensions of Task Architecture- sequencing and incentive breadth. As before, we assume

that the agent maximizes his utility given by $u_i = \pi_i(a_i, \psi_i) - c_i(a_i, \xi_i)$, subject to

constraint $\Omega_i$. The designer maximizes utility from the final output of the Task Architecture

net of incentive compensation paid out, $U_d = \Pi_A - \sum_i \pi_i$. Total utility is thus given

by $U = U_d + \sum_i u_i$.

In an analysis focusing on incentive considerations, we would now proceed to find the

optimal incentive structure a designer would set (as in standard principal agent models)

subject to some participation constraint for the agents; however, our goal is to explore the

epistemic requirements necessary to maximize total utility. In other words, we aim to specify

the distribution of knowledge across agents that would be necessary to enable maximization

of the sum of the agents’ and designer’s utilities, i.e. total utility. To do so, corresponding to

every Task Architecture A we show how to construct an Epistemic Structure E, which

captures “who needs to know what about whom” for total utility to be maximized. E thus

describes the necessary epistemic conditions for total utility to be maximized for a given A.

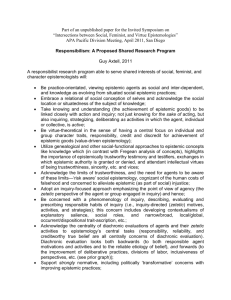

In all there are 4 ($2^2$) possible variants on task architecture for a single task dyad. The

corresponding epistemic structures are shown in Figure 2. A “1” in a cell indicates that the

column actor needs to know the row knowledge element for total utility to be maximized. The

task architectures in which there is epistemic dependence between agents (i.e. off-diagonals

are non zero in the matrices in E) are those, by definition, in which one agent must act on the

basis of a prediction of the acts of another. This situation arises under the conjunction of two

conditions: a) agent i faces broad incentives, where the returns to agent i’s actions

depend at least partly on j’s actions so that j’s actions feature as part of the vectors $\psi_i$, $\xi_i$; and

b) i is unaware of j’s action at the time of acting, which could be the case if i and j act

simultaneously, or i acts sequentially before j.

Consider the case where the two agents have broad incentives and act simultaneously. In

effect, both agents act before knowing the actions/outputs of the other, so that each is

epistemically dependent on the other. Therefore each must know whatever the other must

know in order to act, so that the objective function of each must be shared knowledge

between them. In contrast, consider the case of sequential action with broad incentives for (at

least) the first agent. There is an asymmetry that is immediately visible; the first mover needs

to know whatever the second mover needs to know in order to act but the converse is not

true.⁶ Here, there is only epistemic dependence but not epistemic interdependence.

Put simply, epistemic dependence between agents in an interdependent dyad arises

only when broad incentives are used; and given broad incentives, epistemic dependence is

greater for simultaneous than for sequential actions. In other dyadic task architectures, there

is no epistemic dependence between agents. In Appendix B, we show how epistemic

dependence can be represented for task architectures beyond the simple dyad.
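
The two conditions for epistemic dependence in a dyad can be turned into a small constructive rule. The following Python sketch is illustrative only: the function name `epistemic_structure`, the string labels, and the convention that agent 1 moves first under sequential action are our assumptions, and the matrices it produces follow from the conditions stated in the text rather than being copied from Figure 2.

```python
def epistemic_structure(sequencing, incentives):
    """Build a 2x2 epistemic structure E for a single task dyad.

    sequencing: "q" (sequential; agent 1 is assumed to move first) or "u" (simultaneous).
    incentives: pair of "narrow" / "broad" labels for agents 1 and 2.
    E[row][col] == 1 means the column agent must know the row agent's
    objective function for total utility to be maximized.
    """
    n = 2
    # Assumption 1: every agent must know its own objective function.
    E = [[1 if row == col else 0 for col in range(n)] for row in range(n)]
    for i, j in ((0, 1), (1, 0)):
        # Condition (a): agent i faces broad incentives.
        broad = incentives[i] == "broad"
        # Condition (b): i cannot observe j's action when acting, either because
        # they act simultaneously or because i moves first.
        unaware = sequencing == "u" or (sequencing == "q" and i == 0)
        if broad and unaware:
            E[j][i] = 1  # i is epistemically dependent on j
    return E


# The four dyadic variants: sequencing x incentive breadth (same breadth for both agents).
variants = {
    (s, b): epistemic_structure(s, (b, b))
    for s in ("q", "u")
    for b in ("narrow", "broad")
}
```

In this sketch, only the two broad-incentive variants have non-zero off-diagonal entries, and the simultaneous case has two of them while the sequential case has one, matching the ordering described in the text.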

---------------------------------
Insert Figure 2 about here
---------------------------------

4.2 From Epistemic Structures (E) to Information Structures ($I_t$)

While the epistemic structure (E) gives the necessary knowledge conditions to maximize

total utility, in any given task architecture, the agents may not possess such knowledge. The

Information Structure ($I_t$) captures their actual state of knowledge at any point in time. The

Information Structure has exactly the same form as the matrix representing E. However, the

entries in the cells now capture the probability that the (column) agent knows the (row)

knowledge element.

4.3 The Information Processing Implications of Epistemic and Information Structures

6

Readers familiar with game theory will recognize the well known principle of “backward induction”

In order to compare Task Architectures in terms of the relative costliness of

information processing required to maximize total utility in each, we define a few useful

metrics on any E and $I_t$:

Epistemic Load (EL) of the task architecture is simply the number of

positive elements in E.⁷ To the extent that memory is expensive, or every piece of

knowledge must be communicated to agents, EL is one way to capture the magnitude of

information processing costs in any task architecture.

Epistemic Dependence (ED) is measured as the sum of all off-diagonal elements in E.

The diagonal elements in E⁸ tell us whether the agent needs to know the relevant knowledge

item about himself, whereas off-diagonal elements tell us what agent i needs to know about

other agents in the system. If knowledge about an agent is typically private, and expensive to

communicate or be learnt by another agent, then ED is another measure of information

processing costs which focuses on the cost of communication among interdependent agents in

order to create sufficient epistemic conditions for coordinated activity. A visual

representation of ED also shows us the structure of the network linking agents who need to

communicate.
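
As a worked illustration of the two metrics, the following Python sketch computes EL and ED for two example dyadic epistemic structures. The function names and the example matrices are our assumptions: the first matrix corresponds to the textual description of simultaneous action under broad incentives (both agents dependent on each other), the second to sequential action under broad incentives (only the first mover dependent).

```python
def epistemic_load(E):
    """EL: the number of positive elements in E."""
    return sum(1 for row in E for e in row if e > 0)


def epistemic_dependence(E):
    """ED: the sum of all off-diagonal elements in E."""
    return sum(e for r, row in enumerate(E) for c, e in enumerate(row) if r != c)


def trace(E):
    """Sum of the diagonal elements of a square matrix."""
    return sum(E[k][k] for k in range(len(E)))


# Hypothetical example structures (rows: knowledge elements, columns: agents).
E_simultaneous_broad = [[1, 1],
                        [1, 1]]
E_sequential_broad = [[1, 0],
                      [1, 1]]

for E in (E_simultaneous_broad, E_sequential_broad):
    el = epistemic_load(E)
    ed = epistemic_dependence(E)
    # For a 0/1 matrix, ED equals EL minus the trace of E.
    assert ed == el - trace(E)
```

For these two examples, EL is 4 and 3 and ED is 2 and 1 respectively, so both metrics rank the simultaneous architecture as the more demanding one.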

It is worth noting here that in our framework we presume that the division of labor

always involves some degree of specialization, i.e. that the tasks are qualitatively different in

a task architecture. However, this need not be so, as one can also see division of labor with

homogeneous efforts (arising from individual effort constraints), for instance a group of

workers trying to move furniture. The major difference in the case of division of labor

7

Formally, $EL = \sum_{i=1}^{n} \sum_{j=1}^{n} \delta(e_{ij})$, where $\delta(e_{ij})$ takes on a value of 1 if $e_{ij}$ is positive and 0 otherwise.

8

Formally, $ED = EL - \mathrm{tr}(E)$.

without specialization lies in the lower cost of generating shared knowledge to cope with

epistemic dependence. The nature of the information processing problem in the first case

(with specialization) compared to the latter (without specialization) is fundamentally different:

without specialization both agents hold the same general knowledge, whereas with

specialization each agent has task-specific knowledge. The cost of creating shared

knowledge about each other’s objective functions and constraints, when it is required to be

shared, should be higher with specialization than without. Thus we can expect that the

information processing costs for a given level of epistemic dependence are higher with

specialization than without.

Reliability (ρ). We can combine information from E and It to calculate the reliability

of any task architecture: first create a new square matrix from the element by element

multiplication of E and It and then multiply all the non-zero elements of the resulting matrix

together to yield a scalar in the range [0, 1]. Reliability captures the joint probability that all

agents know everything they need to for total utility to be maximized. Reliability as defined

here provides a precise way of assessing the extent of task uncertainty, which Galbraith defines as “the

difference between the information required to perform a task and the information already

possessed” (1973: 5).
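A minimal sketch of the reliability calculation follows. It assumes E holds 0/1 requirements and It holds the probability that each item is known; the function name and the numbers are hypothetical. The sketch multiplies the probabilities of every required cell, which is the joint-probability reading of the definition. It coincides with multiplying the non-zero cells of the element-wise product whenever every required item is known with positive probability.

```python
def reliability(E, I):
    """Joint probability that every item required by E is known, given I."""
    rho = 1.0
    for row_e, row_i in zip(E, I):
        for e, p in zip(row_e, row_i):
            if e > 0:      # only required items matter
                rho *= p   # p = probability that the item is known
    return rho


# Hypothetical dyad with three required items.
E = [[1, 0],
     [1, 1]]
I = [[1.0, 0.0],
     [0.8, 0.5]]

print(round(reliability(E, I), 3))  # 0.4
```

A value of 1 means the agents already know everything E requires; lower values indicate more information processing still to be done.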

Epistemic Load and Epistemic Dependence offer two precise ways in which we can

assess the de novo information processing costs of any task architecture. Epistemic Load

includes both the agent’s knowledge of own objective function and constraints as well as

Epistemic Dependence with other agents. If we assume that knowing one’s own objective

function and constraints is costless, then Epistemic Dependence may be a better measure of

information processing costs; else we may use the more comprehensive Epistemic Load

measure. In any case, EL= ED + the number of agents in the task architecture. However, both

these measures assume that agents have no prior knowledge. In contrast, Reliability, which


suggests a performance metric for any task architecture (given the epistemic and information

structures) may also be used to assess the extent of information processing necessary given

what the agents know. Put simply, the lower the reliability, the higher the information

processing costs required to ensure that It = E.

5. THE ROLE OF THE DESIGNER

By analyzing task architectures in terms of epistemic dependence between agents, it is

also possible to conceptualize the role of a designer in epistemic terms. We assume that the

goal of the designer is to maximize reliability of the task architecture at minimal information

processing cost. Broadly, the designer can do this in two ways: first, by shaping the task

architecture in a manner that minimizes the epistemic burden on the agents; second, in

creating communication channels between agents who are epistemically dependent so that the

shared knowledge conditions are met through communication between agents. These

correspond closely to the classical distinction drawn between coordination through

programming and feedback in organizations (March and Simon, 1958). Our discussion below

shows the compatibility between the classic conceptualizations of coordination modes and

the notion of epistemic dependence, but also goes a step further: it points to the epistemic

conditions for the designer to be able to adopt either mode of coordination.

5.1. Design as Programming: Shaping task architecture

In this mode of coordination, the designer’s role consists of creating plans, schedules

and programs to enable integration of effort without the need for communication among

agents (March and Simon, 1958). According to March and Simon, “[t]he type of coordination


(...) used in the organization is a function of the extent to which the situation is standardized”,

and hence, “[t]he more stable and predictable the situation, the greater the reliance on

coordination by plan.” (1958: 182).

In epistemic terms, we may think of this role of the designer as involving the selection

of a task architecture A in a manner that minimizes the epistemic burden on the agents. We

know that epistemic dependence is lowest in task architectures characterized by narrow

incentives, followed by broad incentives with sequential actions, and highest in broad

incentive structures with simultaneous actions (see Figure 2). Therefore, we need to

understand under what conditions a designer can impose a narrow incentive structure or at

least sequence actions.

We argue that knowing what outputs to expect from each task and the logical

sequencing of tasks constitute a form of architectural knowledge (Henderson and Clark,

1990; von Hippel, 1990; Baldwin and Clark, 2000). This comprises knowledge about task

decomposition and task integration. Specifically, the designer must know the different tasks

and the order in which they must be performed for the final system level output to be

produced. Having architectural knowledge thus implies knowing what outputs to expect from

each task in the entire task architecture. Architectural knowledge is therefore required if the

designer wants to implement a narrow incentive structure or sequence actions. Conversely, in

the absence of architectural knowledge, the task architecture will admit broad incentives with

simultaneous sequencing of action only. This task architecture generates the highest level of

epistemic dependence between agents. Limited architectural knowledge thereby results in

coarser partitioning of sub-tasks. The key point here is that the architectural knowledge of the

designer helps to reduce epistemic dependence between agents.
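The ordering of task architectures by epistemic dependence can be checked with a short sketch. The four dyadic matrices below are our reading of Figure 2 (rows are knowledge elements, columns are the agents who need them); the dictionary keys and function names are hypothetical labels introduced for illustration.

```python
# Dyadic epistemic structures, as read from Figure 2 (an assumption about layout).
ARCHITECTURES = {
    "narrow, simultaneous": [[1, 0], [0, 1]],
    "narrow, sequential":   [[1, 0], [0, 1]],
    "broad, sequential":    [[1, 0], [1, 1]],
    "broad, simultaneous":  [[1, 1], [1, 1]],
}


def epistemic_dependence(E):
    """Sum of the off-diagonal entries of E."""
    return sum(e for i, row in enumerate(E) for j, e in enumerate(row) if i != j)


def rank_by_dependence(architectures):
    """Architecture names ordered from lowest to highest epistemic dependence."""
    return sorted(architectures, key=lambda name: epistemic_dependence(architectures[name]))


for name in rank_by_dependence(ARCHITECTURES):
    print(name, epistemic_dependence(ARCHITECTURES[name]))
```

A designer with enough architectural knowledge can pick from the top of this ranking; without it, only the last entry is available.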

Further, the designer can also influence the epistemic load of the agents in terms of

what they need to know about themselves, not only about others (i.e. epistemic dependence).


If the designer possesses knowledge of the production technology used by the agents- the

knowledge required at the task level to convert actions (and inputs) to outputs, then the agents

need not know their own production technologies, because the designer can set incentives

based on actions rather than outputs. Thus, being paid on output implies a higher epistemic

requirement for the agent- who must know his own production technology. The corollary is

that if the agent’s incentive pay is based on his actions, then the agent need not know his own

production technology. The epistemic requirement is lowered because the agent’s objective

function becomes simpler. This is an important epistemic distinction between output vs.

behavior based contracts, and also points to the conditions under which a designer can

impose a high degree of formalization (i.e. action based contracts for agents) (Galbraith,

1973). Thus, whether programming takes the form of designing task architectures with

narrow incentives or action based incentives- which is after all what plans, schedules and

programs are- viewed from the epistemic perspective, its function is to exploit the knowledge

of the designer to minimize the epistemic burden on the agents.

An equally important insight is that designers with different levels of knowledge may

well choose different task architectures for the same set of tasks. For instance, consider a

task dyad assigned to each of two pairs of agents, {1,2} and {3,4}, each with its own designer, D1 and

D2. D1 may schedule the tasks simultaneously, while D2 may do so sequentially. We know

from Figure 2 that if both designers use narrow incentives, then in fact the two pairs of agents

have identical knowledge requirements. However, even if D1 used broad incentives, D2

could still plausibly use narrow incentives as long as he knows what to measure- in other

words, has the requisite architectural knowledge. Similarly, if {1,2} knew their production

technologies they could work to an output based measurement, while {3,4} who are ignorant

about the relevant production technology may be measured on their actions.


By explicitly allowing the Designer to modify A (task architecture) we are clearly

eschewing the strong form of technological determinism- where there is only one “true”

underlying task architecture. Indeed, with a boundedly rational designer, it appears to us that at

least the strongest forms of technological determinism are impossible to sustain, as the

Designer’s choices about A can only reflect his understanding at a point in time. Different

Designers with different levels of knowledge may therefore enforce quite different A for the

same basic set of tasks. Conversely, with sufficient knowledge (almost) any task architecture

is feasible.9

5.2. Design as Feedback: Shaping Organizational Architecture

Programming is feasible only with high levels of architectural knowledge. If such

knowledge is not available to the designer, an alternative may be to let the agents

communicate among themselves in order to create the shared knowledge made necessary by

epistemic dependence. Even in this case, the designer must possess some level of

architectural knowledge- for instance, he must know who is epistemically dependent with

whom- but not enough to be able to appropriately redefine the epistemic structure. Instead,

the designer can make the knowledge specified in E available to agents by creating

appropriate channels for the agents to communicate directly with each other (enabling

feedback). According to March and Simon coordination by feedback becomes necessary “[t]o

the extent that contingencies arise, not anticipated in the schedule, (...) [which] requires

9

It is even possible to imagine adopting simultaneous scheduling for tasks that appear to have a logical

sequence to them (task 2 depends on the output of task 1 as an input, for instance). As long as both agents in the

dyad meet the knowledge requirements imposed by such a high level of epistemic dependence -the downstream

agent would literally have to predict the upstream agent’s output before it was delivered, and the upstream agent

would have to predict the ability of the downstream agent to do this etc.- the task architecture is still technically

feasible (consider, for instance attempts at concurrent engineering in software development). However, a caveat

here is that no physical good is necessary from upstream to downstream for downstream to start working- all

that is required is a prediction of what the output will be.


communication to give notice of deviations from planned or predicted conditions, or to give

instructions for changes in activity to adjust to these deviations.” (1958: 182) The marked

distinction between coordination by plans and coordination by feedback is that the former is

based on pre-established schedules, whereas the latter “involves transmission of new

information” (1958: 182).

In prior literature, grouping and linking have been considered as the two basic ways in

which communication between agents can be induced (Nadler and Tushman, 1997).

Grouping constitutes placing activities that need to be tightly coordinated within common

organizational boundaries; linking refers to the creation of channels of communication and

influence that link activities in different groupings. Grouping and linking jointly describe the

organizational architecture. Specifically, the grouping decision leads naturally to a

consideration of organization structure- as grouping results in sub-units within the

organization. 10

Coordinating interdependent activities through grouping therefore implies the

creation of an organizational architecture that eases communication between epistemically

dependent agents. The distinction between programming and feedback thus corresponds to an

important but often ignored distinction between task architecture and organizational

architecture. Put simply, designers shape organizational structures when they cannot shape

task architectures; the latter requires the designer to possess a higher level of architectural

knowledge.

6. DISCUSSION: IMPLICATIONS FOR ORGANIZATION DESIGN

10

Two assumptions are implicit in the discussion of grouping: a) communication is easier within rather than

between groups b) communication efficiency declines with group size. Both assumptions are necessary and

jointly sufficient to explain the presence of organizational sub-units.


We now show how the approach we have developed can be used to state more

precisely some well known ideas in the literature on interdependence, information processing

and organization design. Our coverage of topics cannot be exhaustive, but we will select

some examples with the idea of demonstrating the generative powers of the language we have

constructed. We limit ourselves to a discussion of the underlying theoretical mechanisms in

terms of the simple interdependent dyad – interested readers will find details of how to scale

up to arbitrarily large sized organizations with both vertical and horizontal differentiation in

Appendix B.

6.1. The Mapping Between Interdependence and Information Processing

The central purpose of this paper was to develop an improved mapping between

interdependence and the resulting need for information processing. In the task dyad, our

analysis makes clear that four task architectures are feasible (Figure 2). Further, the lowest

epistemic load (EL) and epistemic dependence (ED) occur with narrow incentives, while

maximum EL and ED occurs with broad incentives and simultaneous moves. The task

architecture with broad incentives and sequential moves is intermediate between these two

extreme cases. Thus in the absence of prior knowledge (i.e. It=0), this ordering also captures

the extent of information processing required. However, as our analysis makes clear, if the

“blank slate” condition does not hold and It ∫0, then this ordering need not hold. To see, this

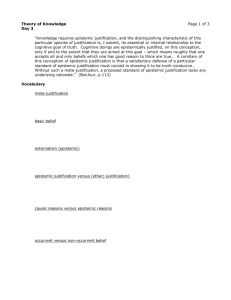

consider Figure 3 below, which shows epistemic structures E1-E3 as well as associated

information structures I1-I3 for three dyadic task architectures.

---------------------------------

Insert Figure 3 about here

---------------------------------

It is obvious in Figure 3 that while EL(E1)>EL(E2)>EL(E3), it is also true that

ρ 1> ρ 2> ρ 3. If the agents in these task architectures all began from blank slate conditions


with no prior knowledge (I1=I2=I3=0), we would have no hesitation in saying that

information processing costs would be highest in the first case and lowest in the last.

However, if the information structures are as shown in the figure, then in fact information

processing costs (measured as a decreasing function of reliability) would be highest for the

last case and lowest in the first. Put simply the history of a task architecture, which in turn

may influence the current knowledge states of the agents (i.e. their information structures),

cannot be ignored in assessing its information processing implications.
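The comparison can be made explicit with the values reported in Figure 3. The arithmetic below assumes the figure's entries are read in groups of four per matrix, so that E1 has four required items, E2 three and E3 two, each multiplied by the matching entry of I1, I2 and I3.

```latex
\begin{aligned}
EL(E_1) &= 4, & \rho_1 &= 1 \times 0.9 \times 0.9 \times 1 = 0.81,\\
EL(E_2) &= 3, & \rho_2 &= 1 \times 0.7 \times 1 = 0.70,\\
EL(E_3) &= 2, & \rho_3 &= 0.5 \times 0.5 = 0.25.
\end{aligned}
```

Under this reading, the architecture with the highest epistemic load is also the most reliable, because its agents already know most of what they need.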

How do our conclusions compare with received wisdom? We have already discussed

Thompson’s (1967) conceptualization of interdependence into pooled, sequential and

reciprocal categories extensively. In terms of our framework, Thompson’s rank ordering of

sequential interdependence as higher than pooled interdependence in terms of information

processing requirements can be justified only in special cases in our framework. If we assume

that pooled interdependence (“when tasks depend on each other in a simple additive manner;

each task renders a discrete contribution to the whole, and each is supported by the whole”

pp. 54, 64) is associated with a narrow incentive structure and simultaneous task scheduling,

whereas sequential interdependence implies broad incentives with sequential actions, then

Thompson’s ranking holds. Unless measured in this way, we would expect to see little if any

empirical support for Thompson’s ordering in terms of information processing needs.

As we have noted, Thompson’s notion of reciprocal interdependence (“when the

outputs of each task become the inputs of the other” –p.55) is not logically definable at a

point in time (because time to perform a task cannot be zero). However, it might well capture

repeated instances of sequential task scheduling (i.e. task A feeds into task B, which then

feeds into task A, which then feeds into task B etc.). Yet if the nature of the interaction does

not change by period, then it is still not clear why this should necessarily generate higher

information processing needs than pooled or sequential interdependence. There is also a


second possibility- perhaps reciprocal interdependence points to the need for repeated

interaction because the agents lack knowledge of E or its elements. By interacting repeatedly,

they may build such knowledge through communication.

It is thus useful to look closely at cases of reciprocal interdependence to distinguish a)

a pair of sequential architecture tasks that reverse direction after every period, but in which

the relevant Epistemic Structures are fully known, from b) repeated interactions in a trial and

error learning process meant to overcome uncertainty or ignorance about E. If the latter case

applies, then this is better viewed as an organizational architecture meant to create feedback

(i.e. communication between agents) rather than as a form of task architecture. But in either

case, we would not expect strong evidence to support the idea that reciprocal interdependence

must generate higher information processing needs than the other two categories of

interdependence. More generally, in the absence of the blank slate condition (i.e. It=0), it is

hard to see why we should expect any evidence for the increasing information processing

costs of pooled, sequential, and reciprocal interdependence.

Van de Ven et al. (1976) extended Thompson’s framework by adding an additional

category of interdependence that they called “team”. In their own words: “Team work flow

refers to situations where the work is undertaken jointly by unit personnel who diagnose,

problem-solve and collaborate in order to complete the work. In team work flow, there is no

measurable temporal lapse in the flow of work between unit members, as there is in the

sequential and reciprocal cases; the work is acted upon jointly and simultaneously by unit

personnel at the same point in time.” (p. 325) The authors conceptualize this fourth category

to stand at the top of Thompson’s Guttman scale of task interdependence, requiring the most

complex (group) coordination mechanisms. Within our framework, it is easy to see that team

interdependence can be modeled as simultaneous scheduling with broad incentives. It would

then generate the highest values of epistemic load and dependence and would indeed describe


a situation with the highest possible information processing costs, provided the blank slate

assumption is met.

Adler (1995) explicitly considered differences in situations where the actors in a task

architecture begin from a relatively more or less knowledgeable state. Adler applied

Thompson’s concept of task interdependence without modification; however, he

distinguished between two types of uncertainty, namely the degree of fit novelty and the

degree of fit analyzability. “The fit novelty of a project can be defined as the number of

exceptions with respect to the organization’s experience of product/process fit problems.”

(1995: 157) On the other hand, “[f]it analyzability can be defined (following Perrow (1967))

as the difficulty of the search for an acceptable solution to a given fit problem.” (1995: 158).

Within our framework, these situations correspond to the absence of architectural knowledge

on the part of the designer and/or low reliability structures (fit novelty), and difficulties in

building the relevant knowledge (fit analyzability). Adler’s approach thus strongly aligns

with our own conclusions that information processing requirements must depend not only on

the nature of the task architecture (and resulting epistemic structure) but also existing

knowledge endowments of the actors involved. Empirical measures of interdependence are

likely to be useful only to the extent both these aspects are accounted for.

6.2 Span of Control, Delegation and the Depth of the Hierarchy

The epistemic perspective offers us a clear distinction between the role of design vs.

management: while the former involves manipulations to task architecture (programming) or

organizational architecture (feedback) in an effort to enhance reliability at minimal

information cost, the latter requires managing the exceptions that arise because of

inadequacies of design (Galbraith, 1973). This calls for a view of hierarchical supervision as

exception management rather than as simply telling the agents what to do. This is because if


the designer was sufficiently endowed with architectural knowledge to be able to simply

specify action based contracts (i.e. telling agents what to do) – then this is by definition

simply a form of coordination by programming. Whether the instructions on what to do are

delivered in written plans or periodic oral communication does not appear to us to be a

critical distinction. On the other hand, exception management by definition cannot be

conducted by plans or schedules (else they would not qualify as exceptions)- indicating this is

a distinct task for managers in addition to the task of designing. Of course, managers in an

organization may possess both design and exception management responsibilities for their

immediate subordinates or only the latter (also see Appendix B for how to model

centralization vs. delegation of design).

This role of managers in exception management provides a natural basis for limits to

the span of control (Galbraith, 1973).

Imperfect architectural knowledge should imply the

need for exception management, and the greater the number of subordinates, the greater the

efforts needed for exception management. If there is a finite limit to the exception

management capacity of a manager, then there is a finite span of control. This in turn

influences the need for delegation, as well as the number of hierarchical layers for any given

total size of the organization. Thus, we should expect that combinations of limited

architectural knowledge and imperfect information structures should imply more need for

managing exceptions and a sharply limited span of control, resulting in greater delegation

and deeper hierarchies. Conversely, flatter organizations require superior architectural

knowledge on the part of the designer or superior information structures for the agents.
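The link between span of control and the depth of the hierarchy can be illustrated with a small calculation. This is a sketch under strong simplifying assumptions that are ours, not the paper's: every manager has the same maximum span, and the hierarchy is a pure tree. The function name is hypothetical.

```python
def supervisory_layers(n_agents, span):
    """Managerial layers needed above n_agents workers when no manager
    supervises more than `span` subordinates (uniform span, pure tree)."""
    if n_agents < 1 or span < 2:
        raise ValueError("need at least one agent and a span of at least 2")
    layers = 0
    units = n_agents
    while units > 1:
        units = -(-units // span)  # ceiling division: managers needed at the next layer up
        layers += 1
    return layers


# The same 64 agents need more layers as the span of control narrows.
for span in (8, 4, 2):
    print(span, supervisory_layers(64, span))
```

With 64 agents, a span of 8 needs two layers, a span of 4 needs three, and a span of 2 needs six, which is the sense in which a limited span of control produces deeper hierarchies.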


6.3 Environmental Attributes and Information Processing

The attributes of the organization’s task environment- comprising the organization’s

consumers and users, suppliers, competitors, and regulatory groups- have also been argued to

have information processing implications for the organization (Galbraith, 1973). A fair

number of environmental characteristics relevant to a firm’s decision-making process have

been proposed and analyzed.

Environmental uncertainty, in much of the early information-processing literature

(Burns & Stalker, 1961; Lawrence & Lorsch, 1967; Galbraith, 1973, 1977) is argued to be a

primary driver of task uncertainty and consequently information processing. It is “caused by

high levels of technological and market change requiring the organization to innovate in

order to remain effective and competitive” (Donaldson, 2001: 22). Eisenhardt and Bourgeois

(1988) argue that in high-velocity environments, “changes in demand, competition and

technology are so rapid and discontinuous that information is often inaccurate, unavailable, or

obsolete.” (1988: 818). Equivocality has been defined by Daft & Lengel as a “measure of the

organization’s ignorance of whether a variable exists in the space” (1986: 567), and hostile

environments are characterized by Burton and Obel by “precarious settings, intense

competition, harsh, overwhelming business climates, and the relative lack of exploitable

opportunities.” (1998: 171).

Interestingly, the consequences of these various forms of environmental change can

ultimately be described in epistemic terms. As Burton and Obel clarify (1998: pp. 167-171),

the impact of environmental attributes can be traced through their impact on interdependence

within the organization. Thus, we can represent the impact of environmental attributes in

terms of an underlying epistemic and/or information structure. In other words, changes in the

environment may a) exogenously alter the epistemic structure and/or b) the agents’

information structure leading in either case to a need for more information processing.


An example will highlight this point. Given a task structure with high epistemic

interdependence (such as the one depicted in Figure 4a – with sequential tasks and broad

incentives), reliability will be highest with the information structure depicted in Figure 4b.

However, the effect of environmental uncertainty, velocity, equivocality, and hostility is

effectively a reduction in the agents’ knowledge as it becomes outdated – and hence a

reduction in the structure’s reliability given that I (as depicted in Figure 4c) does not map as

closely onto E anymore. We can thus explain why, when environmental uncertainty

lowers the probabilities that agents know what they need to know, task

architectures which minimize epistemic load will always have higher reliability.

However, changes in environment may also alter the underlying epistemic structure. In

particular, if the change is in the direction of greater epistemic dependence, then the need for

information processing should also increase. Thus if the new epistemic structure is as shown

in Figure 4d (simultaneous tasks with broad incentives), then we should expect to see a

dramatic decrease in reliability as well as the need for substantial information processing.
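Both effects can be illustrated with a short sketch. The matrices and the decay factor below are hypothetical numbers chosen for illustration; they are not the values of Figure 4. Reliability is computed as the product of the knowledge probabilities over all required cells, and the function names are our own.

```python
def reliability(E, I):
    """Joint probability that every item required by E is known, given I."""
    rho = 1.0
    for row_e, row_i in zip(E, I):
        for e, p in zip(row_e, row_i):
            if e > 0:
                rho *= p
    return rho


def decay(I, factor):
    """Scale every knowledge probability by `factor` (knowledge becoming outdated)."""
    return [[p * factor for p in row] for row in I]


E_sequential = [[1, 0], [1, 1]]      # broad incentives, sequential tasks
E_simultaneous = [[1, 1], [1, 1]]    # broad incentives, simultaneous tasks
I_matched = [[1.0, 0.0], [1.0, 1.0]] # agents know exactly what E_sequential requires
I_outdated = decay(I_matched, 0.8)   # hypothetical loss of accuracy

print(reliability(E_sequential, I_matched))              # knowledge matches E
print(round(reliability(E_sequential, I_outdated), 3))   # same E, weaker I
print(reliability(E_simultaneous, I_outdated))           # E shifts, I unchanged
```

In this example reliability falls from 1 to about 0.51 when knowledge becomes outdated, and to 0 when the epistemic structure shifts to require an item that no agent holds.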

---------------------------------

Insert Figure 4 about here

---------------------------------

Thus, the epistemic perspective helps us to think of environmental changes as either

affecting epistemic structure in the direction of greater epistemic dependence (for instance,

by destroying the architectural knowledge of the designer), or weakening the information

structure while preserving the epistemic structure. Each kind of change would result in

greater information processing requirements. It is therefore critical to parcel out these effects

in empirical research- otherwise conclusions can be severely misleading.


7. CONCLUSION

We have introduced an analytical framework that can help to refine our understanding

of the links between interdependence, information processing and organization design.

Specifically, building on the central concept of epistemic dependence, we show how it is

possible to describe differences in the underlying coordination problems associated with

different divisions of labor, and to compare the resulting implications for information

processing. Through a few applications, we hope to have also illustrated that our approach is

highly compatible with prior wisdom- but at the same time offers opportunities for

refinement and novel theorizing. We have touched upon several topics central to the study of

organization design such as coordination by plan vs. feedback, formalization, delegation,

span of control, the depth of the hierarchy, management of exceptions and

environmental uncertainty. However, we recognize that neither this list nor our coverage of

these topics is exhaustive. Rather the goal in this paper has been to demonstrate the promise

of a new approach to tackling these classic problems, through a few initial steps. We hope to

take many more.


FIGURE 1

Papers on Interdependence & Design citing Thompson (1967)

[Chart: percentage of papers (vertical axis) by year, 1967 to 2007 (horizontal axis).]

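FIGURE 2

The Epistemic Structures for Four Basic Task Architectures for a Task Dyad

(Rows are knowledge elements; columns are the agents who need to know them.)

Simultaneous, narrow incentives (π1, π2):

             A1   A2
K{oA1}        1    0
K{oA2}        0    1

Simultaneous, broad incentives (π1,2):

             A1   A2
K{oA1}        1    1
K{oA2}        1    1

Sequential, narrow incentives (π1, π2):

             A1   A2
K{oA1}        1    0
K{oA2}        0    1

Sequential, broad incentives (π1,2):

             A1   A2
K{oA1}        1    0
K{oA2}        1    1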


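FIGURE 3

Comparing Information Processing Costs across Epistemic and Information Structures

(Entries for agents A1 and A2, listed in the order given in the figure.)

Epistemic structures:

E1: 1, 1, 1, 1
E2: 1, 1, 0, 1
E3: 1, 0, 0, 1

Information structures:

I1: 1, 0.9, 0.9, 1
I2: 1, 0.7, 0, 1
I3: 0.5, 0, 0, 0.5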

FIGURE 4

Example of the effects of the Environmental Uncertainty on E and on I

[Panels: Figure 4a, epistemic structure E (incentive structure π1,2; rows K:{oA1}, K:{oA2}; columns A1, A2); Figure 4b, information structure I; Figure 4c, information structure I; Figure 4d, epistemic structure E (π1,2).]


APPENDIX A: SHARED KNOWLEDGE

We present a formal definition of shared knowledge using standard notation from epistemic

(modal) logic. $K_1\varphi$ denotes that Agent 1 knows $\varphi$.

Shared knowledge of first order

$\varphi$ is shared knowledge (of first order- which is implied if not otherwise specified) among the j

agents of a group G if

$K_1\varphi \wedge K_2\varphi \wedge K_3\varphi \wedge \dots \wedge K_j\varphi$

This is written as $E_G^{1}\varphi$ and read “Everyone in group G knows $\varphi$”.

Shared knowledge of any order

By abbreviating $E_G E_G^{n-1}\varphi = E_G^{n}\varphi$, and defining $E_G^{0}\varphi = \varphi$, we can define shared knowledge of

$\varphi$ to any order n with the axiom:

$\bigwedge_{i=1}^{n} E_G^{i}\varphi$

Common knowledge is shared knowledge of order $n = \infty$:

$C\varphi = \bigwedge_{i=1}^{n} E_G^{i}\varphi$ where $n = \infty$.

APPENDIX B: FROM TASK DYADS TO COMPLEX ORGANIZATIONS

Here we show how epistemic dependence can be represented for task architectures

beyond the simple dyad. More complex structures are built through scaling (e.g. from dyad to

n-task structures), delegation (managers at each level design task architectures for their subordinates) and recursion (e.g. a dyad in which each task is itself a dyad of tasks).

1. Simple scaling from task dyads to n-task structures

The general n-task versions of the four basic epistemic structures in any task dyad are shown

in Figure B1. Recall that a “1” in a cell indicates that the column actor needs to know the row

knowledge element for total utility to be maximized. When an agent i must act on the basis of

predictions about the acts of any other agent j, there is epistemic dependence between agents.

These cases appear as non-zero off-diagonal entries.
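The n-task structures can be generated programmatically. The sketch below assumes the layout described above (rows are knowledge elements, columns are actors) and that, in the sequential case with broad incentives, each actor needs to know about itself and about every actor that moves after it. The function names are hypothetical.

```python
def narrow(n):
    """Narrow incentives (simultaneous or sequential): identity matrix."""
    return [[1 if row == col else 0 for col in range(n)] for row in range(n)]


def broad_simultaneous(n):
    """Broad incentives, simultaneous actions: every actor needs every item."""
    return [[1] * n for _ in range(n)]


def broad_sequential(n):
    """Broad incentives, sequential actions: actor j needs items j, j+1, ..., n."""
    return [[1 if col <= row else 0 for col in range(n)] for row in range(n)]


def epistemic_load(E):
    return sum(1 for row in E for e in row if e > 0)


def epistemic_dependence(E):
    return sum(e for i, row in enumerate(E) for j, e in enumerate(row) if i != j)


n = 4
for build in (narrow, broad_sequential, broad_simultaneous):
    E = build(n)
    print(build.__name__, epistemic_load(E), epistemic_dependence(E))
```

Under these assumptions epistemic load grows as n, n(n+1)/2 and n² respectively, so the gap between architectures widens quickly with the number of tasks.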

2. Building nested, hierarchical n-task structures

The epistemic structures shown in Figure B1 are quite simple in that they describe a flat

structure with a designer and a single layer of agents below him – there are no organizational

subdivisions so that all agents are housed in a single department. The next step is to consider

ways in which multiple hierarchical levels and horizontal subdivisions can be captured in our

formalism. It turns out that we can proceed to build up such complex structures in two very

different ways, either by delegation or centralization of organization design. Note that in


either case, the task of managing exceptions as they occur may still be delegated to subordinates.

FIGURE B1

n-Task Versions of the Basic Epistemic Structures

(Rows are knowledge elements; columns are the agents who need to know them.)

Simultaneous, narrow incentives (π1, π2):

             A1   A2   ...   An
K{oA1}        1    0   ...    0
K{oA2}        0    1   ...    0
...          ...  ...  ...   ...
K{oAn}        0    0   ...    1

Sequential, narrow incentives (π1, π2):

             A1   A2   ...   An
K{oA1}        1    0   ...    0
K{oA2}        0    1   ...    0
...          ...  ...  ...   ...
K{oAn}        0    0   ...    1

Simultaneous, broad incentives (π1,2):

             A1   A2   ...   An
K{oA1}        1    1   ...    1
K{oA2}        1    1   ...    1
...          ...  ...  ...   ...
K{oAn}        1    1   ...    1

Sequential, broad incentives (π1,2):

             A1   A2   ...   An
K{oA1}        1    0   ...    0
K{oA2}        1    1   ...    0
...          ...  ...  ...   ...
K{oAn}        1    1   ...    1

2.1 Delegation of design.

In this approach, we assume “design is always one level deep”. The agents below the

designer could themselves be middle managers who design for their subordinates and so on,

giving rise to a hierarchy. Assume there are m middle managers, each responsible for a

subgroup including one or more subordinates. Each middle manager is responsible for

designing the task architecture for her own group, in addition to managing exceptions that

arise because of inadequate design for her own subunit. This has several

implications:

a) Only the responsible middle manager has detailed architectural knowledge and

awareness of the epistemic conditions within her own unit.

b) There is implicitly a design cycle and a production cycle. For instance, in a three-level

organization, the organizational designer (typically the CEO) designs the incentive

structure for middle managers. The middle manager’s action is based on these

contracts. The middle manager in turn designs the incentive structure for the

subordinates. The subordinates then complete their production cycle after which the

middle managers complete theirs. Thus, we assume alternating cycles of design and

production that have to move in serial from the bottom up, one layer in each time

interval.

c) Epistemic dependence occurs only within sub-groups, not between members in

different sub-groups. The sub-groups are essentially modular units with high

epistemic dependence within but not between them (Simon, 1962). Every sub-unit

must have a middle manager, who aggregates the output of his subordinates, and these

managers may indeed be epistemically interdependent, but in general, individuals in

one group are not interdependent with individuals in other groups.

We show a simple example in Figure B2. In the structure depicted in Figure B2, the designer

D delegates the design task for the lowest level of the hierarchy to middle managers M1 and

M2. Thus, the designer needs only the architectural knowledge necessary to define the

incentives for M1 and M2, and the middle managers, in turn, need to possess architectural

knowledge relevant to the subordinates they directly oversee; in addition, given that M1

moves sequentially before M2, she also needs to know M2’s output function. A possible

resulting epistemic structure is shown in Figure B3.

FIGURE B2

A Simple Hierarchy with one Layer of Middle Managers

FIGURE B3

The Corresponding Epistemic Structure E to A in Figure B2

           M1   M2   S1   S2   S3   S4   S5   S6   S7   S8
K{oD}      0    0    0    0    0    0    0    0    0    0
K{oM1}     1    0    0    0    0    0    0    0    0    0
K{oM2}     1    1    0    0    0    0    0    0    0    0
K{oS1}     1    0    1    1    1    1    0    0    0    0
K{oS2}     1    0    1    1    1    1    0    0    0    0
K{oS3}     1    0    1    1    1    1    0    0    0    0
K{oS4}     1    0    1    1    1    1    0    0    0    0
K{oS5}     0    1    0    0    0    0    1    0    0    0
K{oS6}     0    1    0    0    0    0    0    1    0    0
K{oS7}     0    1    0    0    0    0    0    0    1    1
K{oS8}     0    1    0    0    0    0    0    0    1    1
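Implication (c) of the delegation approach can be checked mechanically against the entries of Figure B3. The sketch below is illustrative only. Each list holds one actor's entries in the order K{oD}, K{oM1}, K{oM2}, K{oS1}, ..., K{oS8}. The assignment of S1 to S4 to M1 and of S5 to S8 to M2 is an assumption inferred from the block pattern of the figure, and all names (`FIGURE_B3`, `MANAGER_OF`, `knows`, `cross_group_dependencies`) are hypothetical.

```python
# Order of the knowledge elements K{oD}, K{oM1}, K{oM2}, K{oS1}, ..., K{oS8}.
AGENTS = ["D", "M1", "M2"] + [f"S{k}" for k in range(1, 9)]

# One list per actor: a 1 means the actor needs that knowledge element.
FIGURE_B3 = {
    "M1": [0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0],
    "M2": [0, 0, 1, 0, 0, 0, 0, 1, 1, 1, 1],
    "S1": [0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0],
    "S2": [0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0],
    "S3": [0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0],
    "S4": [0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0],
    "S5": [0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0],
    "S6": [0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0],
    "S7": [0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1],
    "S8": [0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1],
}

# Assumed grouping: S1..S4 report to M1, S5..S8 report to M2.
MANAGER_OF = {f"S{k}": ("M1" if k <= 4 else "M2") for k in range(1, 9)}


def knows(actor):
    """Names of the agents whose output knowledge the actor needs."""
    return [AGENTS[j] for j, v in enumerate(FIGURE_B3[actor]) if v]


def cross_group_dependencies():
    """Subordinate pairs (actor, target) that sit in different sub-groups."""
    return [
        (actor, target)
        for actor in MANAGER_OF
        for target in knows(actor)
        if target in MANAGER_OF and MANAGER_OF[target] != MANAGER_OF[actor]
    ]


print(knows("M1"))                  # ['M1', 'M2', 'S1', 'S2', 'S3', 'S4']
print(cross_group_dependencies())   # []
```

The empty result is consistent with the claim that subordinates depend epistemically only on members of their own sub-group, while M1's list shows the one cross-unit requirement at the manager level (knowledge of M2's output).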

2.2 Centralization of design.

Centralization of design is the natural contrast to delegation of design. This approach assumes

that a central designer (e.g. CEO) designs the entire organization so that each subunit is self-managed. In this case, middle managers exist primarily to manage exceptions that arise within

their sub-units. The only reason for layers of organization to exist in this case is task

sequencing. There are layers in the organization only to the extent that certain tasks must

occur before others; there is therefore a “hierarchy of timing”.

The formal characterization of the central design model is somewhat more involved than the

delegation model because interdependencies across units influence every subordinate. Our

aim is to formally specify the epistemic structure required to achieve coordination in any

activity system comprising m groups of n members each. Epistemic structures are represented

as before, and we use the general n-member versions of the basic epistemic structures that are

shown in Figure B1 above.

Building blocks

It is useful to express the general n-member versions of the basic epistemic structures in

matrix form. The three distinct epistemic structures shown in Figure B1 are shown in matrix

form below, i.e. matrices I, L and J. The 0 and H matrices are helpers that allow us to use

recursive expansion and thereby model organizations that vary in horizontal span and number

of hierarchical layers.

1 0 L 0

0 1L 0

In =

M M O M

0 0 L 1

1 0 L 0

1 1L 0

Ln =

M M O M

1 1L 1

0 0 L 0

0 0 L 0

0n =

M MO M

0 0 L 0

1 1 L 1

1 1L 1

Jn =

M M O M

1 1L 1

0 1L 1

1 0 L 1

Hn =

M M O M

1 1L 0
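For concreteness, the five building blocks can be generated for any n as follows. This is a minimal sketch; the function names (`identity`, `lower_ones`, `zeros`, `ones`, `hollow`) are hypothetical labels for I, L, 0, J and H, and matrices are assumed to be plain lists of lists.

```python
def identity(n):
    """I_n: ones on the diagonal, zeros elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def lower_ones(n):
    """L_n: ones on and below the diagonal, zeros above."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def zeros(n):
    """0_n: all entries zero."""
    return [[0] * n for _ in range(n)]


def ones(n):
    """J_n: all entries one."""
    return [[1] * n for _ in range(n)]


def hollow(n):
    """H_n: ones everywhere except zeros on the diagonal."""
    return [[0 if i == j else 1 for j in range(n)] for i in range(n)]


for name, build in [("I", identity), ("L", lower_ones), ("0", zeros),
                    ("J", ones), ("H", hollow)]:
    print(name, build(3))
```

For n = 3, `lower_ones(3)` gives `[[1, 0, 0], [1, 1, 0], [1, 1, 1]]` and `hollow(3)` gives `[[0, 1, 1], [1, 0, 1], [1, 1, 0]]`.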

The general case defines an epistemic structure E composed of block matrices that each

represent a distinct epistemic category. Coordination between m groups is regulated by

matrices Bm, where Bm stands for any m × m matrix that can be made from the building blocks

presented above. Coordination within subunits with n members is regulated by matrices

Wn, where Wn stands for any n × n matrix, including those that can be made from the

building blocks presented above. Coordination between and within groups with arbitrary structures

represented by Bm and Wn requires the following epistemic condition:

$$E = ([B_m][H_m]) \otimes J_n + I_m \otimes W_n$$

This formalism extracts the overall epistemic matrix E for m groups that each employ n

agents. The method used here is matrix expansion by tensor product. The advantage of this

method is that there are virtually no restrictions on the specification of the two required input

matrices:

1. A matrix Bm defining the epistemic structure among m groups.

2. A matrix Wn defining the epistemic structure among the n agents that are employed in

each of the m groups.

The number of agents n can vary across groups and there is no restriction on the size of m and

n. In addition to the two required inputs, there are three “helpers”, the matrices Im (m × m

identity matrix), Hm (m × m unit matrix with zeros on the diagonal), and Jn (n × n unit

matrix). The helper matrices allow us to decompose the formal representation into two

meaningful additive components. Each component is a matrix expansion by tensor product.

The first tensor product represents coordination between groups and the second represents

coordination within. As previously mentioned, square brackets denote element-by-element

multiplication. This operation eliminates self-referential interdependencies in Bm (between-group interdependence).

A simple example illustrates the formalism. Suppose there are two subunits and the

epistemic structure between the two units, B2, is defined in terms of the basic task

architecture I2 (narrow incentives). Further suppose that there are three members in each

group and that the epistemic structure within the unit, W3, is defined in terms of the basic

task architecture J3 (simultaneous actions and broad incentives). We then get:

$$E = ([B_2][H_2]) \otimes J_3 + I_2 \otimes W_3 = ([I_2][H_2]) \otimes J_3 + I_2 \otimes J_3$$

The resulting epistemic structure is shown in Figure B4.
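The intermediate steps of this example can be written out explicitly. The derivation below uses only the definitions given above, with square brackets denoting element-by-element multiplication; writing 0_3 for the 3 × 3 zero block is a notational assumption.

```latex
[I_2][H_2]
  = \begin{bmatrix} 1 \cdot 0 & 0 \cdot 1 \\ 0 \cdot 1 & 1 \cdot 0 \end{bmatrix}
  = 0_2 ,
\qquad
E = 0_2 \otimes J_3 + I_2 \otimes J_3
  = \begin{bmatrix} J_3 & 0_3 \\ 0_3 & J_3 \end{bmatrix}
```

The between-group term vanishes because I_2 has entries only on the diagonal, which H_2 removes, so E is block diagonal with one J_3 block per group.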

FIGURE B4

Example of a Nested Task Structure: Two groups with three agents each

           A1   A2   A3   A4   A5   A6
K{oA1}     1    1    1    0    0    0
K{oA2}     1    1    1    0    0    0
K{oA3}     1    1    1    0    0    0
K{oA4}     0    0    0    1    1    1
K{oA5}     0    0    0    1    1    1
K{oA6}     0    0    0    1    1    1
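The expansion E = ([Bm][Hm]) ⊗ Jn + Im ⊗ Wn can also be computed directly. The sketch below is one possible implementation under stated assumptions: matrices are plain lists of lists, the tensor product is taken to be the usual Kronecker product, and every function name (`kron`, `hadamard`, `epistemic_structure`, and the small constructors) is hypothetical.

```python
def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def ones(n):
    return [[1] * n for _ in range(n)]


def hollow(n):
    """Ones everywhere except zeros on the diagonal (the helper H)."""
    return [[0 if i == j else 1 for j in range(n)] for i in range(n)]


def hadamard(A, B):
    """Element-by-element product, written [A][B] in the text."""
    return [[a * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def kron(A, B):
    """Kronecker (tensor) product: each entry of A is replaced by entry * B."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def epistemic_structure(B, W):
    """E = ([B][H_m]) (x) J_n + I_m (x) W for m groups of n agents each."""
    m, n = len(B), len(W)
    between = kron(hadamard(B, hollow(m)), ones(n))  # between-group part
    within = kron(identity(m), W)                    # within-group part
    return add(between, within)


# The example from the text: B2 = I2 between groups, W3 = J3 within groups.
E = epistemic_structure(identity(2), ones(3))
for row in E:
    print(row)
```

The six printed rows are `[1, 1, 1, 0, 0, 0]` three times followed by `[0, 0, 0, 1, 1, 1]` three times, which matches the entries of Figure B4.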

Recursive expansion

Multilevel systems can be generated by recursively expanding Wn while defining Bm at each

recursive pass. This scheme is quite flexible because the number of groups, m, can be varied

for each pass in the recursion (the number of agents, n, is defined from the expanding matrix

Wn). A new epistemic structure Wn can even be applied in each recursive pass. Our recursive

algorithm allows us to generate any epistemic structure E, given an initial definition of m, n,

Bm and Wn as well as proper definitions of m and Bm for each subsequent pass in the

recursion.
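One possible reading of this recursive scheme is sketched below: the matrix produced by one pass becomes the within-group matrix W of the next pass, and a fresh between-group matrix B is supplied for each pass. This is an assumption about how the recursion is meant to be applied, not the authors' algorithm, and the names `expand_once` and `expand_recursively` are hypothetical.

```python
def kron(A, B):
    """Kronecker (tensor) product of two matrices stored as lists of lists."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def expand_once(B, W):
    """One pass of E = ([B][H_m]) (x) J_n + I_m (x) W."""
    m, n = len(B), len(W)
    # [B][H_m]: keep B's off-diagonal entries, zero out its diagonal.
    masked = [[B[i][j] if i != j else 0 for j in range(m)] for i in range(m)]
    between = kron(masked, [[1] * n for _ in range(n)])
    eye = [[int(i == j) for j in range(m)] for i in range(m)]
    within = kron(eye, W)
    return [[x + y for x, y in zip(rb, rw)] for rb, rw in zip(between, within)]


def expand_recursively(W, between_per_pass):
    """Apply expand_once repeatedly, feeding each result back in as W."""
    E = W
    for B in between_per_pass:
        E = expand_once(B, E)
    return E


# Illustrative input (not from the paper): teams of two with W = J2,
# two isolated teams per department (B = I2), and two fully
# interdependent departments (B = J2).
J2 = [[1, 1], [1, 1]]
I2 = [[1, 0], [0, 1]]
E = expand_recursively(J2, [I2, J2])
print(len(E))   # 8
print(E[0])     # [1, 1, 0, 0, 1, 1, 1, 1]
```

In this illustration the number of groups is two in both passes, but each pass may use a between-group matrix of a different size, which is what allows the horizontal span to vary by level.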

REFERENCES

Adler, P. S. 1995. Interdepartmental Interdependence and Coordination: The Case of the

Design/Manufacturing Interface. Organization Science, 6(2): 147 - 167.

Baldwin, C. Y., & Clark, K. B. 2000. Design Rules. Cambridge, MA: The MIT Press.

Burns, T., & Stalker, G. M. 1961. The Management of Innovation. London: Tavistock.

Burton, R. M., & Obel, B. 1998. with contributions by S.D. Hunter III., M. Sondergaard, and

D. Dojbak. Strategic Organizational Diagnosis and Design: Developing Theory for

Application (2nd ed.). Boston/Dordrecht/London: Kluwer Academic Publishers.

Burton, R. M., & Obel, B. 1984. Designing Efficient Organizations: Modelling and

Experimentation: North-Holland.

Camerer, C. 2003. Behavioural Game Theory: Experiments in Strategic Interaction. Press: