On the common origins of psychology & statistics

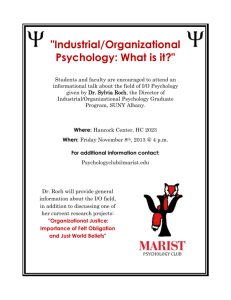
