Stat 110 notes - {m x[a}w n g]

advertisement

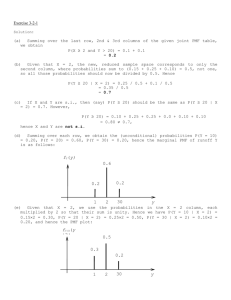

![Stat 110 notes - {m x[a}w n g]](http://s3.studylib.net/store/data/008120387_1-3b4895d2f473dfe516b6ab16119e31f2-768x994.png)

Statistics 110—Intro to Probability

Lectures by Joe Blitzstein

Notes by Max Wang

Harvard University, Fall 2011

Lecture 2: 9/2/11
Lecture 3: 9/7/11
Lecture 4: 9/9/11
Lecture 5: 9/12/11
Lecture 6: 9/14/11
Lecture 7: 9/16/11
Lecture 8: 9/19/11
Lecture 9: 9/21/11
Lecture 10: 9/23/11
Lecture 11: 9/26/11
Lecture 12: 9/28/11
Lecture 13: 9/30/11
Lecture 14: 10/3/11
Lecture 15: 10/5/11
Lecture 17: 10/14/11
Lecture 18: 10/17/11
Lecture 19: 10/19/11
Lecture 20: 10/21/11
Lecture 21: 10/24/11
Lecture 22: 10/26/11
Lecture 23: 10/28/11
Lecture 24: 10/31/11
Lecture 25: 11/2/11
Lecture 26: 11/4/11
Lecture 27: 11/7/11
Lecture 28: 11/9/11
Lecture 29: 11/14/11
Lecture 30: 11/16/11
Lecture 31: 11/18/11
Lecture 32: 11/21/11
Lecture 33: 11/28/11

Introduction

Statistics 110 is an introductory statistics course offered at Harvard University. It covers all the basics of probability—

counting principles, probabilistic events, random variables, distributions, conditional probability, expectation, and

Bayesian inference. The last few lectures of the course are spent on Markov chains.

These notes were partially live-TeXed (the rest were TeXed from course videos), then edited for correctness and clarity. I am responsible for all errata in this document, mathematical or otherwise; any merits of the material here should be credited to the lecturer, not to me.

Feel free to email me at mxawng@gmail.com with any comments.

Acknowledgments

In addition to the course staff, acknowledgment goes to Zev Chonoles, whose online lecture notes (http://math.uchicago.edu/~chonoles/expository-notes/) inspired me to post my own. I have also borrowed his format for this introduction page.

The page layout for these notes is based on the layout I used back when I took notes by hand. The LaTeX styles can be found here: https://github.com/mxw/latex-custom.

Copyright

Copyright © 2011 Max Wang.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

This means you are free to edit, adapt, transform, or redistribute this work as long as you

• include an attribution of Joe Blitzstein as the instructor of the course these notes are based on, and an

attribution of Max Wang as the note-taker;

• do so in a way that does not suggest that either of us endorses you or your use of this work;

• use this work for noncommercial purposes only; and

• if you adapt or build upon this work, apply this same license to your contributions.

See http://creativecommons.org/licenses/by-nc-sa/4.0/ for the license details.

Stat 110—Intro to Probability

Max Wang

Lecture 2 — 9/2/11

Definition 2.1. A sample space $S$ is the set of all possible outcomes of an experiment.

Definition 2.2. An event $A \subseteq S$ is a subset of a sample space.

Definition 2.3. Assuming that all outcomes are equally likely and that the sample space is finite,

\[ P(A) = \frac{\#\text{ favorable outcomes}}{\#\text{ possible outcomes}} \]

is the probability that $A$ occurs.

Proposition 2.4 (Multiplication Rule). If there are $r$ experiments and each experiment has $n_i$ possible outcomes, then the overall sample space has size

\[ n_1 n_2 \cdots n_r \]

Example. The probability of a full house in a five card poker hand (without replacement, and without other players) is

\[ P(\text{full house}) = \frac{13 \binom{4}{3} \cdot 12 \binom{4}{2}}{\binom{52}{5}} \]

Definition 2.5. The binomial coefficient is given by

\[ \binom{n}{k} = \frac{n!}{(n-k)!\,k!} \]

or 0 if $k > n$.

Theorem 2.6 (Sampling Table). The number of subsets of size $k$ chosen from a set of $n$ distinct elements is given by the following table:

                 ordered               unordered
replacement      $n^k$                 $\binom{n+k-1}{k}$
no replacement   $\frac{n!}{(n-k)!}$   $\binom{n}{k}$

Lecture 3 — 9/7/11

Proposition 3.1. The number of ways to choose $k$ elements from a set of order $n$, with replacement and where order doesn’t matter, is

\[ \binom{n+k-1}{k} \]

Proof. This count is equivalent to the number of ways to put $k$ indistinguishable particles in $n$ distinguishable boxes. Suppose we order the particles; then this count is simply the number of ways to place “dividers” between the particles, e.g.,

• • •| • | • •|| • |•

There are

\[ \binom{n+k-1}{k} = \binom{n+k-1}{n-1} \]

ways to place the particles, which determines the placement of the dividers (or vice versa); this is our result.

Example.

1. $\binom{n}{k} = \binom{n}{n-k}$

2. $n \binom{n-1}{k-1} = k \binom{n}{k}$

Pick $k$ people out of $n$, then designate one as special. The RHS represents how many ways we can do this by first picking the $k$ individuals and then making our designation. On the LHS, we see the number of ways to pick a special individual and then pick the remaining $k - 1$ individuals from the remaining pool of $n - 1$.

3. (Vandermonde) $\binom{n+m}{k} = \sum_{i=0}^{k} \binom{n}{i} \binom{m}{k-i}$

On the LHS, we choose $k$ people out of $n + m$. On the RHS, we sum up, for every $i$, how to choose $i$ from the $n$ people and $k - i$ from the $m$ people.

Definition 3.2. A probability space consists of a sample space $S$ along with a function $P : \mathcal{P}(S) \to [0, 1]$ taking events to real numbers, where

1. $P(\emptyset) = 0$, $P(S) = 1$

2. $P\left(\bigcup_{n=1}^{\infty} A_n\right) = \sum_{n=1}^{\infty} P(A_n)$ if the $A_n$ are disjoint

Lecture 4 — 9/9/11

Example (Birthday Problem). The probability that at least two people among a group of $k$ share the same birthday, assuming that birthdays are evenly distributed across the 365 standard days, is given by

\[ P(\text{match}) = 1 - P(\text{no match}) = 1 - \frac{365 \cdot 364 \cdots (365 - k + 1)}{365^k} \]

Proposition 4.1.

1. $P(A^C) = 1 - P(A)$.

2. If $A \subseteq B$, then $P(A) \le P(B)$.


3. P (A ∪ B) = P (A) + P (B) − P (A ∩ B).

Proof. All immediate.
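As a numerical check of the birthday problem from this lecture, the following is a minimal Python sketch that evaluates 1 − 365 · 364 · · · (365 − k + 1)/365^k directly. The function name `p_birthday_match` and the `days` parameter are illustrative choices, not part of the lecture.

```python
from math import prod


def p_birthday_match(k: int, days: int = 365) -> float:
    """P(at least two of k people share a birthday), assuming uniform birthdays."""
    if k > days:
        return 1.0  # pigeonhole: with more people than days, a match is certain
    # P(no match) = (days/days) * ((days-1)/days) * ... * ((days-k+1)/days)
    p_no_match = prod((days - i) / days for i in range(k))
    return 1.0 - p_no_match


if __name__ == "__main__":
    for k in (10, 23, 50):
        print(k, p_birthday_match(k))
```

Computing the complement first mirrors the derivation: "no match" is a single product, whereas "at least one match" would require inclusion-exclusion.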

Corollary 4.2 (Inclusion-Exclusion). Generalizing 3 above,

\[ P\left(\bigcup_{i=1}^{n} A_i\right) = \sum_{i=1}^{n} P(A_i) - \sum_{i<j} P(A_i \cap A_j) + \sum_{i<j<k} P(A_i \cap A_j \cap A_k) - \cdots + (-1)^{n+1} P\left(\bigcap_{i=1}^{n} A_i\right) \]

Example (deMontmort’s Problem). Suppose we have $n$ cards labeled $1, \ldots, n$. We want to determine the probability that for some card in a shuffled deck of such cards, the $i$th card has value $i$. Let $A_i$ be the event that the $i$th card has value $i$. Since the number of orderings of the deck for which a given set of matches occurs is simply the permutations on the remaining cards, we have

\[ P(A_i) = \frac{(n-1)!}{n!} = \frac{1}{n} \]

\[ P(A_1 \cap A_2) = \frac{(n-2)!}{n!} = \frac{1}{n(n-1)} \]

\[ P(A_1 \cap \cdots \cap A_k) = \frac{(n-k)!}{n!} \]

So using the above corollary,

\[ P(A_1 \cup \cdots \cup A_n) = n \cdot \frac{1}{n} - \frac{n(n-1)}{2!} \cdot \frac{1}{n(n-1)} + \frac{n(n-1)(n-2)}{3!} \cdot \frac{1}{n(n-1)(n-2)} - \cdots \]

\[ = 1 - \frac{1}{2!} + \frac{1}{3!} - \cdots + (-1)^{n+1} \frac{1}{n!} \approx 1 - \frac{1}{e} \]

Lecture 5 — 9/12/11

Note. Translation from English to inclusion-exclusion:

• Probability that at least one of the $A_i$ occurs: $P(A_1 \cup \cdots \cup A_n)$

• Probability that none of the $A_i$ occurs: $1 - P(A_1 \cup \cdots \cup A_n)$

• Probability that all of the $A_i$ occur: $P(A_1 \cap \cdots \cap A_n) = 1 - P(A_1^C \cup \cdots \cup A_n^C)$

Definition 5.1. Two events $A$ and $B$ are independent if

\[ P(A \cap B) = P(A) P(B) \]

In general, for $n$ events $A_1, \ldots, A_n$, independence requires $i$-wise independence for every $i = 2, \ldots, n$; that is, say, pairwise independence alone does not imply independence.

Note. We will write $P(A \cap B)$ as $P(A, B)$.

Example (Newton-Pepys Problem). Suppose we have some fair dice; we want to determine which of the following is most likely to occur:

1. At least one 6 with 6 dice.

2. At least two 6’s with 12 dice.

3. At least three 6’s with 18 dice.

For the first case, we have

\[ P(A) = 1 - \left(\frac{5}{6}\right)^{6} \]

For the second,

\[ P(B) = 1 - \left(\frac{5}{6}\right)^{12} - 12 \left(\frac{1}{6}\right) \left(\frac{5}{6}\right)^{11} \]

and for the third,

\[ P(C) = 1 - \sum_{k=0}^{2} \binom{18}{k} \left(\frac{1}{6}\right)^{k} \left(\frac{5}{6}\right)^{18-k} \]

(The summand on the RHS is called a binomial probability.)

Thus far, all the probabilities with which we have concerned ourselves have been unconditional. We now turn to conditional probability, which concerns how to update our beliefs (and computed probabilities) based on new evidence.

Definition 5.2. The probability of an event $A$ given $B$ is

\[ P(A \mid B) = \frac{P(A \cap B)}{P(B)} \]

if $P(B) > 0$.

Corollary 5.3.

\[ P(A \cap B) = P(B) P(A \mid B) = P(A) P(B \mid A) \]

or, more generally,

\[ P(A_1 \cap \cdots \cap A_n) = P(A_1) \cdot P(A_2 \mid A_1) \cdot P(A_3 \mid A_1, A_2) \cdots P(A_n \mid A_1, \ldots, A_{n-1}) \]

Theorem 5.4 (Bayes’ Theorem).

\[ P(A \mid B) = \frac{P(B \mid A) P(A)}{P(B)} \]
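The three Newton-Pepys probabilities from the example earlier in this lecture can be compared numerically. The sketch below is a hedged illustration: the helper name `p_at_least` is hypothetical, and it simply subtracts the binomial probabilities of "too few sixes" from 1, as in the formulas for P(A), P(B), and P(C).

```python
from math import comb


def p_at_least(successes: int, dice: int, p: float = 1 / 6) -> float:
    """P(at least `successes` sixes among `dice` fair dice), via the complement."""
    # Sum the binomial probabilities of getting 0, 1, ..., successes - 1 sixes.
    p_fewer = sum(
        comb(dice, k) * p**k * (1 - p) ** (dice - k) for k in range(successes)
    )
    return 1.0 - p_fewer


if __name__ == "__main__":
    for m in (1, 2, 3):
        print(f"at least {m} six(es) with {6 * m} dice:", p_at_least(m, 6 * m))
```

Running it shows that the first case (at least one 6 with 6 dice) is the most likely of the three.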


Lecture 6 — 9/14/11

Theorem 6.1 (Law of Total Probability). Let $S$ be a sample space and $A_1, \ldots, A_n$ a partition of $S$. Then

\[ P(B) = P(B \cap A_1) + \cdots + P(B \cap A_n) = P(B \mid A_1) P(A_1) + \cdots + P(B \mid A_n) P(A_n) \]

Example. Suppose we are given a random two-card hand from a standard deck.

1. What is the probability that both cards are aces given that we have an ace?

\[ P(\text{both aces} \mid \text{have ace}) = \frac{P(\text{both aces, have ace})}{P(\text{have ace})} = \frac{\binom{4}{2} / \binom{52}{2}}{1 - \binom{48}{2} / \binom{52}{2}} = \frac{1}{33} \]

2. What is the probability that both cards are aces given that we have the ace of spades?

\[ P(\text{both aces} \mid \text{ace of spades}) = \frac{3}{51} = \frac{1}{17} \]

Example. Suppose that a patient is being tested for a disease and it is known that 1% of similar patients have the disease. Suppose also that the patient tests positive and that the test is 95% accurate. Let $D$ be the event that the patient has the disease and $T$ the event that he tests positive. Then we know $P(T \mid D) = 0.95 = P(T^C \mid D^C)$. Using Bayes’ theorem and the Law of Total Probability, we can compute

\[ P(D \mid T) = \frac{P(T \mid D) P(D)}{P(T)} = \frac{P(T \mid D) P(D)}{P(T \mid D) P(D) + P(T \mid D^C) P(D^C)} \approx 0.16 \]

Definition 6.2. Two events $A$ and $B$ are conditionally independent given an event $C$ if

\[ P(A \cap B \mid C) = P(A \mid C) P(B \mid C) \]

Example. Two conditionally independent events are not necessarily unconditionally independent. For instance, suppose we have a chess opponent of unknown strength. We might say that conditional on the opponent’s strength, all game outcomes would be independent. However, without knowing the opponent’s strength, earlier games would give us useful information about the opponent’s strength; hence, without the conditioning, the game outcomes are not independent.

Example. Two independent events are not necessarily conditionally independent. Suppose we know that a fire alarm goes off (event $A$). Suppose there are only two possible causes, that a fire happened, $F$, or that someone was making popcorn, $C$, and suppose moreover that these events are independent. Given, however, that the alarm went off, we have

\[ P(F \mid A \cap C^C) = 1 \]

and hence we do not have conditional independence.

Lecture 7 — 9/16/11

Example (Monty Hall Problem). Suppose there are three doors, behind two of which are goats and behind one of which is a car. Monty Hall, the game show host, knows the contents of each door, but we, the player, do not, and have one chance to choose the car. After we choose a door, Monty opens one of the two remaining doors to reveal a goat (if both remaining doors have goats, he chooses with equal probability). We are then given the option to change our choice. Should we do so?

In fact, we should; the chance that switching will give us the car is the same as the chance that we did not originally pick the car, which is $\frac{2}{3}$. However, we can also solve the problem by conditioning. Suppose we have chosen a door (WLOG, say the first). Let $S$ be the event of finding the car by switching, and let $D_i$ be the event that the car is in door $i$. Then by the Law of Total Probability,

\[ P(S) = P(S \mid D_1) \frac{1}{3} + P(S \mid D_2) \frac{1}{3} + P(S \mid D_3) \frac{1}{3} = 0 + 1 \cdot \frac{1}{3} + 1 \cdot \frac{1}{3} = \frac{2}{3} \]

By symmetry, the probability that we succeed conditioned on the door Monty opens is the same.

Example (Simpson’s Paradox). Suppose we have the two following tables:

Hibbert     heart   band-aid
success     70      10
failure     20      0

Nick        heart   band-aid
success     2       81
failure     8       9


for the success of two doctors for two different operations. Note that although Hibbert has a higher success rate conditional on each operation, Nick’s success rate is higher overall. Let us denote $A$ to be the event of a successful operation, $B$ the event of being treated by Nick, and $C$ the event of having heart surgery. In other words, then, we have

\[ P(A \mid B, C) < P(A \mid B^C, C) \]

and

\[ P(A \mid B, C^C) < P(A \mid B^C, C^C) \]

but

\[ P(A \mid B) > P(A \mid B^C) \]

In this example, $C$ is the confounder.

Lecture 8 — 9/19/11

Definition 8.1. A one-dimensional random walk models a (possibly infinite) sequence of successive steps along the number line, where, starting from some position $i$, we have a probability $p$ of moving $+1$ and a probability $q = 1 - p$ of moving $-1$.

Example. An example of a one-dimensional random walk is the gambler’s ruin problem, which asks: Given two individuals A and B playing a sequence of successive rounds of a game in which they bet \$1, with A winning B’s dollar with probability $p$ and A losing a dollar to B with probability $q = 1 - p$, what is the probability that A wins the game (supposing A has $i$ dollars and B has $n - i$ dollars)? This problem can be modeled by a random walk with absorbing states at 0 and $n$, starting at $i$.

To solve this problem, we perform first-step analysis; that is, we condition on the first step. Let $p_i = P(\text{A wins game} \mid \text{A starts at } i)$. Then by the Law of Total Probability, for $1 \le i \le n - 1$,

\[ p_i = p\,p_{i+1} + q\,p_{i-1} \]

and of course we have $p_0 = 0$ and $p_n = 1$. This equation is a difference equation.

To solve this equation, we start by guessing

\[ p_i = x^i \]

Then we have

\[ x^i = p x^{i+1} + q x^{i-1} \]

\[ p x^2 - x + q = 0 \]

\[ x = \frac{1 \pm \sqrt{1 - 4pq}}{2p} = \frac{1 \pm \sqrt{1 - 4p(1 - p)}}{2p} = \frac{1 \pm \sqrt{4p^2 - 4p + 1}}{2p} = \frac{1 \mp (2p - 1)}{2p} = 1, \frac{q}{p} \]

As with differential equations, this gives a general solution of the form

\[ p_i = A \cdot 1^i + B \left(\frac{q}{p}\right)^i \]

for $p \ne q$ (to avoid a repeated root). Our boundary conditions for $p_0$ and $p_n$ give

\[ B = -A \]

and

\[ 1 = A \left(1 - \left(\frac{q}{p}\right)^n\right) \]

To solve for the case where $p = q$, we can guess $x = \frac{q}{p}$ and take

\[ \lim_{x \to 1} \frac{1 - x^i}{1 - x^n} = \lim_{x \to 1} \frac{i x^{i-1}}{n x^{n-1}} = \frac{i}{n} \]

So we have

\[ p_i = \begin{cases} \dfrac{1 - (q/p)^i}{1 - (q/p)^n} & p \ne q \\ \dfrac{i}{n} & p = q \end{cases} \]

Now suppose that $p = 0.49$ and $i = n - i$. Then we have the following surprising table

N      P(A wins)
20     0.40
100    0.12
200    0.02

Note that this table is true when the odds are only slightly against A and when A and B start off with equal funding; it is easy to see that in a typical gambler’s situation, the chance of winning is extremely small.

Definition 8.2. A random variable is a function

\[ X : S \to \mathbb{R} \]

from some sample space $S$ to the real line. A random variable acts as a “summary” of some aspect of an experiment.

Definition 8.3. A random variable $X$ is said to have the Bernoulli distribution if $X$ has only two possible values, 0 and 1, and there is some $p$ such that

\[ P(X = 1) = p \]

\[ P(X = 0) = 1 - p \]

We say that

\[ X \sim \mathrm{Bern}(p) \]
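Returning briefly to the gambler’s ruin example from this lecture: the closed-form solution for p_i can be evaluated directly to reproduce the table for p = 0.49 with equal starting funds. This is a minimal sketch; the function name `p_a_wins` and the floating-point tolerance used to detect p = q are assumptions made for illustration.

```python
def p_a_wins(i: int, n: int, p: float) -> float:
    """P(A wins) in gambler's ruin when A starts with i of the n total dollars."""
    q = 1.0 - p
    if abs(p - q) < 1e-12:
        return i / n  # fair game: the repeated-root case p = q
    r = q / p
    return (1 - r**i) / (1 - r**n)


if __name__ == "__main__":
    for total in (20, 100, 200):
        # Equal funding: A starts with half of the total.
        print(total, round(p_a_wins(total // 2, total, 0.49), 2))
```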


Note. We write $X = 1$ to denote the event

\[ \{s \in S : X(s) = 1\} = X^{-1}\{1\} \]

Definition 8.4. The distribution of the number of successes in $n$ independent Bern($p$) trials is called the binomial distribution and is given by

\[ P(X = k) = \binom{n}{k} p^k (1 - p)^{n-k} \]

where $0 \le k \le n$. We write

\[ X \sim \mathrm{Bin}(n, p) \]

Definition 8.5. The probability mass function (PMF) of a discrete random variable (a random variable with enumerable values) is a function that gives the probability that the random variable takes some value. That is, given a discrete random variable $X$, its PMF is

\[ f_X(x) = P(X = x) \]

In addition to our definition of the binomial distribution by its PMF, we can also express a random variable $X \sim \mathrm{Bin}(n, p)$ as a sum of indicator random variables,

\[ X = X_1 + \cdots + X_n \]

where

\[ X_i = \begin{cases} 1 & i\text{th trial succeeds} \\ 0 & \text{otherwise} \end{cases} \]

In other words, the $X_i$ are i.i.d. (independent, identically distributed) Bern($p$).

Lecture 9 — 9/21/11

Definition 9.1. The cumulative distribution function (CDF) of a random variable $X$ is

\[ F_X(x) = P(X \le x) \]

Note. The requirements for a PMF with values $p_i$ are that each $p_i \ge 0$ and $\sum_i p_i = 1$. For Bin($n, p$), we can easily verify this with the binomial theorem, which yields

\[ \sum_{k=0}^{n} \binom{n}{k} p^k q^{n-k} = (p + q)^n = 1 \]

Proposition 9.2. If $X, Y$ are independent random variables and $X \sim \mathrm{Bin}(n, p)$, $Y \sim \mathrm{Bin}(m, p)$, then

\[ X + Y \sim \mathrm{Bin}(n + m, p) \]

Proof. This is clear from our “story” definition of the binomial distribution, as well as from our indicator r.v.’s. Let us also check this using PMFs.

\[ P(X + Y = k) = \sum_{j=0}^{k} P(X + Y = k \mid X = j) P(X = j) \]

\[ = \sum_{j=0}^{k} P(Y = k - j \mid X = j) \binom{n}{j} p^j q^{n-j} \]

\[ = \sum_{j=0}^{k} P(Y = k - j) \binom{n}{j} p^j q^{n-j} \quad \text{(independence)} \]

\[ = \sum_{j=0}^{k} \binom{m}{k-j} p^{k-j} q^{m-(k-j)} \binom{n}{j} p^j q^{n-j} \]

\[ = p^k q^{n+m-k} \sum_{j=0}^{k} \binom{m}{k-j} \binom{n}{j} \]

\[ = \binom{n+m}{k} p^k q^{n+m-k} \quad \text{(Vandermonde)} \]

Example. Suppose we draw a random 5-card hand from a standard 52-card deck. We want to find the distribution of the number of aces in the hand. Let $X = \#\text{aces}$. We want to determine the PMF of $X$ (or the CDF, but the PMF is easier). We know that $P(X = k) = 0$ except if $k = 0, 1, 2, 3, 4$. This is clearly not binomial since the trials (of drawing cards) are not independent. For $k = 0, 1, 2, 3, 4$, we have

\[ P(X = k) = \frac{\binom{4}{k} \binom{48}{5-k}}{\binom{52}{5}} \]

which is just the probability of choosing $k$ out of the 4 aces and $5 - k$ of the non-aces. This is reminiscent of the elk problem in the homework.

Definition 9.3. Suppose we have $w$ white and $b$ black marbles, out of which we choose a simple random sample of $n$. The distribution of # of white marbles in the sample, which we will call $X$, is given by

\[ P(X = k) = \frac{\binom{w}{k} \binom{b}{n-k}}{\binom{w+b}{n}} \]

where $0 \le k \le w$ and $0 \le n - k \le b$. This is called the hypergeometric distribution, denoted HGeom($w, b, n$).

Proof. We should show that the above is a valid PMF. It is clearly nonnegative. We also have, by Vandermonde’s identity,

\[ \sum_{k=0}^{w} \frac{\binom{w}{k} \binom{b}{n-k}}{\binom{w+b}{n}} = \frac{\binom{w+b}{n}}{\binom{w+b}{n}} = 1 \]
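The validity check above can also be done by exact arithmetic for a concrete case. The following sketch evaluates the hypergeometric PMF for the aces example (w = 4 aces, b = 48 non-aces, n = 5 cards) using exact fractions; the function name `hgeom_pmf` is a hypothetical helper, not notation from the lecture.

```python
from fractions import Fraction
from math import comb


def hgeom_pmf(k: int, w: int, b: int, n: int) -> Fraction:
    """P(X = k) for X ~ HGeom(w, b, n), as an exact fraction."""
    # Outside the support (0 <= k <= w and 0 <= n - k <= b) the PMF is 0.
    if k < 0 or k > w or n - k < 0 or n - k > b:
        return Fraction(0)
    return Fraction(comb(w, k) * comb(b, n - k), comb(w + b, n))


if __name__ == "__main__":
    pmf = [hgeom_pmf(k, 4, 48, 5) for k in range(5)]
    print("PMF of #aces:", pmf)
    print("sum of PMF:", sum(pmf))
```

Using `Fraction` avoids floating-point rounding, so the sum comes out as exactly 1 rather than approximately 1.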


Note. The difference between the hypergeometric and binomial distributions is whether or not we sample with replacement. We would expect that in the limiting case of $n \to \infty$, they would behave similarly.

Lecture 10 — 9/23/11

Proposition 10.1 (Properties of CDFs). A function $F_X$ is a valid CDF iff the following hold about $F_X$:

1. monotonically nondecreasing

2. right-continuous

3. $\lim_{x \to -\infty} F_X(x) = 0$ and $\lim_{x \to \infty} F_X(x) = 1$.

Definition 10.2. Two random variables $X$ and $Y$ are independent if $\forall x, y$,

\[ P(X \le x, Y \le y) = P(X \le x) P(Y \le y) \]

In the discrete case, we can say equivalently that

\[ P(X = x, Y = y) = P(X = x) P(Y = y) \]

Note. As an aside before we move on to discuss averages and expected values, recall that

\[ \frac{1}{n} \sum_{i=1}^{n} i = \frac{n+1}{2} \]

Example. Suppose we want to find the average of 1, 1, 1, 1, 1, 3, 3, 5. We could just add these up and divide by 8, or we could formulate the average as a weighted average,

\[ \frac{5}{8} \cdot 1 + \frac{2}{8} \cdot 3 + \frac{1}{8} \cdot 5 \]

Definition 10.3. The expected value or average of a discrete random variable $X$ is

\[ E(X) = \sum_{x \in \mathrm{Im}(X)} x P(X = x) \]

Observation 10.4. Let $X \sim \mathrm{Bern}(p)$. Then

\[ E(X) = 1 \cdot P(X = 1) + 0 \cdot P(X = 0) = p \]

Definition 10.5. If $A$ is some event, then an indicator random variable for $A$ is

\[ X = \begin{cases} 1 & A \text{ occurs} \\ 0 & \text{otherwise} \end{cases} \]

By definition, $X \sim \mathrm{Bern}(P(A))$, and by the above,

\[ E(X) = P(A) \]

The above shows that to get the probability of an event, we can simply compute the expected value of an indicator.

Observation 10.6. Let $X \sim \mathrm{Bin}(n, p)$. Then (using the binomial theorem),

\[ E(X) = \sum_{k=0}^{n} k \binom{n}{k} p^k q^{n-k} = \sum_{k=1}^{n} k \binom{n}{k} p^k q^{n-k} = \sum_{k=1}^{n} n \binom{n-1}{k-1} p^k q^{n-k} \]

\[ = np \sum_{k=1}^{n} \binom{n-1}{k-1} p^{k-1} q^{n-k} = np \sum_{j=0}^{n-1} \binom{n-1}{j} p^j q^{n-1-j} = np \]

Proposition 10.7. Expected value is linear; that is, for random variables $X$ and $Y$ and some constant $c$,

\[ E(X + Y) = E(X) + E(Y) \]

and

\[ E(cX) = cE(X) \]

Observation 10.8. Using linearity, given $X \sim \mathrm{Bin}(n, p)$, since we know

\[ X = X_1 + \cdots + X_n \]

where the $X_i$ are i.i.d. Bern($p$), we have

\[ E(X) = p + \cdots + p = np \]

Example. Suppose that, once again, we are choosing a five card hand out of a standard deck, with $X = \#\text{aces}$. If $X_i$ is an indicator of the $i$th card being an ace, we have

\[ E(X) = E(X_1 + \cdots + X_5) = E(X_1) + \cdots + E(X_5) \]

\[ = 5 E(X_1) \quad \text{(by symmetry)} \]

\[ = 5 P(\text{first card is ace}) = \frac{5}{13} \]

Note that this holds even though the $X_i$ are dependent.

Definition 10.9. The geometric distribution, Geom($p$), is the number of failures of independent Bern($p$) trials before the first success. Its PMF is given by (for $X \sim \mathrm{Geom}(p)$)

\[ P(X = k) = q^k p \]


for $k \in \mathbb{N}$. Note that this PMF is valid since

\[ \sum_{k=0}^{\infty} p q^k = p \cdot \frac{1}{1 - q} = 1 \]

Observation 10.10. Let $X \sim \mathrm{Geom}(p)$. We have our formula for infinite geometric series,

\[ \sum_{k=0}^{\infty} q^k = \frac{1}{1 - q} \]

Taking the derivative of both sides gives

\[ \sum_{k=1}^{\infty} k q^{k-1} = \frac{1}{(1 - q)^2} \]

Then

\[ E(X) = \sum_{k=0}^{\infty} k p q^k = p \sum_{k=0}^{\infty} k q^k = \frac{pq}{(1 - q)^2} = \frac{q}{p} \]

Alternatively, we can use first step analysis and write a recursive formula for $E(X)$. If we condition on what happens in the first Bernoulli trial, we have

\[ E(X) = 0 \cdot p + (1 + E(X)) q \]

\[ E(X) - q E(X) = q \]

\[ E(X) = \frac{q}{1 - q} = \frac{q}{p} \]

Lecture 11 — 9/26/11

Recall our assertion that $E$, the expected value function, is linear. We now prove this statement.

Proof. Let $X$ and $Y$ be discrete random variables. We want to show that $E(X + Y) = E(X) + E(Y)$.

\[ E(X + Y) = \sum_{t} t P(X + Y = t) \]

\[ = \sum_{s} (X + Y)(s) P(\{s\}) \]

\[ = \sum_{s} (X(s) + Y(s)) P(\{s\}) \]

\[ = \sum_{s} X(s) P(\{s\}) + \sum_{s} Y(s) P(\{s\}) \]

\[ = \sum_{x} x P(X = x) + \sum_{y} y P(Y = y) \]

\[ = E(X) + E(Y) \]

The proof that $E(cX) = cE(X)$ is similar.

Definition 11.1. The negative binomial distribution, NB($r, p$), is given by the number of failures of independent Bern($p$) trials before the $r$th success. The PMF for $X \sim \mathrm{NB}(r, p)$ is given by

\[ P(X = n) = \binom{n + r - 1}{r - 1} p^r (1 - p)^n \]

for $n \in \mathbb{N}$.

Observation 11.2. Let $X \sim \mathrm{NB}(r, p)$. We can write $X = X_1 + \cdots + X_r$ where each $X_i$ is the number of failures between the $(i - 1)$th and $i$th success. Then $X_i \sim \mathrm{Geom}(p)$. Thus,

\[ E(X) = E(X_1) + \cdots + E(X_r) = \frac{rq}{p} \]

Observation 11.3. Let $X \sim \mathrm{FS}(p)$, where FS($p$) is the time until the first success of independent Bern($p$) trials, counting the success. Then if we take $Y = X - 1$, we have $Y \sim \mathrm{Geom}(p)$. So,

\[ E(X) = E(Y) + 1 = \frac{q}{p} + 1 = \frac{1}{p} \]

Example. Suppose we have a random permutation of $\{1, \ldots, n\}$ with $n \ge 2$. What is the expected number of local maxima, that is, numbers greater than both of their neighbors?

Let $I_j$ be the indicator random variable for position $j$ being a local maximum ($1 \le j \le n$). We are interested in

\[ E(I_1 + \cdots + I_n) = E(I_1) + \cdots + E(I_n) \]

For the non-endpoint positions, in each local neighborhood of three numbers, the probability that the largest number is in the center position is $\frac{1}{3}$.

\[ 5, 2, \cdots, \underbrace{28, 3, 8}, \cdots, 14 \]

Moreover, these positions are all symmetrical. Analogously, the probability that an endpoint position is a local maximum is $\frac{1}{2}$. Then we have

\[ E(I_1) + \cdots + E(I_n) = \frac{n - 2}{3} + \frac{2}{2} = \frac{n + 1}{3} \]

Example (St. Petersburg Paradox). Suppose you are given the offer to play a game where a coin is flipped until a heads is landed. Then, for the number of flips $i$ made up to and including the heads, you receive $2^i$ dollars. How much should you be willing to pay to play this game? That is, what price would make the game fair, or the expected value zero?

Let $X$ be the number of flips of the fair coin up to and including the first heads. Clearly, $X \sim \mathrm{FS}(\frac{1}{2})$. If we let $Y = 2^X$, we want to find $E(Y)$. We have

\[ E(Y) = \sum_{k=1}^{\infty} 2^k \cdot \frac{1}{2^k} = \sum_{k=1}^{\infty} 1 = \infty \]


This assumes, however, that our cash source is boundless. If we bound it at $2^K$ for some specific $K$, we should only bet $K$ dollars for a fair game; this is a sizeable difference.

Lecture 12 — 9/28/11

Definition 12.1. The Poisson distribution, Pois($\lambda$), is given by the PMF

\[ P(X = k) = \frac{e^{-\lambda} \lambda^k}{k!} \]

for $k \in \mathbb{N}$, $X \sim \mathrm{Pois}(\lambda)$. We call $\lambda$ the rate parameter.

Observation 12.2. Checking that this PMF is indeed valid, we have

\[ \sum_{k=0}^{\infty} e^{-\lambda} \frac{\lambda^k}{k!} = e^{-\lambda} e^{\lambda} = 1 \]

Its mean is given by

\[ E(X) = e^{-\lambda} \sum_{k=0}^{\infty} k \frac{\lambda^k}{k!} = e^{-\lambda} \sum_{k=1}^{\infty} \frac{\lambda^k}{(k-1)!} = \lambda e^{-\lambda} \sum_{k=1}^{\infty} \frac{\lambda^{k-1}}{(k-1)!} = \lambda e^{-\lambda} e^{\lambda} = \lambda \]

The Poisson distribution is often used for applications where we count the successes of a large number of trials where the per-trial success rate is small. For example, the Poisson distribution is a good starting point for counting the number of people who email you over the course of an hour. The number of chocolate chips in a chocolate chip cookie is another good candidate for a Poisson distribution, or the number of earthquakes in a year in some particular region.

Since the Poisson distribution is not bounded, these examples will not be precisely Poisson. However, in general, with a large number of events $A_i$ with small $P(A_i)$, and where the $A_i$ are all independent or “weakly dependent,” then the number of the $A_i$ that occur is approximately Pois($\lambda$), with $\lambda \approx \sum_{i=1}^{n} P(A_i)$. We call this a Poisson approximation.

Proposition 12.3. Let $X \sim \mathrm{Bin}(n, p)$. Then as $n \to \infty$, $p \to 0$, and where $\lambda = np$ is held constant, we have $X \sim \mathrm{Pois}(\lambda)$.

Proof. Fix $k$. Then as $n \to \infty$ and $p \to 0$,

\[ \lim_{\substack{n \to \infty \\ p \to 0}} P(X = k) = \lim_{\substack{n \to \infty \\ p \to 0}} \binom{n}{k} p^k (1 - p)^{n-k} \]

\[ = \lim_{\substack{n \to \infty \\ p \to 0}} \frac{n(n-1) \cdots (n-k+1)}{k!} \left(\frac{\lambda}{n}\right)^k \left(1 - \frac{\lambda}{n}\right)^n \left(1 - \frac{\lambda}{n}\right)^{-k} \]

\[ = \frac{\lambda^k}{k!} \cdot e^{-\lambda} \]

Example. Suppose we have $n$ people and we want to know the approximate probability that at least three individuals have the same birthday. There are $\binom{n}{3}$ triplets of people; for each triplet, let $I_{ijk}$ be the indicator r.v. that persons $i$, $j$, and $k$ have the same birthday. Let $X = \#\text{ triple matches}$. Then we know that

\[ E(X) = \binom{n}{3} \frac{1}{365^2} \]

To approximate $P(X \ge 1)$, we approximate $X \sim \mathrm{Pois}(\lambda)$ with $\lambda = E(X)$. Then we have

\[ P(X \ge 1) = 1 - P(X = 0) = 1 - e^{-\lambda} \frac{\lambda^0}{0!} = 1 - e^{-\lambda} \]

Lecture 13 — 9/30/11

Definition 13.1. Let $X$ be a random variable. Then $X$ has a probability density function (PDF) $f_X(x)$ if

\[ P(a \le X \le b) = \int_a^b f_X(x)\,dx \]

A valid PDF must satisfy

1. $\forall x$, $f_X(x) \ge 0$

2. $\int_{-\infty}^{\infty} f_X(x)\,dx = 1$

Note. For $\epsilon > 0$ very small, we have

\[ f_X(x_0) \cdot \epsilon \approx P\left(X \in \left(x_0 - \frac{\epsilon}{2}, x_0 + \frac{\epsilon}{2}\right)\right) \]

Theorem 13.2. If $X$ has PDF $f_X$, then its CDF is

\[ F_X(x) = P(X \le x) = \int_{-\infty}^{x} f_X(t)\,dt \]

If $X$ is continuous and has CDF $F_X$, then its PDF is

\[ f_X(x) = F_X'(x) \]

Moreover,

\[ P(a < X < b) = \int_a^b f_X(x)\,dx = F_X(b) - F_X(a) \]


Proof. By the Fundamental Theorem of Calculus.

Definition 13.3. The expected value of a continuous random variable $X$ is given by

\[ E(X) = \int_{-\infty}^{\infty} x f_X(x)\,dx \]

Giving the expected value is like giving a one number summary of the average, but it provides no information about the spread of a distribution.

Definition 13.4. The variance of a random variable $X$ is given by

\[ \mathrm{Var}(X) = E((X - EX)^2) \]

which is the expected value of the squared distance from $X$ to its mean; that is, it measures, on average, how far $X$ is from its mean.

We can’t use $E(X - EX)$ because, by linearity, we have

\[ E(X - EX) = EX - E(EX) = EX - EX = 0 \]

We would like to use $E|X - EX|$, but absolute value is hard to work with; instead, we have

Definition 13.5. The standard deviation of a random variable $X$ is

\[ \mathrm{SD}(X) = \sqrt{\mathrm{Var}(X)} \]

Note. Another way we can write variance is

\[ \mathrm{Var}(X) = E((X - EX)^2) \]

\[ = E(X^2 - 2X(EX) + (EX)^2) \]

\[ = E(X^2) - 2E(X)E(X) + (EX)^2 \]

\[ = E(X^2) - (EX)^2 \]

Definition 13.6. The uniform distribution, Unif($a, b$), is given by a completely random point chosen in the interval $[a, b]$. Note that the probability of picking a given point $x_0$ is exactly 0; the uniform distribution is continuous. The PDF for $U \sim \mathrm{Unif}(a, b)$ is given by

\[ f_U(x) = \begin{cases} c & a \le x \le b \\ 0 & \text{otherwise} \end{cases} \]

for some constant $c$. To find $c$, we note that, by the definition of PDF, we have

\[ \int_a^b c\,dx = 1 \]

\[ c(b - a) = 1 \]

\[ c = \frac{1}{b - a} \]

The CDF, then, is given by

\[ F_U(x) = \int_{-\infty}^{x} f_U(t)\,dt = \int_a^x f_U(t)\,dt = \int_a^x \frac{1}{b - a}\,dt = \frac{x - a}{b - a} \]

Observation 13.7. The expected value of an r.v. $U \sim \mathrm{Unif}(a, b)$ is

\[ E(U) = \int_a^b \frac{x}{b - a}\,dx = \left. \frac{x^2}{2(b - a)} \right|_a^b = \frac{b^2 - a^2}{2(b - a)} = \frac{(b + a)(b - a)}{2(b - a)} = \frac{b + a}{2} \]

This is the midpoint of the interval $[a, b]$.

Finding the variance of $U \sim \mathrm{Unif}(a, b)$, however, is a bit more trouble. We need to determine $E(U^2)$, but it is too much of a hassle to figure out the PDF of $U^2$. Ideally, things would be as simple as

\[ E(U^2) = \int_{-\infty}^{\infty} x^2 f_U(x)\,dx \]

Fortunately, this is true:

Theorem 13.8 (Law of the Unconscious Statistician (LOTUS)). Let $X$ be a continuous random variable, $g : \mathbb{R} \to \mathbb{R}$ continuous. Then

\[ E(g(X)) = \int_{-\infty}^{\infty} g(x) f_X(x)\,dx \]

where $f_X$ is the PDF of $X$. This allows us to determine the expected value of $g(X)$ without knowing its distribution.

Observation 13.9. The variance of $U \sim \mathrm{Unif}(a, b)$ is given by

\[ \mathrm{Var}(U) = E(U^2) - (EU)^2 \]

\[ = \int_a^b x^2 f_U(x)\,dx - \left(\frac{b + a}{2}\right)^2 \]

\[ = \frac{1}{b - a} \int_a^b x^2\,dx - \left(\frac{b + a}{2}\right)^2 \]


b 2

1

b+a

x3 =

· −

b−a 3 2

a

a3

(b + a)2

b3

−

−

=

3(b − a) 3(b − a)

4

2

(b − a)

=

12

The following table is useful for comparing discrete

and continuous random variables:

P?F

CDF

E(X)

Var(X)

E(g(X))

[LOTUS]

discrete

PMF P (X = x)

FX (x) = P (X ≤ x)

P

x xP (X = x)

EX 2 − (EX)2

P

x g(x)P (X = x)

continuous

PDF fX (x)

FX (x) = P (X ≤ x)

R∞

xfX (x) dx

−∞

2

EX − (EX)2

R∞

g(x)fX (x) dx

−∞

Lecture 14 — 10/3/11

Theorem 14.1 (Universality of the Uniform). Let $U \sim \mathrm{Unif}(0, 1)$ and let $F$ be a strictly increasing CDF. Then for $X = F^{-1}(U)$, we have $X \sim F$. Moreover, for any random variable $X$, if $X \sim F$, then $F(X) \sim \mathrm{Unif}(0, 1)$.

Proof. We have

$$P(X \le x) = P(F^{-1}(U) \le x) = P(U \le F(x)) = F(x)$$

since $P(U \le F(x))$ is the length of the interval $[0, F(x)]$, which is $F(x)$. For the second part, for $0 < x < 1$,

$$P(F(X) \le x) = P(X \le F^{-1}(x)) = F(F^{-1}(x)) = x$$

since $F$ is $X$'s CDF. But this shows that $F(X) \sim \mathrm{Unif}(0, 1)$.

Example. Let $F(x) = 1 - e^{-x}$ with $x > 0$ be the CDF of an r.v. $X$. Then $F(X) = 1 - e^{-X} \sim \mathrm{Unif}(0, 1)$ by an application of the second part of Universality of the Uniform.

Example. Let $F(x) = 1 - e^{-x}$ with $x > 0$, and also let $U \sim \mathrm{Unif}(0, 1)$. Suppose we want to simulate $F$ with a random variable $X$; that is, $X \sim F$. Then computing the inverse

$$F^{-1}(u) = -\ln(1 - u)$$

yields $F^{-1}(U) = -\ln(1 - U) \sim F$.

Proposition 14.2. The standard uniform distribution is symmetric; that is, if $U \sim \mathrm{Unif}(0, 1)$, then also $1 - U \sim \mathrm{Unif}(0, 1)$.

Intuitively, this is true because there is no difference between measuring $U$ from the right vs. from the left of $[0, 1]$.

The general uniform distribution is also linear; that is, for $b > 0$, $a + bU$ is uniform on the interval $[a, a + b]$. A nonlinear function of $U$ is, in general, no longer uniform.

Definition 14.3. We say that random variables $X_1, \ldots, X_n$ are independent if

• for continuous, $P(X_1 \le x_1, \ldots, X_n \le x_n) = P(X_1 \le x_1) \cdots P(X_n \le x_n)$

• for discrete, $P(X_1 = x_1, \ldots, X_n = x_n) = P(X_1 = x_1) \cdots P(X_n = x_n)$

The expressions on the LHS are called joint CDFs and joint PMFs respectively.

Note that pairwise independence does not imply independence.

Example. Consider the penny matching game, where $X_1, X_2 \sim \mathrm{Bern}(\frac{1}{2})$, i.i.d., and let $X_3$ be the indicator r.v. for the event $X_1 = X_2$ (the r.v. for winning the game). All of these are pairwise independent, but $X_3$ is completely determined by the combined outcomes of $X_1$ and $X_2$, so the three are not independent.

Definition 14.4. The standard normal distribution, denoted $N(0, 1)$, is defined by PDF

$$f(z) = c e^{-z^2/2}$$

where $c$ is the normalizing constant required to have $f$ integrate to 1.

Proof. We want to prove that our PDF is valid; to do so, we will simply determine the value of the normalizing constant that makes it so. We will integrate the square of the PDF sans constant because it is easier than integrating naïvely:

$$\int_{-\infty}^{\infty} e^{-z^2/2}\,dz \int_{-\infty}^{\infty} e^{-z^2/2}\,dz = \int_{-\infty}^{\infty} e^{-x^2/2}\,dx \int_{-\infty}^{\infty} e^{-y^2/2}\,dy$$

$$= \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} e^{-(x^2+y^2)/2}\,dx\,dy$$

$$= \int_0^{2\pi}\int_0^{\infty} e^{-r^2/2}\, r\,dr\,d\theta$$

Substituting $u = \frac{r^2}{2}$, $du = r\,dr$,

$$= \int_0^{2\pi}\int_0^{\infty} e^{-u}\,du\,d\theta = 2\pi$$

So our normalizing constant is $c = \frac{1}{\sqrt{2\pi}}$.
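The simulation example for Universality of the Uniform (using $F^{-1}(u) = -\ln(1 - u)$ for $F(x) = 1 - e^{-x}$) can be sketched in a few lines of Python. This is only an illustrative sketch: the function names, the seed, and the sample size are assumptions chosen for the demonstration, not part of the lecture.

```python
import math
import random


def sample_expo1(rng: random.Random) -> float:
    """Draw one sample with CDF F(x) = 1 - e^{-x} as F^{-1}(U) = -ln(1 - U)."""
    u = rng.random()            # U is uniform on [0, 1), so 1 - u lies in (0, 1]
    return -math.log(1.0 - u)


def empirical_cdf(samples, x):
    """Fraction of the samples that are <= x."""
    return sum(s <= x for s in samples) / len(samples)


rng = random.Random(110)        # arbitrary fixed seed so runs are repeatable
samples = [sample_expo1(rng) for _ in range(100_000)]

# Compare the empirical CDF with the target F(x) = 1 - e^{-x} at a few points.
for x in (0.5, 1.0, 2.0):
    print(x, round(empirical_cdf(samples, x), 3), round(1 - math.exp(-x), 3))
```

The two printed columns should agree to about two decimal places; the agreement improves as the sample size grows.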

Observation 14.5. Let us compute the mean and variance of $Z \sim N(0, 1)$. We have

$$EZ = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} z e^{-z^2/2}\,dz = 0$$

by symmetry (the integrand is odd). The variance reduces to

$$\mathrm{Var}(Z) = E(Z^2) - (EZ)^2 = E(Z^2)$$

By LOTUS,

$$E(Z^2) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} z^2 e^{-z^2/2}\,dz$$

$$\text{(evenness)} = \frac{2}{\sqrt{2\pi}} \int_0^{\infty} z^2 e^{-z^2/2}\,dz$$

$$\text{(by parts)} = \frac{2}{\sqrt{2\pi}} \int_0^{\infty} \underbrace{z}_{u}\, \underbrace{z e^{-z^2/2}\,dz}_{dv}$$

$$= \frac{2}{\sqrt{2\pi}} \left( uv \Big|_0^{\infty} + \int_0^{\infty} e^{-z^2/2}\,dz \right)$$

$$= \frac{2}{\sqrt{2\pi}} \left( 0 + \frac{\sqrt{2\pi}}{2} \right) = 1$$

We use $\Phi$ to denote the standard normal CDF; so

$$\Phi(z) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{z} e^{-t^2/2}\,dt$$

By symmetry, we also have

$$\Phi(-z) = 1 - \Phi(z)$$

Lecture 15 — 10/5/11

Recall the standard normal distribution. Let $Z$ be an r.v., $Z \sim N(0, 1)$. Then $Z$ has CDF $\Phi$; it has $E(Z) = 0$, $\mathrm{Var}(Z) = E(Z^2) = 1$, and $E(Z^3) = 0$. (These are called the first, second, and third moments.) By symmetry, also $-Z \sim N(0, 1)$.

Definition 15.1. Let $X = \mu + \sigma Z$, with $\mu \in \mathbb{R}$ (the mean or center), $\sigma > 0$ (the SD or scale). Then we say $X \sim N(\mu, \sigma^2)$. This is the general normal distribution.

If $X \sim N(\mu, \sigma^2)$, we have $E(X) = \mu$ and $\mathrm{Var}(\mu + \sigma Z) = \sigma^2 \mathrm{Var}(Z) = \sigma^2$. We call $Z = \frac{X - \mu}{\sigma}$ the standardization of $X$. $X$ has CDF

$$P(X \le x) = P\left(\frac{X - \mu}{\sigma} \le \frac{x - \mu}{\sigma}\right) = \Phi\left(\frac{x - \mu}{\sigma}\right)$$

which yields a PDF of

$$f_X(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\left(\frac{x - \mu}{\sigma}\right)^2 / 2}$$

We also have $-X = -\mu + \sigma(-Z) \sim N(-\mu, \sigma^2)$.

Later, we will show that if $X_i \sim N(\mu_i, \sigma_i^2)$ are independent, then

$$X_i + X_j \sim N(\mu_i + \mu_j, \sigma_i^2 + \sigma_j^2)$$

and

$$X_i - X_j \sim N(\mu_i - \mu_j, \sigma_i^2 + \sigma_j^2)$$

Observation 15.2. If $X \sim N(\mu, \sigma^2)$, we have

$$P(|X - \mu| \le \sigma) \approx 68\%$$

$$P(|X - \mu| \le 2\sigma) \approx 95\%$$

$$P(|X - \mu| \le 3\sigma) \approx 99.7\%$$

Observation 15.3. We observe some properties of the variance.

$$\mathrm{Var}(X) = E((X - EX)^2) = E(X^2) - (EX)^2$$

For any constant $c$,

$$\mathrm{Var}(X + c) = \mathrm{Var}(X)$$

$$\mathrm{Var}(cX) = c^2 \mathrm{Var}(X)$$

Since variance is not linear, in general, $\mathrm{Var}(X + Y) \ne \mathrm{Var}(X) + \mathrm{Var}(Y)$. However, if $X$ and $Y$ are independent, we do have equality. On the other extreme,

$$\mathrm{Var}(X + X) = \mathrm{Var}(2X) = 4\mathrm{Var}(X)$$

Also, in general,

$$\mathrm{Var}(X) \ge 0$$

$$\mathrm{Var}(X) = 0 \iff \exists a : P(X = a) = 1$$

Observation 15.4. Let us compute the variance of the Poisson distribution. Let $X \sim \mathrm{Pois}(\lambda)$. We have

$$E(X^2) = \sum_{k=0}^{\infty} k^2 \frac{e^{-\lambda}\lambda^k}{k!}$$

To reduce this sum, we can do the following:

$$\sum_{k=0}^{\infty} \frac{\lambda^k}{k!} = e^{\lambda}$$

Taking the derivative w.r.t. $\lambda$,

$$\sum_{k=1}^{\infty} \frac{k\lambda^{k-1}}{k!} = e^{\lambda}$$

$$\lambda \sum_{k=0}^{\infty} \frac{k\lambda^{k-1}}{k!} = \lambda e^{\lambda}$$

that is,

$$\sum_{k=1}^{\infty} \frac{k\lambda^{k}}{k!} = \lambda e^{\lambda}$$

Repeating,

$$\sum_{k=1}^{\infty} \frac{k^2\lambda^{k-1}}{k!} = \lambda e^{\lambda} + e^{\lambda}$$

$$\sum_{k=1}^{\infty} \frac{k^2\lambda^{k}}{k!} = \lambda e^{\lambda}(\lambda + 1)$$

So,

$$E(X^2) = \sum_{k=0}^{\infty} k^2 \frac{e^{-\lambda}\lambda^k}{k!} = e^{-\lambda} e^{\lambda} \lambda(\lambda + 1) = \lambda^2 + \lambda$$

So for our variance, we have

$$\mathrm{Var}(X) = (\lambda^2 + \lambda) - \lambda^2 = \lambda$$

Observation 15.5. Let us compute the variance of the binomial distribution. Let $X \sim \mathrm{Bin}(n, p)$. We can write

$$X = I_1 + \cdots + I_n$$

where the $I_j$ are i.i.d. $\mathrm{Bern}(p)$. Then,

$$X^2 = I_1^2 + \cdots + I_n^2 + 2I_1I_2 + 2I_1I_3 + \cdots + 2I_{n-1}I_n$$

where $I_iI_j$ is the indicator of success on both $i$ and $j$.

$$E(X^2) = nE(I_1^2) + 2\binom{n}{2}E(I_1I_2)$$

$$= np + n(n - 1)p^2$$

$$= np + n^2p^2 - np^2$$

So,

$$\mathrm{Var}(X) = (np + n^2p^2 - np^2) - n^2p^2 = np(1 - p) = npq$$

Proof. (of Discrete LOTUS) We want to show that $E(g(X)) = \sum_x g(x)P(X = x)$. To do so, once again we can "ungroup" our expected value expression:

$$\sum_x g(x)P(X = x) = \sum_{s \in S} g(X(s))P(\{s\})$$

We can rewrite this as

$$\sum_x \sum_{s : X(s) = x} g(X(s))P(\{s\}) = \sum_x g(x) \sum_{s : X(s) = x} P(\{s\}) = \sum_x g(x)P(X = x)$$

Lecture 17 — 10/14/11

Definition 17.1. The exponential distribution, $\mathrm{Expo}(\lambda)$, is defined by PDF

$$f(x) = \lambda e^{-\lambda x}$$

for $x > 0$ and 0 elsewhere. We call $\lambda$ the rate parameter.

Integrating clearly yields 1, which demonstrates validity. Our CDF is given by

$$F(x) = \int_0^x \lambda e^{-\lambda t}\,dt = \begin{cases} 1 - e^{-\lambda x} & x > 0 \\ 0 & \text{otherwise} \end{cases}$$

Observation 17.2. We can normalize any $X \sim \mathrm{Expo}(\lambda)$ by multiplying by $\lambda$, which gives $Y = \lambda X \sim \mathrm{Expo}(1)$. We have

$$P(Y \le y) = P\left(X \le \frac{y}{\lambda}\right) = 1 - e^{-\lambda y / \lambda} = 1 - e^{-y}$$

Let us now compute the mean and variance of $Y \sim \mathrm{Expo}(1)$. We have

$$E(Y) = \int_0^{\infty} y e^{-y}\,dy = \left(-y e^{-y}\right)\Big|_0^{\infty} + \int_0^{\infty} e^{-y}\,dy = 1$$

for the mean. For the variance,

$$\mathrm{Var}(Y) = E(Y^2) - (EY)^2 = \int_0^{\infty} y^2 e^{-y}\,dy - 1 = 1$$

Then for $X = \frac{Y}{\lambda}$, we have $E(X) = \frac{1}{\lambda}$ and $\mathrm{Var}(X) = \frac{1}{\lambda^2}$.

Definition 17.3. A random variable $X$ has a memoryless distribution if

$$P(X \ge s + t \mid X \ge s) = P(X \ge t)$$

Intuitively, if we have a random variable that we interpret as a waiting time, memorylessness means that no matter how long we have already waited, the probability of having to wait a given time more is invariant.
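The two variance results derived above, $\mathrm{Var}(X) = \lambda$ for $X \sim \mathrm{Pois}(\lambda)$ and $\mathrm{Var}(X) = npq$ for $X \sim \mathrm{Bin}(n, p)$, can be sanity-checked by summing the PMFs directly. The following is a minimal sketch; the helper names, the parameter values, and the truncation point `kmax` for the Poisson sum are assumptions made for illustration.

```python
import math


def moments_from_pmf(pmf):
    """Return (mean, variance) for a PMF given as {value: probability}."""
    mean = sum(k * p for k, p in pmf.items())
    second_moment = sum(k * k * p for k, p in pmf.items())   # E(X^2) by LOTUS
    return mean, second_moment - mean ** 2


def poisson_pmf(lam, kmax=100):
    """Pois(lam) PMF truncated at kmax (assumes the tail beyond kmax is negligible)."""
    return {k: math.exp(-lam) * lam ** k / math.factorial(k) for k in range(kmax + 1)}


def binomial_pmf(n, p):
    """Bin(n, p) PMF on 0..n."""
    return {k: math.comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)}


lam, n, p = 3.5, 12, 0.3
pois_mean, pois_var = moments_from_pmf(poisson_pmf(lam))
bin_mean, bin_var = moments_from_pmf(binomial_pmf(n, p))

print(pois_mean, pois_var)             # both should be close to lam
print(bin_mean, bin_var, n * p * (1 - p))
```

Summing the PMF is a brute-force check, not a replacement for the derivations; it only confirms the closed forms at the chosen parameter values.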

Proposition 17.4. The exponential distribution is memoryless.

Proof. Let $X \sim \mathrm{Expo}(\lambda)$. We know that

$$P(X \ge t) = 1 - P(X \le t) = e^{-\lambda t}$$

Meanwhile,

$$P(X \ge s + t \mid X \ge s) = \frac{P(X \ge s + t, X \ge s)}{P(X \ge s)} = \frac{P(X \ge s + t)}{P(X \ge s)} = \frac{e^{-\lambda(s + t)}}{e^{-\lambda s}} = e^{-\lambda t} = P(X \ge t)$$

which is our desired result.

Example. Let $X \sim \mathrm{Expo}(\lambda)$. Then by linearity and by memorylessness,

$$E(X \mid X > a) = a + E(X - a \mid X > a) = a + \frac{1}{\lambda}$$

Lecture 18 — 10/17/11

Theorem 18.1. If $X$ is a positive, continuous random variable that is memoryless (i.e., its distribution is memoryless), then there exists $\lambda > 0$ such that $X \sim \mathrm{Expo}(\lambda)$.

Proof. Let $F$ be the CDF of $X$ and $G = 1 - F$. By memorylessness,

$$G(s + t) = G(s)G(t)$$

We can easily derive from this identity that $\forall k \in \mathbb{Q}$,

$$G(kt) = G(t)^k$$

This can be extended to all $k \in \mathbb{R}$. If we take $t = 1$, then we have

$$G(x) = G(1)^x = e^{x \ln G(1)}$$

But since $G(1) < 1$, we can define $\lambda = -\ln G(1)$, and we will have $\lambda > 0$. Then this gives us

$$F(x) = 1 - G(x) = 1 - e^{-\lambda x}$$

as desired.

Definition 18.2. A random variable $X$ has moment-generating function (MGF)

$$M(t) = E(e^{tX})$$

if $M(t)$ is bounded on some interval $(-\epsilon, \epsilon)$ about zero.

Observation 18.3. We might ask why we call $M$ "moment-generating." Consider the Taylor expansion of $M$:

$$E(e^{tX}) = E\left(\sum_{n=0}^{\infty} \frac{X^n t^n}{n!}\right) = \sum_{n=0}^{\infty} \frac{E(X^n) t^n}{n!}$$

Note that we cannot simply make use of linearity since our sum is infinite; however, this equation does hold for reasons beyond the scope of the course.

This observation also shows us that

$$E(X^n) = M^{(n)}(0)$$

Claim 18.4. If $X$ and $Y$ have the same MGF, then they have the same CDF.

We will not prove this claim.

Observation 18.5. If $X$ and $Y$ are independent, $X$ has MGF $M_X$, and $Y$ has MGF $M_Y$, then

$$M_{X+Y} = E(e^{t(X+Y)}) = E(e^{tX})E(e^{tY}) = M_X M_Y$$

The second equality comes from the claim (which we will prove later) that for $X, Y$ independent, $E(XY) = E(X)E(Y)$.

Example. Let $X \sim \mathrm{Bern}(p)$. Then

$$M(t) = E(e^{tX}) = pe^t + q$$

Suppose now that $X \sim \mathrm{Bin}(n, p)$. Again, we write $X = I_1 + \cdots + I_n$ where the $I_j$ are i.i.d. $\mathrm{Bern}(p)$. Then we see that

$$M(t) = (pe^t + q)^n$$

Example. Let $Z \sim N(0, 1)$. We have

$$M(t) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{tz - z^2/2}\,dz$$

$$\text{(completing the square)} = \frac{e^{t^2/2}}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-(1/2)(z - t)^2}\,dz$$

$$= e^{t^2/2}$$

Example. Suppose $X_1, X_2, \ldots$ are conditionally independent (given $p$) random variables that are $\mathrm{Bern}(p)$. Suppose also that $p$ is unknown. In the Bayesian approach, let us treat $p$ as a random variable. Let $p \sim \mathrm{Unif}(0, 1)$; we call this the prior distribution.

Let $S_n = X_1 + \cdots + X_n$. Then $S_n \mid p \sim \mathrm{Bin}(n, p)$. We want to find the posterior distribution, $p \mid S_n$, which will give us $P(X_{n+1} = 1 \mid S_n = k)$. Using "Bayes' Theorem,"

$$f(p \mid S_n = k) = \frac{P(S_n = k \mid p) f(p)}{P(S_n = k)}$$

Since the prior density is $f(p) = 1$ on $(0, 1)$, this is

$$f(p \mid S_n = k) = \frac{P(S_n = k \mid p)}{P(S_n = k)} \propto p^k (1 - p)^{n - k}$$

In the specific case of $S_n = n$, normalizing is easier:

$$f(p \mid S_n = n) = (n + 1)p^n$$

Computing $P(X_{n+1} = 1 \mid S_n = n)$ simply requires finding the expected value of an indicator whose success probability $p$ has the above posterior distribution $p \mid S_n = n$.

$$P(X_{n+1} = 1 \mid S_n = n) = \int_0^1 p\,(n + 1)p^n\,dp = \frac{n + 1}{n + 2}$$

Lecture 19 — 10/19/11

Observation 19.1. Let $X \sim \mathrm{Expo}(1)$. We want to determine the MGF $M$ of $X$. By LOTUS,

$$M(t) = E(e^{tX}) = \int_0^{\infty} e^{tx} e^{-x}\,dx = \int_0^{\infty} e^{-x(1 - t)}\,dx = \frac{1}{1 - t}, \quad t < 1$$

If we write

$$\frac{1}{1 - t} = \sum_{n=0}^{\infty} t^n = \sum_{n=0}^{\infty} n! \frac{t^n}{n!}$$

we get immediately that

$$E(X^n) = n!$$

Now take $X \sim \mathrm{Expo}(1)$ and let $Y = \frac{X}{\lambda} \sim \mathrm{Expo}(\lambda)$. So $Y^n = \frac{X^n}{\lambda^n}$, and hence

$$E(Y^n) = \frac{n!}{\lambda^n}$$

Observation 19.2. Let $Z \sim N(0, 1)$, and let us determine all its moments. We know that for $n$ odd, by symmetry,

$$E(Z^n) = 0$$

We previously showed that

$$M(t) = e^{t^2/2}$$

But we can write

$$\sum_{n=0}^{\infty} \frac{(t^2/2)^n}{n!} = \sum_{n=0}^{\infty} \frac{t^{2n}}{2^n n!} = \sum_{n=0}^{\infty} \frac{(2n)!}{2^n n!} \frac{t^{2n}}{(2n)!}$$

So

$$E(Z^{2n}) = \frac{(2n)!}{2^n n!}$$

Observation 19.3. Let $X \sim \mathrm{Pois}(\lambda)$. By LOTUS, its MGF is given by

$$E(e^{tX}) = \sum_{k=0}^{\infty} e^{tk} e^{-\lambda} \frac{\lambda^k}{k!} = e^{-\lambda} e^{\lambda e^t} = e^{\lambda(e^t - 1)}$$

Observation 19.4. Now let $X \sim \mathrm{Pois}(\lambda)$ and $Y \sim \mathrm{Pois}(\mu)$ independent. We want to find the distribution of $X + Y$. We can simply multiply their MGFs, yielding

$$M_X(t)M_Y(t) = e^{\lambda(e^t - 1)} e^{\mu(e^t - 1)} = e^{(\lambda + \mu)(e^t - 1)}$$

Thus, $X + Y \sim \mathrm{Pois}(\lambda + \mu)$.

Example. Suppose $X, Y$ above are dependent; specifically, take $X = Y$. Then $X + Y = 2X$. But this cannot be Poisson since it only takes on even values. We could also compute the mean and variance

$$E(2X) = 2\lambda$$

$$\mathrm{Var}(2X) = 4\lambda$$

but they should be equal for the Poisson.

We now turn to the study of joint distributions. Recall that joint distributions for independent random variables can be given simply by multiplying their CDFs; we want also to study cases where random variables are not independent.

Definition 19.5. Let $X, Y$ be random variables. Their joint CDF is given by

$$F(x, y) = P(X \le x, Y \le y)$$

In the discrete case, $X$ and $Y$ have a joint PMF given by

$$P(X = x, Y = y)$$

and in the continuous case, $X$ and $Y$ have a joint PDF given by

$$f(x, y) = \frac{\partial^2}{\partial x\,\partial y} F(x, y)$$

and we can compute

$$P((X, Y) \in B) = \iint_B f(x, y)\,dx\,dy$$

Their separate CDFs, PMFs, and PDFs (e.g., $P(X \le x)$) are referred to as marginal CDFs, PMFs, or PDFs. $X$ and $Y$ are independent precisely when the joint CDF is equal to the product of the marginal CDFs:

$$F(x, y) = F_X(x)F_Y(y)$$

We can show that, equivalently, we can say

$$P(X = x, Y = y) = P(X = x)P(Y = y)$$

or

$$f(x, y) = f_X(x)f_Y(y)$$

for all $x, y \in \mathbb{R}$.

Definition 19.6. To get the marginal PMF or PDF of a random variable $X$ from its joint PMF or PDF with another random variable $Y$, we can marginalize over $Y$ by computing

$$P(X = x) = \sum_y P(X = x, Y = y)$$

or

$$f_X(x) = \int_{-\infty}^{\infty} f_{X,Y}(x, y)\,dy$$

Example. Let $X \sim \mathrm{Bern}(p)$, $Y \sim \mathrm{Bern}(q)$. Suppose they have joint PMF given by

         Y = 0   Y = 1
X = 0     2/6     1/6     3/6
X = 1     2/6     1/6     3/6
          4/6     2/6

Here we have computed the marginal probabilities (in the margin), and they demonstrate that $X$ and $Y$ are independent.

Example. Let us define the uniform distribution on the unit square, $\{(x, y) : x, y \in [0, 1]\}$. We want the joint PDF to be constant everywhere in the square and 0 otherwise; that is,

$$f(x, y) = \begin{cases} c & 0 \le x \le 1,\ 0 \le y \le 1 \\ 0 & \text{otherwise} \end{cases}$$

Normalizing, we simply need $c = \frac{1}{\text{area}} = 1$. It is apparent that the marginal PDFs are both uniform.

Example. Let us define the uniform distribution on the unit disc, $\{(x, y) : x^2 + y^2 \le 1\}$. The joint PDF can be given by

$$f(x, y) = \begin{cases} \frac{1}{\pi} & x^2 + y^2 \le 1 \\ 0 & \text{otherwise} \end{cases}$$

Given $X = x$, we have $-\sqrt{1 - x^2} \le y \le \sqrt{1 - x^2}$. We might guess that the marginal distribution of $Y$ is uniform, but it turns out that this is not the case; moreover, $X$ and $Y$ are clearly dependent here, since the range of $Y$ depends on $x$.

Lecture 20 — 10/21/11

Definition 20.1. Let $X$ and $Y$ be random variables. Then the conditional PDF of $Y \mid X$ is

$$f_{Y|X}(y \mid x) = \frac{f_{X,Y}(x, y)}{f_X(x)} = \frac{f_{X|Y}(x \mid y) f_Y(y)}{f_X(x)}$$

Note that $Y \mid X$ is shorthand for $Y \mid X = x$.

Example. Recall the PDF for our uniform distribution on the disk,

$$f(x, y) = \begin{cases} \frac{1}{\pi} & y^2 \le 1 - x^2 \\ 0 & \text{otherwise} \end{cases}$$

and marginalizing over $Y$, we have

$$f_X(x) = \int_{-\sqrt{1 - x^2}}^{\sqrt{1 - x^2}} \frac{1}{\pi}\,dy = \frac{2}{\pi}\sqrt{1 - x^2}$$

for $-1 \le x \le 1$. As a check, we could integrate this again with respect to $dx$ to ensure that it is 1. From this, it is easy to find the conditional PDF,

$$f_{Y|X}(y \mid x) = \frac{1/\pi}{\frac{2}{\pi}\sqrt{1 - x^2}} = \frac{1}{2\sqrt{1 - x^2}}$$

for $-\sqrt{1 - x^2} \le y \le \sqrt{1 - x^2}$. Since we are holding $x$ constant, we see that $Y \mid X \sim \mathrm{Unif}(-\sqrt{1 - x^2}, \sqrt{1 - x^2})$.

From these computations, it is clear, in many ways, that $X$ and $Y$ are not independent. It is not true that $f_{X,Y} = f_X f_Y$, nor that $f_{Y|X} = f_Y$.

Proposition 20.2. Let $X, Y$ have joint PDF $f$, and let $g : \mathbb{R}^2 \to \mathbb{R}$. Then

$$E(g(X, Y)) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} g(x, y) f(x, y)\,dx\,dy$$

This is LOTUS in two dimensions.

Theorem 20.3. If $X, Y$ are independent random variables, then $E(XY) = E(X)E(Y)$.

Proof. We will prove this in the continuous case. Using LOTUS, we have

$$E(XY) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} xy f_{X,Y}(x, y)\,dx\,dy$$

$$\text{(by independence)} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} xy f_X(x) f_Y(y)\,dx\,dy$$

$$= \int_{-\infty}^{\infty} E(X)\, y f_Y(y)\,dy$$

$$= E(X)E(Y)$$

as desired.
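The marginalization of Definition 19.6 and the independence check in the Bernoulli table example ($P(X = x, Y = y) = P(X = x)P(Y = y)$ for every cell) can be sketched with exact fractions. The dictionary layout and the function names below are assumptions made for illustration only.

```python
from fractions import Fraction as F

# Joint PMF from the table example, keyed by (x, y).
joint = {
    (0, 0): F(2, 6), (0, 1): F(1, 6),
    (1, 0): F(2, 6), (1, 1): F(1, 6),
}


def marginal(joint_pmf, axis):
    """Sum the joint PMF over the other variable (axis 0 gives X, axis 1 gives Y)."""
    out = {}
    for key, prob in joint_pmf.items():
        out[key[axis]] = out.get(key[axis], 0) + prob
    return out


def is_independent(joint_pmf):
    """True when every cell equals the product of its two marginals."""
    px, py = marginal(joint_pmf, 0), marginal(joint_pmf, 1)
    return all(joint_pmf[(x, y)] == px[x] * py[y] for x in px for y in py)


print(marginal(joint, 0))      # marginal PMF of X
print(marginal(joint, 1))      # marginal PMF of Y
print(is_independent(joint))
```

Using `Fraction` avoids floating-point round-off, so the equality test in `is_independent` is exact.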

Example. Let $X, Y \sim \mathrm{Unif}(0, 1)$ i.i.d.; we want to find $E|X - Y|$. By LOTUS (and since the joint PDF is 1), we want to integrate

$$E|X - Y| = \int_0^1\int_0^1 |x - y|\,dx\,dy$$

$$= \iint_{x > y} (x - y)\,dx\,dy + \iint_{x \le y} (y - x)\,dx\,dy$$

$$\text{(by symmetry)} = 2\iint_{x > y} (x - y)\,dx\,dy$$

$$= 2\int_0^1\int_y^1 (x - y)\,dx\,dy$$

$$= 2\int_0^1 \left(\frac{x^2}{2} - yx\right)\Bigg|_{x = y}^{1}\,dy$$

$$= \frac{1}{3}$$

If we let $M = \max\{X, Y\}$ and $L = \min\{X, Y\}$, then we would have $|X - Y| = M - L$, and hence also

$$E(M - L) = E(M) - E(L) = \frac{1}{3}$$

We also have

$$E(X + Y) = E(M + L) = E(M) + E(L) = 1$$

This gives

$$E(M) = \frac{2}{3} \qquad E(L) = \frac{1}{3}$$

Example (Chicken-Egg Problem). Suppose there are $N \sim \mathrm{Pois}(\lambda)$ eggs, each hatching with probability $p$, independently (these are Bernoulli). Let $X$ be the number of eggs that hatch. Thus, $X \mid N \sim \mathrm{Bin}(N, p)$. Let $Y$ be the number that don't hatch. Then $X + Y = N$.

Let us find the joint PMF of $X$ and $Y$.

$$P(X = i, Y = j) = \sum_{n=0}^{\infty} P(X = i, Y = j \mid N = n)P(N = n)$$

$$= P(X = i, Y = j \mid N = i + j)P(N = i + j)$$

$$= P(X = i \mid N = i + j)P(N = i + j)$$

$$= \frac{(i + j)!}{i!\,j!} p^i q^j\, e^{-\lambda} \frac{\lambda^{i + j}}{(i + j)!}$$

$$= \left(e^{-\lambda p} \frac{(\lambda p)^i}{i!}\right)\left(e^{-\lambda q} \frac{(\lambda q)^j}{j!}\right)$$

So $X \sim \mathrm{Pois}(\lambda p)$ and $Y \sim \mathrm{Pois}(\lambda q)$, and they are independent. In other words, the randomness of the number of eggs offsets the dependence between $X$ and $Y$ that exists when the number of eggs is fixed. This is a special property of the Poisson distribution.

Lecture 21 — 10/24/11

Theorem 21.1. Let $X \sim N(\mu_1, \sigma_1^2)$ and $Y \sim N(\mu_2, \sigma_2^2)$ be independent random variables. Then $X + Y \sim N(\mu_1 + \mu_2, \sigma_1^2 + \sigma_2^2)$.

Proof. Since $X$ and $Y$ are independent, we can simply multiply their MGFs. This is given by

$$M_{X+Y}(t) = M_X(t)M_Y(t)$$

$$= \exp\left(\mu_1 t + \sigma_1^2 \frac{t^2}{2}\right)\exp\left(\mu_2 t + \sigma_2^2 \frac{t^2}{2}\right)$$

$$= \exp\left((\mu_1 + \mu_2)t + (\sigma_1^2 + \sigma_2^2)\frac{t^2}{2}\right)$$

which yields our desired result.

Example. Let $Z_1, Z_2 \sim N(0, 1)$, i.i.d.; let us find $E|Z_1 - Z_2|$. By the above, $Z_1 - Z_2 \sim N(0, 2)$. Let $Z \sim N(0, 1)$. Then

$$E|Z_1 - Z_2| = E|\sqrt{2}Z| = \sqrt{2}\,E|Z|$$

where

$$E|Z| = \int_{-\infty}^{\infty} |z| \frac{1}{\sqrt{2\pi}} e^{-z^2/2}\,dz$$

$$\text{(evenness)} = 2\int_0^{\infty} z \frac{1}{\sqrt{2\pi}} e^{-z^2/2}\,dz = \sqrt{\frac{2}{\pi}}$$

so that $E|Z_1 - Z_2| = \sqrt{2}\sqrt{\frac{2}{\pi}} = \frac{2}{\sqrt{\pi}}$.

Definition 21.2. Let $X = (X_1, \ldots, X_k)$ be a multivariate random variable, $p = (p_1, \ldots, p_k)$ a probability vector with $p_j \ge 0$ and $\sum_j p_j = 1$. The multinomial distribution is given by assorting $n$ objects into $k$ categories, each object having probability $p_j$ of being in category $j$, and taking the number of objects in each category, $X_j$. If $X$ has the multinomial distribution, we write $X \sim \mathrm{Mult}_k(n, p)$.

The PMF of $X$ is given by

$$P(X_1 = n_1, \ldots, X_k = n_k) = \frac{n!}{n_1! \cdots n_k!}\, p_1^{n_1} \cdots p_k^{n_k}$$

if $\sum_j n_j = n$, and 0 otherwise.

Observation 21.3. Let $X \sim \mathrm{Mult}_k(n, p)$. Then the marginal distribution of $X_j$ is simply $X_j \sim \mathrm{Bin}(n, p_j)$, since each object is either in $j$ or not, and we have

$$E(X_j) = np_j \qquad \mathrm{Var}(X_j) = np_j(1 - p_j)$$

Observation 21.4. If we "lump" some of our categories together for $X \sim \mathrm{Mult}_k(n, p)$, then the result is still multinomial. That is, taking

$$Y = (X_1, \ldots, X_{l-1}, X_l + \cdots + X_k)$$

and

$$p' = (p_1, \ldots, p_{l-1}, p_l + \cdots + p_k)$$

we have $Y \sim \mathrm{Mult}_l(n, p')$, and this is true for any combinations of lumpings.
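The factorization in the Chicken-Egg Problem, $P(X = i, Y = j) = P(X = i)P(Y = j)$ with $X \sim \mathrm{Pois}(\lambda p)$ and $Y \sim \mathrm{Pois}(\lambda q)$, can be checked numerically cell by cell. This is a small sketch under assumed parameter values ($\lambda = 4$, $p = 0.3$) and an assumed grid of $(i, j)$ pairs; the function names are hypothetical.

```python
import math


def joint_pmf(i, j, lam, p):
    """P(X = i, Y = j) = P(X = i | N = i + j) * P(N = i + j)."""
    n = i + j
    binomial_part = math.comb(n, i) * p ** i * (1 - p) ** j
    poisson_part = math.exp(-lam) * lam ** n / math.factorial(n)
    return binomial_part * poisson_part


def pois_pmf(k, rate):
    """Pois(rate) PMF at k."""
    return math.exp(-rate) * rate ** k / math.factorial(k)


lam, p = 4.0, 0.3
q = 1 - p

# Largest absolute gap between the joint PMF and the product of the Poisson PMFs.
max_gap = max(
    abs(joint_pmf(i, j, lam, p) - pois_pmf(i, lam * p) * pois_pmf(j, lam * q))
    for i in range(15)
    for j in range(15)
)
print(max_gap)   # expected to be at floating-point round-off level
```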

Observation 21.5. Let $X \sim \mathrm{Mult}_k(n, p)$. Then given $X_1 = n_1$,

$$(X_2, \ldots, X_k) \sim \mathrm{Mult}_{k-1}(n - n_1, (p_2', \ldots, p_k'))$$

where

$$p_j' = \frac{p_j}{1 - p_1} = \frac{p_j}{p_2 + \cdots + p_k}$$

This is symmetric for all $j$.

Definition 21.6. The Cauchy distribution is the distribution of $T = \frac{X}{Y}$ with $X, Y \sim N(0, 1)$ i.i.d.

Note. The Cauchy distribution has no mean, but has the property that an average of many Cauchy random variables is still Cauchy.

Observation 21.7. Let us compute the PDF of the Cauchy distribution. The CDF is given by

$$P\left(\frac{X}{Y} \le t\right) = P\left(\frac{X}{|Y|} \le t\right) = P(X \le t|Y|)$$

$$= \int_{-\infty}^{\infty}\int_{-\infty}^{t|y|} \frac{1}{\sqrt{2\pi}} e^{-x^2/2} \frac{1}{\sqrt{2\pi}} e^{-y^2/2}\,dx\,dy$$

$$= \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-y^2/2} \int_{-\infty}^{t|y|} \frac{1}{\sqrt{2\pi}} e^{-x^2/2}\,dx\,dy$$

$$= \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-y^2/2}\,\Phi(t|y|)\,dy$$

$$= \sqrt{\frac{2}{\pi}} \int_0^{\infty} e^{-y^2/2}\,\Phi(ty)\,dy$$

There is little we can do to compute this integral. Instead, let us compute the PDF, calling the CDF above $F(t)$. Then we have

$$F'(t) = \sqrt{\frac{2}{\pi}} \int_0^{\infty} y e^{-y^2/2} \frac{1}{\sqrt{2\pi}} e^{-t^2y^2/2}\,dy$$

$$= \frac{1}{\pi} \int_0^{\infty} y e^{-(1 + t^2)y^2/2}\,dy$$

Substituting $u = \frac{(1 + t^2)y^2}{2} \implies du = (1 + t^2)y\,dy$,

$$= \frac{1}{\pi(1 + t^2)}$$

We could also have performed this computation using the Law of Total Probability. Let $\varphi$ be the standard normal PDF. We have

$$P(X \le t|Y|) = \int_{-\infty}^{\infty} P(X \le t|Y| \mid Y = y)\,\varphi(y)\,dy$$

$$\text{(by independence)} = \int_{-\infty}^{\infty} P(X \le t|y|)\,\varphi(y)\,dy$$

$$= \int_{-\infty}^{\infty} \Phi(t|y|)\,\varphi(y)\,dy$$

and then we proceed as before.

Lecture 22 — 10/26/11

Definition 22.1. The covariance of random variables $X$ and $Y$ is

$$\mathrm{Cov}(X, Y) = E((X - EX)(Y - EY))$$

Note. The following properties are immediately true of covariance:

1. $\mathrm{Cov}(X, X) = \mathrm{Var}(X)$

2. $\mathrm{Cov}(X, Y) = \mathrm{Cov}(Y, X)$

3. $\mathrm{Cov}(X, Y) = E(XY) - E(X)E(Y)$

4. $\forall c \in \mathbb{R}$, $\mathrm{Cov}(X, c) = 0$

5. $\forall c \in \mathbb{R}$, $\mathrm{Cov}(cX, Y) = c\,\mathrm{Cov}(X, Y)$

6. $\mathrm{Cov}(X, Y + Z) = \mathrm{Cov}(X, Y) + \mathrm{Cov}(X, Z)$

The last two properties demonstrate that covariance is bilinear. In general,

$$\mathrm{Cov}\left(\sum_{i=1}^{m} a_i X_i, \sum_{j=1}^{n} b_j Y_j\right) = \sum_{i,j} a_i b_j\,\mathrm{Cov}(X_i, Y_j)$$

Observation 22.2. We can use covariance to compute the variance of sums:

$$\mathrm{Var}(X + Y) = \mathrm{Cov}(X, X) + \mathrm{Cov}(X, Y) + \mathrm{Cov}(Y, X) + \mathrm{Cov}(Y, Y)$$

$$= \mathrm{Var}(X) + 2\,\mathrm{Cov}(X, Y) + \mathrm{Var}(Y)$$

and more generally,

$$\mathrm{Var}\left(\sum_i X_i\right) = \sum_i \mathrm{Var}(X_i) + 2\sum_{i < j} \mathrm{Cov}(X_i, X_j)$$

Theorem 22.3. If $X, Y$ are independent, then $\mathrm{Cov}(X, Y) = 0$ (we say that they are uncorrelated).

Example. The converse of the above is false. Let $Z \sim N(0, 1)$, $X = Z$, $Y = Z^2$, and let us compute the covariance.

$$\mathrm{Cov}(X, Y) = E(XY) - (EX)(EY) = E(Z^3) - (EZ)(EZ^2) = 0$$

But $X$ and $Y$ are very dependent, since $Y$ is a function of $X$.
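The counterexample $X = Z$, $Y = Z^2$ (uncorrelated but dependent) can be illustrated by simulation. The sketch below is only a rough numerical illustration: the seed, the sample size, and the choice to show dependence through the conditional mean of $Z^2$ given $|Z| > 1$ are all assumptions made here, not part of the lecture.

```python
import random


def sample_cov(xs, ys):
    """Sample version of Cov(X, Y) = E((X - EX)(Y - EY))."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n


rng = random.Random(22)                       # arbitrary fixed seed
z = [rng.gauss(0.0, 1.0) for _ in range(200_000)]
z_squared = [v * v for v in z]

# Covariance estimate: should be near 0, matching E(Z^3) - (EZ)(EZ^2) = 0.
cov_estimate = sample_cov(z, z_squared)

# Dependence: conditioning on |Z| > 1 changes the mean of Z^2.
overall_mean = sum(z_squared) / len(z_squared)
large = [y for x, y in zip(z, z_squared) if abs(x) > 1]
conditional_mean = sum(large) / len(large)

print(cov_estimate, overall_mean, conditional_mean)
```

The covariance estimate hovers around zero while the conditional mean is clearly larger than the overall mean, which is what "uncorrelated but dependent" looks like in data.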

Definition 22.4. The correlation of two random variables $X$ and $Y$ is

$$\mathrm{Cor}(X, Y) = \frac{\mathrm{Cov}(X, Y)}{\mathrm{SD}(X)\,\mathrm{SD}(Y)} = \mathrm{Cov}\left(\frac{X - EX}{\mathrm{SD}(X)}, \frac{Y - EY}{\mathrm{SD}(Y)}\right)$$

The operation of

$$\frac{X - EX}{\mathrm{SD}(X)}$$

is called standardization; it gives the result a mean of 0 and a variance of 1.

Theorem 22.5. $|\mathrm{Cor}(X, Y)| \le 1$.

Proof. We could apply Cauchy-Schwarz to get this result immediately, but we shall also provide a direct proof. WLOG, assume $X$ and $Y$ are standardized. Let $\rho = \mathrm{Cor}(X, Y)$. We have

$$\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y) + 2\rho = 2 + 2\rho$$

and we also have

$$\mathrm{Var}(X - Y) = \mathrm{Var}(X) + \mathrm{Var}(Y) - 2\rho = 2 - 2\rho$$

But since $\mathrm{Var} \ge 0$, this yields $-1 \le \rho \le 1$, which is our result.

Example. Let $(X_1, \ldots, X_k) \sim \mathrm{Mult}_k(n, p)$. We shall compute $\mathrm{Cov}(X_i, X_j)$ for all $i, j$. If $i = j$, then

$$\mathrm{Cov}(X_i, X_i) = \mathrm{Var}(X_i) = np_i(1 - p_i)$$

Suppose $i \ne j$. We can expect that the covariance will be negative, since more objects in category $i$ means fewer in category $j$. We have

$$\mathrm{Var}(X_i + X_j) = np_i(1 - p_i) + np_j(1 - p_j) + 2\,\mathrm{Cov}(X_i, X_j)$$

But by "lumping" $i$ and $j$ together, we also have

$$\mathrm{Var}(X_i + X_j) = n(p_i + p_j)(1 - (p_i + p_j))$$

Then solving for the covariance, we have

$$\mathrm{Cov}(X_i, X_j) = -np_ip_j$$

Note. Let $A$ be an event and $I_A$ its indicator random variable. It is clear that

$$I_A^n = I_A$$

for any $n \in \mathbb{N}$. It is also clear that

$$I_A I_B = I_{A \cap B}$$

Example. Let $X \sim \mathrm{Bin}(n, p)$. Write $X = X_1 + \cdots + X_n$ where the $X_j$ are i.i.d. $\mathrm{Bern}(p)$. Then

$$\mathrm{Var}(X_j) = E(X_j^2) - (EX_j)^2 = p - p^2 = p(1 - p)$$

It follows that

$$\mathrm{Var}(X) = np(1 - p)$$

since $\mathrm{Cov}(X_i, X_j) = 0$ for $i \ne j$ by independence.

Lecture 23 — 10/28/11

Example. Let $X \sim \mathrm{HGeom}(w, b, n)$. Let us write $p = \frac{w}{w + b}$ and $N = w + b$. Then we can write $X = X_1 + \cdots + X_n$ where the $X_j$ are $\mathrm{Bern}(p)$. (Note, however, that unlike with the binomial, the $X_j$ are not independent.) Then

$$\mathrm{Var}(X) = n\,\mathrm{Var}(X_1) + 2\binom{n}{2}\mathrm{Cov}(X_1, X_2)$$

$$= np(1 - p) + 2\binom{n}{2}\mathrm{Cov}(X_1, X_2)$$

Computing the covariance, we have

$$\mathrm{Cov}(X_1, X_2) = E(X_1X_2) - (EX_1)(EX_2)$$

$$= \frac{w}{w + b} \cdot \frac{w - 1}{w + b - 1} - \left(\frac{w}{w + b}\right)^2$$

$$= \frac{w}{w + b} \cdot \frac{w - 1}{w + b - 1} - p^2$$

and simplifying,

$$\mathrm{Var}(X) = \frac{N - n}{N - 1}\, np(1 - p)$$

The term $\frac{N - n}{N - 1}$ is called the finite population correction; it represents the "offset" from the binomial due to lack of replacement.

Theorem 23.1. Let $X$ be a continuous random variable with PDF $f_X$, and let $Y = g(X)$ where $g$ is differentiable and strictly increasing. Then the PDF of $Y$ is given by

$$f_Y(y) = f_X(x) \frac{dx}{dy}$$

where $y = g(x)$ and $x = g^{-1}(y)$. (Also recall from calculus that $\frac{dx}{dy} = \left(\frac{dy}{dx}\right)^{-1}$.)

Proof. From the CDF of $Y$, we get

$$P(Y \le y) = P(g(X) \le y)$$

$$= P(X \le g^{-1}(y)) = F_X(g^{-1}(y)) = F_X(x)$$

Then, differentiating, we get by the Chain Rule that

$$f_Y(y) = f_X(x) \frac{dx}{dy}$$

Example. Consider the log normal distribution, which is given by $Y = e^Z$ for $Z \sim N(0, 1)$. We have

$$f_Y(y) = \frac{1}{\sqrt{2\pi}} e^{-z^2/2} \frac{dz}{dy}$$

To put this in terms of $y$, we substitute $z = \ln y$. Moreover, we know that

$$\frac{dy}{dz} = e^z = y$$

and so,

$$f_Y(y) = \frac{1}{y} \frac{1}{\sqrt{2\pi}} e^{-(\ln y)^2/2}$$

for $y > 0$.

Theorem 23.2. Suppose that $X$ is a continuous random variable in $n$ dimensions, $Y = g(X)$ where $g : \mathbb{R}^n \to \mathbb{R}^n$ is continuously differentiable and invertible. Then

$$f_Y(y) = f_X(x) \left|\det \frac{dx}{dy}\right|$$

where

$$\frac{dx}{dy} = \begin{pmatrix} \frac{\partial x_1}{\partial y_1} & \cdots & \frac{\partial x_1}{\partial y_n} \\ \vdots & \ddots & \vdots \\ \frac{\partial x_n}{\partial y_1} & \cdots & \frac{\partial x_n}{\partial y_n} \end{pmatrix}$$

is the Jacobian matrix.

Observation 23.3. Let $T = X + Y$, where $X$ and $Y$ are independent. In the discrete case, we have

$$P(T = t) = \sum_x P(X = x)P(Y = t - x)$$

For the continuous case, we have

$$f_T(t) = (f_X * f_Y)(t) = \int_{-\infty}^{\infty} f_X(x) f_Y(t - x)\,dx$$

This is true because we have

$$F_T(t) = P(T \le t) = \int_{-\infty}^{\infty} P(X + Y \le t \mid X = x) f_X(x)\,dx = \int_{-\infty}^{\infty} F_Y(t - x) f_X(x)\,dx$$

Then taking the derivative of both sides,

$$f_T(t) = \int_{-\infty}^{\infty} f_X(x) f_Y(t - x)\,dx$$

We now briefly turn our attention to proving the existence of objects with some desired property $A$ using probability. We want to show that $P(A) > 0$ for some random object, which implies that some such object must exist.

Reframing this question, suppose each object in our universe of objects has some kind of "score" associated with this property; then we want to show that there is some object with a "good" score. But we know that there is an object with score at least equal to the average score, i.e., the expected score of a random object. Showing that this average is "high enough" will prove the existence of an object without specifying one.

Example. Suppose there are 100 people in 15 committees of 20 people each, and that each person is on exactly 3 committees. We want to show that there exist 2 committees with overlap $\ge 3$. Let us find the average overlap of two random committees. Using indicator random variables for the event that a given person is on both of those two committees, we get

$$E(\text{overlap}) = 100 \cdot \frac{\binom{3}{2}}{\binom{15}{2}} = \frac{300}{105} = \frac{20}{7}$$

Then there exists a pair of committees with overlap of at least $\frac{20}{7}$. But since all overlaps must be integral, there is a pair of committees with overlap $\ge 3$.

Lecture 24 — 10/31/11

Definition 24.1. The beta distribution, $\mathrm{Beta}(a, b)$ for $a, b > 0$, is defined by PDF

$$f(x) = \begin{cases} c\,x^{a-1}(1 - x)^{b-1} & 0 < x < 1 \\ 0 & \text{otherwise} \end{cases}$$

where $c$ is a normalizing constant (defined by the beta function).

The beta distribution is a flexible family of continuous distributions on $(0, 1)$. By flexible, we mean that the appearance of the distribution varies significantly depending on the values of its parameters. If $a = b = 1$, the beta reduces to the uniform. If $a = 2$ and $b = 1$, the beta appears as a line with positive slope. If $a = b = \frac{1}{2}$, the beta appears to be concave-up and parabolic; if $a = b = 2$, it is concave down.

The beta distribution is often used as a prior distribution for some parameter on $(0, 1)$. In particular, it is the conjugate prior to the binomial distribution.
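The shape claims for the beta family (flat for $a = b = 1$, a line for $a = 2, b = 1$, concave up for $a = b = \frac{1}{2}$, concave down for $a = b = 2$) can be probed numerically using only the unnormalized kernel $x^{a-1}(1 - x)^{b-1}$, since the constant $c$ does not change the shape. The sketch below uses a central second difference as a rough curvature indicator; the function names, the evaluation point $x = 0.5$, and the step size are assumptions made for illustration.

```python
def beta_kernel(x, a, b):
    """Unnormalized Beta(a, b) density x^(a-1) (1-x)^(b-1) for 0 < x < 1."""
    return x ** (a - 1) * (1 - x) ** (b - 1)


def second_difference(f, x, h=1e-3):
    """f(x+h) - 2 f(x) + f(x-h): positive where f is concave up, negative where concave down."""
    return f(x + h) - 2 * f(x) + f(x - h)


shapes = {
    "a=b=1 (uniform)": (1, 1),
    "a=2, b=1 (line)": (2, 1),
    "a=b=1/2 (concave up)": (0.5, 0.5),
    "a=b=2 (concave down)": (2, 2),
}

for label, (a, b) in shapes.items():
    curvature = second_difference(lambda x: beta_kernel(x, a, b), 0.5)
    print(label, curvature)
```

A second difference at a single point is only a spot check of curvature, not a proof of the global shape.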

Observation 24.2. Suppose that, based on some data, we have $X \mid p \sim \mathrm{Bin}(n, p)$, and that our prior distribution for $p$ is $p \sim \mathrm{Beta}(a, b)$. We want to determine the posterior distribution of $p$, $p \mid X$. We have

$$f(p \mid X = k) = \frac{P(X = k \mid p) f(p)}{P(X = k)}$$

$$= \frac{\binom{n}{k} p^k (1 - p)^{n-k}\, c\, p^{a-1}(1 - p)^{b-1}}{P(X = k)}$$

$$\propto p^{a+k-1}(1 - p)^{b+n-k-1}$$

So, we have $p \mid X \sim \mathrm{Beta}(a + X, b + n - X)$. We call the Beta the conjugate prior to the binomial because, with binomial data, a Beta prior yields a Beta posterior.

Observation 24.3. Let us find a specific case of the normalizing constant

$$c^{-1} = \int_0^1 x^k (1 - x)^{n-k}\,dx$$

To do this, consider the story of "Bayes' billiards." Suppose we have $n + 1$ billiard balls, all white; then we paint one pink and throw them along $(0, 1)$ all independently. Let $X$ be the number of balls to the left of the pink ball. Then conditioning on where the pink ball ends up, we have

$$P(X = k) = \int_0^1 P(X = k \mid p) \underbrace{f(p)}_{1}\,dp = \int_0^1 \binom{n}{k} p^k (1 - p)^{n-k}\,dp$$

where, given the pink ball's location, $X$ is simply binomial (each white ball has an independent chance $p$ of landing to the left). Note, however, that painting a ball pink and then throwing the balls along $(0, 1)$ is the same as throwing the balls along $(0, 1)$ and then painting one pink. But then it is clear that there is an equal chance for any given number from 0 to $n$ of white balls to be to the pink ball's left. So we have

$$\int_0^1 \binom{n}{k} p^k (1 - p)^{n-k}\,dp = \frac{1}{n + 1}$$

Lecture 25 — 11/2/11

Definition 25.1. The gamma function is given by

$$\Gamma(a) = \int_0^{\infty} x^{a-1} e^{-x}\,dx = \int_0^{\infty} x^a e^{-x} \frac{1}{x}\,dx$$

for any $a > 0$. The gamma function is a continuous extension of the factorial operator on natural numbers. For $n$ a positive integer,

$$\Gamma(n) = (n - 1)!$$

More generally,

$$\Gamma(x + 1) = x\Gamma(x)$$

Definition 25.2. The standard gamma distribution, $\mathrm{Gamma}(a, 1)$, is defined by PDF

$$\frac{1}{\Gamma(a)} x^{a-1} e^{-x}$$

for $x > 0$, which is simply the integrand of the gamma function, normalized. More generally, let $X \sim \mathrm{Gamma}(a, 1)$ and $Y = \frac{X}{\lambda}$. We say that $Y \sim \mathrm{Gamma}(a, \lambda)$. To get the PDF of $Y$, we simply change variables; we have $x = \lambda y$, so

$$f_Y(y) = f_X(x) \frac{dx}{dy} = \frac{1}{\Gamma(a)} (\lambda y)^a e^{-\lambda y} \frac{1}{\lambda y} \lambda = \frac{1}{\Gamma(a)} (\lambda y)^a e^{-\lambda y} \frac{1}{y}$$

Definition 25.3. We define a Poisson process as a process in which events occur continuously and independently such that in any time interval of length $t$, the number of events which occur is $N_t \sim \mathrm{Pois}(\lambda t)$ for some fixed rate parameter $\lambda$.

Observation 25.4. The time $T_1$ until the first event occurs is $\mathrm{Expo}(\lambda)$:

$$P(T_1 > t) = P(N_t = 0) = e^{-\lambda t}$$

which means that

$$P(T_1 \le t) = 1 - e^{-\lambda t}$$

as desired. More generally, the time until the next event is always $\mathrm{Expo}(\lambda)$; this is clear from the memoryless property.

Proposition 25.5. Let $T_n$ be the time of the $n$th event in a Poisson process with rate parameter $\lambda$. Then, for $X_j$ i.i.d. $\mathrm{Expo}(\lambda)$, we have

$$T_n = \sum_{j=1}^{n} X_j \sim \mathrm{Gamma}(n, \lambda)$$

The exponential distribution is the continuous analogue of the geometric distribution; in this sense, the gamma distribution is the continuous analogue of the negative binomial distribution.
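The Bayes' billiards identity from Observation 24.3, $\int_0^1 \binom{n}{k} p^k (1 - p)^{n-k}\,dp = \frac{1}{n + 1}$ for every $k$, can be verified exactly for small $n$. The sketch below does not use the billiards argument itself; as an alternative route chosen here for illustration, it expands $(1 - p)^{n-k}$ with the binomial theorem and integrates term by term. The function name is hypothetical.

```python
from fractions import Fraction
from math import comb


def billiards_integral(n, k):
    """Exact value of the integral over [0, 1] of C(n, k) p^k (1 - p)^(n - k).

    Uses (1 - p)^m = sum_i C(m, i) (-1)^i p^i and the fact that the
    integral of p^(k + i) over [0, 1] equals 1 / (k + i + 1).
    """
    m = n - k
    total = sum(Fraction((-1) ** i * comb(m, i), k + i + 1) for i in range(m + 1))
    return comb(n, k) * total


for n in range(5):
    print(n, [billiards_integral(n, k) for k in range(n + 1)])
```

Each row should list $n + 1$ copies of $\frac{1}{n + 1}$, reflecting that every position of the pink ball among the $n + 1$ balls is equally likely.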

Proof. One method of proof, which we will not use, would be to repeatedly convolve the PDFs of the i.i.d. $X_j$. Instead, we will use MGFs. Suppose that the $X_j$ are i.i.d. $\mathrm{Expo}(1)$; we will show that their sum is $\mathrm{Gamma}(n, 1)$.

The MGF of $X_j$ is given by

$$M_{X_j}(t) = \frac{1}{1 - t}$$

for $t < 1$. Then the MGF of $T_n$ is

$$M_{T_n}(t) = \left(\frac{1}{1 - t}\right)^n$$

also for $t < 1$. We will show that the gamma distribution has the same MGF.

Let $Y \sim \mathrm{Gamma}(n, 1)$. Then by LOTUS,

$$E(e^{tY}) = \frac{1}{\Gamma(n)} \int_0^{\infty} e^{ty} y^n e^{-y} \frac{1}{y}\,dy = \frac{1}{\Gamma(n)} \int_0^{\infty} y^n e^{-(1 - t)y} \frac{1}{y}\,dy$$

Changing variables, with $x = (1 - t)y$, then

$$E(e^{tY}) = \frac{(1 - t)^{-n}}{\Gamma(n)} \int_0^{\infty} x^n e^{-x} \frac{1}{x}\,dx = \left(\frac{1}{1 - t}\right)^n \frac{\Gamma(n)}{\Gamma(n)} = \left(\frac{1}{1 - t}\right)^n$$

Note that this is the MGF for any $n > 0$, although the sum of exponentials expression requires integral $n$.

Observation 25.6. Let us compute the moments of $X \sim \mathrm{Gamma}(a, 1)$. We want to compute $E(X^c)$. We have

$$E(X^c) = \frac{1}{\Gamma(a)} \int_0^{\infty} x^c x^a e^{-x} \frac{1}{x}\,dx = \frac{1}{\Gamma(a)} \int_0^{\infty} x^{a+c} e^{-x} \frac{1}{x}\,dx = \frac{\Gamma(a + c)}{\Gamma(a)}$$

For $c$ a positive integer, repeatedly applying $\Gamma(x + 1) = x\Gamma(x)$ gives

$$E(X^c) = \frac{a(a + 1) \cdots (a + c - 1)\Gamma(a)}{\Gamma(a)} = a(a + 1) \cdots (a + c - 1)$$

If instead, we take $X \sim \mathrm{Gamma}(a, \lambda)$, then we will have

$$E(X^c) = \frac{a(a + 1) \cdots (a + c - 1)}{\lambda^c}$$

Lecture 26 — 11/4/11

Observation 26.1 (Gamma-Beta). Let us take $X \sim \mathrm{Gamma}(a, \lambda)$ to be your waiting time in line at the bank, and $Y \sim \mathrm{Gamma}(b, \lambda)$ your waiting time in line at the post office. Suppose that $X$ and $Y$ are independent. Let $T = X + Y$; we know that this has distribution $\mathrm{Gamma}(a + b, \lambda)$.

Let us compute the joint distribution of $T$ and of $W = \frac{X}{X + Y}$, the fraction of time spent waiting at the bank. For simplicity of notation, we will take $\lambda = 1$. The joint PDF is given by

$$f_{T,W}(t, w) = f_{X,Y}(x, y) \left|\det \frac{\partial(x, y)}{\partial(t, w)}\right| = \frac{1}{\Gamma(a)\Gamma(b)} x^a e^{-x} y^b e^{-y} \frac{1}{xy} \left|\det \frac{\partial(x, y)}{\partial(t, w)}\right|$$

We must find the determinant of the Jacobian (here expressed in silly-looking notation). We know that

$$x + y = t \qquad \frac{x}{x + y} = w$$

Solving for $x$ and $y$, we easily find that

$$x = tw \qquad y = t(1 - w)$$

Then the determinant of our Jacobian is given by

$$\begin{vmatrix} w & t \\ 1 - w & -t \end{vmatrix} = -tw - t(1 - w) = -t$$

Taking the absolute value, we then get

$$f_{T,W}(t, w) = \frac{1}{\Gamma(a)\Gamma(b)} x^a e^{-x} y^b e^{-y} \frac{1}{xy}\, t$$

$$= \frac{1}{\Gamma(a)\Gamma(b)} w^{a-1}(1 - w)^{b-1}\, t^{a+b} e^{-t} \frac{1}{t}$$

$$= \frac{\Gamma(a + b)}{\Gamma(a)\Gamma(b)} w^{a-1}(1 - w)^{b-1} \cdot \frac{1}{\Gamma(a + b)} t^{a+b} e^{-t} \frac{1}{t}$$

This is a product of some function of $w$ with the PDF of $T$, so we see that $T$ and $W$ are independent. To find the marginal distribution of $W$, we note that the PDF of $T$ integrates to 1 just like any PDF, so we have

$$f_W(w) = \int_{-\infty}^{\infty} f_{T,W}(t, w)\,dt = \frac{\Gamma(a + b)}{\Gamma(a)\Gamma(b)} w^{a-1}(1 - w)^{b-1}$$

This yields $W \sim \mathrm{Beta}(a, b)$ and also gives the normalizing constant of the beta distribution.

It turns out that if $X$ were distributed according to any other distribution, we would not have independence, but proving so is out of the scope of the course.
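The gamma moment formula from Observation 25.6 can be checked numerically: for a positive integer $c$, $\Gamma(a + c)/\Gamma(a)$ equals the product $a(a + 1) \cdots (a + c - 1)$, and dividing by $\lambda^c$ gives the moments of $\mathrm{Gamma}(a, \lambda)$. The sketch below relies on Python's `math.gamma`; the function names and the test values of $a$, $c$, and $\lambda$ are assumptions made for illustration.

```python
import math


def gamma_moment(a, c, lam=1.0):
    """E(X^c) for X ~ Gamma(a, lam), computed as Gamma(a + c) / (Gamma(a) * lam^c)."""
    return math.gamma(a + c) / (math.gamma(a) * lam ** c)


def rising_product(a, c):
    """a (a + 1) ... (a + c - 1) for a nonnegative integer c (empty product is 1)."""
    out = 1.0
    for i in range(c):
        out *= a + i
    return out


for a in (0.5, 2.0, 3.7):
    for c in range(1, 5):
        print(a, c, gamma_moment(a, c), rising_product(a, c))

# Mean and variance of Gamma(a, lam) follow from the first two moments.
a, lam = 2.5, 4.0
mean = gamma_moment(a, 1, lam)
variance = gamma_moment(a, 2, lam) - mean ** 2
print(mean, variance)
```

With these moments the mean comes out as $a/\lambda$ and the variance as $a/\lambda^2$, consistent with the $\mathrm{Expo}(\lambda)$ case $a = 1$.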

Observation 26.2. Let us find $E(W)$ for $W \sim \mathrm{Beta}(a, b)$. Let us write $W = \frac{X}{X + Y}$ with $X$ and $Y$ defined as above. We have

$$E\left(\frac{X}{X + Y}\right) = \frac{E(X)}{E(X + Y)} = \frac{a}{a + b}$$

Note that in general, the first equality is false! However, because $X + Y$ and $\frac{X}{X + Y}$ are independent, they are uncorrelated, and hence the expectation of their product factors. So

$$E\left(\frac{X}{X + Y}\right) E(X + Y) = E(X)$$

Example. Let $U_1, \ldots, U_n$ be i.i.d. $\mathrm{Unif}(0, 1)$, and let us determine the distribution of $U_{(j)}$. Applying the above result, we have

$$f_{U_{(j)}}(x) = n \binom{n - 1}{j - 1} x^{j-1}(1 - x)^{n-j}$$

for $0 < x < 1$. Thus, we have $U_{(j)} \sim \mathrm{Beta}(j, n - j + 1)$. This confirms our earlier result that, for $U_1$ and $U_2$ i.i.d. $\mathrm{Unif}(0, 1)$, we have

$$E|U_1 - U_2| = E(U_{\max}) - E(U_{\min}) = \frac{1}{3}$$

because Umax ∼ Beta(2, 1) and Umin ∼ Beta(1, 2), which

Definition 26.3. Let X1 , . . . , Xn be i.i.d. The order