Schedules of Reinforcement

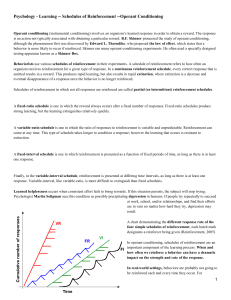

Types of Schedule, Schedule Performance, Analysis

What Is a Schedule of Reinforcement?

- A schedule of reinforcement arranges a contingency (relationship) between an operant and the delivery of a reinforcer.
- Continuous reinforcement (CRF)
  - Every response is reinforced.
- Partial or intermittent schedule
  - Not every response is reinforced.
- Schedules may also specify relationships between reinforcement contingencies and discriminative stimuli.

Schedules of Reinforcement as Feedback Functions

- In a simple control system:
  - A perceptual input is compared to an internally specified reference state for that perception.
  - The difference between them creates an error signal, which drives an output.
  - The output affects the environment so as to push the perceptual input toward its reference value (negative feedback), thus closing the loop.
- A schedule of reinforcement specifies how the output will affect the perceptual input; it thus constitutes an environmental feedback function.

[Figure: Control-System Diagram Showing Feedback Function. Labels: reference, input function, comparator, error, output function, output (key-pecks), disturbances, input, feedback (grain accesses). The feedback function is the reinforcement schedule, e.g. FR-5.]

Basic Intermittent Schedules

- Schedules may be based on number of responses, passage of time, or both.
- Ratio Schedules
  - Reinforcement after a specified number of responses have been completed.
- Interval Schedules
  - Reinforcement follows the first response after the passage of a specified amount of time.
- Differential Reinforcement Schedules
  - Reinforcement based on the spacing of responses in time or on response omission.

Fixed Ratio Schedules

- Reinforcement after a fixed number of responses has occurred.
- Symbolized FR-x, where x is the fixed ratio requirement.
- Example: FR-5: Five responses per reinforcement.
- Delivery of reinforcement resets the response counter back to zero.

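
The fixed-ratio rule above (count responses, reinforce the x-th, reset the counter) can be sketched in a few lines of Python. This is only an illustrative sketch: the class name `FixedRatioSchedule` and its `respond` method are hypothetical names chosen here, not part of any standard library or of the original slides.

```python
class FixedRatioSchedule:
    """Hypothetical FR-x schedule: every x-th response is reinforced."""

    def __init__(self, ratio: int) -> None:
        if ratio < 1:
            raise ValueError("ratio must be at least 1")
        self.ratio = ratio
        self.count = 0  # responses made since the last reinforcer

    def respond(self) -> bool:
        """Register one response; return True if it is reinforced."""
        self.count += 1
        if self.count >= self.ratio:
            self.count = 0  # reinforcer delivery resets the counter to zero
            return True
        return False


# FR-5: five responses per reinforcement.
fr5 = FixedRatioSchedule(5)
outcomes = [fr5.respond() for _ in range(12)]
reinforced_at = [i + 1 for i, hit in enumerate(outcomes) if hit]
print(reinforced_at)  # [5, 10]
```

With a ratio of 1 the same sketch behaves like continuous reinforcement (CRF), since every response is then reinforced.
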
Responding on Fixed Ratio Schedules

[Figure: FR-200 and FR-120]

- “Break-and-run” pattern:
  - High rate sustained during ratio completion
  - Pause follows delivery of reinforcer (“postreinforcement pause”)
  - Pause length increases with ratio size.
- Too high a ratio leads to ratio strain.

Analysis of Performance on Fixed-Ratio Schedules

- In ratio schedules, the rate of reinforcement depends directly on the rate of responding.
- Because reinforcement is more effective at short than at long delays, high response rates are more strongly reinforced than low ones. This positive feedback loop drives rate during the “ratio run” to the maximum.
- After reinforcement, alternate sources of potential reinforcement for various activities compete. Returning to the lever is only weakly encouraged owing to the delay between return to the lever and reinforcer delivery that is imposed by the ratio requirement. This explains the post-reinforcement pause, and why the pause is longer for higher ratios.

Variable Ratio Schedules

- Reinforcement after completion of a variable number of responses; schedule specified by the average number of responses per reinforcement.
- Symbolized VR-x, where x is the average ratio requirement.
- Example: VR-20: An average of 20 responses is required before the reinforcer will be delivered, but actual ratio varies unpredictably after each reinforcement.

Performance on Variable Ratio Schedules

[Figure: VR-173]

- Variable ratio schedules maintain a relatively high, steady rate of responding.
- Little or no evidence of post-reinforcement pausing
- Too high a ratio produces ratio strain.

Analysis of Performance on Variable-Ratio Schedules

- On ratio schedules, rate of reinforcement depends directly on rate of responding. This positive feedback loop drives responding upward toward the maximum.
- Because VR schedules occasionally present very short ratios, return to the lever is sometimes almost immediately reinforced, allowing this behavior to compete effectively against other behaviors. This explains the lack of post-reinforcement pause.
- Lack of pauses also rules out fatigue explanation for post-reinforcement pauses in FR schedules.

Fixed Interval Schedules

- Reinforcement follows the first response to occur after a specified fixed interval is over.
- Delivery of reinforcer resets the interval timer to zero.
- Symbolized FI x, where x is the interval size in seconds or minutes.
- Examples: FI 30-s, FI 1-m

Performance on Fixed Interval Schedules

[Figure: FI 4-m]

- Low or zero rate begins to increase perhaps half-way into the interval, accelerates to the end.
- This pattern is called the fixed interval scallop because of its scalloped or fluted appearance.

Analysis of Performance on Fixed Interval Schedules

- It is evident that rats can time the interval; therefore there must be internally generated stimuli that correlate with different times since reinforcement. These may function as discriminative stimuli; those occurring near the end of the interval are more closely associated with reinforcement, therefore generate higher rates of responding.
- Skinner demonstrated that an external clock stimulus would produce an inverted scallop if the clock were run backwards, supporting this analysis.
- Stable performance may represent a tradeoff between responding too soon (wasted effort) and responding too late (reduced rate of reinforcement).

Variable Interval Schedules

- Reinforcer delivered immediately following the first response to occur after the current interval is over.
- Interval size varies unpredictably after each reinforcer delivery; schedule specified by the average interval size.
- Symbolized as VI x, where x is the average interval length in seconds or minutes
- Examples: VI 20-s, VI 3-m

Performance on Variable Interval Schedules

[Figure: VI 3-m]

- VI schedules tend to sustain a relatively moderate but steady rate of responding.
- As the average interval size increases, response rate decreases.
- Sustain lower rates than VR schedules that produce the same rate of reinforcement

Analysis of Performance on Variable-Interval Schedules

- On variable-interval schedules, the probability that a response will be immediately followed by reinforcer delivery is roughly constant.
- Because the currently timed interval is sometimes very short, returning to the lever is occasionally followed almost immediately by reinforcer delivery, encouraging immediate return to responding (no pauses or scallops).
- Reinforcement rate is nearly independent of response rate, above some minimum. As the only way to know whether a reinforcer has been “set up” by the schedule is to respond, this encourages a moderate, steady rate of responding.

Differential Reinforcement Schedules

- Differential reinforcement of high rates (DRH)
  - A response is reinforced if it occurs within x seconds of the previous response
- Differential reinforcement of low rates (DRL)
  - A response is reinforced if it occurs no sooner than x seconds after the previous response
- Differential reinforcement of other behavior (DRO)
  - A reinforcer is delivered after x seconds, but only if no response has occurred. Also called an omission schedule.

Analysis of Differential Reinforcement Performance

- Inter-response times (IRTs) vary from response to response.
- Only those IRTs meeting the schedule requirement are followed immediately by reinforcement.
- These become more probable, other IRTs relatively less probable, and performance changes in the direction required by the schedule: Short IRTs for DRH, long IRTs for DRL, infinite IRTs for DRO.

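
The IRT criteria for the differential reinforcement schedules can be written out as a small Python sketch. All function names here are hypothetical. The treatment of boundary cases (an IRT exactly equal to x counts as meeting both the DRH and DRL criteria) and the DRO rule that each response restarts the x-second timer are assumptions made for illustration; the slides state only that the reinforcer is delivered after x seconds if no response has occurred.

```python
def inter_response_times(response_times):
    """IRTs: time elapsed between each response and the one before it."""
    return [later - earlier
            for earlier, later in zip(response_times, response_times[1:])]


def drh_reinforced(irt: float, x: float) -> bool:
    """DRH: reinforce a response occurring within x seconds of the previous one."""
    return irt <= x


def drl_reinforced(irt: float, x: float) -> bool:
    """DRL: reinforce a response occurring no sooner than x seconds after the previous one."""
    return irt >= x


def dro_reinforcer_times(response_times, x: float, session_end: float):
    """DRO: deliver a reinforcer whenever x seconds pass with no response.

    Assumption (not stated in the source): each response, and each
    reinforcer delivery, restarts the x-second timer.
    """
    pending = sorted(response_times)
    delivered = []
    timer_start = 0.0
    i = 0
    while timer_start + x <= session_end:
        due = timer_start + x
        if i < len(pending) and pending[i] <= due:
            timer_start = pending[i]  # a response occurred: restart the timer
            i += 1
        else:
            delivered.append(due)     # x seconds with no response
            timer_start = due
    return delivered


times = [0.0, 1.0, 1.5, 6.0, 6.5, 12.0]   # illustrative response times (s)
irts = inter_response_times(times)         # [1.0, 0.5, 4.5, 0.5, 5.5]
drh = [drh_reinforced(irt, 1.0) for irt in irts]
drl = [drl_reinforced(irt, 4.0) for irt in irts]
dro = dro_reinforcer_times([12.0], 5.0, 30.0)
print(drh)  # [True, True, False, True, False]
print(drl)  # [False, False, True, False, True]
print(dro)  # [5.0, 10.0, 17.0, 22.0, 27.0]
```

The same stream of responses earns reinforcement for its short IRTs under DRH and for its long IRTs under DRL, which is the selection process the analysis describes.
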
Applications

- The simple schedules just described were not intended to model “real-world” contingencies, but rather, to explore how schedule properties affect the patterning of behavior.
- Nevertheless, we can draw some parallels between these laboratory schedules and reinforcement contingencies found outside the laboratory. Some examples follow.

Piece-work

- Some jobs pay so much per item produced.
- The pay rate depends directly on the rate of production of pieces; thus this is a ratio schedule.
- Where each item requires several steps to complete, breaks will tend to occur after an item is completed rather than in the middle of assembly – a post-reinforcement pause.

Gambling

- The slot machine is an excellent example.
- Each response (put money in slot, pull lever) brings you closer to a pay-off. The faster you play, the sooner you win.
- How many responses you will have to make before a pay-off varies unpredictably after each win.
- It’s a variable-ratio schedule!
- And what do we know about VR schedules? They generate a high, steady rate of play.
- Just what the “house” wants!

Waiting for Company to Arrive

- Your guests are expected at 8 pm.
- As the time approaches, you glance out the front window to see if anyone has pulled into your driveway.
- The closer to 8 pm it gets, the more often you glance.
- If they are punctual, the first glance after 8 pm will find them in the drive.
- It’s (approximately) a fixed-interval schedule, complete with FI scallop.

Calling the Plumber

- One of the water pipes at your house has sprung a leak, and you are desperate to get your plumber out to fix it. He doesn’t have an answering machine, so you call, call, call, call, call.
- Finally, he answers the phone.
- It’s not how often you called that counted, but whether enough time had passed for him to be back from wherever he went. But you had no idea how long that would be.
- It’s a variable-interval schedule, and it generated a moderate, steady rate of calling.
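
The contrast behind the piece-work and plumber examples (on a ratio schedule the payoff tracks how fast you respond; on an interval schedule it mostly tracks elapsed time) can be illustrated with a rough Python sketch. To keep the output deterministic it uses fixed rather than variable requirements (FR-10 and FI 30-s) and evenly spaced responses over a 600-second session. These values and all function names are assumptions chosen for illustration, not taken from the slides.

```python
def ratio_reinforcers(n_responses: int, ratio: int) -> int:
    """Ratio schedule: one reinforcer per `ratio` completed responses."""
    return n_responses // ratio


def interval_reinforcers(response_times, interval: float) -> int:
    """Interval schedule: the first response after `interval` seconds is reinforced."""
    earned = 0
    timer_start = 0.0
    for t in sorted(response_times):
        if t - timer_start >= interval:
            earned += 1
            timer_start = t  # reinforcer delivery resets the interval timer
    return earned


def evenly_spaced(n_responses: int, session_seconds: float):
    """Hypothetical responder emitting responses at a constant rate."""
    gap = session_seconds / n_responses
    return [gap * (i + 1) for i in range(n_responses)]


SESSION = 600.0  # seconds; illustrative value
table = []
for n in (10, 60, 120, 240):
    table.append((n,
                  ratio_reinforcers(n, 10),
                  interval_reinforcers(evenly_spaced(n, SESSION), 30.0)))

# Each row: (responses in session, reinforcers on FR-10, reinforcers on FI 30-s)
print(table)  # [(10, 1, 10), (60, 6, 20), (120, 12, 20), (240, 24, 20)]
```

On the ratio schedule the reinforcer count grows in proportion to the number of responses, while on the interval schedule it levels off at 20 once responding exceeds a minimum rate. Calling the plumber four times as often does not bring him home any sooner.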