Blind Adaptive Sampling of Images


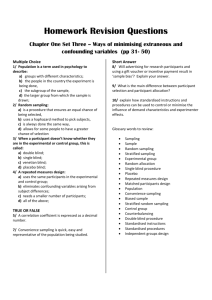

IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 21, NO. 4, APRIL 2012

Zvi Devir and Michael Lindenbaum

Abstract: Adaptive sampling schemes choose different sampling masks for different images. Blind adaptive sampling schemes use only the measurements that they obtain (without any additional or direct knowledge about the image) to choose the next sampling mask. In this paper, we present and discuss two blind adaptive sampling schemes. The first is a general scheme not restricted to a specific class of sampling functions. It is based on an underlying statistical model for the image, which is updated according to the available measurements. The second, less general but more practical, method uses the wavelet decomposition of an image. It estimates the magnitude of the unsampled wavelet coefficients and samples those with larger estimated magnitude first. Experimental results show the benefits of the proposed blind sampling schemes.

Index Terms: Adaptive sampling, blind sampling, image representation, statistical pursuit, wavelet decomposition.

I. INTRODUCTION

Image sampling is a method for extracting partial information from an image. In its simplest form, i.e., point sampling, every sample provides the value of the image at some location. In generalized sampling methods, every sample measures the inner product of the image with some sampling mask. Linear transforms may be considered as sampling processes that use a rich set of masks, defined by their basis functions; examples include the discrete cosine transform (DCT) and the discrete wavelet transform (DWT).

A progressive sampling scheme is a sequential sampling scheme that can be stopped after any number of samples, while providing a "good" sampling pattern. Progressive sampling is preferred when the number of samples is not predefined.
This is important, e.g., when we want to minimize the number of samples and terminate the sampling process when sufficient knowledge about the image is obtained.

Manuscript received July 08, 2010; revised October 10, 2011; accepted November 15, 2011. Date of publication December 23, 2011; date of current version March 21, 2012. The associate editor coordinating the review of this manuscript and approving it for publication was Prof. Charles Creusere. The authors are with the Computer Science Department, Technion–Israel Institute of Technology, Haifa 32000, Israel (e-mail: zdevir@cs.technion.ac.il; mic@cs.technion.ac.il). Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org. Digital Object Identifier 10.1109/TIP.2011.2181523

Adaptive schemes generate different sets of sampling masks for different images. Common sampling schemes (e.g., DCT and DWT) are nonadaptive, in the sense of using a fixed set of masks, regardless of the image. Adaptive schemes are potentially more efficient as they are allowed to use direct information from the image in order to better construct or choose the set of sampling masks. For example, using a directional DCT basis [21] that is adapted to the local gradient of an image patch is more efficient than using a regular 2-D DCT basis. Other examples of progressive adaptive sampling methods are the matching pursuit and the orthogonal matching pursuit (OMP) [11], [12], [14], which sample the image with a predefined set of masks and choose the best mask according to various criteria.

In this paper, we consider a class of schemes that we refer to as blind adaptive sampling schemes. Those schemes do not have direct access to the image and make exclusive use of indirect information gathered from previous measurements for generating their adaptive masks. A geological survey for underground oil reserves may be considered as an example of such a scheme.
Drilling can be regarded as point sampling of the underground geological structure, and the complete survey can be regarded as a progressive sampling scheme, which ends when either oil is found or funds are exhausted. This sampling scheme is blind in the sense that the only data directly available to the geologists are the measurements. The sampling scheme is adaptive in the sense that the previous measurements are taken into account when choosing the best spot for the next drill.

While blindness to the image necessarily makes the samples somewhat less efficient, it implies that the masks depend only on the previous measurements. Therefore, storing the various sampling masks is unnecessary. The sampling scheme functions as a kind of decoder, i.e., given the same set of measurements, it will produce the corresponding set of sampling masks. This property of blind sampling schemes stems from the deterministic nature of the sampling process. Similar ideas stand at the root of various coding schemes, such as adaptive Huffman codes (e.g., [20]) or binary compression schemes, which construct the same online dictionary during encoding and decoding (e.g., the Lempel–Ziv–Welch algorithm).

In this paper, we present two blind adaptive schemes, which can be considered as generalizations of the adaptive farthest point strategy (AFPS), a progressive blind adaptive sampling scheme proposed in [6]. The first method, called statistical pursuit, generates an optimal (in a statistical sense) set of masks and can be restricted to dictionaries of masks. The scheme maintains an underlying statistical model of the image, derived from the available information about the image (i.e., the measurements). The model is updated online as additional measurements are obtained. The scheme uses this statistical model to generate an optimal mask that provides the most additional information possible from the image.
The second method, called blind wavelet sampling, works with the limited yet large set of wavelet masks. It relies on an empirical statistical model of wavelet coefficients. Using this model, it estimates the magnitude of unknown wavelet coefficients from the sampled ones and samples the coefficients with larger estimated magnitude first. Our experiments indicate that this method collects information about the image much more efficiently than alternative nonadaptive methods.

The remainder of this paper is organized as follows: In Section II, the AFPS scheme is described as a particular case of a progressive blind adaptive sampling scheme. Section III presents the statistical pursuit scheme, followed by a presentation of the blind wavelet sampling scheme in Section IV. Experimental results are shown in Section V. Section VI concludes this paper and proposes related research paths.

1057-7149/$26.00 © 2011 IEEE

Fig. 1. One iteration of the nonadaptive FPS algorithm: (a) the sampled points (sites) and their corresponding Voronoi diagram; (b) the candidates for sampling (Voronoi vertices); (c) the farthest candidate chosen for sampling; and (d) the updated Voronoi diagram.

II. AFPS ALGORITHM

We begin by briefly describing an earlier blind adaptive sampling scheme [6], which inspired the work in this paper. The algorithm, denoted as AFPS, is based on the farthest point strategy (FPS), a simple, progressive, but nonadaptive point sampling scheme.

A. FPS Algorithm

In the FPS, a point in the image domain is progressively chosen, such that it is the farthest from all previously sampled points. This intuitive rule leads to a truly progressive sampling scheme, providing after every single sample a cumulative set of samples, which is uniform in a deterministic sense and becomes continuously denser [6]. To efficiently find its samples, the FPS scheme maintains a Voronoi diagram of the sampled points.
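Before turning to the Voronoi-based implementation, the selection rule itself can be illustrated by brute force. The following Python sketch is not the implementation of [6]; it assumes a discrete pixel grid, a Euclidean distance, an arbitrary first sample at a corner, and ties broken in raster order. The function name `farthest_point_sampling` and its parameters are hypothetical.

```python
import numpy as np

def farthest_point_sampling(height, width, n_samples, first=(0, 0)):
    """Brute-force FPS on a pixel grid; returns a list of (row, col) sites.

    Illustrative only: every pixel is a candidate, whereas an efficient
    implementation would restrict the search to Voronoi vertices.
    """
    rows, cols = np.mgrid[0:height, 0:width]
    # dist[r, c] holds the distance from pixel (r, c) to its nearest site so far.
    dist = np.full((height, width), np.inf)
    sites = []
    nxt = first  # assumption: the first sample is chosen arbitrarily
    for _ in range(n_samples):
        sites.append(nxt)
        dist = np.minimum(dist, np.hypot(rows - nxt[0], cols - nxt[1]))
        idx = np.argmax(dist)  # farthest pixel; ties resolved in raster order
        nxt = (int(idx // width), int(idx % width))
    return sites
```

Because the rule depends only on the positions of earlier samples, the resulting pattern is the same for every image, which is what makes this scheme nonadaptive.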
A Voronoi diagram [2] is a geometric structure that divides the image domain into cells corresponding to the sampled points (sites). Each cell contains exactly one site and all points in the image domain that are closer to that site than to any other site. An edge in the Voronoi diagram contains points equidistant to two sites. A vertex in the Voronoi diagram is equidistant to three sites (in the general case) and is thus a local maximum of the distance function. Therefore, in order to find the next sample, it is sufficient to consider only the Voronoi vertices (with some special considerations for points on the image boundary).

After each sampling iteration, the new sampled point becomes a site, and the Voronoi diagram is updated accordingly. Fig. 1 describes one iteration of the FPS algorithm. Note that, because the FPS algorithm is nonadaptive, it produces a uniform sampling pattern regardless of the given image content.

B. AFPS Algorithm

An adaptive, more efficient sampling scheme is derived from the FPS algorithm. Instead of using the Euclidean distance alone as a priority function, the geometrical distance is used along with either the estimated local variance of the image intensities or the equivalent local bandwidth. The resulting algorithm, denoted AFPS, samples the image more densely in places where it is more detailed and more sparsely where it is relatively smooth.

Fig. 2 shows the first 1024, 4096, and 8192 sampling points produced by the AFPS algorithm for the cameraman image, using a priority function that combines the distance of the candidate to its closest neighbors with a local variance estimate. A variant of the AFPS scheme, designed for range sampling using a regularized grid pattern, was presented in [5].

Fig. 2. First 1024, 4096, and 8192 point samples of the cameraman image, taken according to the AFPS scheme.

III. STATISTICAL PURSUIT

In this section, we propose a sampling scheme based on a direct statistical model of the image.
In contrast to point sampling schemes such as the AFPS, this scheme may choose sampling masks from an overcomplete family of basis functions or calculate optimal masks. The scheme updates an underlying statistical model for the image as more information is gathered during the sampling process.

A. Simple Statistical Model for Images

Images are often regarded as 2-D arrays of scalar values (gray levels or intensities). Yet, it is clear that arbitrary arrays of values do not resemble natural images. Natural images contain structures that are difficult to define explicitly. Several attempts were made to formulate advanced statistical models, which can approximate such global structures and provide good prediction for missing parts [9]. Still, the local structure is easier to predict, and there exist some low-level statistical models that model local behavior fairly well.

Here, we consider a common and particularly simple second-order local statistical model for images. We regard image $x$ as a Gaussian random vector, i.e.,

$$x \sim \mathcal{N}(\mu, C) \tag{1}$$

where $\mu$ is the mean vector and $C$ is the covariance matrix. For simplicity and without loss of generality, we assume $\mu = 0$.

Two neighboring pixels in an image often have similar gray level values (colors). Statistically speaking, their colors have a strong positive correlation, which weakens as their distance grows. The exponential correlation model [10], [13] is a second-order stationary model based on this observation. According to this model, the covariance between the intensities of two arbitrary pixels $x_i$ and $x_j$, located at $p_i$ and $p_j$, depends exponentially on their distance, i.e.,

$$c_{i,j} = \sigma^2 \exp\left(-\alpha \, \| p_i - p_j \|\right) \tag{2}$$

where $\sigma^2$ is the variance of the intensities and $\alpha$ determines how quickly the correlation drops.

B. Statistical Reconstruction

We consider linear sampling where the $i$th sample is generated as an inner product of image $x$ with some sampling mask $\phi_i$. That is, $y_i = \phi_i^T x$, where both the image and the mask are regarded as column vectors.
A sampling process provides us with a set of sampling masks $\{\phi_1, \dots, \phi_k\}$ and their corresponding measurements $\{y_1, \dots, y_k\}$. We wish to reconstruct an image from this partial information. Let $\Phi$ be a matrix containing the masks as its columns, and let $y$ be a column vector of the measurements. Using those matrix notations, $y = \Phi^T x$.

The underlying statistical model of the image may be used to obtain an image estimate $\hat{x}$ based on measurements $y$. For the second-order statistical model (1), the optimal estimator is linear [15]. The linear estimator is optimal in the $L_2$ sense, i.e., it minimizes the mean square error (MSE) between the true and reconstructed images, $\mathrm{MSE} = E\left[\| x - \hat{x} \|^2\right]$. It is not hard to show that the image estimate $\hat{x}$, its covariance $\tilde{C}$, and its MSE can be written in matrix form as

$$\hat{x} = C \Phi \left(\Phi^T C \Phi\right)^{-1} y \tag{3}$$

$$\tilde{C} = C - C \Phi \left(\Phi^T C \Phi\right)^{-1} \Phi^T C \tag{4}$$

$$\mathrm{MSE} = \operatorname{trace}\left(\tilde{C}\right) \tag{5}$$

assuming the masks in $\Phi$ are linearly independent.

It should be noted that the statistical reconstruction is analogous to the algebraic one. The algebraic (consistent) reconstruction of an image from its measurements is $\hat{x} = \Phi \left(\Phi^T \Phi\right)^{-1} y$ (assuming the linear independence of $\Phi$). That is, the algebraic reconstruction is a statistical reconstruction, assuming the pixels are independent and identically distributed, i.e., $C = \sigma^2 I$. Searching for a new mask that minimizes the algebraic reconstruction error $\| x - \hat{x} \|^2$ leads to the OMP [14]. Analogously, searching for a new mask that minimizes the expected error $E\left[\| x - \hat{x} \|^2\right]$ leads to the statistical pursuit, which is discussed next.

C. Reconstruction Error Minimization

A greedy sampling strategy is to find a sampling mask $\phi$ that minimizes the MSE of $\hat{x}$. If $\phi$ is a linear combination of the previous masks, it is trivial to show that the MSE does not change (as no additional information is gained) and $\Delta\mathrm{MSE} = 0$. Therefore, we can assume that the new mask is linearly independent of $\Phi$.

Proposition: The reduction of the MSE, given a new mask $\phi$ (linearly independent of $\Phi$), is

$$\Delta\mathrm{MSE}(\phi) = \frac{\| \tilde{C} \phi \|^2}{\phi^T \tilde{C} \phi} \tag{6}$$

where $\tilde{C}$ is the covariance of $\hat{x}$, defined in (4).

Proof: See Appendix A.

The aforementioned proposition justifies selection criteria for the next best mask(s) in several scenarios.
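The reconstruction and the proposition above can be checked numerically on a small patch. The sketch below is an illustration under stated assumptions, not the authors' code: it assumes a zero-mean image, the exponential covariance of (2) with an arbitrary decay value, and the MSE taken as the plain trace of the residual covariance as in (5). The function names (`exponential_covariance`, `reconstruct`, `mse_reduction`) and the parameter values are hypothetical.

```python
import numpy as np

def exponential_covariance(height, width, sigma2=1.0, alpha=0.2):
    """Covariance C[i, j] = sigma2 * exp(-alpha * ||p_i - p_j||) on a pixel grid."""
    r, c = np.mgrid[0:height, 0:width]
    pts = np.column_stack([r.ravel(), c.ravel()]).astype(float)
    d = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    return sigma2 * np.exp(-alpha * d)

def reconstruct(C, Phi, y):
    """Linear estimate of a zero-mean image from y = Phi^T x.

    Returns the estimate and the residual covariance
    C - C Phi (Phi^T C Phi)^{-1} Phi^T C.
    """
    G = Phi.T @ C @ Phi                   # Gram matrix of the masks under C
    K = np.linalg.solve(G, Phi.T @ C).T   # K = C Phi G^{-1} (G is symmetric)
    x_hat = K @ y
    C_res = C - K @ (Phi.T @ C)
    return x_hat, C_res

def mse_reduction(C_res, phi):
    """Expected MSE drop for a new mask phi: ||C_res phi||^2 / (phi^T C_res phi)."""
    v = C_res @ phi
    return float(v @ v) / float(phi @ v)
```

A greedy dictionary search would evaluate `mse_reduction` for every candidate mask and keep the maximizer; the trace of the residual covariance before and after adding that mask should differ by exactly the predicted amount.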
For the sake of brevity, we define $\hat{x}_k$ and $\tilde{C}_k$ as the estimated image and its covariance after sampling the image with masks $\phi_1, \dots, \phi_k$. $\mathrm{MSE}_k$ is the MSE after sampling $k$ masks, and $\Delta\mathrm{MSE}_{k+1}(\phi)$ is the expected reduction of the MSE given an arbitrary mask $\phi$ as the next selected mask. We further denote $\tilde{C}_0 = C$. Without subscript, $\hat{x}$ and $\tilde{C}$ shall refer to the estimated image and its covariance, given all known masks.

$\tilde{C}$ is a positive-semidefinite symmetric matrix, with the previous masks as its eigenvectors with corresponding zero eigenvalues. $\tilde{C}$ may be regarded as the "portion" of the covariance matrix $C$ that is statistically independent of the previous masks.

D. Progressive Sampling Schemes

1) Predetermined Family of Masks: The masks are often selected from a predefined set of masks (a dictionary) $D$, such as the DCT or DWT basis. In such cases, the next best mask is determined by calculating $\Delta\mathrm{MSE}(\phi)$ for each mask in the dictionary and choosing $\arg\max_{\phi \in D} \Delta\mathrm{MSE}(\phi)$.

2) Parametrized Masks: Suppose $\phi$ depends on several parameters $\theta$. We can differentiate (6) by the parameters of the mask, solve the resulting system of equations $\partial \Delta\mathrm{MSE} / \partial \theta = 0$, and check all the extrema masks for the one that maximizes $\Delta\mathrm{MSE}$. However, solving the resulting system of equations is not a trivial task.

3) Optimal Mask: If the next mask is not restricted, the optimal mask is an eigenvector of $\tilde{C}$ corresponding to the largest eigenvalue. See Appendix B for details. We shall call the eigenvector corresponding to the largest eigenvalue of $\tilde{C}$ the largest eigenvector of $\tilde{C}$ and mark it as $u_1$.

4) Optimal Set of Masks: The largest eigenvector of $\tilde{C}_k$ is the optimal $(k+1)$th mask. If that mask is chosen, it becomes an eigenvector of $\tilde{C}_{k+1}$ with a corresponding eigenvalue of 0. Therefore, the optimal $(k+2)$th mask is the largest eigenvector of $\tilde{C}_{k+1}$, which is the second largest eigenvector of $\tilde{C}_k$. Collecting $m$ optimal masks together is equivalent to finding the $m$ largest eigenvectors of $\tilde{C}_k$. If we begin with no initial masks, the optimal first $m$ masks are simply the $m$ largest eigenvectors of $C$. Those are, not surprisingly, the first $m$ components obtained from the principal component analysis (PCA) [7], [8] of $C$, i.e., the covariance of the image $x$.

E. Adaptive Progressive Sampling

Using the MSE minimization criterion, it is easy to construct a nonadaptive progressive sampling scheme that selects the optimal mask, i.e., the one that minimizes the estimated error at each step. Such a nonadaptive sampling scheme makes use of a fixed underlying statistical model for image $x$. However, as we gain information about the image, we can update the underlying model accordingly.

In the exponential model of (2), the covariance between two pixels depends only on their spatial distance. However, pairs associated with a large intensity dissimilarity are more likely to belong to different segments in the image and thus to be less correlated. If we have some image estimate, a pair of pixels may be characterized by their spatial distance and their estimated intensity dissimilarity.

Using the partial knowledge about image $x$ obtained during the iterative sampling process, we now redefine the exponential correlation model (2). The new model is based on both the spatial distance and the estimated intensity distance, i.e.,

$$c_{i,j} = \sigma^2 \exp\left(-\alpha_s \, \| p_i - p_j \| - \alpha_c \, | \hat{x}_i - \hat{x}_j |\right) \tag{7}$$

Such correlation models are implicitly used in nonlinear filters such as the bilateral filter [19]. Naturally, other color-aware models can be used. For example, instead of taking Euclidean distances, we can take geodesic distances on the image color manifold [17].

Introducing the model of (7) into a progressive sampling scheme, we can construct an adaptive sampling scheme, presented as Algorithm 1.

F. Image Reconstruction From Adaptive Sampling

The statistical reconstruction of (3) requires the measurements, along with their corresponding masks. For nonadaptive sampling schemes, the masks are fixed regardless of the image, and there is no need to store them. For blind adaptive sampling schemes, where the set of masks differs for different images, there is no need to store them either. At each iteration of the sampling process, a new sampling mask is constructed, and the image is sampled. Because of the deterministic nature of the sampling process, those masks can be recalculated during reconstruction. The reconstruction algorithm is almost identical to Algorithm 1, except for step 3(c), which now reads, "Pick from a list of stored measurements," and the stopping criterion at step 4 is updated accordingly.

IV. BLIND WAVELET SAMPLING

The statistical pursuit algorithm is quite general, but updating the direct underlying space-varying statistical model of the image is computationally costly. We now present an alternative blind sampling approach, which is limited to a family of wavelet masks and relies on an indirect statistical model of the image. Using the measurements that it obtains, the scheme first chooses the coefficient that is estimated to carry the most energy.

A trivial adaptive scheme stores the largest wavelet coefficients and their corresponding masks. Such a scheme samples (i.e., decomposes) the complete image and sorts the coefficients according to their energy. However, it clearly uses all the image information and is therefore not blind.

The proposed sampling scheme is based on the statistical properties of a wavelet family; we use the statistical correlations between magnitudes (or energies) of the wavelet coefficients. Those statistical relationships are used to construct a number of linear predictors, which predict the magnitude of the unsampled coefficients from the magnitude of the known coefficients. This way, the presented scheme chooses the larger coefficients without direct knowledge about the image.

The proposed sampling scheme is divided into three stages.
1) Learning Stage: The statistical properties of the wavelet family are collected and studied. This stage is done offline using a large set of images, and its result is considered a constant model.

2) Sampling Stage: At each iteration of this blind sampling scheme, the magnitudes of all unsampled coefficients are estimated, and the coefficient with the largest estimated magnitude is greedily chosen.

3) Reconstruction Stage: The image is reconstructed using the measurements obtained from the sampling stage. As with the other blind schemes, it is sufficient to store the values of the sampled coefficients since their corresponding masks are recalculated during reconstruction.

A. Correlation Between Wavelet Coefficients

Wavelet coefficients are weakly correlated among themselves [3], [9]. However, their absolute values or their energies are highly correlated. For example, the correlation between the magnitudes of wavelet coefficients at different scales but similar spatial locations is relatively high. Nevertheless, the sign of a coefficient is hard to predict, and therefore, the correlations between the coefficients themselves remain low. This property is the foundation of zerotree encoding [16], which is used to efficiently encode quantized wavelet coefficients.

Each wavelet coefficient corresponds to a discrete wavelet basis function, which can also be interpreted as a sampling mask. The mask (and its associated coefficient) is specified by orientation (LL, LH, HL, or HH), level of decomposition (or scale), spatial location, and support. Three relationships are defined.

1) Each coefficient has four (spatial) direct neighbors in the same block.

2) Each coefficient from the LH, HL, and HH blocks (above the lowest level) has four children. The children are of the same orientation one level below and occupy approximately the same spatial location as the parent coefficient. Each coefficient in the LH, HL, and HH blocks (except at the highest level) has a parent coefficient.
3) Each coefficient from the LH, HL, and HH blocks has two cousins that occupy the same location but with different orientations. At the highest level, a third cousin in the LL block exists. Similarly, each coefficient in the LL block has three cousins in the LH, HL, and HH blocks.

We can further define second-order family relationships, such as grandparents and grandchildren, cousin's children, diagonal neighbors, and so on. Those relationships carry lower correlation and can be indirectly approximated from the first-order relatives.

B. Learning Stage

The learning stage is done offline, and the statistical relationships are stored for the later sampling and reconstruction stages. The measured correlations are considered as a fixed model. Our experiments showed the statistical characteristics to be almost indifferent to the image classes. That is, the proposed method is robust for varying image classes.

At the learning stage, the wavelet coefficients are considered as instances of random variables. We assume that the statistics of the wavelet coefficients are independent of their spatial location, and we study complete blocks of wavelet coefficients as instances of a single random variable. In addition, we assume transposition invariance of the image and wavelet coefficients. Therefore, we expect the same behavior from wavelet coefficients of opposite orientations (e.g., the LH and HL blocks). It is common to assume scaling invariance as well, but our experiments showed this assumption is not completely valid for discrete images. See Section V-B for experimental results of the statistical model for different types of wavelet families.

It is straightforward to build a linear predictor of the magnitude of a certain coefficient, assuming it has known relatives [15]. However, the actual predictors differ according to the available observations.

C. Sampling Stage

The sampling stage is divided into an initial phase and a progressive phase.

1) Initial Sampling Phase: Before any coefficient is sampled, the predictors can rely only on the expected mean. Therefore, the coefficients with the highest expected mean should be sampled first. For wavelet decompositions, the expected mean of the coefficients from the LL block is the highest, and we start by sampling them all.

The coefficients of the LL block carry low correlation with their cousins (at the LH, HL, and HH blocks). Therefore, after sampling the LL block, we further decompose it "on the fly" into next-level LL, LH, HL, and HH blocks, in order to make use of the higher parent–children correlations. This way, we get better predictors for the LH, HL, and HH blocks.

2) Progressive Sampling Phase: The blind sampling algorithm has three types of coefficients, i.e., the coefficients it has already sampled, which we refer to as known coefficients; relatives of the known coefficients, which are the candidates for sampling; and the remaining coefficients. The algorithm keeps the candidates in a heap data structure, sorted according to their estimated magnitude.

The output of the algorithm is an array of the coefficient values, as sampled at steps 1(a) and 2(a).

D. Reconstruction Stage

Again, we mark the coefficients as known, candidates, and the remaining coefficients. The input for the reconstruction algorithm is the array of values generated by the sampling algorithm (Algorithm 2). Note that, while the value of the coefficient is stored in the array, its corresponding wavelet mask is not part of the stored information and is obtained during the reconstruction stage (step 2), using the predictors. Consequently, the algorithm does not need to keep the masks associated with the coefficients or their indexes.

V. EXPERIMENTAL RESULTS

A. Masks Generated by Statistical Pursuit

We start by illustrating the difference between the nonadaptive and adaptive masks. Figs. 3 and 4 present the first 30 masks used to sample a 32 × 32 image patch, shown in Fig. 5. The masks are produced by both nonadaptive and adaptive schemes, where, in both cases, the masks are unrestricted and the same exponential correlation model is used.

Fig. 3. First 30 nonadaptive sampling masks.

Fig. 4. First 30 adaptive sampling masks.

Fig. 5. (Left) Image patch and (right) the reconstruction error using adaptive and nonadaptive unrestricted masks.

The nonadaptive sampling masks, shown in Fig. 3, closely resemble the DCT basis, and not by coincidence (the DCT is an approximation of the PCA for periodic stationary random signals [1]). The adaptive sampling masks, shown in Fig. 4, present more complicated patterns. As the sampling process advances, it attempts to "study" the image at interesting regions, e.g., the vertical edge at the center of the patch (see Fig. 5).

Fig. 5 presents the true reconstruction error for a varying number of samples. For both adaptive and nonadaptive sampling schemes, the reconstruction is done using the linear estimator (3).

Fig. 6 presents the ratio between the reconstruction errors of the adaptive and nonadaptive schemes. We took 256 small patches (16 × 16 pixels each) and compared the average reconstruction errors. This experiment was repeated for several classes of masks, i.e., unrestricted masks (PCA) and an overcomplete family of Daubechies wavelets of order three (DB3), of order two (DB2), and of order one (Haar wavelets) [4].

Fig. 6. Ratio between the average reconstruction errors of adaptive and nonadaptive schemes, for unrestricted masks (PCA) and three overcomplete dictionaries (DB3, DB2, and Haar). The reference line (100%) represents the nonadaptive schemes.

Using the adaptive schemes reduces the reconstruction error by between 5% and 10% compared with the nonadaptive schemes (which use the same family of masks). Using the optimal representation (i.e., the PCA basis), the error is reduced by 5%, whereas using a less optimal basis (the Haar basis), the error is reduced even further, as the corresponding nonadaptive scheme is known to be suboptimal.

For the first few coefficients, the adaptive scheme does not have much information to work with. Therefore, until more samples are gathered, the relative gain over the nonadaptive schemes is erratic. After sampling some more coefficients, the benefits of the adaptivity become more apparent.

B. Correlation Models for Blind Wavelet Sampling

In our experiments, we used a data set of 20 images. All the images were converted to grayscale and rescaled to 256 × 256 pixels. The images are shown in Fig. 7. The first two subsets are "natural" images, the third set is taken from a computed-tomography brain scan, and the fourth set is a collection of animations.

Fig. 7. Image sets used in our experiments.

TABLE I CORRELATIONS BETWEEN DB2

TABLE II CORRELATIONS BETWEEN DB3

Two examples of experimental correlation models are presented in Tables I and II. Those models were studied for Daubechies wavelets [4] of second and third orders. A four-level wavelet decomposition was carried out over the image data set, and the correlation coefficients between different kinds of wavelet coefficients were estimated according to the maximum-likelihood principle.

Observing Tables I and II, we see that Daubechies wavelets of second and third orders exhibit similar behavior. This behavior was also experimentally found for other wavelet families. As the order of the wavelet family increases, most of the correlation coefficients decrease. We also see that the images are not scale invariant, and as the decomposition level decreases, the correlation coefficients between related coefficients increase.
Our experiments show the statistical characteristics to be almost indifferent to the image classes. We tested the benefits of using a specific (rather than the generic) model for each class of images and found that it reduces the error by a negligible 1%. It appears that the correlation model characterizes the statistical behavior of the wavelet family and not of the different image classes.

C. Blind Wavelet Sampling Results

Having obtained the correlation model for the wavelet family, we now present some results of the blind sampling scheme. Taking an image, we decompose it using a third-level wavelet decomposition with DB2. We compare the adaptive order, obtained by the blind adaptive sampling scheme, to a nonadaptive raster order and to an optimal order (which is not blind). The nonadaptive raster-order scheme samples the coefficients according to their block order, from the highest level to lower ones. The optimal-order scheme assumes full knowledge of the coefficients and samples them according to their energy.

Figs. 8–10 present partial reconstructions of the cameraman image, where only some of the wavelet coefficients are used for the reconstruction. There are three columns in the figures, i.e., the left, where the selected coefficients are marked; the middle, where the reconstructed images, using the selected coefficients, are presented; and the right, where the error images are shown. The reconstruction error is also shown. All three schemes start with the LL block and continue to sample the remaining wavelet coefficients in different orders. Figs. 8–10 correspond to the raster, adaptive, and optimal orders, respectively.

In Fig. 11, we can see the reconstruction errors for the raster, adaptive, and optimal orders, averaged over all 20 images. Note that the same reconstruction error may be achieved by the adaptive scheme using about half of the samples required by the nonadaptive scheme.
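The two reference orders in this comparison can be sketched with a toy transform. The code below is a simplified illustration, not the experimental setup of this paper: it assumes a single-level orthonormal Haar transform instead of a three-level DB2 decomposition, and it does not implement the blind adaptive order, which requires the learned predictors. Because the transform is orthonormal, the squared reconstruction error after keeping a subset of coefficients equals the energy of the omitted ones. All function names are hypothetical.

```python
import numpy as np

def haar2d_level(img):
    """One level of an orthonormal 2-D Haar transform (even side lengths).

    The low-pass block ends up in the top-left quadrant of the output.
    """
    a = (img[0::2, :] + img[1::2, :]) / np.sqrt(2.0)  # row-pair averages
    d = (img[0::2, :] - img[1::2, :]) / np.sqrt(2.0)  # row-pair differences
    rows = np.vstack([a, d])
    a2 = (rows[:, 0::2] + rows[:, 1::2]) / np.sqrt(2.0)
    d2 = (rows[:, 0::2] - rows[:, 1::2]) / np.sqrt(2.0)
    return np.hstack([a2, d2])

def block_order(shape):
    """Raster order by block: low-pass quadrant first, then the other three."""
    h, w = shape
    idx = np.arange(h * w).reshape(h, w)
    blocks = [idx[:h // 2, :w // 2], idx[:h // 2, w // 2:],
              idx[h // 2:, :w // 2], idx[h // 2:, w // 2:]]
    return np.concatenate([b.ravel() for b in blocks])

def optimal_order(coeffs):
    """Low-pass quadrant first, then the rest by decreasing magnitude (non-blind)."""
    order = block_order(coeffs.shape)
    n_low = coeffs.size // 4
    low, rest = order[:n_low], order[n_low:]
    rest = rest[np.argsort(-np.abs(coeffs.ravel()[rest]), kind="stable")]
    return np.concatenate([low, rest])

def error_curve(coeffs, order):
    """Squared error after keeping the first k coefficients of `order`, k = 0..N."""
    energy = coeffs.ravel()[order] ** 2
    return energy.sum() - np.concatenate([[0.0], np.cumsum(energy)])
```

Comparing `error_curve(coeffs, block_order(coeffs.shape))` with `error_curve(coeffs, optimal_order(coeffs))` gives the two bounds between which a blind adaptive order is expected to fall.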
The main advantage of blind sampling schemes is that the sampling results (the actual coefficients) need to be stored but not their locations. Nonblind adaptive schemes, such as choosing the largest coefficients of the decomposition (the optimal-order scheme), require an additional piece of information, i.e., the exact location of each coefficient, to be stored alongside its value.

From a compression point of view, we have to estimate the number of bits required for storing the additional information needed for the reconstruction. As a rough comparison, we account for the bits needed to store the location (index) of each coefficient. Optimistic assumptions on entropy encoders reduce the size required for storing the coefficient indexes to about 8 bits, disregarding quantization. Using this rough estimation of storage requirements, each coefficient of the optimal order is equivalent, in a bit-storage sense, to about two coefficients of the blind adaptive scheme. In Fig. 11, the dashed line marks the reconstruction error of the optimal order, taking into account the storage considerations.

Fig. 8. Reconstruction of the cameraman image using (a) the first 1024 coefficients of the $LL_3$ block; (b) 4096 coefficients of the $LL_3$, $LH_3$, $HL_3$, and $HH_3$ blocks; and (c) 16 384 coefficients of the $LL_3$, $LH_3$, $HL_3$, $HH_3$, $LH_2$, $HL_2$, and the $HH_2$ blocks.

Fig. 9. Reconstruction of the cameraman image using (a) 4096 coefficients taken according to the blind sampling order and (b) 16 384 coefficients taken according to the blind sampling order.

Fig. 10. Reconstruction of the cameraman image using (a) 4096 coefficients taken according to the optimal order and (b) 16 384 coefficients taken according to the optimal order.

Fig. 11. Reconstruction error averaged over 20 images, using up to 16 384 wavelet coefficients, taken according to the raster, adaptive, and optimal orders. (Dashed line) Compression-aware comparison of the optimal order, taking into account the space required to store the coefficient indexes of the optimal order.

VI. CONCLUSION

In this paper, we have presented two novel blind adaptive schemes. Our statistical pursuit scheme, presented in Section III, maintains a second-order statistical model of the image, which is updated as information is gathered during the sampling process. Experimental results have shown that the reconstruction error is smaller by between 5% and 10%, as compared with regular nonadaptive sampling schemes, depending on the class of basis functions. Due to its complexity, however, this scheme is most suitable for small patches and a small number of coefficients.

Our blind wavelet sampling scheme, presented in Section IV, is more suitable for complete images. It uses the statistical correlation between the magnitudes of wavelet coefficients. Naturally, the optimal selection of the coefficients with the highest magnitude produces superior results, but such nonblind methods require storage of the coefficient indexes, whereas the blind scheme stores only the coefficients. Taking into account the additional bit space, the blind wavelet sampling scheme produces results almost as good as optimal selection of the masks.

Some open problems are left for future research, such as the application of the statistical-pursuit scheme to image compression. Including quantization in the scheme and introducing an appropriate entropy encoder can turn the sampling scheme into a compression scheme. Replacing DCT or DWT sampling with their statistical-pursuit counterparts reduces the error for each patch by 5%–10%. However, some of the gain is expected to be lost by the entropy encoder.

The blind wavelet sampling scheme makes use of linear predictors. However, it is known that the distribution of wavelet coefficients is not Gaussian [9], [18].
Therefore, higher order predictors for modeling the relationships between the coefficients may yield better predictions and a better blind adaptive sampling scheme.

APPENDIX A

Let be a linearly independent set of masks, where is the new mask. According to (5), MSE , i.e., the MSE of the whole image estimate after sampling the th mask, is MSE where , is a unit column vector with 1 at and 0 elsewhere, and is the image domain.

We now rewrite MSE while separating the elements influenced by , i.e., the new mask, from the elements that are independent of . We denote as the matrix of the previous masks, excluding the th new mask . Using matrix notation, , , , , and . A matrix blockwise inversion implies that MSE MSE

Now, we have a more precise expression for the expected reduction of the MSE at the th iteration, i.e., MSE Hence, MSE The numerator of is and the denominator is

Both the numerator and the denominator have similar quadratic forms. Therefore, let us define Plugging back into the numerator and the denominator of MSE yields MSE

Surprisingly, is the covariance of , i.e., the estimated image based on the first measurements.

APPENDIX B

Proposition: , which is an eigenvector corresponding to the largest eigenvalue of , is an optimal mask.

Proof: Let be the eigendecomposition of , where are the orthonormal eigenvectors of , sorted in descending order of their corresponding eigenvalues . Let be an arbitrary mask. If , MSE . Otherwise, let be represented by the eigenvector basis as . According to (6) MSE As is the largest eigenvalue of , the following holds: MSE Since indeed MSE is , we see that maximizes MSE and is an optimal mask.

REFERENCES

[1] N. Ahmed, T. Natarajan, and K. R. Rao, “Discrete cosine transform,” IEEE Trans. Comput., vol. C-23, no. 1, pp. 90–93, Jan. 1974.
[2] M. de Berg, M. van Kreveld, M. Overmars, and O. Schwarzkopf, Computational Geometry: Algorithms and Applications, 2nd ed.
New York: Springer-Verlag, 2000.
[3] R. W. Buccigrossi and E. P. Simoncelli, “Image compression via joint statistical characterization in the wavelet domain,” IEEE Trans. Image Process., vol. 8, no. 12, pp. 1688–1701, Dec. 1999.
[4] I. Daubechies, Ten Lectures on Wavelets. Philadelphia, PA: SIAM, 1992.
[5] Z. Devir and M. Lindenbaum, “Adaptive range sampling using a stochastic model,” J. Comput. Inf. Sci. Eng., vol. 7, no. 1, pp. 20–25, Mar. 2007.
[6] Y. Eldar, M. Lindenbaum, M. Porat, and Y. Zeevi, “The farthest point strategy for progressive image sampling,” IEEE Trans. Image Process., vol. 6, no. 9, pp. 1305–1315, Sep. 1997.
[7] H. Hotelling, “Analysis of a complex of statistical variables into principal components,” J. Educ. Psychol., vol. 24, no. 6, pp. 417–441, Sep. 1933.
[8] H. Hotelling, “Analysis of a complex of statistical variables into principal components,” J. Educ. Psychol., vol. 24, no. 7, pp. 498–520, Oct. 1933.
[9] J. Huang and D. Mumford, “Statistics of natural images and models,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Fort Collins, CO, 1999, pp. 541–547.
[10] A. K. Jain, Fundamentals of Digital Image Processing. Upper Saddle River, NJ: Prentice-Hall, 1989.
[11] S. Mallat, A Wavelet Tour of Signal Processing. San Diego, CA: Academic, 1999.
[12] S. G. Mallat and Z. Zhang, “Matching pursuits with time-frequency dictionaries,” IEEE Trans. Signal Process., vol. 41, no. 12, pp. 3397–3415, Dec. 1993.
[13] A. N. Netravali and B. G. Haskell, Digital Pictures. New York: Plenum Press, 1995.
[14] Y. C. Pati, R. Rezaiifar, and P. S. Krishnaprasad, “Orthogonal matching pursuit: Recursive function approximation with applications to wavelet decomposition,” in Proc. 27th Annu. Asilomar Conf. Signals, Syst., Comput., 1993, pp. 40–44.
[15] A. Papoulis, Probability, Random Variables and Stochastic Processes. New York: McGraw-Hill, 2002.
[16] J. M.
Shapiro, “Embedded image coding using zerotrees of wavelet coefficients,” IEEE Trans. Signal Process., vol. 41, no. 12, pp. 3445–3462, Dec. 1993.
[17] N. Sochen, R. Kimmel, and R. Malladi, “A general framework for low level vision,” IEEE Trans. Image Process., vol. 7, no. 3, pp. 310–318, Mar. 1998.
[18] A. Srivastava, A. B. Lee, E. P. Simoncelli, and S. C. Zhu, “On advances in statistical modeling of natural images,” J. Math. Imag. Vis., vol. 18, no. 1, pp. 17–33, Jan. 2003.
[19] C. Tomasi and R. Manduchi, “Bilateral filtering for gray and color images,” in Proc. IEEE Int. Conf. Comput. Vis., 1998, pp. 839–846.
[20] J. S. Vitter, “Design and analysis of dynamic Huffman codes,” J. ACM, vol. 34, no. 4, pp. 825–845, Oct. 1987.
[21] B. Zeng and J. Fu, “Directional discrete cosine transforms for image coding,” in Proc. IEEE Int. Conf. Multimedia Expo, 2006, pp. 721–724.

Zvi Devir received the B.A. degrees in mathematics and in computer science and the M.Sc. degree in computer science from the Technion–Israel Institute of Technology, Haifa, Israel, in 2000, 2000, and 2007, respectively. From 2006 to 2010, he was with Medic Vision Imaging Solutions, Haifa, Israel, a company he cofounded, where he was the Chief Scientific Officer. Previously, he was with Intel, Haifa, Israel, mainly working on computer graphics and mathematical optimizations. He is currently with IARD Sensing Solutions, Yagur, Israel, focusing on advanced video processing and spectral imaging. His research interests include video and image processing, mainly algebraic representations and differential methods for images.

Michael Lindenbaum received the B.Sc., M.Sc., and D.Sc. degrees from the Department of Electrical Engineering, Technion–Israel Institute of Technology, Haifa, Israel, in 1978, 1987, and 1990, respectively. From 1978 to 1985, he served in the IDF in Research and Development positions.
He did his postdoctoral work with the Nippon Telegraph and Telephone Corporation Basic Research Laboratories, Tokyo, Japan. Since 1991, he has been with the Department of Computer Science, Technion. He was also a Consultant with Hewlett-Packard Laboratories Israel and spent sabbaticals at NEC Research Institute, Princeton, NJ, in 2001 and at Telecom ParisTech in 2011. He also spent shorter research periods at the Advanced Telecommunications Research, Kyoto, Japan, and the National Institute of Informatics, Tokyo. He has worked in digital geometry, computational robotics, learning, and various aspects of computer vision and image processing. Currently, his main research interest is computer vision, particularly statistical analysis of object recognition and grouping processes. Prof. Lindenbaum served on several committees of computer vision conferences and is currently an Associate Editor of the IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE.