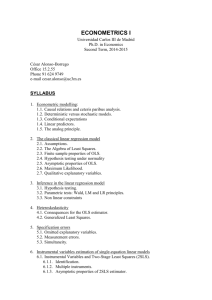

The Methodology of Econometrics

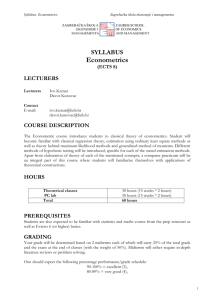
