Section 6

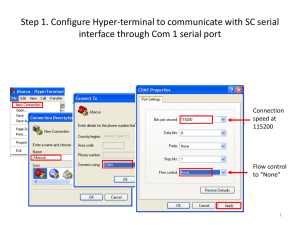

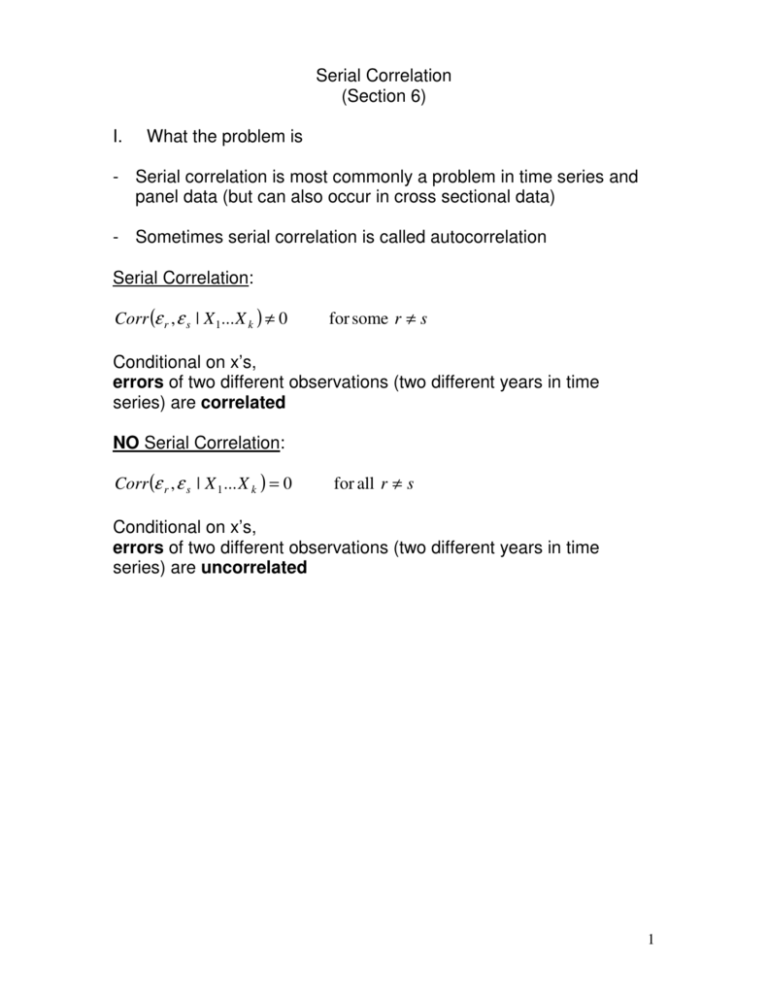

Serial Correlation (Section 6)

I. What the problem is

- Serial correlation is most commonly a problem in time series and panel data (but it can also occur in cross-sectional data)
- Serial correlation is sometimes called autocorrelation

Serial correlation: $\mathrm{Corr}(\varepsilon_r, \varepsilon_s \mid X_1, \dots, X_k) \neq 0$ for some $r \neq s$
Conditional on the X's, the errors of two different observations (two different years in a time series) are correlated.

No serial correlation: $\mathrm{Corr}(\varepsilon_r, \varepsilon_s \mid X_1, \dots, X_k) = 0$ for all $r \neq s$
Conditional on the X's, the errors of two different observations (two different years in a time series) are uncorrelated.

A. Pure serial correlation

1. First order serial correlation in time series data (stochastic error term follows an AR(1) process)

AR(1) process (Markov scheme): $\varepsilon_t = \rho \varepsilon_{t-1} + u_t$

- What if $\rho = 0$?
- What if $\rho \to 1$?
- What if $\rho > 1$?
- What if $\rho$ is positive? (positive serial correlation)
  Picture of positive serial correlation:
- What if $\rho$ is negative? (negative serial correlation)
  Picture of negative serial correlation:
  Picture of stochastic error with no serial correlation:

2. Other order serial correlation
(a) Seasonal serial correlation
(b) Second order serial correlation (error term is an AR(2) process)

3. Serial correlation in panel data
The errors of a particular individual will probably be correlated with one another.

B. Impure serial correlation

Impure serial correlation: serial correlation caused by a specification error (such as an omitted variable or an incorrect functional form)

Ex: True equation: $Y_t = \beta_0 + \beta_1 X_{1t} + \beta_2 X_{2t} + \varepsilon_t$
Suppose we accidentally omit $X_2$?
$Y_t = \beta_0 + \beta_1 X_{1t} + \varepsilon_t^*$, where $\varepsilon_t^* = \beta_2 X_{2t} + \varepsilon_t$
$\varepsilon_t^*$ will tend to be serially correlated if
1. $X_2$ is serially correlated, and
2. the size of $\varepsilon$ is small compared to the size of $\beta_2 X_2$

Ex (from the book): $F_t = \beta_0 + \beta_1 RP_t + \beta_2 Yd_t + \beta_3 D_t + \varepsilon_t$
What happens if disposable income is omitted?
1. Why might the estimated coefficients for RP and D be biased?
2. The error term would include the effect of the left-out disposable income. Disposable income probably follows a serially correlated pattern. Why?
Some more algebra:

C. Does putting a lagged value of the dependent variable among the independent variables cause bias and serial correlation?
i.e.
- Most textbooks would say this causes bias and serial correlation, but this is not quite right.

II. Consequences of serial correlation

Three major consequences of serial correlation:
1. Pure serial correlation does not cause bias in the coefficient estimates
2. Serial correlation causes the OLS estimates not to be the minimum variance estimates (of all the linear unbiased estimators)
3. Serial correlation causes the OLS estimates of the $SE(\hat{\beta}_j)$'s to be biased, which leads to unreliable hypothesis testing
- It does not make $\hat{\beta}_j$ biased, but the standard errors will typically increase because of serial correlation
- OLS is more likely to misestimate $\beta$

Note: It very rarely makes sense to talk about a bias in $R^2$ (or adjusted $R^2$) caused by serial correlation

III. Tests for detecting serial correlation

A. A t-test for AR(1) serial correlation with strictly exogenous regressors

Must assume:
Hypothesis we will test:
How do we test this?
Why can't we regress $\varepsilon_t = \rho \varepsilon_{t-1} + u_t$, $t = 2, \dots, T$, and use a t-test on $\rho$?
What could we do instead?
If we get a small p-value, what does this mean?

B. Durbin-Watson test

Must meet the following assumptions to use the Durbin-Watson test:
1. The regression includes an intercept
2. The serial correlation is AR(1)
3. The regression does not include a lagged dependent variable as an independent variable

$$DW = \frac{\sum_{t=2}^{T} (e_t - e_{t-1})^2}{\sum_{t=1}^{T} e_t^2}$$

$DW = 0$: Extreme positive serial correlation
$DW \approx 2$: No serial correlation
$DW = 4$: Extreme negative serial correlation

- Weaknesses of the Durbin-Watson test
• Can have inconclusive results
• Depends on a full set of OLS assumptions
- How to perform a Durbin-Watson test
• Run the regression → Get the OLS residuals → Calculate the DW statistic
• Determine the sample size and use the tables in our book (B-4, B-5, B-6)
• Decision rule for $H_0: \rho \leq 0$:
  If $DW < d_L$: Reject $H_0$
  If $DW > d_U$: Do not reject $H_0$
  If $d_L \leq DW \leq d_U$: Inconclusive
• Decision rule for $H_0: \rho = 0$:
  If $DW < d_L$: Reject $H_0$
  If $DW > 4 - d_L$: Reject $H_0$
  If $d_U \leq DW \leq 4 - d_U$: Do not reject $H_0$
  Otherwise: Inconclusive
- SPSS gives a DW statistic

C. Other tests

- There is a test for AR(2) serial correlation
  $\varepsilon_t = \rho_1 \varepsilon_{t-1} + \rho_2 \varepsilon_{t-2} + u_t$
  $H_0: \rho_1 = 0, \ \rho_2 = 0$
  F-test for joint significance
- There is also a Lagrange multiplier test

IV. What to do about serial correlation

- What do you do if your Durbin-Watson test shows you have serial correlation?

A. First check to see if it is impure serial correlation
- Check the specification of your equation
1. Is the functional form correct?
2. Are there omitted variables?

B. For pure serial correlation

1. Use generalized least squares (GLS)
- GLS starts with an equation that does not meet the CLR assumptions (in this case due to serial correlation) and transforms it into one that does.

Ways to estimate GLS equations:

a) Cochrane-Orcutt method (two step method)
- Run the regression to get starting values of $e_t$ and $e_{t-1}$
- Run the regression $e_t = \rho e_{t-1} + u_t$ to get $\hat{\rho}_i$
  i. Run the regression: $Y_t - \hat{\rho}_i Y_{t-1} = \beta_0 (1 - \hat{\rho}_i) + \beta_1 (X_{1t} - \hat{\rho}_i X_{1,t-1}) + u_t$
  ii. Run the regression $e_t = \rho e_{t-1} + u_t$ using the new $e_t$ and $e_{t-1}$ to get $\hat{\rho}_{i+1}$
  If $|\hat{\rho}_{i+1} - \hat{\rho}_i| >$ toleranceLevel: set $\hat{\rho}_i$ to $\hat{\rho}_{i+1}$ and go back to step i
  If $|\hat{\rho}_{i+1} - \hat{\rho}_i| \leq$ toleranceLevel: stop and use your last regression results

b) The AR(1) method
Estimate a GLS equation like (4), estimating $\beta_0$, $\beta_1$, and $\rho$ simultaneously

2. Newey-West standard errors
- Changes the standard errors but does not change the $\hat{\beta}_j$'s.
  o Why does it make sense to adjust $se(\hat{\beta}_j)$ but not the $\hat{\beta}_j$'s?
- Typically Newey-West standard errors are larger than OLS standard errors
  o What does this do to significance?

Summary:
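
Ex (Python sketch of III.B and IV.B.1a): The Durbin-Watson statistic and the Cochrane-Orcutt steps can be tried out on simulated data. This is only a minimal sketch under stated assumptions, not the procedure from the book or from SPSS: it uses NumPy, a single regressor, and made-up parameter values, and the function names `ols`, `durbin_watson`, `estimate_rho`, and `cochrane_orcutt`, as well as the arguments `tol` and `max_iter`, are hypothetical names chosen for this illustration.

```python
import numpy as np


def ols(X, y):
    """Return OLS coefficients and residuals for y = X b + e."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta, y - X @ beta


def durbin_watson(e):
    """DW = sum_{t=2}^{T} (e_t - e_{t-1})^2 / sum_{t=1}^{T} e_t^2."""
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)


def estimate_rho(e):
    """Slope from regressing e_t on e_{t-1} (no intercept)."""
    return (e[1:] @ e[:-1]) / (e[:-1] @ e[:-1])


def cochrane_orcutt(x, y, tol=1e-6, max_iter=100):
    """Alternate between rho and (beta0, beta1) until rho stops changing."""
    X = np.column_stack([np.ones_like(x), x])
    beta, e = ols(X, y)                  # starting residuals from plain OLS
    rho = estimate_rho(e)
    for _ in range(max_iter):
        # Step i: quasi-differenced regression. The intercept column is
        # (1 - rho), so the fitted coefficients are beta0 and beta1 directly.
        y_star = y[1:] - rho * y[:-1]
        X_star = np.column_stack([np.full(len(x) - 1, 1.0 - rho),
                                  x[1:] - rho * x[:-1]])
        beta, _ = ols(X_star, y_star)
        # Step ii: new residuals of the original equation, then a new rho.
        e = y - X @ beta
        rho_new = estimate_rho(e)
        converged = abs(rho_new - rho) <= tol
        rho = rho_new
        if converged:
            break
    return beta, rho


# Simulated data: every number here is made up for illustration.
rng = np.random.default_rng(0)
T, true_rho = 500, 0.7
x = rng.normal(size=T)
u = rng.normal(size=T)
eps = np.zeros(T)
for t in range(1, T):
    eps[t] = true_rho * eps[t - 1] + u[t]   # AR(1) error process
y = 1.0 + 2.0 * x + eps

_, e_ols = ols(np.column_stack([np.ones(T), x]), y)
dw = durbin_watson(e_ols)
beta_co, rho_co = cochrane_orcutt(x, y)
print(f"DW = {dw:.3f}, rho_hat = {rho_co:.3f}, beta_hat = {beta_co}")
```

With positively serially correlated errors like these, the DW statistic should come out well below 2, roughly $2(1 - \hat{\rho})$, which matches the reading of DW given in III.B. Comparing it against $d_L$ and $d_U$ still requires the tables.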