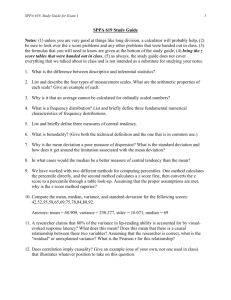

Reliability - The SAPA
