Component Notation

advertisement

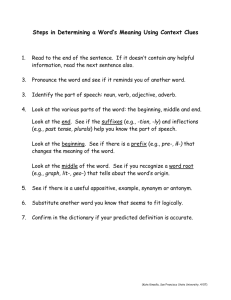

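Adrian Down
January 23, 2006

1 Levi-Civita density

1.1 Definition

The advantage of component notation is that it allows us to rearrange terms in a product. Components can be rearranged, since they are only numbers, whereas matrices and vectors cannot, in general, be rearranged.

Consider writing the third component of $\mathbf{b} \times \mathbf{c}$,

$$(\mathbf{b} \times \mathbf{c})_3 = b_1 c_2 - b_2 c_1$$

In more general terms, an arbitrary component can be written using the Levi-Civita density.

Definition (Levi-Civita Density).

$$\epsilon_{ijk} = \begin{cases} +1 & \text{if } ijk \text{ is an even permutation of } 123 \\ -1 & \text{if } ijk \text{ is an odd permutation of } 123 \\ 0 & \text{if any two indices are equal} \end{cases}$$

Here a "swap" is an exchange of two adjacent indices, and an even (odd) permutation is one reached from 123 by an even (odd) number of swaps. With this definition,

$$(\mathbf{b} \times \mathbf{c})_k = \epsilon_{klm} b_l c_m$$

recalling that repeated indices are implicit sums. There are a total of nine terms on the right side of the equation, since l and m both range from 1 to 3, but only two terms are nonzero. For k = 3 these are $\epsilon_{312} b_1 c_2 = b_1 c_2$ and $\epsilon_{321} b_2 c_1 = -b_2 c_1$, which reproduce the expression above.

1.2 Component-wise proof of the BAC − CAB rule

The familiar rule for double cross products is

$$\mathbf{a} \times (\mathbf{b} \times \mathbf{c}) = \mathbf{b}\,(\mathbf{a} \cdot \mathbf{c}) - \mathbf{c}\,(\mathbf{a} \cdot \mathbf{b})$$

We give a proof of this identity using component notation to demonstrate how this approach can greatly simplify some otherwise tedious calculations.

Proof. Using the Levi-Civita density to rewrite both cross products,

$$(\mathbf{a} \times (\mathbf{b} \times \mathbf{c}))_i = \epsilon_{ijk} a_j (\mathbf{b} \times \mathbf{c})_k = \epsilon_{ijk} a_j \epsilon_{klm} b_l c_m$$

Since each factor is only a scalar, the factors can be rearranged:

$$(\mathbf{a} \times (\mathbf{b} \times \mathbf{c}))_i = a_j b_l c_m \epsilon_{ijk} \epsilon_{klm}$$

There are four sums on the right side (over j, k, l, and m), and hence $3^4 = 81$ terms. However, in the product $\epsilon_{ijk}\epsilon_{klm}$ alone only k is summed over, so it has only three terms.

Note. Sums can be performed in any order, so choose the order that most simplifies the calculation.

The product $\epsilon_{ijk}\epsilon_{klm}$ is nonzero only if i, j, l, and m all differ from k, with $i \neq j$ and $l \neq m$. Since each index takes only three values, there are two possibilities:

• i = l and j = m, or
• i = m and j = l.

Consider the case i = l and j = m, which gives $\epsilon_{ijk}\epsilon_{kij}$. The index order kij can be obtained from ijk by two swaps, so it is an even permutation of ijk and $\epsilon_{kij} = \epsilon_{ijk}$. The product is therefore $\epsilon_{ijk}^2 = +1$ for any value of k. Likewise, if i = m and j = l, then kji is obtained from ijk by three swaps, an odd permutation, so $\epsilon_{ijk}\epsilon_{kji} = -1$.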
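The definitions and the case analysis above can be checked by brute force. The following is a minimal sketch, assuming NumPy. `levi_civita` is a hypothetical helper that builds $\epsilon$ as an array. Python counts indices 0, 1, 2 where the notes use 1, 2, 3. The vectors are random test data. The sketch checks the cross-product formula and the sum over k of $\epsilon_{ijk}\epsilon_{klm}$ for every (i, j, l, m). It also checks the BAC − CAB rule numerically.

```python
import itertools

import numpy as np


def levi_civita(n: int = 3) -> np.ndarray:
    """Hypothetical helper: n-index Levi-Civita symbol as an n x ... x n int array.

    Indices run over 0..n-1 (zero-based) instead of 1..n.
    Entries are +1 for even permutations, -1 for odd ones, 0 if an index repeats.
    """
    eps = np.zeros((n,) * n, dtype=int)
    for perm in itertools.permutations(range(n)):
        # Parity of a permutation = parity of its number of inversions;
        # each adjacent swap changes the inversion count by exactly one.
        inversions = sum(
            1 for p in range(n) for q in range(p + 1, n) if perm[p] > perm[q]
        )
        eps[perm] = -1 if inversions % 2 else 1
    return eps


eps = levi_civita(3)
delta = np.eye(3, dtype=int)

rng = np.random.default_rng(0)
a, b, c = rng.standard_normal((3, 3))  # three random test vectors

# (b x c)_k = eps_klm b_l c_m; einsum sums over l and m.
cross_bc = np.einsum("klm,l,m->k", eps, b, c)
print(np.allclose(cross_bc, np.cross(b, c)))  # True

# Sum over k of eps_ijk eps_klm for every (i, j, l, m): +1 when i = l, j = m
# (i != j), -1 when i = m, j = l (i != j), and 0 otherwise.
contraction = np.einsum("ijk,klm->ijlm", eps, eps)
deltas = np.einsum("il,jm->ijlm", delta, delta) - np.einsum("im,jl->ijlm", delta, delta)
print(np.array_equal(contraction, deltas))  # True

# BAC - CAB: (a x (b x c))_i = eps_ijk a_j eps_klm b_l c_m.
triple = np.einsum("ijk,j,klm,l,m->i", eps, a, eps, b, c)
print(np.allclose(triple, b * (a @ c) - c * (a @ b)))  # True
```

`einsum` sums over every index letter that does not appear after `->`. Each call is therefore a direct transcription of the implicit-sum notation used in the text.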
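Hence we have the lemma

$$\epsilon_{ijk}\epsilon_{klm} = \delta_{il}\delta_{jm} - \delta_{im}\delta_{jl}$$

We can now complete the proof:

$$(\mathbf{a} \times (\mathbf{b} \times \mathbf{c}))_i = a_j b_l c_m (\delta_{il}\delta_{jm} - \delta_{im}\delta_{jl})$$

Using the delta functions to evaluate the sums over l and m,

$$= b_i a_j c_j - c_i a_j b_j$$

Recall that the repeated index j is an implicit sum,

$$a_j c_j = \sum_{j=1}^{3} a_j c_j = \mathbf{a} \cdot \mathbf{c}$$

We then have

$$(\mathbf{a} \times (\mathbf{b} \times \mathbf{c}))_i = b_i (\mathbf{a} \cdot \mathbf{c}) - c_i (\mathbf{a} \cdot \mathbf{b})$$

Since this holds for any component,

$$\mathbf{a} \times (\mathbf{b} \times \mathbf{c}) = \mathbf{b}\,(\mathbf{a} \cdot \mathbf{c}) - \mathbf{c}\,(\mathbf{a} \cdot \mathbf{b})$$

1.3 Determinants

We can write a 3 × 3 determinant as

$$\det A = \frac{1}{3!}\,\epsilon_{ijk} A_{il} A_{jm} A_{kn} \epsilon_{lmn}$$

There are six summed indices, so the right side has $3^6 = 729$ terms. Only 36 of them are nonzero. These fall into six groups of six identical terms, one group for each term of the determinant, hence the factor of 1/3!.

We can also write a four-dimensional determinant,

$$\det A = \frac{1}{4!}\,\epsilon_{ijkl} A_{im} A_{jn} A_{kp} A_{lq} \epsilon_{mnpq}$$

where $\epsilon_{ijkl}$ is +1 for even permutations of 1234, −1 for odd permutations of 1234, and 0 if any index repeats.

2 Rotation of vectors

2.1 Setup: 3D rotations

Definition (Rotations). A passive rotation is a rotation of the coordinate frame that leaves all vectors in space fixed. An active rotation rotates a vector but leaves the coordinate frame fixed.

Note. In this course, we will deal mostly with passive rotations.

Consider a passive rotation in which the x and y coordinate axes are rotated counter-clockwise by an angle φ, while the z axis is held fixed. We denote the rotated system with a prime. The transformation rule is

$$a'_i = \sum_{j=1}^{3} \Lambda_{ij} a_j$$

Apart from the entries for the fixed z axis, the elements of the matrix Λ are sines and cosines of φ:

$$\Lambda = \begin{pmatrix} \cos\phi & \sin\phi & 0 \\ -\sin\phi & \cos\phi & 0 \\ 0 & 0 & 1 \end{pmatrix}$$

We usually suppress the sum and write

$$a'_i = \Lambda_{ij} a_j$$

Equivalently, the transformation can be written using a tensor,

$$\mathbf{a}' = \overleftrightarrow{\Lambda}\,\mathbf{a}$$

2.2 Properties of transformation matrices

2.2.1 Orthogonality

We would like to find the properties of the transformation matrix

$$\overleftrightarrow{\Lambda} = \begin{pmatrix} \Lambda_{11} & \Lambda_{12} & \Lambda_{13} \\ \Lambda_{21} & \Lambda_{22} & \Lambda_{23} \\ \Lambda_{31} & \Lambda_{32} & \Lambda_{33} \end{pmatrix}$$

The transformation matrix is constrained by the requirement that a vector keep the same length under rotation. In other words, the dot product of the vector with itself must be preserved:

$$a_i a_i = a'_i a'_i = \Lambda_{ij} a_j \Lambda_{ik} a_k$$

Note. We have to use different summation indices for the Λ matrices.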
Indices are repeated only if we intend to sum over them. Which symbols we choose for the second indices of the Λ matrices does not matter, as long as they differ.

We would like to rearrange the order of the indices on one of the Λ matrices. Since indices cannot be permuted at will, we have to take the transpose,

$$\Lambda^T_{ki} = \Lambda_{ik}$$

Since we are working in components, we can switch the order of the factors,

$$a'_i a'_i = a_k \Lambda^T_{ki} \Lambda_{ij} a_j$$

The matrices have a repeated inner index, which implies matrix multiplication:

$$\Lambda^T_{ki} \Lambda_{ij} = (\Lambda^T \Lambda)_{kj}$$

If the rotation is to preserve length for every vector,

$$a_i a_i = a_k (\Lambda^T \Lambda)_{kj} a_j$$

then it must be that

$$(\Lambda^T \Lambda)_{kj} = \delta_{kj}$$

that is,

$$\Lambda^T \Lambda = \mathbb{1}_3$$

This is the characteristic property of orthogonal matrices,

$$\Lambda^T = \Lambda^{-1}$$

Note. The complex analogue is a unitary matrix, for which the Hermitian conjugate takes the place of the transpose, $U^\dagger = U^{-1}$. We will always deal with cases where Λ is real.

2.2.2 Degrees of freedom

It takes 3 Euler angles to define a rotation, yet Λ has nine components. The orthogonality requirement $(\Lambda^T\Lambda)_{kj} = \delta_{kj}$ gives 9 equations, but 3 of them are redundant. Because $\Lambda^T\Lambda$ is symmetric, the equation for (k, j) is the same as the one for (j, k). The remaining 6 constraints reduce the number of degrees of freedom to 9 − 6 = 3, as expected.

2.3 Rotation of tensors

2.3.1 Definition

Definition (Tensor). A tensor is an object that transforms according to the rule that we are about to show.

Note. Tensors can be represented by 3 × 3 matrices. A 3 × 3 matrix is not always a tensor, however.

Tensors act on vectors to produce new vectors,

$$\mathbf{b} = \overleftrightarrow{T}\,\mathbf{a}$$

2.3.2 Transformation rule

We would like to see how a tensor rotates. Beginning with the defining equation of the tensor, we would like to see how to phrase it in a rotated system.
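$$\mathbf{b} = \overleftrightarrow{T}\,\mathbf{a} \qquad \mathbf{b}' = \overleftrightarrow{T}'\,\mathbf{a}'$$

We also have the transformation properties from before,

$$\mathbf{a}' = \overleftrightarrow{\Lambda}\,\mathbf{a} \qquad \mathbf{b}' = \overleftrightarrow{\Lambda}\,\mathbf{b}$$

In component notation,

$$b_i = T_{ij} a_j \qquad b'_i = T'_{ij} a'_j \qquad a'_j = \Lambda_{jl} a_l \qquad b'_i = \Lambda_{ik} b_k$$

Substituting into $\mathbf{b}' = \overleftrightarrow{T}'\,\mathbf{a}'$,

$$\Lambda_{ik} b_k = T'_{ij} \Lambda_{jl} a_l$$

Knowing the desired result, we multiply both sides by $\Lambda^{-1}_{mi}$ and sum over i:

$$\Lambda^{-1}_{mi} \Lambda_{ik} b_k = \Lambda^{-1}_{mi} T'_{ij} \Lambda_{jl} a_l$$

This step is useful because the repeated inner index i on the left side of the equation indicates matrix multiplication. Since $\Lambda^{-1}\Lambda$ is the identity, the sum on i gives

$$(\Lambda^{-1} \Lambda)_{mk} = \delta_{mk}$$

Repeated inner indices on the right also indicate matrix multiplication. With these simplifications,

$$\delta_{mk} b_k = (\Lambda^{-1} T' \Lambda)_{ml} a_l$$

$$b_m = (\Lambda^{-1} T' \Lambda)_{ml} a_l$$

The quantity in parentheses is a tensor,

$$\mathbf{b} = \overleftrightarrow{(\Lambda^{-1} T' \Lambda)}\,\mathbf{a}$$

Compare this with the defining equation $\mathbf{b} = \overleftrightarrow{T}\,\mathbf{a}$, which holds for every $\mathbf{a}$. We must have

$$T = \Lambda^{-1} T' \Lambda$$

The similarity transformation for tensors is thus

$$T' = \Lambda T \Lambda^{-1}$$

In component notation, using orthogonality ($\Lambda^{-1} = \Lambda^T$),

$$T'_{ij} = \Lambda_{ik} T_{kl} \Lambda^{-1}_{lj} = \Lambda_{ik} T_{kl} \Lambda^T_{lj} = \Lambda_{ik} T_{kl} \Lambda_{jl}$$

The most convenient way to write the transformation law for tensors is

$$T'_{ij} = \Lambda_{ik} \Lambda_{jl} T_{kl}$$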
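The rotation results can be checked numerically with the minimal sketch below, which assumes NumPy. `passive_z_rotation` is a hypothetical helper. It returns the standard matrix for a frame whose x and y axes are turned counter-clockwise by φ about a fixed z axis:

$$\Lambda = \begin{pmatrix} \cos\phi & \sin\phi & 0 \\ -\sin\phi & \cos\phi & 0 \\ 0 & 0 & 1 \end{pmatrix}$$

The angle 0.3 and the random T and a are arbitrary test data. The code makes five checks:

• $\Lambda^T\Lambda = \mathbb{1}_3$.
• The 1/3! Levi-Civita determinant formula of section 1.3 agrees with NumPy's determinant.
• Length is preserved.
• $T'_{ij} = \Lambda_{ik}\Lambda_{jl}T_{kl}$ matches $\Lambda T \Lambda^{-1}$.
• The rotated quantities still satisfy $\mathbf{b}' = T'\mathbf{a}'$.

```python
import math

import numpy as np


def passive_z_rotation(phi: float) -> np.ndarray:
    """Hypothetical helper: Lambda for a frame whose x and y axes are turned
    counter-clockwise by phi about a fixed z axis, so that a'_i = Lambda_ij a_j."""
    c, s = np.cos(phi), np.sin(phi)
    return np.array([[c, s, 0.0],
                     [-s, c, 0.0],
                     [0.0, 0.0, 1.0]])


# Three-index Levi-Civita symbol, zero-based (index 0 plays the role of 1, etc.).
eps = np.zeros((3, 3, 3))
eps[0, 1, 2] = eps[1, 2, 0] = eps[2, 0, 1] = 1.0
eps[0, 2, 1] = eps[2, 1, 0] = eps[1, 0, 2] = -1.0

Lam = passive_z_rotation(0.3)

# Orthogonality: (Lambda^T Lambda)_kj = Lambda_ik Lambda_ij should equal delta_kj.
print(np.allclose(np.einsum("ik,ij->kj", Lam, Lam), np.eye(3)))  # True

# Section 1.3 formula: det A = (1/3!) eps_ijk A_il A_jm A_kn eps_lmn.
det_lam = np.einsum("ijk,il,jm,kn,lmn->", eps, Lam, Lam, Lam, eps) / math.factorial(3)
print(np.isclose(det_lam, np.linalg.det(Lam)))  # True (both equal 1 here)

# A tensor T maps a to b = T a in the unprimed frame.
rng = np.random.default_rng(1)
T = rng.standard_normal((3, 3))
a = rng.standard_normal(3)
b = T @ a

# Rotate the vectors, a'_i = Lambda_ij a_j, and the tensor, T'_ij = Lambda_ik Lambda_jl T_kl.
a_p = np.einsum("ij,j->i", Lam, a)
b_p = np.einsum("ij,j->i", Lam, b)
T_p = np.einsum("ik,jl,kl->ij", Lam, Lam, T)

print(np.isclose(a_p @ a_p, a @ a))        # True: length is preserved
print(np.allclose(T_p, Lam @ T @ Lam.T))   # True: T' = Lambda T Lambda^{-1}
print(np.allclose(b_p, T_p @ a_p))         # True: b' = T' a' in the rotated frame
```

Writing each transformation with `einsum` keeps it a literal copy of the index expression. The matrix forms such as `Lam @ T @ Lam.T` serve as an independent cross-check.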