Information Domain Modeling for Adaptive Web Systems

advertisement

Information Domain Modeling for Adaptive Web Systems

Wenpu Xing and Ali A. Ghorbani

Intelligent & Adaptive Systems (IAS) Research Group

Faculty of Computer Science

University of New Brunswick

Fredericton, NB, Canada

wenpu.xing, ghorbani@unb.ca

Abstract

This paper presents a Domain Modeling System, which

builds a domain model framework for adaptive Web systems. It records concepts and the relationships among them

and represents them as a concept network. To speed up run

time searches, the system finds all related concepts by calculating the optimal paths between all pairs of concepts offline in advance. In addition, a new algorithm, Rich Maximal Frequent Sequence algorithm, is introduced in the system for discovering frequent sequence patterns among concepts. To test the Domain Modeling System, it is applied to

an adaptive web system. The experiments demonstrate that

the adaptive Web system is improved in the performance of

accurate page recommendations and quick responses.

1. Introduction

In the past few years, the World Wide Web has expanded

quickly and has been permeating people’s lives. People can

do many of their daily activities online, such as shopping,

reading news, banking or booking a flight seat or a restaurant. The Web makes life convenient. However, with the

fast growth of the Web, people are not satisfied with viewing the same content on the same web page and are not

happy with easily getting lost in the hyperspace of the Web.

To cater to people’s needs, Adaptive Web Systems (AWSs)

are demanded to provide the exact information people need,

present in the way people prefer, and guide people to a destination through an optimal path [2, 3, 5, 11]. This requires

the systems to know exactly the information domain and

manage it. To make the information domain much easier

to manage, recording the information as conceptual units,

which we call concepts, along with the associations among

them, which we call relationships, [4, 10, 7, 9] is necessary. For example, in the existing AWSs, such as Interbook

[2], AHA! [3], SKILL [8] and ELM-ART [11], concepts

and relationships have been widely used. The systems study

the concepts and relationships in their own information domains and generate dynamic pages based on the study and

user information (i.e., interests, preference, goals and background).

Moreover, to improve the reusability and the modifiability of AWSs, the information domain can be encapsulated as

a domain model. Many AWSs, such as AHA! [3], have been

built by recording the content and the navigation structure

as domain models. However, there is no standard, comprehensive framework or design pattern for building them. To

avoid having developers repeat the same work that others

have already done for domain modeling of AWSs and promote knowledge transfer between AWSs, building a domain

model framework for AWSs is paramount. To concentrate

on this issue, this research works on studying the concepts

and the relationships in the information domains and building a domain modeling system. The domain modeling system provides a domain model framework for AWSs with the

techniques of effectively using concepts and relationships.

Finally, to evaluate it, the domain modeling system is applied to an AWS, Adaptive Recommendation for Academic

Scheduling (ARAS). The experiments are also shown in this

paper.

The rest of this paper is organized as follows. Section

2 presents the domain modeling system in detail. The experiments of evaluation are shown in Section 3. Finally, the

conclusion is addressed in Section 4.

2 Domain Modeling System

The Domain Modeling System (DMS) is aimed at supporting the development of AWSs. DMS builds a domain

model framework for AWSs. The system focuses on two

things: 1) defining a general structure to represent the information domains of AWSs and 2) providing techniques

to consume the information more efficiently and quickly.

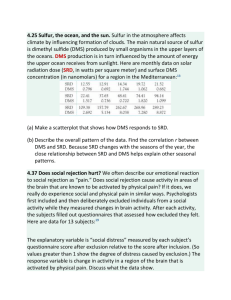

The structure of the proposed DMS is shown in Figure 1.

Domain Modeling System

na

Author Tool

sets

Domain Model

used by

Recommendation

Provider

request

Concepts

sets

provides

sets

Pattern Miner

Environment

response

Relationships

uses

concept

remmendation

uses

sets

uses

Graph Generator

usage

data

Figure 1. The Structure of the Domain Modeling System (DMS)

The system consists of a data model, namely domain model,

and four processors, namely author tool, pattern miner,

graph generator and recommendation provider. The domain model encapsulates the information domain and describes how it is represented as concepts and relationships.

The processors provide the functionalities of constructing

and consuming the domain model. First of all, the author

tool provides an interface for authors to input concepts and

relationships to AWSs. Then new relationships are retrieved

from the existing relationships or usage data and recorded in

the domain model by the pattern miner. After that, the graph

generator builds a concept network and finds out the optimal

paths for each pair of the concepts based on the priorities of

the concepts and the relationships stated in the concept network. Finally, the recommendation provider generates concept recommendations to given requests by using the optimal paths and other necessary information in the domain

model.

2.1 Domain model

2.1.1 Concepts

Concepts are the fundamental classifications or units of information within the system. According to the information

included, concepts in DMS are divided into two categories:

atomic concepts and composite concepts (as shown in Figure 2). Atomic concepts are a special kind of composite

concept. They are the smallest items recorded in the domain model and do not need to be further broken down.

For example, an icon, an image, or a fragment of text is

an atomic concept. Composite concepts are those that consist of several other composite concepts, which can be composite concepts or atomic concepts. However, a composite

concept cannot be constituted by its sub concepts.

Composite

Concepts

*

1

Atomic

Concepts

Figure 2. Concept categorization

The domain model represents the information domain as

conceptual units, which are concepts, along with the associations among them, which are relationships. To record

the concepts and the relationships in a common structure

and then to facilitate information exchange between applications within different domains, a general structure, Ontology, is presented. The detailed information is described in

the following subsections.

2.1.2 Relationships

A relationship describes in what way (if any) two concepts

are related and to what degree that relationship exists. In

DMS, two kinds of relationships are considered: predefined

relationships, which are those defined by the author, and

discovered relationships, which are those mined by the system from the usage data.

Predefined Relationships: the relationships that can be observed by studying the information domain. To state the

generalization of these relationships to AWSs, the predefined relationships in DMS are further divided into two

groups based on their life scopes: domain independent,

which are independent from any domain specific concepts,

and domain specific, which are dependent on some domain

specific concepts and will not be listed until an application

domain is specified. The domain independent relationships

considered in DMS are listed as follows:

1. IsA(a,b): Concept a is a concept b iff a is defined as a

sub concept or an instance of b.

2. Prerequisite(a,b): Concept a is a prerequisite of concept b iff accessing b requires knowing a.

3. Co-requisite(a,b): Concept a is a co-requisite of concept b iff they must be processed together.

4. Inhibition(a,b): Concept a is an inhibition of concept

b iff a should not be accessed after accessing b.

5. Similarity(a,b): Concept a is similar to concept b iff

their contents are similar.

6. Containment(a,b)/Member(b,a): Concept a contains

concept b and b is a member of a iff b is held within

container a.

7. Whole(a,b)/Part(b,a): Concept b is a part of concept

a iff an occurrence of a would necessarily involve an

occurrence of b, but not vice versa.

1. Association(a,b): Concept a is an association of concept b iff the presence of a in the sessions implies the

presence of b.

2. MaximalFrequentSequence(a,b,c,d): The sequence (a,

b, c, d) is a maximal frequent sequence iff the following three requirements are met: 1) these concepts are

always presented in order in the sessions; 2) the occurrence of the sequence in the sessions is greater than

the given threshold value; and, 3) the sequence is not

contained in any other longer frequent sequences.

2.1.3 Ontology

Ontology has become a popular word in information- and

knowledge-based systems research, such as the Semantic

Web [6], and has been developed in artificial intelligence to

facilitate knowledge sharing and reuse. Ontologies play a

key role in advanced information exchange as they provide

a common understanding of a domain. In DMS, we present

an ontology to represent the domain model by describing

the vocabulary and structure of the information those domains contain. Figure 3 shows the structure of the ontology. The Ontology contains the concepts and the relationships and provides an interface to query, update, and create

them. Each concept is represented by a set of attributes that

are descriptive properties possessed by each instance of the

concept. Each concept has one or more values for each of

its attributes. Each relationship associates with one source

concept and one target concept.

Ontology

1

8. Sibling(a,b): Concept a is a sibling of concept b iff

they are contained in the same concept. For example, part(a,x) and part(b,x), or member(a,y) and member(b,y) are satisfied.

Concept

9. Equivalent(a,b): Concept a is equivalent to concept b

iff they are essentially equal.

Attribute

10. Complement(a,b): Concept a is a complement of concept b iff they are totally different and the union of

them constitutes the universe.

11. Link(a,b): Concept b is a link of concept a iff a direct

link from a to b exists.

Discovered Relationships: the patterns among concepts

observed from the usage data. They cannot be found by analyzing only the information domain. Because associations

and frequent sequences of concepts are popularly used in

electronic systems (i.e., e-commerce or e-learning) for providing recommendations, DMS defines the following two

discovered relationships:

*

1

1

1

source

*

* Relationship

1

target

*

*

1

*

Value

Figure 3. Ontology structure of DMS

2.2 Processors

In contrast to the domain model, which provides a data

structure for AWSs, the processors of DMS present functionalities of setting data, discovering useful information

from the available data, and generating recommendations.

Detailed information is provided in the following subsections.

2.2.1 Author Tool

The author tool is a component of DMS for authors to interact with the system. By using this tool, authors can send

requests for retrieving, updating, or adding the concepts and

the relationships from or to the domain model. The system

manipulates the concepts or the relationships according to

the given requests. With the tool, authors can set all necessary concepts and the relationships between them to the

domain model. However, with the exponential growth of

Web systems, this task becomes not only time consuming

but also challenging. To reduce the workload of the authors caused by figuring out the relationships that can be

derived from available data, the system provides two relationship finders: sibling finder and similarity finder. The

sibling finder discovers siblings from the relationships of

containment and whole based on the definition of sibling relationship. The similarity finder discovers similar concepts

by analyzing the topics contained in the concepts. Furthermore, to check the consistence and the integrality of the

relationships, a relationship checker is provided based on

the properties of the relationships and the implications between them. For example, sibling(a,b) implies sibling(b,a)

and containment(a,b) implies member(b,a).

2.2.2 Pattern Miner

The pattern miner provides two sub-miners, Association

Miner and Rich Maximal Frequent Sequence Miner, to find

the discovered relationships defined in DMS from usage

data, respectively.

1. Association Miner: is used to discover the association

relationships from the Web access logs. The miner applies the APRIORI algorithm. APRIORI was originally proposed by Agrawal in [1] in 1994 to find frequent itemsets and association rules in a transaction

database. Now, it is the most basic and well-known

algorithm to find frequent itemsets. The algorithm

generates association rules by following the following

process: at first, all frequent itemsets, whose occurrence is greater than a given threshold value of support, are incrementally discovered from the transaction

database (for example, the itemsets with length k are

spread from the itemsets with length k − 1); next, rules

between the frequent itemsets are generated by checking the probability of the transactions containing both

of the itemsets (for example, the association(a,b) holds

iff the probability of the sessions containing both a

and b is greater than the threshold value of confidence).

The algorithm provides an efficient way to generate the

candidate itemsets in a database pass by using only the

itemsets found large in the previous pass. Algorithm 1

shows the algorithm. Detailed information is described

in [1].

Algorithm 1 APRIORI Algorithm [1]

Input:

D: database

Output:

all frequent itemsets.

Method:

1) L1 = {large 1-itemsets};

2) for (k = 2; Lk−1 6= ∅; k + +) do begin

3)

Ck = apriori-gen(Lk−1 );//New candidates

4)

forall transactions t ∈ D do begin

5)

Ct = subset(Ck , t);//Candidates contained in t

6)

forall candidates c ∈ Ct do

7)

c.count++;

8)

end

9)

Lk = {c ∈ Ck | c.count ≥ minsup}

10) end S

11) return k Lk ;

2. Rich Maximal Frequent Sequence Miner: is used to

discover the traversal patterns, maximal frequent sequences, from Web access logs. A Frequent Sequence

Tree (FSTree), a tree-like data structure, is introduced

to record the frequent items and the maximal frequent

sequences. The tree is constructed by following these

steps. Firstly, an empty tree is defined with a root

node only. Secondly, the frequent 1-item sequences

are found by counting their occurrences in the sessions

and added to the tree as child nodes of the root. Within

each node, the occurrence of the included item and the

path from the root to the current node are recorded

to speed up later searches. Thirdly, for each node,

M SN ode, in the newly updated level of the tree, every

frequent 1-item C is considered as a potential child and

a corresponding candidate frequent sequence is generated by appending C to the current path recorded in

the node. Then, the candidate’s occurrence is counted

by observing the sessions. If the candidate is frequent, C will be added to the tree as a child node of

MSNode with the candidate sequence and its occurrence. Fourthly, step three will be continued until the

construction of the tree is well done. In the finished

FSTree, all maximal frequent sequences are recorded

in the leaf nodes as their current path.

Moreover, to speed up decision making at the cross

points of similar sequences (i.e., (a, b, c, d) and

(a, b, c, e)), weights are added to the sequences to identify the priority of step choices. For example, the sequence (c1 , c2 , c3 ) becomes ( c1 , wc1 c2 , c2 , wc2 c3 , c3 ),

where wci ci+1 denotes the priority of the choice from

ci to ci+1 . Sequences with weights are called rich

frequent sequences while the algorithm that generates

them is call Rich Maximal Frequent Sequence algorithm (RMFS). The pseudocode of RMFS is shown as

Algorithm 2.

Algorithm 2 RMFS Algorithm

Input:

S1 , S2 ,...,Sn :sessions.

smin :minimum support threshold.

Output:

all rich maximal frequent sequences (RMFSs)

Method:

1)

F ST ree = an empty sequence tree;

2)

F S1 = {frequent 1-item sequences};

3)

update(F ST ree, F S1 );

4)

for( k = 2; F Sk−1 6= ∅; k + +) do

5)

F Sk−1 = {sequences with length k − 1 in ST };

6)

Ck = genPathCandidate(F Sk−1 );

7)

for i from 1 to n do

8)

count(Ck , Si );

9)

F Sk = {p ∈ Ck |p.count ≥ smin }

10)

update(F ST ree, F Sk );

11)

RMFSs = richPathsGen(F ST ree);

12)

return RMFSs;

their priorities. The weight of a concept a is propagated from its referrer concepts that are concepts

linked to it. A concept gets one value proportional to

its popularity (numbers of inlinks and outlinks) from

each referrer concept. The popularity from the number

in

out

of inlinks and outlinks is recorded as P(v,u)

and P(v,u)

,

in

respectively. P(v,u) is the popularity of link(v, u) calculated based on the number of inlinks from concept

v to concept u and the number of inlinks of all referout

ence concepts of concept v. P(v,u)

is the popularity of

link(v, u) calculated based on the number of outlinks

of concept u and the number of outlinks of all reference concepts of concept v.

in

P(v,u)

=P

Iv,u

c∈R(v) Ic

out

P(v,u)

=P

Ou

c∈R(v)

Oc

(1)

(2)

where Iv,u represents the number of inlinks from concept v to concept u. I(c) represents the number of

inlinks of concept c. Ou and Oc represent the number

of outlinks of concept u and concept c, respectively.

R(v) denotes the reference concept list of concept v.

The weight of a concept is calculated by summing up

the products of each referrer concept’s weight and corresponding popularity.

W (u) =

X

in

out

W (v)P(v,u)

P(v,u)

(3)

v∈B(u)

2.2.3 Graph Generator

Because AWSs generate dynamic pages to the given requests on the fly, response time is a main concern. Therefore, processing as much information as possible in advance

is necessary. The graph generator provides such a technique

to accelerate the information query for providing recommendations by building a concept network and finding all

optimal paths between each pair of concepts. The process

is described as follows:

• Building a concept network: Firstly, DMS builds

a concept network by representing the concepts as

nodes, and the relationships as links. At this step, multiple links are allowed between nodes because multiple

relationships might exist between concepts.

• Setting weights to the concepts and the relationships: Secondly, to emphasize the importance of the

concepts and the relationships to the system, weights

are set. The more important they are, the larger

weights they will have. The weights of the relationships can be set arbitrarily by the author according to

where B(u) is the set of concepts that link to concept

u. W (u) and W (v) represent the weights of concepts

u and v, respectively. The weights of the concepts and

the relationships are set to the corresponding nodes and

links in the concept network.

• Combining the multiple links between nodes: To

reduce the search time through the concept network,

multiple links between nodes are combined. The multiple links are combined to a single link, and the maximal weight of the links is set to the combined link.

• Finding related concepts: Finding related concepts

for each concept off-line in advance is another efficient

method to reduce the response time of AWSs. The related concepts are discovered by calculating optimal

paths between each pair of concepts according to the

importance of concepts and relationships. Equation 4

is defined to calculate the paths’ weights. The path

with the largest weight is defined as the optimal path

and saved into the domain model.

P

W (Ci ) × W (Ci−1 Ci )

n−1

(4)

where PCs Ct is any path from the source concept Cs

to the target concept Ct . W (PCs Ct ) represents the

weight of the path PCs Ct . n is the number of concepts on the path. Ci denotes the ith concept on the

path. W (Ci ) and W (Ci−1 Ci ) represent the weights

of concept Ci and link Ci−1 Ci , respectively.

W (PCs Ct ) =

i = 2 to n

2.2.4 The Recommendation Provider

Finally, a built-in recommendation provider is introduced

into DMS to recommend related concepts to the given requests. Once DMS receives a request about a specific concept, the system checks the information contained in the

request. If there is any user’s information (i.e., interests,

browsed history in the current session), the information is

passed to the recommendation provider with the requested

concept. Otherwise, only the requested concept is passed.

The recommendation provider finds a list of closely related

concepts to the requested concept and/or the user information according to the optimal paths and the discovered patterns of association and maximal frequent sequences stated

in the domain model. The name and/or URLs of the related

concepts are passed to DMS. At the end, DMS provides the

requested concept with these recommended concepts to the

given request as a response.

3 Evaluation

For the purpose of evaluating our work, DMS is applied to an AWS, Adaptive Recommendation for Academic

Scheduling (ARAS). ARAS is an online system that aims

to provide adaptive support to the course selection process

for students without the assistance of advisors. The system

generates recommendations to students based on course information, and users’ interests and course-taken history.

To demonstrate the helpfulness of DMS to ARAS, three

sample systems are developed and compared based on their

performance:

• System A: in which only relationships prerequisite and

containment are considered. Once the system receives

a concept request, it searches through the relationships

and concepts and finds related concepts online for the

given request based on the user’s interests.

• System B: in which all predefined relationships defined in DMS are considered. The system finds all related concepts for each concept in the domain model

in advance off-line by following the process described

in section 2.2.3. Once the system receives a concept

request, it provides the related concepts of the given

request from its recorded related concept list immediately.

• System C: in which all predefined relationships defined in DMS are considered. Similar to system B,

the related concepts of each concept are found offline in advance based on these relationships and concept information. In addition, the discovered relationships, which are associations and maximal frequent

sequences, are discovered in advance in this system.

Once the system receives a concept request, the system not only finds the related concepts of the given

request from its recorded related concept list, but also

generates recommendations based on the associations

and the maximal frequent sequences.

The performance of these systems is measured in two

ways: accuracy of recommendations, and response time of

requests. The accuracy of recommendations measures how

close the recommendations are to what users want. The response time measures how fast the system can provide the

requested concept to users. In this research, the accuracy

of recommendations is calculated based on two parameters:

recall and precision. Recall is the portion of the number of

concepts recommended correctly by the system to the number of concepts the users really want. Precision is the portion of the number of concepts recommended correctly by

the system to the total number of recommended concepts.

Since ARAS takes the University of New Brunswick,

Fredericton, Canada, as the sample application domain, the

real data of the students at the university is used as sample

data for DMS. The sample data is preprocessed in two steps:

firstly, a set of data is separated from the sample data set for

mining the discovered relationships; then, the rest of the

data is divided into training data sets and test data sets: the

courses taken in each student’s last term are considered as

a test set, which represents what the student wants, and the

courses taken in previous terms are considered as a corresponding training set, which represents the student’s course

taken history. The system generates recommendations for

each student based on course information, curriculum information, and students’ interests, which are discovered from

the student’s course taken history. The recommendations

are measured against the corresponding test data set.

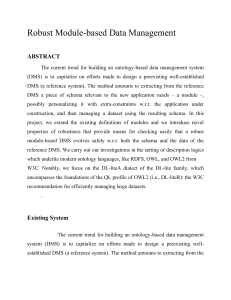

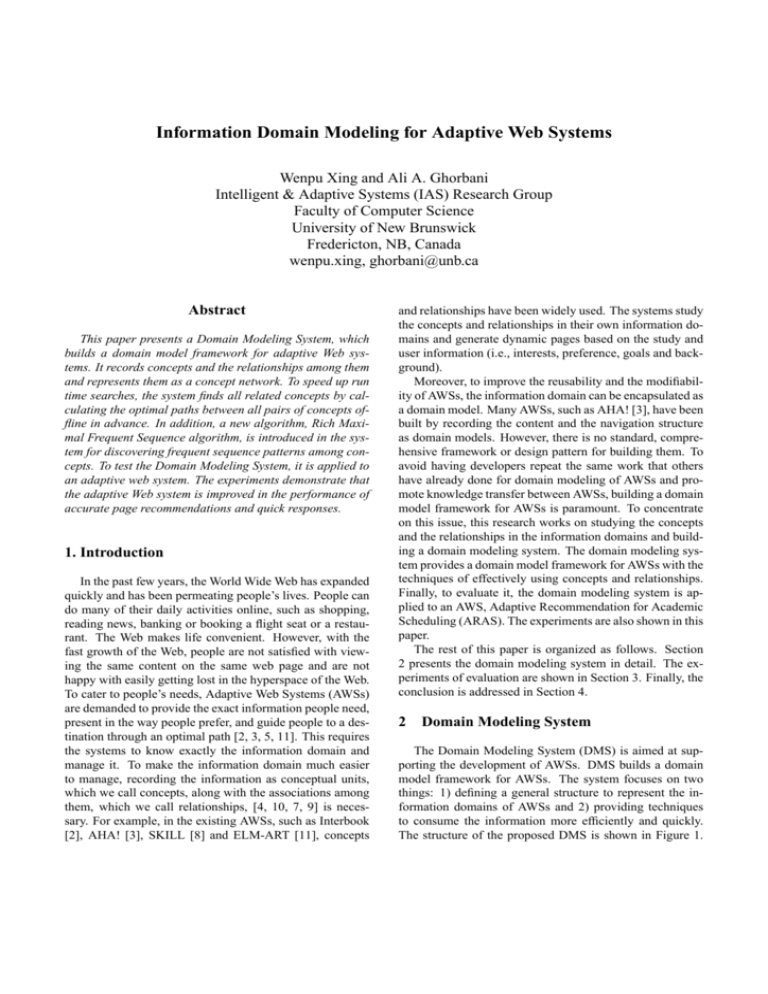

Figure 4 presents the accuracy of the recommendations

provided by the three sample systems. In the graph, the

number of relationship types considered in the system is

shown as the x-axis while accuracy is taken as the y-axis.

The graph shows that the more relationships are considered

in the system, the bigger recalls but smaller precisions the

recommendations have because the more recommendations

are provided. However, since the decrement ratio of the

precisions is less than the increment ratio of the recalls, Sys-

tems B and C provide better recommendations. Therefore,

DMS represented by System C improves the performance

of ARAS in accuracy of recommendations.

60

Recall

Precision

50

References

Accuracy (%)

40

30

20

10

0

3

4

5

6

7

8

9

Number of Relationship Types

10

11

12

13

Figure 4. Accuracy of the Sample Systems

To measure the response time, the average response time

of requests is calculated in the sample systems. The results

are shown in Table 1. The key point of the results is that

even though many more relationships are considered in Systems B and C, their response times are still less than that of

System A. This result shows that DMS accelerates ARAS’

responses.

System A

System B

System C

tems. In the future, discovering techniques for generating

relationship weights automatically based on concept information is planed. In addition, the system is planned to be

extended with an ontology proxy for exchanging information between different domains.

Average Response Time(millSec)

2740200

2020

2555

Table 1. Average response time of the sample

systems

In general, improvements of DMS to ARAS in accuracy of recommendations and response time demonstrate

that DMS improves the performance of ARAS.

4 Conclusion

In this paper, a domain modeling system is presented.

The system not only provides a list of general concept relationships in AWSs, but also introduces a general structure

for recording domain information for AWSs. Moreover, in

order to improve the performance of AWSs, techniques of

effectively using the domain information are addressed. Finally, the feasibility of the domain modeling system is presented by the experiment comparisons of three sample sys-

[1] R. Agrawal and R. Srikant. Fast algorithms for mining association rules. In J. B. Bocca, M. Jarke, and C. Zaniolo,

editors, Proc. 20th Int. Conf. Very Large Data Bases, VLDB,

pages 487–499. Morgan Kaufmann, 12–15 1994.

[2] P. Brusilovsky, J. Eklund, and E. Schwarz. Web-based education for all: A tool for developing adaptive courseware.

In Computer Networks and ISDN Systems (Proceedings of

Seventh International World Wide Web Conference), pages

291–300, April 1998.

[3] P. De Bra and L. Calvi. AHA: a generic adaptive hypermedia system. In Proceedings of the 2nd Workshop on Adaptive

Hypertext and Hypermedia, HYPERTEXT‘98, Pittsburgh,

USA, June 20–24 1998.

[4] C. Eliot, D. Neiman, and M. Lamar. Medtec: A web-based

intelligent tutor for basic anatomy. In World Conference of

the WWW, Internet, and Intranet (Web-Net’97), pages 161–

165, Toronto, Canada, October 1997.

[5] M. Kilfoil, A. Ghorbani, W. Xing, Z. Lei, J. Lu, J. Zhang,

and X. Xu. Toward an adaptive web: The state of the art

and science. In Proceedings of Communication Network and

Services Research (CNSR) 2003 Conference, pages 108–

119, Moncton, NB, Canada, May 15–16 2003.

[6] M. Klein, J. Broekstra, D. Fensel, F. van Harmelen, and

I. Horrocks. Spinning the Semantic Web, chapter 4, pages

95–141. The MIT Press, 2003.

[7] W. Nejdl and M. Wolpers. Kbs hyperbook – a data-driven

information system on the web. In WWW8 Conference,

Toronto, May 1999.

[8] G. Neumann and J. Zirvas. Skill - a scalable internet-based

teaching and learning system. In Proceedings of WebNet 98,

World Conference on WWW, Internet and Intranet AACE,

pages 7–12, Orlando, Fl, November 1998.

[9] M. Specht and R. Opermann. Ace - adaptive courseware

environment. The New Review of Hypermedia and Multimedia, 4:141–161, 1998.

[10] M. Specht, G. Weber, S. Heitmeyer, and V. Schoch. Ast:

Adaptive www-courseware for statistics. In Workshop on

Adptive Systems and User Modeling on the World Wide Web

at UM’97 Conference, pages 91–95, Chia Laguna, Sardinia,

Italy, June 1997.

[11] G. Weber and M. Specht. User modeling and adaptive navigation support in www-based tutoring systems. In Proceedings of User Modeling’97, pages 289–300, 1997.