Math 103, Summer 2006 Diagonalizability & Complex Eigenvalues

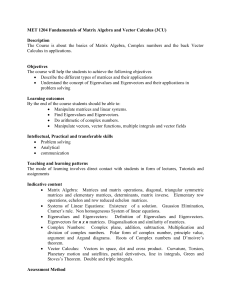

advertisement

Math 103, Summer 2006

Diagonalizability & Complex Eigenvalues

August 3, 2006

DIAGONALIZABILITY & COMPLEX EIGENVALUES

1. Similarity

We started class with a Mathematica example showing two matrices that have the same geometric action but with respect to different bases: first we described the action on a ‘nice’ basis, and then we changed back to standard coordinates to see how the action behaved. There were some fun moving pictures, so check it out in the Mathematica Files section of the webpage.

This was our example of a general philosophy: changing basis shows us how two algebraically distinct operators can have the same geometric action. With this in mind we gave the following

Definition 1.1. Two matrices A and B are said to be similar if there exists some invertible matrix S so that $A = SBS^{-1}$.

From our discussion yesterday, saying that two matrices are similar means that they have the same geometric action on $\mathbb{R}^n$, just with respect to different bases. If you’ve seen equivalence relations before, you might be interested to know that

Theorem 1.1. Similarity is an equivalence relation. This means that

• for any matrix A, A is similar to A;

• for any matrices A and B, if A is similar to B then B is similar to A;

• for any matrices A, B and C, if A is similar to B and B is similar to C, then A is similar to C.
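The notes state this theorem without proof. A short sketch of why each property holds, using only Definition 1.1, might look like the following. The name P for the second change-of-basis matrix is an assumption introduced here for illustration; it does not appear in the lecture.

```latex
\begin{align*}
% Reflexive: the identity matrix is invertible, so take S = I.
A &= I A I^{-1}. \\
% Symmetric: if A = S B S^{-1}, solve for B; the matrix S^{-1} is itself invertible.
B &= S^{-1} A S = (S^{-1}) A (S^{-1})^{-1}. \\
% Transitive: if A = S B S^{-1} and B = P C P^{-1}, substitute; SP is invertible.
A &= S (P C P^{-1}) S^{-1} = (SP) C (SP)^{-1}.
\end{align*}
```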

What this means is that similarity sorts the set of linear operators into a bunch of classes (one class for all matrices that are similar to one another). These classes then give us the linear operators which are qualitatively different.

2. Diagonalizability

Yesterday in class we said that if an operator T admits an eigenbasis for $\mathbb{R}^n$ (that is to say, if there exist vectors $\vec{v}_1, \dots, \vec{v}_n$ which form a basis of $\mathbb{R}^n$ and are eigenvectors for T with corresponding eigenvalues $\lambda_i$) then after changing basis to the eigenbasis, the B-matrix for T is diagonal (with diagonal entries $\lambda_i$). This motivates the following definition.

Definition 2.1. A matrix is said to be diagonalizable if it is similar to a diagonal matrix.

With this new definition, the statement we made yesterday translates to the following

Theorem 2.1. A matrix A is diagonalizable if and only if A admits an eigenbasis for $\mathbb{R}^n$.

aschultz@stanford.edu

http://math.stanford.edu/~aschultz/summer06/math103


Proof. We showed yesterday that if A admits an eigenbasis for $\mathbb{R}^n$, say $\vec{v}_1, \dots, \vec{v}_n$ with corresponding eigenvalues $\lambda_1, \dots, \lambda_n$, then

$$A = S \begin{pmatrix} \lambda_1 & & & \\ & \lambda_2 & & \\ & & \ddots & \\ & & & \lambda_n \end{pmatrix} S^{-1},$$

where of course S is the matrix whose columns are the vectors $\vec{v}_i$ (in order). This shows one direction.

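For the other direction, if we can write $A = SDS^{-1}$ with D diagonal, then the columns of S are the desired eigenbasis for A: they form a basis because S is invertible, and reading $AS = SD$ one column at a time shows that each column of S is an eigenvector of A whose eigenvalue is the corresponding diagonal entry of D.

□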
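To make the theorem concrete, here is a minimal sketch that checks one diagonalization exactly. The matrix A below is a hypothetical example chosen for illustration (it is not from the lecture), and the helper names `matmul` and `inverse_2x2` are likewise made up for this sketch. The eigenvectors were found by hand and are only verified by the code.

```python
from fractions import Fraction

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inverse_2x2(M):
    """Invert a 2x2 matrix exactly using the adjugate formula."""
    (p, q), (r, s) = M
    det = Fraction(p * s - q * r)
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[s / det, -q / det], [-r / det, p / det]]

# Hypothetical example matrix (an assumption, not taken from the notes).
A = [[4, 1], [2, 3]]

# Eigenvectors found by hand: A(1, 1) = 5(1, 1) and A(1, -2) = 2(1, -2).
# They are the columns of S, in the same order as the eigenvalues in D.
S = [[1, 1], [1, -2]]
D = [[5, 0], [0, 2]]

# Column-by-column eigenvector check: A S should equal S D.
columns_are_eigenvectors = matmul(A, S) == matmul(S, D)

# Change of basis back to standard coordinates: S D S^{-1} should equal A.
reconstructed = matmul(matmul(S, D), inverse_2x2(S))

print(columns_are_eigenvectors, reconstructed == A)
```

Exact fractions are used instead of floating point so that the equality tests are not affected by rounding.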

We can also rephrase diagonalizability in terms of geometric multiplicities.

Theorem 2.2. An n × n matrix A is diagonalizable if and only if the geometric multiplicities of the eigenvalues of A add up to n.

Proof. The geometric multiplicities of the eigenvalues of A add up to n if and only if A admits an eigenbasis, and we apply the previous result.

□

Corollary 2.3. If an n × n matrix A has n distinct real eigenvalues, then A is diagonalizable.

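Proof. Let $\lambda_1, \dots, \lambda_n$ be the eigenvalues of A. Since each has algebraic multiplicity 1, the geometric multiplicity of each must also be 1 (the geometric multiplicity of an eigenvalue is at least 1 and at most its algebraic multiplicity). This means that the sum of the geometric multiplicities of the eigenvalues of A is n, and we apply the previous theorem.

□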
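For contrast, here is a worked example of a matrix whose geometric multiplicities do not add up to n. The shear matrix below is a standard illustration chosen for this note; it is an assumption, not an example from the lecture.

```latex
\[
A = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix},
\qquad
\det(A - \lambda I_2) = (1 - \lambda)^2,
\qquad
\ker(A - I_2) = \ker \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}
= \operatorname{Span} \begin{pmatrix} 1 \\ 0 \end{pmatrix}.
\]
```

The only eigenvalue is 1, with algebraic multiplicity 2 but geometric multiplicity 1. Since 1 < 2, Theorem 2.2 says that this A is not diagonalizable. Corollary 2.3 does not apply here because A has only one distinct eigenvalue.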

Diagonalizable matrices are nice because their action is really simple: by changing basis, it just becomes an action which scales the coordinate vectors. These were the kinds of operators we hoped to find when we started talking about linear dynamical systems, and in fact we can now rephrase a result which we’ve been thinking about ever since we first talked about dynamical systems.

Theorem 2.4. Computing $A^n$ is easy when A is diagonalizable.

Proof. Let S be a matrix so that $A = SDS^{-1}$, where of course D is diagonal. This means that

$$A^n = \underbrace{(SDS^{-1})(SDS^{-1}) \cdots (SDS^{-1})(SDS^{-1})}_{n \text{ times}} = SD(S^{-1}S)D(S^{-1}S)D \cdots D(S^{-1}S)DS^{-1} = SD^nS^{-1}.$$

But since $D^n$ is easy to compute (because D is diagonal: just raise each diagonal entry to the nth power!), this means that $A^n$ is easy to compute.
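As a small illustration of the formula $A^n = SD^nS^{-1}$, the sketch below compares it with plain repeated multiplication. The matrix A, its eigenvector matrix S, and the function names are assumptions made for this example; none of them come from the lecture.

```python
from fractions import Fraction

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

# Hypothetical example: A has eigenvectors (1, 1) and (1, -1)
# with eigenvalues 3 and 1, so A = S D S^{-1} with D = diag(3, 1).
A = [[2, 1], [1, 2]]
S = [[1, 1], [1, -1]]
S_inv = [[Fraction(1, 2), Fraction(1, 2)],
         [Fraction(1, 2), Fraction(-1, 2)]]
eigenvalues = [3, 1]

def power_by_diagonalization(n):
    """Compute A^n as S D^n S^{-1}; D^n only needs two scalar powers."""
    Dn = [[eigenvalues[0] ** n, 0], [0, eigenvalues[1] ** n]]
    return matmul(matmul(S, Dn), S_inv)

def power_by_repeated_multiplication(n):
    """Compute A^n the slow way, multiplying by A a total of n times."""
    result = [[1, 0], [0, 1]]
    for _ in range(n):
        result = matmul(result, A)
    return result

print(power_by_diagonalization(5) == power_by_repeated_multiplication(5))
```

The diagonalization route needs only scalar powers and two matrix products no matter how large n is, which is the sense in which the computation is easy.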

3. Complex eigenvalues

The goal of this section is to show that if a 2 × 2 matrix A with real entries has eigenvalues $a \pm bi$ (with $b \neq 0$) then A is similar to the matrix

$$\begin{pmatrix} a & -b \\ b & a \end{pmatrix}.$$

Strangely enough, to prove this result we need to write some matrices with complex entries, even though this is a statement about matrices with real entries! Weird...

Here’s a warmup to the desired result.


Theorem 3.1. The matrix

$$T = \begin{pmatrix} a & -b \\ b & a \end{pmatrix}$$

is diagonalizable ‘over $\mathbb{C}$.’

Here when I write ‘over $\mathbb{C}$,’ I mean that when I write $T = SDS^{-1}$ with D a diagonal matrix, the entries of S and D are allowed to be complex (and not necessarily real).

Proof. We have seen before that the eigenvalues of T are $a \pm bi$. One can compute

$$\ker\bigl(T - (a + bi)I_2\bigr) = \ker \begin{pmatrix} -bi & -b \\ b & -bi \end{pmatrix} = \operatorname{Span} \begin{pmatrix} i \\ 1 \end{pmatrix}.$$

To see this, take the matrix in the middle of the equation and add $-i$ copies of row 1 into row 2 (and remember that $i^2 = -1$). Similarly one can show that $\begin{pmatrix} -i \\ 1 \end{pmatrix}$ is an eigenvector for $a - bi$. But this gives us an eigenbasis for our matrix (admittedly, with complex coordinates), and so our change of basis formula says that with $R = \begin{pmatrix} i & -i \\ 1 & 1 \end{pmatrix}$ we have

$$\begin{pmatrix} a & -b \\ b & a \end{pmatrix} = R \begin{pmatrix} a + bi & 0 \\ 0 & a - bi \end{pmatrix} R^{-1}.$$

□
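The complex eigenbasis can be sanity-checked numerically with Python's built-in complex numbers. This is only a sketch for one sample choice of a and b (the values 1 and 2 are assumptions picked for illustration). It checks $TR = RD$, which together with the invertibility of R is equivalent to $T = RDR^{-1}$.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

# Sample values (assumed for illustration; the proof needs b != 0).
a, b = 1, 2

T = [[a, -b], [b, a]]
# Columns of R are the eigenvectors (i, 1) and (-i, 1) from the proof.
R = [[1j, -1j], [1, 1]]
# Diagonal matrix of the eigenvalues a + bi and a - bi, in the same order.
D = [[a + b * 1j, 0], [0, a - b * 1j]]

# R is invertible exactly when its determinant is nonzero.
det_R = R[0][0] * R[1][1] - R[0][1] * R[1][0]

# T R = R D says each column of R is an eigenvector of T.
eigen_relation_holds = matmul(T, R) == matmul(R, D)

print(det_R != 0, eigen_relation_holds)
```

Small integer values keep the complex arithmetic exact, so the equality test is safe here; for general real a and b a tolerance-based comparison would be more appropriate.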
