Available online at www.sciencedirect.com

ScienceDirect

When is a loss a loss? Excitatory and inhibitory processes in loss-related decision-making

Ben Seymour1,2,3,4, Masaki Maruyama2,3 and Benedetto De Martino4

One of the puzzles in neuroeconomics is the inconsistent

pattern of brain response seen in the striatum during evaluation

of losses. In some studies striatal responses appear to

represent loss as a negative reward (BOLD deactivation), while

in others as positive punishment (BOLD activation). We argue

that these discrepancies can be explained by the existence of

two fundamentally different types of loss: excitatory losses

signaling the presence of substantive punishment, and

inhibitory losses signaling cessation or omission of reward. We

then map different theories of motivational opponency to loss-related decision-making, and highlight five distinct underlying computational processes. We suggest that this excitatory–inhibitory model of loss provides a neurobiological framework

for understanding reference dependence in behavioral

economics.

Addresses

1 Computational and Biological Learning Laboratory, Department of Engineering, University of Cambridge CB2 1PZ, United Kingdom

2 Center for Information and Neural Networks, National Institute for Information and Communications Technology, 1-4 Yamadaoka, Suita City, Osaka 565-0871, Japan

3 Immunology Frontier Research Center, Osaka University, 3-1 Yamadaoka, Suita, Osaka 565-0871, Japan

4 Department of Psychology, University of Cambridge, Downing Street, Cambridge CB2 3EB, United Kingdom

Corresponding author: Seymour, Ben (bjs49@cam.ac.uk)

Current Opinion in Behavioral Sciences 2015, 5:122–127

This review comes from a themed issue on Neuroeconomics

Edited by John P O’Doherty and Colin C Camerer

For a complete overview see the Issue and the Editorial

Available online 3rd October 2015

http://dx.doi.org/10.1016/j.cobeha.2015.09.003

2352-1546/© 2015 Elsevier Ltd. All rights reserved.

Introduction

Over the past decade a set of divergent observations have

emerged in human neuroimaging studies of monetary

loss. In studies of the receipt (or prospect) of financial

loss, neuroimaging responses sometimes exhibit deactivation in BOLD signal of striatal brain areas associated

with motivation and decision-making (caudate, putamen,

and nucleus accumbens) [1,2,3,4], or little change at all

[5]. This has often been seen as consistent with a primary


role of these regions in reward-related processing, and

these negative responses are usually seen in the same

regions showing activation to monetary gains. With

emerging evidence that striatal BOLD responses to reward were well described by prediction error activity in

the context of passive prediction tasks (Pavlovian learning), it was generally assumed that this activity represented a single reward-specific and putatively dopamine-related signal [6,7].
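As a rough illustration of what a prediction error signal of this kind looks like, the following Python sketch implements a simple Rescorla–Wagner-style update for a single cue. The function name, the learning rate, and the coding of gains as +1 and losses as -1 are assumptions made for illustration; they are not taken from the studies cited above.

```python
def prediction_errors(outcomes, alpha=0.2, v0=0.0):
    """Return (values, errors) for one cue under a Rescorla-Wagner-style update.

    outcomes: sequence of signed outcomes (assumed coding: +1 gain, -1 loss)
    alpha:    learning rate (illustrative value)
    v0:       initial expectation
    """
    v = v0
    values, errors = [], []
    for r in outcomes:
        delta = r - v          # prediction error: outcome minus expectation
        v = v + alpha * delta  # move the expectation toward the outcome
        values.append(v)
        errors.append(delta)
    return values, errors


# A cue repeatedly followed by a gain, and a cue repeatedly followed by a loss.
gain_values, gain_errors = prediction_errors([1.0] * 5)
loss_values, loss_errors = prediction_errors([-1.0] * 5)
```

Under a single reward-specific signal of this kind, a predicted loss simply produces the mirror image of the gain errors, which corresponds to the deactivation pattern described above.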

However, this theory suffered when other studies involving loss, and especially involving primary punishments

such as pain, revealed positive activation in the striatum,

in very similar regions to those that showed deactivations

to financial loss [8,9]. Furthermore, the pattern of activity

resembled a prediction error, just like a reward prediction error with the opposite sign. This suggested that either the

striatum was encoding a more complex signal than originally thought — perhaps some sort of selective salience

signal [10], or that there was a second system for encoding

aversive outcomes that comes online with physical, but

less so financial, punishment. Why financial loss might

less reliably activate this system was unclear, but one

could posit it might be related to the fact that physical

punishments are primary outcomes ‘consumed’ immediately, whereas money is a secondary outcome whose

real outcome is fulfilled at a later date. A more reliable

way to ‘activate’ the striatum to loss was introduced with

a clever design from Delgado and colleagues: they had

subjects begin the experiment with a task in which

subjects could earn a decent sized money pot. Then

in a second, seemingly unrelated experiment, they

underwent a loss-conditioning study, which revealed

positive activation to monetary loss [11]. This result, together with a more recent one [12], suggests that having earned the money on a previous task in some way renders its loss sufficient to reliably activate positive aversive coding.

This raises the question as to what makes a loss look

sometimes primarily like a negative reward, and at other

times like a positive punishment. This is important,

because if there are substantially different ways of representing losses in the brain, then the associated loss

behavior may have very different characteristics.

To make matters more complex, subsequent studies involving the capacity to make active choices over monetary

loss or pain, that is, reducing or avoiding punishment, did

www.sciencedirect.com

When is a loss a loss? Seymour, Maruyama and De Martino 123

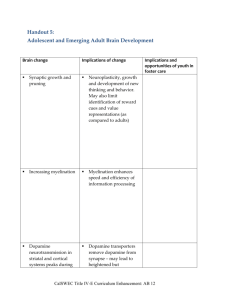

Animal learning theory provides a structured approach to

understanding the relationship between gains and losses,

and there is good evidence of the existence of two

separate motivational pathways for outcome prediction:

one governing rewards, and the other governing punishments [17]. In particular, accounts of interaction between

the two systems yield two distinct types of punishment:

excitatory, and inhibitory, depending on the context that

defines the nature of punishment. Accordingly, inhibitory

values emerge from two different instances for appetitiveaversive opponency: omission (Konorskian [18]) opponency, and offset (Solomon–Corbit [19]) opponency

(Figure 1).

Omission opponency describes the frustrative loss that

occurs when an expected reward does not occur. Here,

excitatory losses are due to the positive presence of a

punishment, and inhibitory losses due to absence of an

expected gain. A slightly different type of frustrative loss

occurs when a tonically presented reward terminates. In

this case, Solomon and Corbitt proposed the accrual of a

slow adaptive process from which acute changes were

compared. Both processes illustrate the clear distinction

between excitatory and inhibitory losses, with the inhibitory type being generated either comparison of neutral

outcome with an expectation of or tonic baseline level of

reward. This is exactly mirrored in the opposite valence:

inhibitory reward being evoked with the relief at the

termination or omission of punishment [20].

The existence of different types of loss offers an explanation into the pattern of brain responses seen above. In

most experiments, for ethical and practical reasons, loss is

operationalized by a reduction in the participant monetary compensation (future expected reward). This procedure would augment the inhibitory loss representation

and therefore tend toward a deactivation in striatal areas.

However, for primary punishments and financial outcomes that were already considered ‘owned’, we would

expect a dominant excitatory loss representation, and

activation of an aversive system observable in the striatum. In many situations, it may be that excitatory and

www.sciencedirect.com

Excitatory and Inhibitory Losses

excitatory

Lose $

(excitatory loss)

Win $

(excitatory reward)

inhibitory

Excitatory and inhibitory loss

Figure 1

Loss signal

Loss

omitted

Win signal

Win

omitted

(Konorskian relief)

(Konorskian frustration)

Losses

inhibitory

not fit either pattern simply. Here, for both money and

pain, striatal activity shows positive activation for avoidance actions and avoided outcomes [13,14]. Rather than

representing the magnitude of the expected punishment

(in probabilistic avoidance), it seemed to represent the

relative positive value of avoidance [15]. That is, activity

again looks like a reward signal — this time for actions,

with no consistent evidence of an aversive striatal system in

operation, even for painful outcomes. A positive aversive

signal is sometimes seen elsewhere, such as the anterior

insula cortex [16], but its contribution to decision making

was less clear.

Wins

Loss

omitted

(Solomon Corbit relief)

Win

omitted

(Solomon Corbit frustration)

Current Opinion in Behavioral Sciences

Excitatory and inhibitory processes underlying reward and

punishment. Excitatory values occur with the receipt, or prediction of

receipt, of a primary reward or punishment. Inhibitory values occur

with either the omission of an expected outcome (e.g. requiring, of

course, an expectation to be generated by some process, such as

Pavlovian Conditioning), or with the termination of a tonic or

repetitively received outcome.

inhibitory processes co-occur [21], and hence partially or

fully cancel out the subsequent fMRI BOLD response.
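The distinction between excitatory loss, omission-based inhibitory loss, and offset-based inhibitory loss can be sketched numerically. The Python code below is a toy illustration only: the function names, the unit outcome magnitudes, and the adaptation rate are assumptions, and the slowly adapting baseline is a deliberately simplified stand-in for the opponent process rather than a model taken from the cited work.

```python
def omission_signal(expected, received):
    """Omission (Konorskian-style) comparison: outcome relative to a learned expectation."""
    return received - expected


def offset_signal(outcomes, adapt_rate=0.1):
    """Offset (Solomon-Corbit-style) comparison: outcome relative to a slowly adapting baseline."""
    baseline = 0.0
    contrasts = []
    for x in outcomes:
        contrasts.append(x - baseline)           # acute change judged against the baseline
        baseline += adapt_rate * (x - baseline)  # baseline slowly tracks the tonic level
    return contrasts


# Excitatory loss: an unexpected punishment arrives (aversive channel, punishment units).
excitatory_loss = omission_signal(expected=0.0, received=1.0)

# Inhibitory loss by omission: an expected reward fails to arrive (appetitive channel).
frustration = omission_signal(expected=1.0, received=0.0)

# Inhibitory loss by offset: a tonic reward is delivered for 50 steps and then stops.
contrasts = offset_signal([1.0] * 50 + [0.0])
offset_frustration = contrasts[-1]
```

In this sketch the excitatory loss is a positive signal in an aversive channel, whereas both inhibitory losses are negative signals in an appetitive channel. Running the same functions on punishment magnitudes instead of reward magnitudes gives the mirrored case of relief.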

From loss prediction to decision-making

How, then, is loss-related computation related to decision-making? Clearly, what motivates choice in the context of any type of loss is a desire to reduce it, and this can be used to define loss or punishment. The Konorskian and Solomon–Corbit frameworks deal with passive (Pavlovian) predictions, but are conventionally thought to govern two distinct types of aversive decision: avoidance and escape [22,23]. The control of escape and avoidance may

be different, because the nature by which information

about outcomes is garnered is different, but in both cases

it is the absence of punishment that motivates behavior.

The paradox created by the ability of ‘nothing’ to act as an

incentive and reinforce actions has stimulated considerable research and debate [24]. Two putative solutions to

the avoidance problem are provided by inhibitory

rewards: in the one case (two-factor theory), behavior is

driven by the escape from fear (i.e. offset relief) elicited

by any signal that predicts punishment [25]. In a second

case (safety signal hypothesis), behavior is driven by the


(conditioned) reinforcement provided by the inhibitory

outcome state that signals the absence of punishment

(omission relief) [26,27,28]. There is good evidence for

both these processes [29], but they raise a problem: how can they sustain behavior after repeated avoidance success, when the inhibitory learning process should extinguish? One possibility is that a simple habit-based system

might take over, which stamps in the repetitive behavior

as if it were a reward [30].
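The extinction problem raised above can be made concrete with a small Python sketch. It assumes, purely for illustration, that cue-elicited fear follows a Rescorla–Wagner-style update, that the relief available on a successfully avoided trial equals the fear present before the action, and that the starting fear and learning rate take the arbitrary values shown; none of these choices comes from the cited studies.

```python
def relief_over_successful_avoidance(n_trials, fear0=1.0, alpha=0.3):
    """Relief available on each of n_trials consecutive successful avoidance trials."""
    fear = fear0
    relief = []
    for _ in range(n_trials):
        relief.append(fear)            # relief = fear removed when the punishment is omitted
        fear += alpha * (0.0 - fear)   # no punishment arrives, so fear extinguishes
    return relief


relief = relief_over_successful_avoidance(20)
```

Because the relief term shrinks toward zero as avoidance keeps succeeding, a learner driven only by this term would lose its reinforcement, which is why an additional habit-like process is an attractive candidate for maintaining the behavior.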

However, these theories struggle to account for avoidance

in the absence of any signals (‘free-operant’ avoidance),

such as when a minimum baseline rate of action execution

is required to ensure no punishments are presented [31]

(e.g. pressing a lever every 20 s to ensure no electric shock

is delivered). This has led to theories of cognitive avoidance that appeal to the remembered representation of

avoided outcomes in the brain [32]. Similar to ‘model-based’ control for reward-based decision-making [33],

this type of behavior relies on some sort of internal model

of the environmental structure and contingencies.

Together, this illustrates the fundamental difference

between reward-based and avoidance-based reinforcement, with avoidance being driven by relief processes

generated through inhibitory interactions, and not

through a primary excitatory process. However, this

should only be manifest in avoidance tasks in which

stimulus driven learning is possible. In explicit tasks

(verbal or written), in which learning is not required or

possible, we would expect simply a model-based system

evaluating the best actions. In addition, during either type

of task, we would expect a reward-like habit system to

take control as soon as a clear pattern of avoidance

becomes successful. Importantly, therefore, in the case

of learned or explicit loss avoidance, one would predict an

overall similar reward-dominant pattern of brain activity

for both reward acquisition and loss avoidance — as is

typically seen.

However, the difference between reward seeking and avoidance should be observable early in learning, when we would expect relief-driven reinforcement to be represented as a negative punishment: a striatal deactivation preceded by aversive activation induced by the pre-action state, as occurs for negative Pavlovian prediction errors for excitatory losses. To our knowledge, however, previous studies have not looked specifically at the earliest versus later stages of learning. One reason may be the methodological problems of doing extended training in fMRI studies, with confounding effects of time and attention.

In the case of inhibitory losses, a plausible prediction is that an aversively motivated avoidance system is not required at all, since avoidance would require inhibition of inhibition (e.g. an inhibitory safety signal reflecting the absence of a loss that itself signaled the absence of reward). Therefore, inhibitory loss avoidance problems could be solved with just a reward system, in contrast to the complex Pavlovian-instrumental interactions required for excitatory loss avoidance.

A further difference comes from the responses directly

associated with the (excitatory or inhibitory) loss prediction itself. In particular, excitatory losses would be

expected to evoke much stronger innate responses (‘species-specific defence responses’ (SSDRs)) which directly

interfere with behavior [34]. In animal studies of primary

punishments, this direct Pavlovian response can exert

powerful control of behavior — sometimes called ‘Pavlovian warping’ [35,36]. Again, however, we would only

expect this excitatory Pavlovian response (a positive

striatal signal) early in avoidance behavior, because it

would extinguish as successful avoidance becomes the

norm [37].

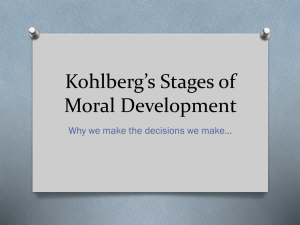

In summary, based on good evidence primarily from

animal studies, it seems probably that loss-related decision-making is under the control of at least five separate

mechanistic processes (Figure 2). As a result, the pattern

of behavior and accompanying neural activity depends

critically on a number of factors, in particular the level of

controllability over loss [38], the amount of experience,

Figure 2

Architecture of excitatory loss avoidance

Intemal model

Pre-action

state

Loss

Choice

mechanism

Innate response

se

(SSDR)

Conditioned

inhibition generates

safety signal

interference

SR habitization

No Loss

Offset relief following escape from preaction state induced fear

Current Opinion in Behavioral Sciences

The architecture of loss avoidance. A set of coordinated processes

mediate the acquisition and maintenance of avoidance learning, and

similarly, any decision over options involving choosing the lesser of

two punishments. The processes include first, an innate Pavlovian

response to anticipated loss (including species specific defence

reactions (‘SSDR’s), second, escape from fear associated with the

loss predictive pre-action state, third, conditioned reinforcement of a

safety signal, generated through conditioned reinforcement of the relief

state, fourth, goal-directed (model-based) internal model and cognitive

decision system, and fifth, habitization of the avoided action, after

prolonged experience.

www.sciencedirect.com

When is a loss a loss? Seymour, Maruyama and De Martino 125

the presence of cues signally the requirement to avoid,

the schedule of loss delivery, the tonic level of reward or

loss, conditioned and explicitly expected outcomes, the

presence of signals indicating the success of behavior, and

the nature of the loss itself (inhibitory or excitatory).

However, quite which systems are active in any task

might not readily apparent from basic analysis of neuroimaging data.

Relation to economic theories of loss behavior

In the standard formulation of Expected Utility Theory [39], agents maximize their utility over a concave utility-of-wealth function. In this framework losses are just lower levels of (positive) wealth. As the local curvature (concavity) of the utility function increases, agents behave in a more risk-averse manner. Importantly, Prospect Theory [40] introduced a different functional form of utility in which losses are conceptually different from gains, since they are evaluated relative to a reference point. Notably, ventral striatum computations have been shown to be sensitive to manipulation of the reference point through market transactions [41]. Around the reference point, the Prospect Theory utility function is S-shaped and asymmetrical. The S-shape reflects concavity for potential gains (risk-averse behavior for prospective gains) and convexity for potential losses (risk-seeking behavior for prospective losses). The asymmetry relative to the reference point reflects the fact that the function is steeper in the loss domain. The magnitude of this asymmetry is characterized by a parameter called lambda (λ) that neatly accounts for loss-averse behavior (the widespread evidence that people strongly prefer avoiding losses to acquiring gains). Therefore, in Prospect Theory, how the reference point is set is crucial to distinguishing a gain from a loss.
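To make the shape of such a value function concrete, the following Python sketch uses a power-function parameterization that is common in the Prospect Theory literature. The functional form, the function name, and the parameter values (curvature 0.88 in both domains, lambda 2.25) are assumptions chosen for illustration; the text above does not commit to any particular form.

```python
def prospect_value(x, alpha=0.88, beta=0.88, lam=2.25):
    """Subjective value of an outcome x measured relative to the reference point (x = 0).

    alpha, beta: curvature for gains and losses (illustrative values)
    lam:         loss-aversion parameter lambda (illustrative value)
    """
    if x >= 0:
        return x ** alpha              # concave for gains
    return -lam * ((-x) ** beta)       # convex and steeper for losses


gain_100 = prospect_value(100.0)
loss_100 = prospect_value(-100.0)
loss_to_gain_ratio = -loss_100 / gain_100
```

With equal curvature in the two domains, the ratio of the loss value to the gain value for the same magnitude is lambda itself, which is one way to read the parameter as a measure of loss aversion.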

However, how the reference point should be derived is unclear. Tversky and Kahneman originally proposed that the reference point is set by the status quo, a subject’s wealth level at the time of each decision. Other proposed alternatives are the mean of the chosen lottery [42] or a lagged status quo [43], which predicts the willingness to take unfavorable risks to regain the previous status quo. A more recent proposal from Koszegi and Rabin [44,45] suggests that a subject’s expectations (and not the status quo) shape the reference point and determine what an agent would perceive as a loss. In this model the decision-maker maximizes a linear combination of a consumption utility m(w), the riskless intrinsic consumption utility associated with a given level of wealth w, and a reference-dependent term that compares m(w) with m(r), the utility associated with a given reference level of wealth r:

\[ u(w \mid r) = m(w) + \mu\bigl(m(w) - m(r)\bigr) \]

The reference-dependent component of this account of utility, $\mu(m(w) - m(r))$, strongly resonates with the concept of inhibitory loss that we have highlighted here. For example, a CEO who expects a profit for her company of 1.3 million dollars (for the current year) will perceive a profit of one million as a loss, even if the company’s profits in previous years were below one million. Similarly, if the same CEO expects that the company will produce a net loss of one million at the end of the year, she will perceive a loss of half a million as a gain. Critically, in this framework the final utility is not only a function of the reference-dependent component but also a function of the consumption utility $m(w)$, that is, the intrinsic utility for a given level of wealth $w$. This other component of utility could be mapped onto what we have described here as an excitatory loss, and would elicit an active aversive representation since it will impact directly on the wealth of the agent.
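The CEO example can be worked through numerically. The Python sketch below assumes a linear consumption utility and a piecewise-linear comparison function in which shortfalls relative to the reference are weighted more heavily than surpluses; the function names and the parameters `eta` and `lam` are illustrative assumptions, and amounts are expressed in millions of dollars.

```python
def gain_loss(z, eta=1.0, lam=2.0):
    """Piecewise-linear comparison term: shortfalls (z < 0) are weighted lam times more."""
    return eta * z if z >= 0 else eta * lam * z


def reference_dependent_utility(w, r, m=lambda x: x):
    """Return (consumption utility, reference-dependent term, total utility) for wealth w and reference r."""
    consumption = m(w)
    comparison = gain_loss(m(w) - m(r))
    return consumption, comparison, consumption + comparison


# Expected profit 1.3, realized profit 1.0: positive consumption utility, negative comparison term.
profit_case = reference_dependent_utility(w=1.0, r=1.3)

# Expected loss 1.0, realized loss 0.5: negative consumption utility, positive comparison term.
loss_case = reference_dependent_utility(w=-0.5, r=-1.0)
```

In the first case the outcome is a gain in wealth but is experienced as a loss relative to expectation; in the second the reverse holds. Separating the two terms in the return value mirrors the proposal that the two components have segregated representations.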

Therefore an intriguing possibility is that the discrepancies observed in previous studies arise because the manipulations used have impacted more or less on one of the two components that give rise to the final utility, components that (as we propose here) have segregated computational representations in the brain. One can imagine, for example, that the method used in most experiments to generate losses, reducing the participant’s monetary compensation by small amounts, might impact significantly on the reference-dependent component of utility (the participant’s expectation prior to the beginning of the experiment) but might have a negligible impact on the overall level of the participant’s wealth-dependent utility. Further studies should directly and orthogonally manipulate these two components of utility to test this hypothesis empirically.

Finally, an interesting view has recently emerged in behavioral economics suggesting that reference-dependent behavior arises from modulation of attentional resources. In this framework, loss-averse behavior arises naturally if one assumes that a loss (a reduction in consumption) has a stronger impact on an attention-biased utility than a corresponding gain [46], without assuming an asymmetry in the value function. Consistent with this hypothesis, it has recently been shown that losses have a distinct effect on attention but do not lead to an asymmetry in subjective value [47]. However, the exact role played by attention in shaping responses to losses is still unclear and further empirical investigation is required. Within the framework proposed here, it is tempting to suggest that excitatory losses should be more salient than inhibitory losses and therefore differentially engage the decision-maker’s attentional resources.

Conclusions and predictions

Loss-related behavior is both neurobiologically complex and fundamentally different from reward-related behavior, a fact that is sometimes overlooked. Importantly, the experimental conditions under which loss behavior is studied critically determine the recruitment of different brain systems. Here, we propose that there are two types of loss, excitatory and inhibitory, and that their relative representation determines the nature of the brain response in the striatum during passive receipt of loss and, together with the recruitment of inhibitory rewards, determines to some extent the nature of loss-related decision-making (avoidance).

It is important to note that other structures aside from the

striatum are also strongly implicated in the representation

and control over losses, in particular the amygdala and

insula cortex. The amygdala also represents distinct integrated reward and loss signals [48,49], but is typically a

little harder to characterise in imaging studies because of its

smaller size and susceptibility to artifact. The insula

cortex appears to have a more complex role in both

value-sensitive functions and ‘interoceptive’ sensory processing [50], making interpretation even more difficult.

Our model makes several testable predictions. First, in the case of passive prediction of simultaneous monetary reward and loss, the striatum is known to code a net reward signal. But for simultaneous pain and reward, which evoke both positive reward and positive aversive systems, we would expect an amplified signal, even though the net value would be close to zero.

Second, in purely aversive contexts in which subjects

genuinely felt they could only lose their own money

during an experiment, gains of money should be represented by negative responses of a dominant aversive

system in striatum.

Third, during signaled avoidance of excitatory but not inhibitory losses (and not during free-operant avoidance), we would expect a positive striatal loss signal during very early learning that extinguishes along with cue-driven Pavlovian responses, and switches to become a positive signal as successful avoidance is learned.

Conflict of interest statement

All authors declare that we have no conflicts of interest.

Acknowledgements

National Institute for Information and Communications Technology of Japan, Japanese Society for the Promotion of Science, The Wellcome Trust (UK).

References and recommended reading

Papers of particular interest, published within the period of review, have been highlighted as:

of special interest

of outstanding interest

1. Tom SM et al.: The neural basis of loss aversion in decision-making under risk. Science 2007, 315:515-518.
An important article that investigates for the first time the neural underpinning of loss aversion, showing a link between deactivation for losses in striatum and the degree of behavioural loss aversion.

2. Cooper JC, Knutson B: Valence and salience contribute to nucleus accumbens activation. Neuroimage 2008, 39:538-547.

3. Delgado MR et al.: Tracking the hemodynamic responses to reward and punishment in the striatum. J Neurophysiol 2000, 84:3072-3077.

4. Bartra O, McGuire JT, Kable JW: The valuation system: a coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. Neuroimage 2013, 76:412-427.
A comprehensive meta-analysis of studies of value computation in human neuroimaging.

5. Yacubian J et al.: Dissociable systems for gain- and loss-related value predictions and errors of prediction in the human brain. J Neurosci 2006, 26:9530-9537.

6. O’Doherty JP et al.: Temporal difference models and reward-related learning in the human brain. Neuron 2003, 38:329-337.
Pioneering study that introduced computational model-based fMRI analysis, applied here to the study of reward and value.

7. O’Doherty J et al.: Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science 2004, 304:452-454.

8. Jensen J et al.: Direct activation of the ventral striatum in anticipation of aversive stimuli. Neuron 2003, 40:1251-1257.

9. Seymour B et al.: Temporal difference models describe higher-order learning in humans. Nature 2004, 429:664-667.

10. Zink CF et al.: Human striatal response to salient nonrewarding stimuli. J Neurosci 2003, 23:8092-8097.

11. Delgado MR et al.: The role of the striatum in aversive learning and aversive prediction errors. Philos Trans R Soc B Biol Sci 2008, 363:3787-3800.
This study implements a novel experimental manipulation in which subjects are required to ‘earn’ in an unrelated task the endowment used in the loss task. This work shows positive activation for losses in the striatum.

12. Canessa N et al.: The functional and structural neural basis of individual differences in loss aversion. J Neurosci 2013, 33:14307-14317.

13. Pessiglione M et al.: Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature 2006, 442:1042-1045.

14. Kim H, Shimojo S, O’Doherty JP: Is avoiding an aversive outcome rewarding? Neural substrates of avoidance learning in the human brain. PLoS Biol 2006, 4:e233.

15. Seymour B et al.: Serotonin selectively modulates reward value in human decision-making. J Neurosci 2012, 32:5833-5842.

16. Tanaka SC et al.: Neural mechanisms of gain–loss asymmetry in temporal discounting. J Neurosci 2014, 34:5595-5602.

17. Dickinson A, Dearing MF: Inhibitory processes. Mechanisms of Learning and Motivation: A Memorial Volume to Jerzy Konorski. 2014:203.

18. Konorski J: An Interdisciplinary Approach. Chicago: Chicago University Press; 1967.

19. Solomon RL, Corbit JD: An opponent-process theory of motivation: I. Temporal dynamics of affect. Psychol Rev 1974, 81:119.

20. Seymour B et al.: Opponent appetitive-aversive neural processes underlie predictive learning of pain relief. Nat Neurosci 2005, 8:1234-1240.

21. Seymour B et al.: Differential encoding of losses and gains in the human striatum. J Neurosci 2007, 27:4826-4831.

22. Mackintosh NJ: The Psychology of Animal Learning. Academic Press; 1974.

23. Bolles RC: Avoidance and escape learning: simultaneous acquisition of different responses. J Comp Physiol Psychol 1969, 68:355.

24. Denrell J, March JG: Adaptation as information restriction: the hot stove effect. Org Sci 2001, 12:523-538.

25. Mowrer O: Learning Theory and Behaviour. New York: Wiley; 1960.

26. Dinsmoor JA: Stimuli inevitably generated by behavior that avoids electric shock are inherently reinforcing. J Exp Anal Behav 2001, 75:311-333.
In this article it is possible to find an extended debate about the control of avoidance.

27. Seligman MEP, Binik YM: The Safety Signal Hypothesis. Operant–Pavlovian Interactions. NJ: Erlbaum Hillsdale; 1977, 165-187.

28. Fernando ABP et al.: Safety signals as instrumental reinforcers during free-operant avoidance. Learn Mem 2014, 21:488-497.

29. Gillan CM, Urcelay GP, Robbins TW: An associative account of avoidance. In Cognitive Neuroscience of Associative Learning. Edited by Honey R, Murphy RA. Wiley & Sons; 2015.

30. Gillan CM et al.: Enhanced avoidance habits in obsessive–compulsive disorder. Biol Psychiatry 2014, 75:631-638.

31. Hineline PN: Negative reinforcement and avoidance. In Handbook of Operant Behavior. Edited by Honig WK, Staddon JER. New York: Prentice-Hall; 1977.

32. Seligman ME, Johnston JC: A cognitive theory of avoidance learning. In Contemporary Approaches to Conditioning and Learning. Edited by Lumsden MFJ, Barry D. Oxford, England: V. H. Winston & Sons; 1973. 321 pp.

33. Dayan P, Niv Y: Reinforcement learning: the good, the bad and the ugly. Curr Opin Neurobiol 2008, 18:185-196.
A thought-provoking review that highlights successes and limitations of the reinforcement learning framework in modern neuroscience.

34. Bolles RC: Species-specific defense reactions and avoidance learning. Psychol Rev 1970, 77:32.

35. Dayan P et al.: The misbehavior of value and the discipline of the will. Neural Netw 2006, 19:1153-1160.

36. Dayan P, Seymour B: Values and actions in aversion. Neuroeconomics 2008:175-191.

37. Starr MD, Mineka S: Determinants of fear over the course of avoidance learning. Learn Motiv 1977, 8:332-350.

38. Leotti LA, Delgado MR: The value of exercising control over monetary gains and losses. Psychol Sci 2014, 25:596-604.

39. Von Neumann J, Morgenstern O: Theory of Games and Economic Behavior. 60th Anniversary Commemorative Edn. Princeton University Press; 2007.

40. Tversky A, Kahneman D: The framing of decisions and the psychology of choice. Science 1981, 211:453-458.

41. De Martino B, Kumaran D, Holt B, Dolan RJ: The neurobiology of reference-dependent value computations. J Neurosci 2009, 29:3833.

42. Kahneman D: Reference points, anchors, norms, and mixed feelings. Organ Behav Hum Dec 1992, 51:296-312.

43. Thaler RH, Johnson EJ: Gambling with the house money and trying to break even: the effects of prior outcomes on risky choice. Manage Sci 1990, 36:643-660.

44. Koszegi B, Rabin M: A model of reference-dependent preferences. Q J Econ 2006, 121:1133-1165.
Seminal paper in behavioral economics that introduces a novel reference-dependent model of preference and loss aversion in which subjects’ expectations play a key role in determining a reference point.

45. Koszegi B, Rabin M: Reference-dependent risk attitudes. Am Econ Rev 2007, 97:1047-1073.

46. Sudeep B, Golman R: Attention and reference dependence. Working Paper. 2013.

47. Eldad Y, Hochman G: Losses as modulators of attention: review and analysis of the unique effects of losses over gains. Psychol Bull 2013, 139:497.

48. Paton JJ, Belova MA, Morrison SE, Salzman CD: The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature 2006, 439:865-870.
Neurophysiological data from primates clearly demonstrating a variety of aversive and reward-based firing patterns in amygdala neurons.

49. Seymour B, Dolan R: Emotion, decision making, and the amygdala. Neuron 2008, 58:662-671.

50. Craig AD: How do you feel — now? The anterior insula and human awareness. Nat Rev Neurosci 2009, 10:59-70.
Highly influential (and occasionally controversial) theory on the function of insula cortex that attempts to account for the plurality of animal and human findings relating to the insula.