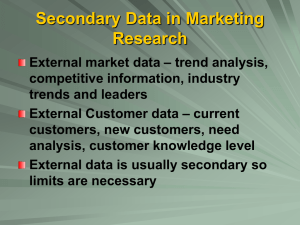

Marketing Research - University of Northern Iowa
