Robust Regression by Boosting the Median

advertisement

Robust Regression by Boosting the Median ?

Balázs Kégl1

Department of Computer Science and Operations Research

University of Montreal,

CP 6128 succ. Centre-Ville,

Montr´

eal, Canada H3C 3J7

kegl@iro.umontreal.ca

Abstract. Most boosting regression algorithms use the weighted average of base

regressors as their final regressor. In this paper we analyze the choice of the

weighted median. We propose a general boosting algorithm based on this approach. We prove boosting-type convergence of the algorithm and give clear conditions for the convergence of the robust training error. The algorithm recovers

A DA B OOST and A DA B OOST % as special cases. For boosting confidence-rated

predictions, it leads to a new approach that outputs a different decision and interprets robustness in a different manner than the approach based on the weighted

average. In the general, non-binary case we suggest practical strategies based on

the analysis of the algorithm and experiments.

1 Introduction

Most boosting algorithms designed for regression use the weighted average of the base

regressors as their final regressor (e.g., [5, 8, 14]). Although these algorithms have several theoretical and practical advantages, in general they are not natural extensions of

A DA B OOST in the sense that they do not recover A DA B OOST as a special case. The

main focus of this paper is the analysis of M ED B OOST, a generalization of A DA B OOST

that uses the weighted median as the final regressor.

Although average-type boosting received more attention in the regression domain,

the idea of using the weighted median as the final regressor is not new. Freund [6]

briefly mentions it and proves a special case of the main theorem of this paper. The

A DA B OOST.R algorithm of Freund and Schapire [7] returns the weighted median but

the response space is restricted to [0, 1] and the parameter updating steps are rather complicated. Drucker [4] also uses the weighted median of the base regressors as the final

regressor but the parameter updates are heuristic and the convergence of the method is

not analyzed. Bertoni et al. [2] consider an algorithm similar to M ED B OOST with response space [0, 1], and prove a convergence theorem in that special case that is weaker

than our result by a factor of two. Avnimelech and Intrator [1] construct triplets of weak

learners and show that the median of the three regressors has a smaller error than the

individual regressors. The idea of using the weighted median has recently appeared in

the context of bagging under the name of “bragging” [3].

?

This research was supported in part by the Natural Sciences and Engineering Research Council

(NSERC) of Canada.

The main result of this paper is the proof of algorithmic convergence of M ED B OOST.

The theorem also gives clear conditions for the convergence of the robust training error. The algorithm synthesizes several versions of boosting for binary classification. In

particular, it recovers A DA B OOST and the marginal boosting algorithm A DA B OOST %

of Rätsch and Warmuth [10] as special cases. For boosting confidence-rated predictions

it leads to a strategy different from Schapire and Singer’s approach [13]. In particular,

M ED B OOST outputs a different decision and interprets robustness in a different manner

than the approach based on the weighted average.

In the general, non-binary case we suggest practical strategies based on the analysis of the algorithm. We show that the algorithm provides a clear criteria for growing

regression trees for base regressors. The analysis of the algorithm also suggests strategies for controlling the capacity of the base regressors and the final regressor. We also

propose an approach to use window base regressors that can abstain. Learning curves

obtained from experiments with this latter model show that M ED B OOST in regression

behaves very similarly to A DA B OOST in classification.

The rest of the paper is organized as follows. In Section 2 we describe the algorithm

and state the result on the algorithmic convergence. In Section 3 we show the relation

between M ED B OOST and other boosting algorithms in the special case of binary classification. In Section 4 we analyse M ED B OOST in the general, non-binary case. Finally

we present some experimental results in Section 5 and draw conclusions in Section 6.

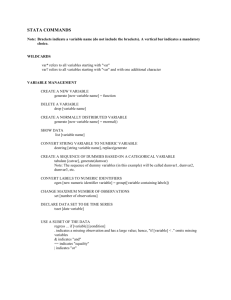

2 The M ED B OOST algorithm and the convergence result

The algorithm (Figure 1) basically follows the lines of A DA B OOST. The main difference is that it returns the weighted median of the base regressors rather than their

weighted average, which is consistent with A DA B OOST in the binary classification as

shown in Section 3.1. Other differences come from the subtleties associated with the

general case.

For the formal description, let the training data be Dn = (x1 , y1 ), . . . , (xn , yn )

where data points (xi , yi ) are from the set Rd × R. The algorithm maintains a weight

(t) (t)

distribution w(t) = w1 , . . . , wn over the data points. The weights are initialized

uniformly in line 1, and are updated in each iteration in line 12. We suppose that we are

given a base learner algorithm BASE(Dn , w) that, in each iteration t, returns a base

regressor h(t) coming from a subset of H = {h : Rd 7→P

R}. In general, the baselearner

n

should attempt to minimize the average weighted cost i=1 wi C h(t) (xi ), yi on the

1

0

training data , where C (y, y ) is an -tube loss function satisfying

C (y, y 0 ) ≥ I{|y−y0 |>} ,

(1)

where the indicator function I{A} is 1 if its argument A is true and 0 otherwise. The

most often we will consider two cost functions that satisfy this condition, the (0 − 1)

cost function

C(0−1) (y, y 0 ) = I{|y−y0 |>} ,

(2)

1

If the base learner cannot handle weighted data, we can, as usual, resample using the weight

distribution.

2

M ED B OOST(Dn , C (y 0 , y), BASE(Dn , w), γ, T )

1

w ← (1/n, . . . , 1/n)

2

for t ← 1 to T

3

h(t) ← BASE(Dn , w)

4

for i ← 1 to n

. attempt to minimize

n

X

`

´

wi C h(t) (xi ), yi

i=1

5

6

α(t)

`

´

θi ← 1 − 2C h(t) (xi ), yi

n

X

(t)

← arg min eγα

wi e−αθi

α

7

if α

(t)

9

i=1

=∞

. θi ≥ γ for all i = 1, . . . , n

return f

8

. base rewards

(t)

(·) = medα (h(·))

if α(t) < 0

. equivalent to

n

X

(t)

wi θi < γ

i=1

return f (t−1) (·) = medα (h(·))

10

11

for i ← 1 to n

12

wi

13

(t+1)

return f

(T )

(t)

← w i Pn

exp(−α(t) θi )

(t)

j=1 wj

exp(−α(t) θ

j)

(t)

θi )

(t) exp(−α

(t)

Z

= wi

(·) = medα (h(·))

Fig. 1. The pseudocode of the M ED B OOST algorithm. Dn is the training data, C (y 0 , y) ≥

I{|y−y0 |>} is the cost function, BASE(Dn , w) is the base regression algorithm that attempts

` (t)

´

P

(xi ), yi , γ is the robustness parameter, and T is

to minimize the weighted cost n

i=1 wi C h

the number of iterations.

and the L1 cost function

1

|y − y 0 |.

(3)

To emphasize the relation to binary classification and to simplify the notation in Figure 1

(t)

and in Theorem 1 below, we define the base rewards θi for each training point (xi , yi ),

(t)

i = 1, . . . , n, and base regressor h , t = 1, . . . , T , as

C(1) (y, y 0 ) =

(t)

θi = 1 − 2C (h(t) (xi ), yi ).

(4)

Note that by condition (1) on the cost function, the base rewards are upper bounded by

(

1

if h(t) (xi ) − yi ≤ ,

(t)

θi ≤

(5)

−1 otherwise.

After computing the base rewards in line 5, the algorithm sets the weight α (t) of the

base regressor h(t) to the value that minimizes

E (t) (α) = eγα

n

X

i=1

3

(t)

wi e−αθi .

If all base rewards are larger than γ, then α(t) = ∞ and E (t) (α(t) ) = 0, so the algorithm

returns the actual regressor (line 8). Intuitively, this means that the capacity of the set of

Pn

(t)

base regressors is too large. If α(t) < 0, or equivalently2 , if i=1 wi θi < γ, the algorithm returns the weighted median of the base regressors up to the last iteration (line 10).

Intuitively, this means that the capacity of the set of base regressors is too small, so we

cannot find a new base regressor that would decrease the training error. In general,

α(t) can be found easily by line-search because of the convexity of E (t) (α). In several

special cases, α(t) can be computed analytically. Note that

Pn in practice, BASE(Dn , w)

does not have to actually minimize the weighted cost3 i=1 wi C h(t) (xi ), yi . The

algorithm can continue with any base regressor for which α(t) > 0.

In lines 8, 10, or 13, the algorithm returns the weighted median of the base regressors. For the analysis of the algorithm, we formally define the final regressor in a more

(T )

(T )

- and 1−γ

-quantiles,

general manner. Let fγ+ (x) and fγ− (x) be the weighted 1+γ

2

2

respectively, of the base regressors h(1) (x), . . . , h(T ) (x) with respective weights

α(1) , . . . , α(T ) . Formally, for 0 ≤ γ < 1, let

(

)

PT

(t)

1−γ

(T )

t=1 α I{h(j) (x)<h(t) (x)}

(j)

fγ+ (x) = min h (x) :

,

(6)

<

PT

(t)

j

2

t=1 α

(

)

PT

(t)

1−γ

(T )

t=1 α I{h(j) (x)>h(t) (x)}

(j)

fγ− (x) = max h (x) :

.

(7)

<

PT

(t)

j

2

t=1 α

Then the weighted median is defined as

(T )

f (T ) (·) = medα (h(·)) = f0+ (·).

(8)

For the analysis of the robust error, we define the γ-robust prediction ybi (γ) as the fur(T )

(T )

thest of the two quantiles fγ+ (xi ) and fγ− (xi ) from the real response yi . Formally,

for i = 1, . . . , n, we let

( (T )

(T )

(T )

fγ+ (xi ) if fγ+ (xi ) − yi ≥ fγ− (xi ) − yi ,

(9)

ybi (γ) =

(T )

fγ− (xi ) otherwise.

With this notation, the prediction at xi is ybi = ybi (0) = f (T ) (xi ).

The main result of the paper analyzes the relative frequency of training points on

which the γ-robust prediction ybi (γ) is not -precise, that is, ybi (γ) has a larger L1 error

than . Formally, let the γ-robust training error of f (T ) defined4 as

n

L(γ) (f (T ) ) =

2

3

4

1X

I{|byi (γ)−yi |>} .

n i=1

(10)

Since E (t) (α) is convex and E (t) (0) = 1, α(t) < 0 is equivalent to E (t)0 (0) = γ −

Pn

(t)

i=1 wi θi > 0.

Equivalent to minimizing E (t)0 (0), which is consistent to Mason et al.’s gradient descent approach in the function space [9].

For the sake of simplicity, in the notation we suppress the fact that L(γ) depends on the whole

sequence of base regressors and weights, not only on the final regressor f (T ) .

4

If γ = 0, L(0) (f (T ) ) gives the relative frequency of training points on which the regressor f (T ) has a larger L1 error than . If we have equality in (1), this is exactly the

average cost of the regressor f (T ) on the training data. A small value for L(0) (f (T ) ) indicates that the regressor predicts most of the training points with -precision, whereas

a small value for L(γ) (f (T ) ) suggests that the prediction is not only precise but also

robust in the sense that a small perturbation of the base regressors and their weights

will not increase L(0) (f (T ) ).

The following theorem upper bounds the γ-robust training error L(γ) of the regressor f (T ) output by M ED B OOST.

Theorem 1. Let L(γ) (f (T ) ) defined as in (10) and suppose that condition (1) holds for

(t)

(t)

the cost function C (·, ·). Define the base rewards θi as in (4), let wi be the weight

of training point xi after the tth iteration (updated in line 12 in Figure 1), and let α(t)

be the weight of the base regressor h(t) (·) (computed in line 6 in Figure 1). Then

L(γ) (f (T ) ) ≤

T

Y

E (t) (α(t) ) =

T

Y

eγα

t=1

t=1

(t)

n

X

(t)

wi e−α

(t) (t)

θi

.

(11)

i=1

The proof (see Appendix) is based on the observation that if the median of the

base regressors goes further than from the real response yi at training point xi , then

most of the base regressors must also be far from yi , giving small base rewards to this

point. Then the proof follows the proof of Theorem 5 in [12] by exponentially bounding

the step function. Note that the theorem implicitly appears in [6] for γ = 0 and for

the (0 − 1) cost function (2). A weaker result5 with an explicit proof in the case of

y, h(t) (x) ∈ [0, 1] and γ = 0 can be found in [2].

Note also that since E (t) (α) is convex and E (t) (0) = 1, a positive α(t) means that

minα E (t) (α) = E (t) (α(t) ) < 1, so the condition in line 9 in Figure 1 guarantees that

the the upper bound of (11) decreases in each step.

3 Binary classification as a special case

In this section we show that, in a certain sense, M ED B OOST is a natural extension of the

original A DA B OOST algorithm. We then derive marginal boosting [10], a recently developed variant of A DA B OOST, as a special case of M ED B OOST. Finally, we show that,

as another special case, M ED B OOST provides an approach for boosting confidencerated predictions that is different from the algorithm proposed by Schapire and Singer

[13].

3.1

A DA B OOST

In the problem of binary classification, the response variable y comes from the set

{−1, 1}. In the original A DA B OOST algorithm, it is assumed that the base learners

5

is replaced by 2 in (9).

5

generate

binary functions

from a function set Hb that contains base decisions from the

set h : Rd 7→ {−1, 1} . A DA B OOST returns the weighted average

PT

α(t) h(t) (x)

(T )

gA (x) = t=1

PT

(t)

t=1 α

of the base decision functions, which is then converted to a decision by the simple rule

(

(T )

1

if gA (x) ≥ 0,

(T )

(12)

fA (x) =

−1 otherwise.

The weighted median f (T ) (·) = medα (h(·)) returned by M ED B OOST is identical to

(T )

fA in this simple case. Training errors of base decisions are counted in a natural

(0−1)

way by using the cost function C1

. With this cost function, the base rewards are

familiarly defined as

(

1

if h(t) (xi ) = yi ,

(t)

θi =

−1 otherwise.

The base decisions h(t) are found by minimizing the weighted error

P

(t)

(t) = h(t) (xi )6=yi wi (line 3 in Figure 1), and the optimal weights of the base decisions can be computed explicitly (line 6 in Figure 1) as

1 − (t)

1

.

α(t) = log

2

(t)

Another convenient property of A DA B OOST is that the stopping condition in line 9

reduces to (t) > 21 which is never satisfied if Hb is closed under multiplication by

−1. With these settings, L(0) (f (T ) ) becomes the training error of f (T ) , and Theorem 1

reduces to Theorem 9 in [7], that is,

L(0) (f (T ) ) ≤

T

q

Y

2 (t) (1 − (t) ).

t=1

One observation that partly explains the good generalization ability of A DA B OOST is

that it tends to minimize not only the training error but also the γ-robust training error.

In their groundbreaking paper, Schapire et al. [12] define the γ-robust training error of

(T )

the real valued function gA as

n

(γ) (T )

LA (gA )

1X n

o.

=

I (T )

n i=1 gA (xi )yi ≤γ

One of the main tools in this analysis is Theorem 5 [12] which shows that

q

T

Y

1−γ

(γ) (T )

2 (t)

LA (gA ) ≤

(1 − (t) )1+γ .

(13)

(14)

t=1

The following lemma shows that in this special case, the two definitions of the γ-robust

training errors coincide, so (14) is a special case of Theorem 1.

6

Lemma 1. Let

α(1) , . . . , α

(n)

h(1) , . . . , h(n)

∈ Hbt be a sequence of binary decisions, let

(T )

be a sequence of positive, real numbers, let gA (x) =

and define f (T ) (x) as in (8). Then

(γ)

PT

α(t) h(t) (x)

t=1

PT

,

(t)

t=1 α

(T )

LA (gA ) = L(γ) (f (T ) ),

(γ)

(T )

where LA (gA ) and L(γ) (f (T ) ) are defined by (13) and (10), respectively.

Proof. First observe that in this special case of binary base decisions, |b

yi (γ) − yi | > 1

is equivalent to ybi (γ)yi = −1. Because of the one-sided error,

(

(T )

fγ+ (xi )

ybi (γ) =

(T )

fγ− (xi )

if yi = −1,

if yi = 1,

(T )

(T )

- and 1−γ

-quantiles, respectively, dewhere fγ+ and fγ− are the weighted 1+γ

2

2

fined in (6-7). Without the loss of generality, suppose that yi = 1. Then ybi (γ)yi = −1

(T )

is equivalent to fγ− (xi ) = −1. Hence, by definition (7), we have

1−γ

≤

2

PT

t=1 I{1>h(t) (xi )} α

PT

(t)

t=1 α

(t)

=

PT

(T )

which is equivalent to gA (xi ) ≤ γ.

3.2

t=1 I{h(t) (xi )=−1} α

PT

(t)

t=1 α

(t)

=

PT

1−h(t) (xi ) (t)

α

2

,

PT

(t)

t=1 α

t=1

t

u

A DA B OOST%

Although Schapire et al. [12] analyzed the γ-robust training error in the general case of

γ > 0, the idea to modify A DA B OOST such that the right hand side of (11) is explicitly

minimized has been proposed only later by Rätsch and Warmuth [10]. The algorithm

was further analyzed in a recent paper by the same authors [11]. In this case, the optimal

weights of the base decisions can still be computed explicitly (line 6 in Figure 1) as

α

(t)

1

= log

2

1 − (t)

1−γ

×

1+γ

(t)

.

The stopping condition in line 9 becomes (t) > 12 − γ, so, in principle, it can become true even if Hb is closed under multiplication by −1. For the above choice of

α(t) ,Theorem 1 is identical to Lemma 2 in [10]. In particular,

L

(γ)

(f

(T )

s

q

T

Y

1−γ

(t)

(t)

1+γ

2 )≤

(1 − )

t=1

7

1

(1 −

γ)1−γ (1

+ γ)1+γ

.

3.3

Confidence-rated A DA B OOST

Another general direction in which A DA B OOST can be extended is to relax the requirement that base-decisions must be binary. In Schapire and Singer’s approach [13], weak

hypotheses h(t) range over R. As in A DA B OOST, the algorithm returns the weighted

sum of the base decisions which is then converted to a decision by (12). An upper

bound on the training error is proven for this general case, and the base decisions and

their weights (in lines 3 and 6 in Figure 1) are found by minimizing this bound. The

upper bound is formally identical to the right hand side of (11) with γ = 0, but with the

setting of base rewards to

(t)

θi = h(t) (xi )yi .

(15)

Interestingly, this algorithm is not a special case of M ED B OOST, and the differences

give some interesting insights. The first, rather technical difference is that the choice of

(t)

θi (15) does not satisfy (4) if the range of h(t) is the whole real line. In M ED B OOST,

Theorem 1 suggests to set = 1 in (10) so that L(0) (f (T ) ) is the training error of the

decision generated from f (T ) by (12). If the range of the base decisions is restricted

(1)

to [−1, 1]6 , then the choice of the cost function C1 generates base rewards that are

identical to (15) up to a factor of two. Note that the general settings of M ED B OOST

(2)

allow other cost functions, such as C1 (y, y 0 ) = (y − y 0 )2 , which might make the

optimization easier in practice.

The second, more fundamental difference between the two approaches is the decision they return and the way they measure the robustness of the decision. Unlike in

(γ) (T )

the case of binary base decisions (Lemma 1), in this case LA (gA ) is not equal to

(T )

L(γ) (f (T ) ). The following lemma shows that for given robustness of f (T ) (x), gA (x)

can vary in a relatively large interval, depending on the actual base predictors and their

weights.

Lemma 2. For every margin 0 < γ < 1 and 0 < δ ≤

set of base predictors and weights such that

(T )

1−γ

2 ,

it is possible to construct a

(T )

1. fγ− (x) ≥ 0 and gA (x) < − 1−γ

2 + δ,

(T )

(T )

2. fγ− (x) < 0 and gA (x) ≥ 1+γ

2 −δ

Proof. For the first statement, consider h(1) (x) = 0 with weight c(1) = 1+γ

2 , and

(1)

h(2) (x) = −1 with weight c(2) = 1−γ

.

For

the

second

statement,

let

h

(x)

=

1 with

2

1−γ

2δ

(2)

(2)

weight c(1) = 1+γ

,

and

h

(x)

=

−

with

weight

c

=

.

t

u

2

1−γ

2

The lemma shows that it is even possible that the two methods predict different labels on a given point even though the base predictors and their weights are identical.

Lemma 2 and the robustness definitions (10) and (13) suggest that average-type boosting gives high confidence to a set of base-decisions if their weighted average is far from

0 (even if they are highly dispersed around this mean), while M ED B OOST prefers sets

of base-decisions such that most of their weight is on the good side (even if they are

close to the decision threshold 0).

6

This is not a real restriction: allowing one-sided cost functions and looking at only the lower

quantiles for y = 1 and upper quantiles for y = −1 is the same as truncating the range of base

functions into [−1, 1].

8

3.4

Base decisions that abstain

Schapire and Singer [13] considers a special case of confidence-rated boosting when

base decisionsare binary but they areallowed to abstain, so they come from the subset

Ht of the set h : Rd 7→ {−1, 0, 1} . The problem is interesting because it seems to

be the most complicated case when the optimal weights can be computed analytically.

We also use a similar model in the experiments (Section 4) that illustrate the algorithm

in the general case.

If the final regressor f(T ) is converted to a decision differently from (12), this special case of Schapire and Singer’s approach is also a special case of M ED B OOST. In

general, the asymmetry of (12) causes problems only in degenerate cases. If the median

of the base decisions from Ht is returned, then there are non-degenerate cases when

f (T ) = 0. In this case (12) would always assign label 1 to the given point which seems

unreasonable. To solve this problem, let

(

PT

PT

1

if t=1 I{h(t) (x)=1} ≥ t=1 I{h(t) (x)=−1} ,

(T )

(16)

g (x) =

−1 otherwise.

be the binary decision assigned to the output of M ED B OOST. With this modification,

(0−0.5−1)

and by using the cost function C1

(y, y 0 ) = 21 I{|y−y0 |> 1 } + 21 I{|y−y0 |>1} ,

2

M ED B OOST is identical to the algorithm in [13]. The base rewards are defined as

if h(t) (xi ) = yi ,

1

(t)

θi = h(t) (xi )yi = 0

(17)

if h(t) (xi ) = 0,

−1 otherwise.

q

(t) (t)

(t)

(t)

Base decisions h(t) are found by minimizing 0 + 2 + − , where − =

Pn

(t)

(t)

=

i=1 wi I{h(t) (xi )yi =−1} is the weighted error in the tth iteration, 0

Pn

(t)

(t)

(t)

(t)

i=1 wi I{h(t) (xi )=0} is the weighted abstention rate, and + = 1 − − − 0 is

the weighted correct rate. The optimal weights of the base decisions (line 6 in Figure 1)

are

!

(t)

+

1

(t)

.

(18)

α = log

(t)

2

−

(t)

(t)

The stopping condition in line 9 reduces to − > + . For the γ-robust training error

Theorem 1 gives7

v

γ

u

q

T Y

u (t)

(t)

(t)

(t)

+

L(γ) (g (T ) ) ≤

0 + 2 + − t (t) .

−

t=1

7

Note that these settings minimize the upper bound for L(0) (f (T ) ). One could also minimize

L(γ) (f (T ) ) as in A DA B OOST% to obtain a regularized version. The minimization can be done

analytically, but the formulas are quite complicated so we omit them.

9

In the case of M ED B OOST, another possibility would be to let the final decision also

to abstain by not using (16) to convert f (T ) to a binary decision. In this case, we can

make abstaining more costly by setting γ to a small but non-zero value. Another option

(1)

is to choose C1 (y, y 0 ) as the cost function. In this case, the base rewards become

(t)

θi

1

= −1

−3

if h(t) (xi ) = yi ,

if h(t) (xi ) = 0,

otherwise,

so abstentions are also penalized. The optimization becomes more complicated but the

optimal parameters can still be found analytically.

4 The general case

In theory, the general fashion of the algorithm and the theorem allows the use of different cost functions, sets of base regressors, and base learning algorithms. In practice,

however, base regressors of which the capacity cannot be controlled (e.g., linear regressors, regression stumps) seem to be impractical. To see why, consider the case of the

(0 − 1) cost function (2) and γ = 0. In this case, Theorem 1 can be translated into the

following PAC-type statement on weak/strong learning [6]: “if each weak regressor is

-precise on more than 50% of the weighted points than the final regressor is -precise

on all points”. In the first extreme case, when we cannot even find a base regressor that

is -precise on more than 50% of the (unweighted) points, the algorithm terminates in

the first iteration in line 9 in Figure 1. In the second extreme case, when all points are

within from the base regressor h(t) , the algorithm would simply return h(t) in line 8 in

Figure 1. This means that the capacity of the set of the base regressors must be carefully

chosen such that the weighted errors

(t) =

n

X

(t)

I{|h(t) (xi )−yi |>} wi

(19)

i=1

are less than half but, to avoid overfitting, not too close to zero.

Regression trees A possible and practically feasible choice is to use regression trees

as base regressors. The minimization of the weighted cost (line 3 in Figure 1) provides

a clear criteria for splitting and pruning. The complexity can also be easily controlled

by allowing a limited number of splits. According to the stopping criterion in line 9 in

Figure 1, the growth of the tree must be continued until α(t) becomes positive, which

is equivalent to (t) < 1/2 if the (0 − 1) cost function (2) is used. The algorithm stops

either after T iterations, or when the tree returned by the base regressor is judged to

be too complex. The complexity of the final regressor can also be controlled by using

“strong” trees (with (t) 1/2) as base regressors with a nonzero γ.

10

Regressors that abstain Another general solution to the capacity control problem is

to use base regressors that can abstain. Formally, we define the cost function

(

1

if h(t) abstains on xi ,

0

(0−A−1)

(y, y ) = 2 (0−1)

C

0

C

(y, y ) otherwise,

so the base rewards become

(t)

θi

1

= 0

−1

if |h(t) (xi ) − yi | ≤ ,

if h(t) abstains on xi ,

otherwise.

In the experiments in Section 5 we use window base functions that are constant inside a

ball of radius r centered around data points, and abstain outside the ball. The minimization in line 3 in Figure 1 is straightforward since there are only finite number of base

functions with different average costs. E (t) (α) in line 6 can be minimized analytically

as in (18). The case of α(t) = ∞ must be handled with care if we want to minimize

not only the error rate but also the abstain rate. The problem can be solved formally by

setting γ to a small but non-zero value.

Validation To be able to assess the regressor and to validate the parameters of the

algorithm, suppose that the observation X and its response Y form a pair (X, Y ) of

random variables taking values in Rd × R. We define the -tube error of a function

f : Rd → R as

L (f ) = P(Y > f (X) + ) + P(Y < f (X) − ).

Suppose that the -mode function of the conditional distribution, defined as

µ∗ = arg inf L (f ),

f

exists and that it is unique. Let L∗ = L (µ∗ ) be the -tube Bayes error of the distribution of (X, Y ). Using an -tube cost function in M ED B OOST, L(0) (f (T ) ) is the

empirical -tube error of f (T ) , so, we can interpret the objective of M ED B OOST as that

of minimizing the empirical -tube error. For a given , this also gives a clear criteria

for validation as that of minimizing the -tube test error.

Validating seems to be a much tougher problem. First note that because of the

terminating condition in line 9 in Figure 1 we need base regressors with an empirical

weighted -tube error smaller than 1/2, so if is such that L∗ > 1/2 then overfitting

seems unavoidable (note that this situation cannot happen in the special case of binary

classification). The practical behavior of the algorithm in this case is that it either returns

an overfitting regressor (if the set of base regressors have a large enough capacity),

or it stops in the first iteration in line 9. In both cases, the problem of a too small can be detected. If is such that L∗ < 1/2, then the algorithm is well-behaving. If

L∗ = 0, similarly to the case of binary classification, we expect that the algorithm

works particularly well (see Section 5.1). At this point, we can give no general criteria

11

for choosing the best , and it seems that is a design parameter rather than a parameter

to validate. On the other hand, if the goal is to minimize a certain cost function (e.g,

quadratic or absolute error), then all the parameters can be validated based on the test

cost. In the second set of experiments (Section 5.2) we know µ∗ and we know that it is

the same for all so we can validate based on the L1 error between µ∗ and f (T ) . In

practice, when µ∗ is unknown, this is clearly unfeasible.

5 Experiments

The experiments in this section were designed to illustrate the method in the general

case. We concentrated on the similarities and differences between classification and

regression rather than the practicalities of the method. Clearly, more experiments will

be required to evaluate the algorithm from a practical viewpoint.

5.1

Linear base regressors

The objective of these experiments is to show that if L∗ ≈ 0 and the base regressors

are adequate in terms of the data generating distribution, then M ED B OOST works very

well. To this end, we used a data generating model where X is uniform in [0, 1] and

Y = 1 + X + δ where δ is a random noise generated by different symmetric distributions in the interval [−0.2, 0.2]. We used linear base regressors that minimize the

weighted quadratic error in each iteration. To generate the base rewards, we used the

(0−1)

cost function C

. We set γ = 0 and T = 100 although the algorithm usually

stopped before reaching this limit. The final regressor was compared to the linear regressor that minimized the quadratic error. Both the comparison and the validation of

was based on the L1 error between the regressor and µ∗ which is x + 1 if is large

enough. Table 1 shows the average L1 errors and their standard deviations over 10000

experiments with n = 40 points generated in each. The results show that M ED B OOST

beats the individual linear regressor in all experiments, and the margin grows with the

variance of the noise distribution. Since the base learner minimizes the quadratic error,

h(1) is the linear regressor, so we can say that additional base regressors improve the

best linear regressor.

Noise Best L1 distance from µ∗

STD

M ED B OOST

L INEAR

Noise

distribution

Noise PDF

in [−0.2.0.2]

Uniform

Inverted triangle

Quadratic

Extreme

2.5

0.1155 0.19 0.0133 (0.0078) 0.0204 (0.0108)

25|δ|

0.1414 0.2 0.0101 (0.0069) 0.0250 (0.0132)

1.25(1 − |5δ|)−1/2

0.1461 0.2 0.0066 (0.0053) 0.0261 (0.0136)

±0.2 w. prob. 0.5 − 0.5 0.2

0.21 0.0147 (0.0153) 0.0358 (0.0191)

Table 1. M ED B OOST versus linear regression.

12

5.2

Window base regressors that abstain

In these experiments we tested M ED B OOST with window base regressors that abstain

(Section 4) on a toy problem. The same data model was used as in Section 5.1 but this

time the noise δ was Gaussian with zero mean and standard deviation of 0.1. In each experiment, 200 training and 10000 test points were used. We tested several combinations

of and r. The typical learning curves in Figure 2 indicate that the algorithm behaves

similarly to the binary classification case. First, there is no overfitting in the overtraining

sense: the test error plus the rate of data points where f (t) abstains decreases monotonically. The second similarity is that the test error decreases even after the training error

crosses L∗ which may be explained by the observation that M ED B OOST minimizes the

γ-robust training error. Interestingly, when L∗ > 1/2 (Figure 2(a)), the actual test error

goes below L∗ , and even the test error plus the rate of data points where f (t) abstains

approaches L∗ quite well. Intuitively, this means that the algorithm “gives up” on hard

points by abstaining rather than overfitting by trying to predict them.

(b)

(a)

1

0.4

training error

test error

training abstain

test abstain

training error + abstain

test error + abstain

bound

bayes error

0.8

training error

test error

training abstain

test abstain

training error + abstain

test error + abstain

bound

bayes error

0.35

0.3

0.25

0.6

0.2

0.4

0.15

0.1

0.2

0.05

0

0

0

50

100

t

150

200

0

50

100

t

150

200

Fig. 2. Learning curves of M ED B OOST with window base regressors that abstain. (a) = 0.05,

r = 0.02. (b) = 0.16, r = 0.2.

We also compared the two validation strategies based on test error and L1 distance

from µ∗ . For a given , we found that the test error and L1 distance were always minimized at the same radius r. As expected, the L1 distance tends to be small for ’s for

which L∗ < 1/2. When validating , we found that the minimum L1 distance is quite

stable in a relatively large range of (Table 2).

best r in L1 sense L1 dist. from µ∗ best r by test error test error L∗

0.1 0.12

0.13 0.16

0.16 0.2

test error −L∗

0.032

0.12

0.359

0.3274 0.032

0.033

0.16

0.227

0.1936 0.033

0.032

0.2

0.14

0.1096 0.03

Table 2. Validation of the parameters of M ED B OOST.

13

6 Conclusion

In this paper we presented and analyzed M ED B OOST, a boosting algorithm for regression that uses the weighted median of base regressors as final regressor. We proved

boosting-type convergence of the algorithm and gave clear conditions for the convergence of the robust training error. We showed that A DA B OOST is recovered as a special

case of M ED B OOST. For boosting confidence-rated predictions we proposed a new approach based on M ED B OOST. In the general, non-binary case we suggested practical

strategies and presented two feasible choices for the set of base regressors. Experiments

with one of these models showed that M ED B OOST in regression behaves similarly to

A DA B OOST in classification.

Appendix

Proof of Theorem 1

First, observe that by the definition

(9) of the

ybi (γ), it follows from

robust prediction

(T )

(T )

|b

yi (γ) − yi | > that max fγ+ (xi ) − yi , fγ− (xi ) − yi > . Then we have three

cases.

(T )

(T )

1. fγ+ (xi ) − yi > fγ− (xi ) − yi .

(T )

(T )

(T )

(T )

Since fγ+ (xi ) − yi > and fγ+ (xi ) ≥ fγ− (xi ), we also have that fγ+ (xi ) −

(T )

yi > . This together with the definition (6) of fγ+ implies that

PT

α(t) I{h(t) (xi )−yi >}

1−γ

≥

, thus

PT

(t)

2

t=1 α

t=1

PT

t=1

(t)

Since

1−θi

2

≥ I{|h(t) (xi )−yi |>} by (5), we have

so

γ

T

X

α(t) ≥

T

X

α(t) I{|h(t) (xi )−yi |>}

1−γ

≥

.

PT

(t)

2

t=1 α

PT

(t)

(t)

(t) 1−θi

t=1 α

2

PT

(t)

α

t=1

α(t) θi .

≥

1−γ

, and

2

(20)

t=1

t=1

(T )

(T )

2. fγ+ (xi ) − yi < fγ− (xi ) − yi .

(T )

(20) follows similarly as in Case 1 from the definition (7) of fγ− and the fact that

(T )

in this case yi − fγ− (xi ) > .

(T )

(T )

3. fγ+ (xi ) − yi = fγ− (xi ) − yi .

(T )

If fγ+ (xi ) ≥ yi then (20) follows as in Case 1, otherwise (20) follows as in Case 2.

14

We have shown that |b

yi (γ) − yi | > implies (20), hence

n

L(γ) (f (T ) ) =

1X

I{|byi (γ)−yi |>}

n i=1

n

≤

1X n

o

PT

I PT

(t)

n i=1 γ t=1 α(t) − t=1 α(t) θi ≥0

!

T

T

n

X

X

1X

(t) (t)

(t)

α θi

α −

≤

exp γ

(since ex ≥ I{x≥0} ) (21)

n i=1

t=1

t=1

!

!

T

T

n

X

X

X

1

(t)

α(t)

α(t) θi

= exp γ

exp −

n

t=1

t=1

i=1

! T

T

n

X

Y

X

(T +1)

α(t)

= exp γ

Z (t)

wi

(by line 12 in Figure 1)

t=1

= exp γ

T

X

α(t)

t=1

=

T

Y

!

t=1

T

Y

i=1

Z (t)

t=1

(t)

eγα Z (t)

t=1

=

T

Y

t=1

e

γα(t)

n

X

(t)

wi e−α

(t) (t)

θi

,

i=1

Pn

(t) (t)

(t)

where Z (t) = i=1 wi e−α θi is the normalizing factor used in line 12 in Figure 1.

Note that from (21), the proof is identical to the proof of Theorem 5 in [12].

t

u

References

1. R. Avnimelech and N. Intrator. Boosting regression estimators. Neural Computation,

11:491–513, 1999.

2. A. Bertoni, P. Campadelli, and M. Parodi. A boosting algorithm for regression. In Proceedings of the International Conference on Artificial Neural Networks, pages 343–348, 1997.

3. P. Bühlmann. Bagging, subagging and bragging for improving some prediction algorithms.

In M.G. Akritas and D.N. Politis, editors, Recent Advances and Trends in Nonparametric

Statistics (to appear). 2003.

4. H. Drucker. Improving regressors using boosting techniques. In Proceedings of the 14th

International Conference on Machine Learning, pages 107–115, 1997.

5. N. Duffy and D. P. Helmbold. Leveraging for regression. In Proceedings of the 13th Conference on Computational Learning Theory, pages 208–219, 2000.

6. Y. Freund. Boosting a weak learning algorithm by majority. Information and Computation,

121(2):256–285, 1995.

7. Y. Freund and R. E. Schapire. A decision-theoretic generalization of on-line learning and an

application to boosting. Journal of Computer and System Sciences, 55(1):119–139, 1997.

8. J. Friedman. Greedy function approximation: a gradient boosting machine. Technical report,

Dept. of Statistics, Stanford University, 1999.

15

9. L. Mason, P. Bartlett, J. Baxter, and M. Frean. Boosting algorithms as gradient descent. In

Advances in Neural Information Processing Systems, volume 12, pages 512–518. The MIT

Press, 2000.

10. G. R¨

atsch and M. K. Warmuth. Maximizing the margin with boosting. In Proceedings of the

15th Conference on Computational Learning Theory, 2002.

11. G. R¨

atsch and M. K. Warmuth. Efficient margin maximizing with boosting. Journal of

Machine Learning Research (submitted), 2003.

12. R. E. Schapire, Y. Freund, P. Bartlett, and W. S. Lee. Boosting the margin: a new explanation

for the effectiveness of voting methods. Annals of Statistics, 26(5):1651–1686, 1998.

13. R. E. Schapire and Y. Singer. Improved boosting algorithms using confidence-rated predictions. Machine Learning, 37(3):297–336, 1999.

14. R. S. Zemel and T. Pitassi. A gradient-based boosting algorithm for regression problems. In

Advances in Neural Information Processing Systems, volume 13, pages 696–702, 2001.

16