Data Compression Modelling: Huffman and Arithmetic


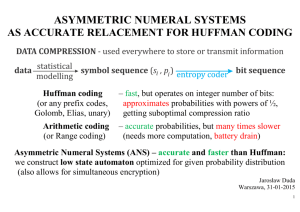

Vikas Singla1, Rakesh Singla2, and Sandeep Gupta3
1 Lecturer, IT Department, BCET, Gurdaspur
2 Lecturer, IT Department, BHSBIET, Lehragaga
3 Lecturer, ECE Department, BHSBIET, Lehragaga
singla_vikas123@yahoo.com (1), asingla_123@yahoo.co.in (2, 3)

Abstract

The paper deals with the formal description of data transformation (the compression and decompression process). We start by briefly reviewing the basic concepts of data compression and introducing the model-based approach that underlies most modern techniques. We then present arithmetic coding and Huffman coding for data compression, and finally examine the performance of arithmetic coding. We conclude that arithmetic coding is superior in most respects to the better-known Huffman method: its performance is optimal without the need for blocking of input data, it accommodates adaptive models easily, and it is computationally efficient.

I. INTRODUCTION

The UK's Open University is one of the world's largest universities, with over 160,000 currently enrolled distance-learning students distributed throughout the world. Requirements arose for the development of a support tool for geographic position visualization, off-line analysis, and on-line presence and messaging [1]. Spatial data can be stored in raster or vector form. Since the system is distributed, the problem of low-cost data transmission from server to clients arises. This problem is traditionally solved by data compression. The system transmits several different data types, which require specific compression methods.

Typical compression methods can be divided into three groups according to their principle [2]. Statistical compression methods, which build their models from the probabilities of short parts of the data (usually single symbols), belong to the first group. The second group consists of compression methods that replace repeated data with a reference to its previous occurrence. The last group of methods predicts the next symbol from the preceding symbols and stores only the difference from this prediction. This paper deals with the first group, the statistical methods.

II. DATA COMPRESSION

Data compression is the art of replacing the actual data with coded data. In the model-based paradigm for coding, an encoded string is produced from an input string of symbols and a model; it is (usually) a compressed version of the input. The decoder, which must have access to the same model, regenerates the exact input string from the encoded string. Input symbols are drawn from some well-defined set such as the ASCII or binary alphabets; the encoded string is a plain sequence of bits. Compression is achieved by transmitting the more probable symbols in fewer bits than the less probable ones. The model is a way of calculating, in any given context, the distribution of probabilities for the next input symbol; more complex models can provide more accurate probabilistic predictions and hence achieve greater compression.

The effectiveness of any model can be measured by the entropy of the message with respect to it, usually expressed in bits/symbol. Shannon's fundamental theorem of coding states that, given messages randomly generated from a model, it is impossible to encode them into fewer bits (on average) than the entropy of that model [3]. A message can be coded with respect to a model using either Huffman or arithmetic coding.

III. DATA COMPRESSION MODELING

Each of the schemes discussed here may be measured along two dimensions: algorithm complexity and amount of compression. When data compression is used in a data transmission application, the goal is speed. Speed of transmission depends upon the number of bits sent, the time required for the encoder to generate the coded message, and the time required for the decoder to recover the original ensemble.
In a data storage application, although the degree of compression is the primary concern, the algorithm must still be efficient for the scheme to be practical [4].

Entropy: Information theory uses the term entropy as the measure of how much information is encoded in a message. The word entropy is taken from thermodynamics. The higher the entropy of a message, the more information it contains. The entropy of a symbol is defined as the negative logarithm of its probability. To determine the information content of a message in bits, we express the zero-order entropy H(p) as

$$H(p) = -\sum_{i=1}^{n} P_i \log_2 P_i \quad \text{bits/symbol}$$

where n is the number of distinct symbols and P_i is the probability of occurrence of symbol i. For a memoryless source, entropy defines a limit on compressibility for the source [5]. The entropy of an entire message is simply the sum of the entropy of all individual symbols.

IV. THE HUFFMAN CODING

Huffman coding creates variable-length codes, each an integral number of bits long. Symbols with higher probabilities get shorter codes. Huffman codes have the unique prefix attribute (no code is a prefix of another code), which means that they can be correctly decoded despite being of variable length. Decoding a stream of Huffman codes is generally done by following a binary decoder tree. The tree is built from a list of free nodes, initially one per symbol and weighted by that symbol's count, as follows:

• The two free nodes with the lowest weight are located.
• A parent node for these two nodes is created. It is assigned a weight equal to the sum of the weights of the child nodes.
• The parent node is added to the list of free nodes, and the two child nodes are removed from the list.
• One of the child nodes is designated as the path taken from the parent node when decoding a 0 bit. The other is arbitrarily set to the 1 bit.
• The previous steps are repeated until only one free node is left. This free node is designated the root of the tree [6].

Example: Suppose the user selects a file that contains the symbols A, B, C, D and E with counts 15, 7, 6, 6 and 5 respectively.
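The tree-building steps and the entropy formula above can be tried out on these counts with the following minimal Python sketch. It is an illustration under stated assumptions, not the authors' implementation: the function names `zero_order_entropy` and `huffman_codes`, the use of a heap as the "list of free nodes", and the tie-breaking order between equal weights are all choices made here.

```python
import heapq
import math
from itertools import count


def zero_order_entropy(counts):
    """Return H(p) = -sum(P_i * log2(P_i)) in bits/symbol for a table of counts."""
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def huffman_codes(counts):
    """Build a Huffman code by repeatedly merging the two lowest-weight free nodes."""
    tiebreak = count()  # unique sequence number so equal weights never compare trees
    # A free node is (weight, sequence number, tree); a tree is either a
    # symbol (leaf) or a pair (child for bit 0, child for bit 1).
    free = [(weight, next(tiebreak), symbol) for symbol, weight in counts.items()]
    heapq.heapify(free)
    while len(free) > 1:
        w0, _, t0 = heapq.heappop(free)  # lowest weight
        w1, _, t1 = heapq.heappop(free)  # second lowest weight
        heapq.heappush(free, (w0 + w1, next(tiebreak), (t0, t1)))
    _, _, root = free[0]

    codes = {}

    def walk(tree, prefix):
        if isinstance(tree, tuple):       # internal node: descend both branches
            walk(tree[0], prefix + "0")
            walk(tree[1], prefix + "1")
        else:                             # leaf: record the accumulated bits
            codes[tree] = prefix or "0"   # a one-symbol alphabet still needs one bit

    walk(root, "")
    return codes


counts = {"A": 15, "B": 7, "C": 6, "D": 6, "E": 5}
codes = huffman_codes(counts)
total_bits = sum(counts[s] * len(codes[s]) for s in counts)
total_symbols = sum(counts.values())

print(codes)
print(f"entropy         : {zero_order_entropy(counts):.3f} bits/symbol")
print(f"Huffman average : {total_bits / total_symbols:.3f} bits/symbol")
```

For these counts A receives a 1-bit code and the other four symbols 3-bit codes, so the 39 symbols cost 87 bits. The exact bit patterns depend on which child is labelled 0, which the procedure leaves arbitrary. The average of about 2.23 bits/symbol sits slightly above the zero-order entropy of about 2.19 bits/symbol, which is the gap that arithmetic coding aims to close.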
The Huffman code obtained by the above process is given in Figures 1 and 2.

Figure 1. Huffman coding tree

Figure 2. Huffman code table

Figure 3. Before and after compression

(International Journal of The Computer, the Internet and Management, Vol. 16, No. 3 (September-December 2008), pp. 64-68)

V. THE ARITHMETIC CODING

Arithmetic coding completely bypasses the idea of replacing an input symbol with a specific code. Instead, it takes a stream of input symbols and replaces it with a single floating-point output number. The longer (and more complex) the message, the more bits are needed in the output number. It was not until recently that practical methods were found to implement this on computers with fixed-size registers. The output from an arithmetic coding process is a single number less than 1 and greater than or equal to 0. This single number can be uniquely decoded to recreate the exact stream of symbols that went into its construction. To construct the output number, the symbols being encoded must have a set of probabilities assigned to them [7].

Example: To encode the message "BILL GATES", we use the probability distribution given in Table 1.

TABLE 1. PROBABILITY TABLE

Once the character probabilities are known, each symbol must be assigned a range along a "probability line", nominally 0 to 1. It does not matter which characters are assigned which segment of the range, as long as it is done in the same manner by both the encoder and the decoder. The nine-character symbol set used here is shown in Table 2; each character is assigned the portion of the 0 to 1 range that corresponds to its probability of appearance.

TABLE 2. SYMBOLS AND THEIR RANGES

The most significant portion of an arithmetic-coded message belongs to the first symbol, B in our example. To decode the first character properly, the final coded message has to be a number greater than or equal to 0.20 and less than 0.30. To encode this number, we track the range it could fall in. After the first character is encoded, the low end of this range is 0.20 and the high end is 0.30. During the rest of the encoding process, each new symbol further restricts the possible range of the output number. The next character to be encoded, the letter I, owns the range 0.50 to 0.60 within the newly established sub-range of 0.20 to 0.30. Applying this logic further restricts our number to the range 0.25 to 0.26. The algorithm to accomplish this for a message of any length is given below; both new bounds are computed from the Low value held before the update.

Calculation of low and high value:
Range = High - Low
High = Low + Range * HighRange(c)
Low = Low + Range * LowRange(c)

The entire process of encoding for our example is shown in Table 3.

TABLE 3. LOW AND HIGH VALUE

Therefore, the final low value, 0.2572167752, uniquely encodes the message "BILL GATES" using our present coding scheme.

VI. DECODING OF INPUT VALUE

Decompressing process:
Symbol = FindSymbol(Number)
Range = HighRange(Symbol) - LowRange(Symbol)
Number = Number - LowRange(Symbol)
Number = Number / Range

TABLE 4. DECOMPRESSING PROCESS
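As a rough illustration of the range-narrowing rule and the decompressing process above, the sketch below encodes and decodes "BILL GATES" with exact rational arithmetic. Several things here are assumptions rather than part of the paper: the function names (`build_ranges`, `encode`, `decode`), the use of Python's `fractions.Fraction` in place of floating point, the alphabetical layout of symbols on the probability line (chosen because it reproduces the ranges quoted in the text, B at 0.20 to 0.30 and I at 0.50 to 0.60), and passing the message length to the decoder so it knows when to stop.

```python
from fractions import Fraction


def build_ranges(message):
    """Give each symbol a [low, high) slice of [0, 1) proportional to its count.

    Assumption: symbols are laid out in sorted order (space first).
    """
    ranges, low = {}, Fraction(0)
    for symbol in sorted(set(message)):
        high = low + Fraction(message.count(symbol), len(message))
        ranges[symbol] = (low, high)
        low = high
    return ranges


def encode(message, ranges):
    """Narrow [low, high) once per symbol and return the final low value."""
    low, high = Fraction(0), Fraction(1)
    for symbol in message:
        span = high - low
        sym_low, sym_high = ranges[symbol]
        # Tuple assignment: both bounds use the old low before it is replaced.
        low, high = low + span * sym_low, low + span * sym_high
    return low


def decode(number, ranges, length):
    """Undo the encoding: find the symbol, subtract its low, divide by its width."""
    symbols = []
    for _ in range(length):  # assumption: the decoder is told the message length
        symbol = next(s for s, (lo, hi) in ranges.items() if lo <= number < hi)
        lo, hi = ranges[symbol]
        number = (number - lo) / (hi - lo)
        symbols.append(symbol)
    return "".join(symbols)


message = "BILL GATES"
ranges = build_ranges(message)
code = encode(message, ranges)

print(float(code))
print(decode(code, ranges, len(message)))
```

With exact fractions the encoder returns 2572167752/10^10, that is 0.2572167752, and the decoder recovers the original ten characters. A practical coder would not use unbounded fractions; it would work incrementally with fixed-size integer registers, which this sketch deliberately leaves out.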
The decoding algorithm simply reverses the encoding process. To decode the given value, find the first symbol in the message: since 0.2572167752 falls between 0.2 and 0.3, the first character must be B. Then remove B from the encoded number. Since we know the low and high ranges of B, we subtract the low value of B, giving 0.0572167752, and then divide by the width of B's range, 0.1, giving 0.572167752, which lies in the range of the next letter, I.

VII. CONCLUSION

Arithmetic coding compresses text files better than Huffman coding in many respects because it accommodates adaptive models and provides a separation between model and coding. In arithmetic coding there is no need to translate each symbol into an integral number of bits, but it requires heavy computation on the data, such as multiplication and division. The disadvantages of arithmetic coding are that it runs slowly, is complicated to implement, and does not produce a prefix code.

VIII. REFERENCES

[1] Komzak, J. and Eisenstadt, M. (1998). Visualization of entity distribution in very large scale spatial and geographic information systems. KMI-TR-113, Knowledge Media Institute, Open University, Milton Keynes, UK, June 2001.
[2] Salomon, D. Data Compression. Springer-Verlag, New York, 1998.
[3] Shannon, C.E. and Weaver, W. (1949). The Mathematical Theory of Communication. University of Illinois Press, Urbana, Ill., 1949.
[4] http://www.ics.uci.edu/~dan/pubs/DCSec1.html
[5] http://www.iucr.org/iucr-top/cif/cbf/PAPER
[6] ww.iucr.org/iucrtop/cif/cbf/PAPER/huffman.html
[7] http://www.dogma.net/markn/articles/arith
[8] Ian H. Witten, Radford M. Neal, John G. Cleary, Arithmetic coding for data compression.
[9] Paul G. Howard, Jeffrey Scott Vitter, Arithmetic Coding for Data Compression.
