CM613 lossless sem

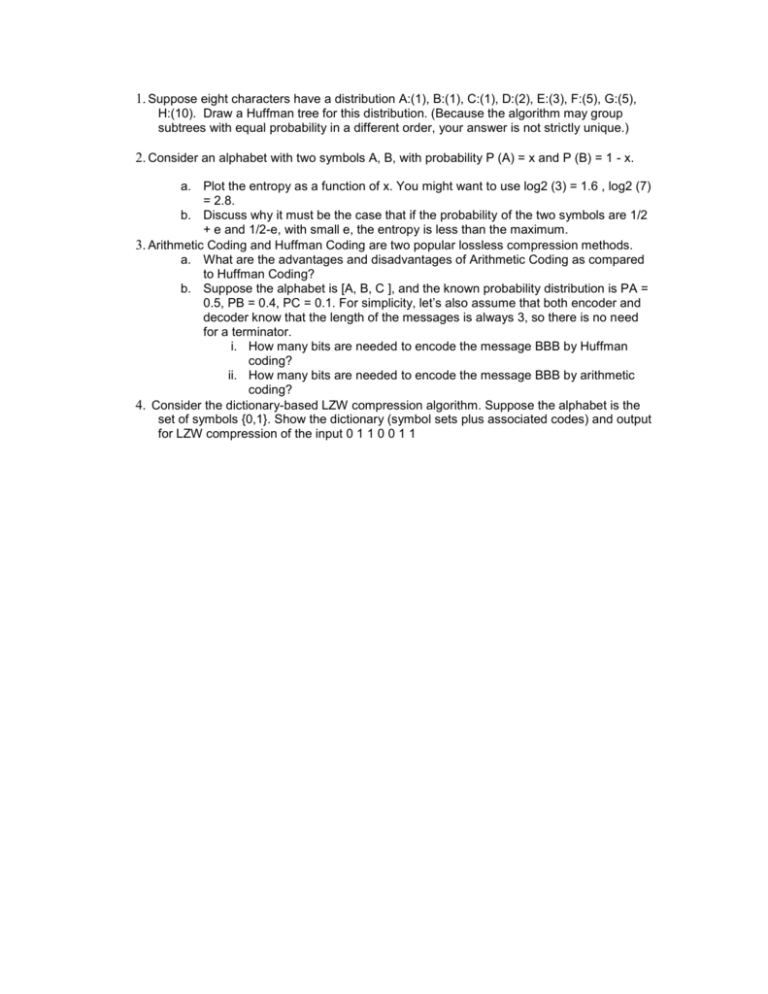

1. Suppose eight characters have a distribution A:(1), B:(1), C:(1), D:(2), E:(3), F:(5), G:(5), H:(10). Draw a Huffman tree for this distribution. (Because the algorithm may group subtrees with equal probability in a different order, your answer is not strictly unique.)
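One way to check a hand-drawn tree for question 1 is to compare its total weighted code length with the output of a small program. The sketch below is a minimal illustration, not a reference solution: the function name `huffman_code_lengths` and the tie-breaking counter are assumptions made for this example. The individual code lengths depend on how ties are broken, but the weighted total should not.

```python
import heapq
from itertools import count


def huffman_code_lengths(weights):
    """Return {symbol: code length} for one valid Huffman tree over `weights`."""
    tie = count()  # tie-breaker so the heap never has to compare dicts
    heap = [(w, next(tie), {sym: 0}) for sym, w in weights.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Merge the two lightest subtrees; every leaf below them gets one level deeper.
        w1, _, d1 = heapq.heappop(heap)
        w2, _, d2 = heapq.heappop(heap)
        merged = {sym: depth + 1 for sym, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (w1 + w2, next(tie), merged))
    return heap[0][2]


weights = {"A": 1, "B": 1, "C": 1, "D": 2, "E": 3, "F": 5, "G": 5, "H": 10}
lengths = huffman_code_lengths(weights)
total_bits = sum(weights[s] * lengths[s] for s in weights)
print(lengths)
print("total weighted length:", total_bits)
```

A tree drawn by hand may assign different lengths to symbols of equal weight, but if it is a valid Huffman tree its weighted total should match the printed value.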

2. Consider an alphabet with two symbols A, B, with probability P(A) = x and P(B) = 1 - x.

   a. Plot the entropy as a function of x. You might want to use log2(3) ≈ 1.6, log2(7) ≈ 2.8.

   b. Discuss why it must be the case that if the probabilities of the two symbols are 1/2 + ε and 1/2 - ε, with small ε, the entropy is less than the maximum.
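For question 2, the entropy of a two-symbol source is H(x) = -x log2(x) - (1 - x) log2(1 - x). The sketch below tabulates it at a few points; the function name `binary_entropy` and the chosen sample points are assumptions for illustration. The points x = 1/4 and x = 1/8 are where the log2(3) and log2(7) hints come in handy when working by hand.

```python
import math


def binary_entropy(x):
    """Entropy in bits of a source with P(A) = x and P(B) = 1 - x."""
    if x in (0.0, 1.0):
        return 0.0  # limit of p*log2(p) as p -> 0 is 0
    return -x * math.log2(x) - (1 - x) * math.log2(1 - x)


# Sample points for a rough plot; the curve is symmetric about x = 1/2.
for x in (0.0, 1 / 8, 1 / 4, 1 / 2, 3 / 4, 7 / 8, 1.0):
    print(f"x = {x:.3f}  H = {binary_entropy(x):.3f} bits")

# A small perturbation around 1/2 lowers the entropy in either direction.
eps = 0.01
print(binary_entropy(0.5 + eps) < binary_entropy(0.5))
print(binary_entropy(0.5 - eps) < binary_entropy(0.5))
```

The table suggests the shape to expect: zero at both ends, symmetric, and peaking at x = 1/2, so any shift away from equal probabilities can only decrease the value.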

3. Arithmetic Coding and Huffman Coding are two popular lossless compression methods.

   a. What are the advantages and disadvantages of Arithmetic Coding as compared to Huffman Coding?

   b. Suppose the alphabet is [A, B, C], and the known probability distribution is PA = 0.5, PB = 0.4, PC = 0.1. For simplicity, let’s also assume that both encoder and decoder know that the length of the messages is always 3, so there is no need for a terminator.

      i. How many bits are needed to encode the message BBB by Huffman coding?

      ii. How many bits are needed to encode the message BBB by arithmetic coding?
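The arithmetic-coding part of question 3 can be explored with exact fractions. The sketch below is one possible setup and rests on two assumptions not stated in the question: the symbols occupy the unit interval in the order A, B, C, and the codeword is taken to be the shortest binary fraction whose value lies inside the final interval. Other conventions (for example, requiring every extension of the codeword to stay inside the interval) can give a different bit count. The names `message_interval` and `shortest_codeword` are hypothetical.

```python
from fractions import Fraction
import math

# Assumed symbol order on [0, 1): A = [0, 0.5), B = [0.5, 0.9), C = [0.9, 1.0)
PROBS = {"A": Fraction(1, 2), "B": Fraction(2, 5), "C": Fraction(1, 10)}


def message_interval(message, probs):
    """Narrow [0, 1) symbol by symbol and return the final (low, high)."""
    low, width = Fraction(0), Fraction(1)
    for sym in message:
        cum = Fraction(0)  # cumulative probability of the symbols before `sym`
        for s, p in probs.items():
            if s == sym:
                break
            cum += p
        low += width * cum
        width *= probs[sym]
    return low, low + width


def shortest_codeword(low, high):
    """Shortest bit string b1..bk with low <= 0.b1..bk (binary) < high."""
    k = 1
    while True:
        n = math.ceil(low * 2**k)  # smallest n with n / 2^k >= low
        if Fraction(n, 2**k) < high:
            return format(n, f"0{k}b")
        k += 1


low, high = message_interval("BBB", PROBS)
code = shortest_codeword(low, high)
print(float(low), float(high), code, len(code))
```

For comparison, a Huffman code for these probabilities gives A a 1-bit codeword and B and C 2-bit codewords, so each B in the message costs 2 bits.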

4. Consider the dictionary-based LZW compression algorithm. Suppose the alphabet is the set of symbols {0, 1}. Show the dictionary (symbol sets plus associated codes) and output for LZW compression of the input 0 1 1 0 0 1 1.
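A hand trace for question 4 can be checked against a short LZW encoder. The sketch below assumes the initial dictionary assigns code 0 to the symbol 0 and code 1 to the symbol 1, with new entries numbered from 2. The question does not fix the numbering, so a course convention that starts codes at 1 would shift every code by one. The function name `lzw_compress` is chosen for this example.

```python
def lzw_compress(data, alphabet):
    """Return (output codes, final dictionary) for LZW compression of `data`."""
    # Assumption: initial codes are assigned in alphabet order, starting at 0.
    dictionary = {sym: code for code, sym in enumerate(alphabet)}
    next_code = len(dictionary)
    output = []
    s = ""  # longest string matched so far
    for c in data:
        if s + c in dictionary:
            s += c  # keep extending the current match
        else:
            output.append(dictionary[s])   # emit the code for the match
            dictionary[s + c] = next_code  # add the new string to the dictionary
            next_code += 1
            s = c
    if s:
        output.append(dictionary[s])  # flush the final match
    return output, dictionary


codes, table = lzw_compress("0110011", "01")
print("output:", codes)
for string, code in sorted(table.items(), key=lambda kv: kv[1]):
    print(code, string)
```

The printed dictionary lists each string next to its code in the order the entries were created, which makes it easy to compare line by line with a trace done on paper.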
