Mean Field Simulation for Monte Carlo Integration

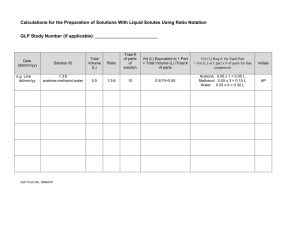

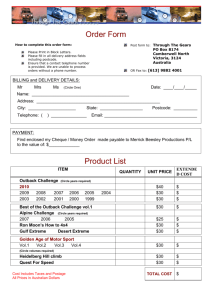

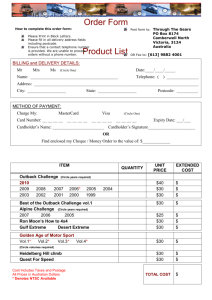
