FUTURE RESEARCH IN COMPUTER ARITHMETIC Eric Schwarz

advertisement

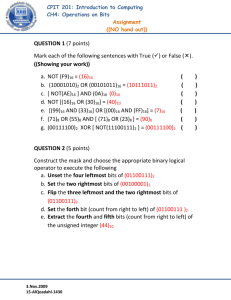

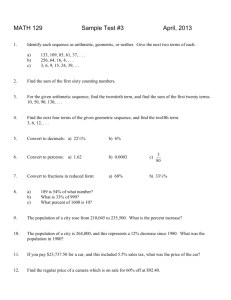

FUTURE RESEARCH IN COMPUTER ARITHMETIC Eric Schwarz Systems & Technology Group IBM Corporation 2455 South Road Poughkeepsie, NY 12601, USA Email: eschwarz@us.ibm.com ABSTRACT There are several areas in computer arithmetic which are fertile for new discoveries. This paper will introduce some of these fields of research with the expectation that there will be tremendous advances in the next decade. Some of these fields are already matured with key problems still unsolved while others are nebulous at this time in history. I will address three arithmetic fields: the multiplication operation, the division operation, and decimal floating-point. Each has critical issues which are going under intense research and development. 1. INTRODUCTION Computer arithmetic is a field as old as computers. Number systems have transformed from many proprietary systems to several standardized floating-point systems. In the 1950s both decimal and binary fixed-point arithmetic [1] were popular. Decimal arithmetic provided users familiarity and could easily be displayed and understood. Binary was much easier to express in Boolean logic which was easy to implement with the advent of the transistor. Binary became very popular with very few computers supporting decimal in the 1960s and 1970s. In 1985 a binary floating-point system was standardized [2] putting an end to most proprietary formats. This system is now available on almost all microprocessors. In today’s age of miniaturization, multiple processors can fit on a single chip, and users started demanding a hardware implemented arithmetic system that can handle financial transactions. The next floating-point standard includes both a binary and decimal number system[3]. Number systems are still evolving as well as the fundamentals of computer arithmetic. In early computer design, memory capacity was small, so the use of lookup tables in hardware implementations were limited. Also serial implementations of algorithms were popular, such as arithmetic using on-line algorithms. As technology advanced and miniaturization of circuits increased, parallel algorithms have grown in popularity. The technology has changed over the years, and now wire lengths and floorplans are very important. More recently, one of the most important parameters of design has become power consumption. Not only has technology changed the design, but also the clear description of the fundamental concepts has changed it too. Education has helped power the development of new algorithms. These expositions have trained many new designers and have made complex concepts more easily understood and compounded into an even more complicated concepts. This paper will show some interesting works which simplify the understanding of key fundamentals of computer arithmetic. Concepts are being advanced in every facet of computer arithmetic. For instance the simplest arithmetic operation, addition, is being transformed. Low power adder design is a hot topic and many papers detail circuits which can reduce their power. Even algorithms such as the Carry Ripple Adder (CRA) are being investigated. CRAs are the smallest adders, and surprisingly, can be fast since most carries only propagate a few bits[4, 5]. Recently, the fundamentals of End Around Carry (EAC) adders were explored[6]. EAC adders have been used in industry for many years but there were no details in books or papers. The book chapter in [6] explains and proves their design with easy to understand concepts. The advances in both algorithms and basic description of fundamentals are key to future developments in this field. This paper will detail some key developments in some of my favorite areas of computer arithmetic. First developments in binary multiplication by Stamatis Vassiliadis will be detailed which are now the subject of much research in this field. Second unsolved problems in division will be explored. And finally, the reinvigorated decimal floating-point field will be discussed. 114 FUTURE RESEARCH IN COMPUTER ARITHMETIC 1 s s s s xxxxxxxxxxxxxxxxxxxxxxxx 2 s s s xxxxxxxxxxxxxxxxxxxxxxxx ooh 3 s s s xxxxxxxxxxxxxxxxxxxxxxxx ooh 4 s s s xxxxxxxxxxxxxxxxxxxxxxxx ooh 5 s s s xxxxxxxxxxxxxxxxxxxxxxxx ooh 6 s s s xxxxxxxxxxxxxxxxxxxxxxxx ooh 7 s s s xxxxxxxxxxxxxxxxxxxxxxxx ooh 8 s s s xxxxxxxxxxxxxxxxxxxxxxxx ooh 9 s s s xxxxxxxxxxxxxxxxxxxxxxxx ooh 10 x x x x x x x x x x x x x x x x x x x x x x x x o o h 1 2 3 4 5 6 7 8 9 10 Fig. 1. Partial Product Array for Radix-8 2. MULTIPLICATION Binary multiplication in computers is performed either serially, with a shift and add scheme, or in parallel by producing all the partial products and summing them in a counter tree. The multiplier, Y , is separated into groups of multiple bits called digits, Di . Multiples of the multiplicand, X, are usually formed for every possible value of a digit. And then the multiples are multiplexed to create each partial product. (n−1)/r P =X ∗Y =X ∗( Di ∗ r i ) (1) i=0 (n−1)/r P = ( (X ∗ Di ) ∗ ri ) (2) i=0 Most designs are based on an algorithm developed by Andrew Booth [7]. Booth popularized a technique for reducing the range of the magnitude of the digit of the multiplicand which simplified the required multiples. Without Booth’s method, a digit of 2 bits (denoted by Di ) would have the values of 0, 1, 2, or 3. Booth scanned all the bits and applied the String Recoding Theorem to reduce consecutive ones. A series of ones is replaced by a positive one, in a bit location one more significant than the most significant bit of the string, and a negative one, in the bit position of the least significant bit, as shown by the following: n−1 2i = 2n − 20 . (3) i=0 Booth suggested scanning a number in groups of bits called digits. Booth viewed all the bits in a digit, and peeked at 1 bit in the next digit to determine whether this digit was part of string being recode. By using this technique a digit of 2 bits will recode to the set of values -2, -1, 0, +1, +2. The Booth method of recoding digits was proved in 1975 by Rubinfield [8] for a digit of 2 bits called a radix-4 recoding (r = 4). Stamatis Vassiliadis [9] simplified the proof and expanded it for other radices such as radix-8 recoding. This paper provides the rules for how to perform the scanning of the multiplicand such that it terminates correctly and includes the correction for the beginning and ending of a string. This insures the correctness of implementations. Several multipliers rely on these rules such as the IBM S/390 G4 and G5 parallel enterprise servers which use a radix-8 multiplier[10, 11, 12]. Stamatis’s paper includes a proof of a technique for sign extension and hot one encoding which is in almost every implementation today. For a radix-8 multiplier, sign extension encoding consists of 3 bits to the left of every partial product except the most significant (10) and the least significant (1) as shown in Figure 1. The 3 bits are equal to 11Si = 111 for positive partial products and 11Si = 110 for negative partial products. This 1989 paper is the first paper to show the first partial product’s sign encode can be simplified to four bits equal to Si Si Si Si = 1000 for positive partial product and Si Si Si Si = 0111 for a negative partial product. Prior to this paper the encoding of the first partial product was the same as the other partial products and an additional row was implemented to add 1 into the least significant bit of sign encode. This encoding saved a row in the partial product array. 115 Eric Schwarz The hot one encoding is placed on the right side (lesser significant positions) of each partial product and encodes a hot one which is required to two’s complement negative partial products. It is shown with 3 bits equal to 00h = 00Si−1 . So if the partial product above the hot encode is negative then the “h” equals 1 in this row. 2.1. Fixed-Point Multiplication Fixed-point multiplication was not implemented very efficiently until this work was extended in 1991 by Stamatis Vassiliadis [13]. In 1971 fixed-point multiplication was shown to require correction terms[14]. For example: (n−1) P = X ∗ Y = (−xn ∗ 2n + (n−1) xi ∗ 2i ) ∗ (−yn ∗ 2n + i=0 yj ∗ 2j ) (4) j=0 (n−1) P = (xn ∗ yn ∗ 22n ) − (yn ∗ (n−1) xi ∗ 2n+i ) − (xn ∗ i=0 yj ∗ 2n+j ) + P M AG (5) j=0 (n−1) (n−1) P M AG = ( i=0 xi ∗ yj ∗ 2i+j ) (6) j=0 P M AG is the partial product matrix of a normal magnitude multiplication and there are 3 additional terms. These 3 terms are sign correction terms. They consist of 2 vectors and 1 bit which can be summed to a normal partial product matrix with 2 additional rows. Vassiliadis in 1991 showed an alternative to requiring additional rows. Instead the normal sign-magnitude multiplier can be extended to implement fixed-point. The initial scanning of the multiplier, Y , is sign-extended and the sign encoding is changed to be dependent on the sign of the partial product, which is the exclusive OR of the sign of the multiple of the multiplicand and the sign of the multiplier. This simplified fixed-point multiplier implementations and made it easily possible to implement both fixed-point and floating-point multiplication on the same multiplier. 2.2. Future of Mutliplication Currently it is common to implement the reduction of the partial product matrix with a counter tree which consists of 3:2 counters or 4:2 counters. In 1990 on the RS/6000, 7:3 counters[15] were used but they were essentially made up of 3:2 counters internally. On other processors, larger counters were tried such as 9:2 counters but they too were composed of 3:2 counters. The larger counters were used to make floorplanning and wiring of components simpler and not to reduce delay. 4:2 counters were invented by Arnold Weinberger in 1981[16] but they intially had the delay of 2 x 3:2 counters. They became very popular when it was shown that they could be implemented with only 1.5 times the delay of a 3:2 counter[17] using passtransistor multiplexors. To utilize pass-transistor multiplexors the equations of a 4:2 counter are rewritten to take advantage of orthogonal signals. Stamatis Vassiliadis [18] in 1993 showed a very interesting technique for implementing a 7:3 counter which did not utilize 3:2 counters internally. Instead OR gates are used to mimic threshold gates and are assembled in a binary tree. Every 2 inputs are input to a block. In the following equations, juxstaposition is used to indicate an AND gate and a“+” indicates a logical OR gate. 2(x : y) indicates a threshold function of exactly 2 for the bits from x to y, and 1 + (x : y) indicates the sum of 1 or more for the same bits x to y. 2(0 : 1) = x(0) x(1) (7a) 1+ (0 : 1) = x(0) + x(1) 2(2 : 3) = x(2) x(3) (7b) (7c) 1+ (2 : 3) = x(2) (7d) (7e) + x(3) 2(4 : 5) = x(4) x(5) 1+ (4 : 5) = x(4) 116 + x(5). (7f) FUTURE RESEARCH IN COMPUTER ARITHMETIC In the second level, signals representing four bit groups are formed: 4(0 : 3) = 2(0 : 1) 2(2 : 3) + (8a) + 3 (0 : 3) = 2(0 : 1) 1 (2 : 3) + 2 (0 : 3) = 2(0 : 1) + + 1 (4 : 6) = 1 (4 : 5) + + 2(2 : 3) + 1 (2 : 3) + (8b) (8c) (8d) (8e) 1+ (4 : 5) x(6) + + 1 (0 : 1) 2(2 : 3) 1 (0 : 1) 1 (2 : 3) 1 (0 : 3) = 1 (0 : 1) + 3(4 : 6) = 2(4 : 5) x(6) + + + + 2+ (4 : 6) = 2(4 : 5) + (8f) x(6). (8g) In the third level, thresholds of the seven bits are known: 6+ (0 : 6) = 4(0 : 3) 2+ (4 : 6) + + 4 (0 : 6) = 4(0 : 3) + + + (9a) + 3 (0 : 3) 1 (4 : 6) + + 2 (0 : 3) 2 (4 : 6) + 2+ (0 : 6) = 2+ (0 : 3) 3+ (0 : 3) 3(4 : 6) + (9b) + 1 (0 : 3) 3(4 : 6) 1+ (0 : 3) 1+ (4 : 6) + (9c) 2+ (4 : 6). (9d) In the last level, each bit of the 3 bit sum (c2, c1, s) are formed: c2 = 4+ (0 : 6) (10a) c1 = 6+ (0 : 6) (10b) (10c) + 4+ (0 : 6) 2+ (0 : 6) s = XOR V ECT OR(X(0 : 7)). More interesting implementations are now possible with Stamatis Vassiliadis’s 7:3 counter. It is possible to design a fast counter with these equations by applying orthogonality to some of the threshold functions. Recently these 7:3 counters have been subject of several similar patents [19, 20, 21]. Stamatis Vassiliadis has laid a foundation of knowledge in multiplier design. In 1989 he wrote the proofs of basic Booth multiplication, and in 1991 he expanded these equations to implement fixed-point multiply. And lastly in 1993 Stamatis Vassiliadis laid the foundation for more complex counter tree design. 3. DIVISION Addition and multiplication operations are easily pipelined in floating-point units but the division operation presents problems to pipelining. Division is commonly implemented with either a non-restoring subtractive algorithm or a multiplicative quadratically converging algorithm. Neither of these methods can compete with the latency of multiplication. The division operation requires a breakthrough in research to make it close to the performance of multiply or add. Two areas of research have focused on accelerating the latency of division: direct solution and remainder avoidance. 3.1. Direct Solution An interesting algorithm for the direct solution of division was attempted in 1993[22]. Division is the inverse of multiplication. So if the equations of multiplication can be back-solved as a set of linear equations, it would be possible to combinatorially evaluate division. Take for example the multiplication of Q ∗ B = 1. (11) Solving for Q will yield the reciprocal of B. The partial product array is formed in Figure 2. By choosing a redundant digit set for Q, such that propagation is prohibited between columns, then each column forms a separate equation. There is one new quotient digit for each column, so there is an equal number of unknowns as there are 117 Eric Schwarz b2 b3 q2 q3 .. .. . . q4 b2 q4 b3 q4 q3 b2 q3 b3 q3 b4 q3 q2 b2 q2 b3 q2 b4 q2 b5 q2 q1 b2 q1 b3 q1 b4 q1 b5 q1 · · · q0 b2 q0 b3 q0 b4 q0 b5 q0 · · · 1 1 1 1 1 ··· X 0. 0. q0 . 1 q1 b4 q4 .. . b4 q4 ··· ··· b5 ··· .. . =B =Q ≈ 1.0 Fig. 2. Partial Product Array for Reciprocal Operation linear equations. Solving for Q after much reduction yields: q[0] q[1] q[2] q[3] q[4] q[5] = = = = = = 0 b2 + b3 b2 b3 + b4 1 + b3 + (b2 |b4 ) + b5 1 + b2 b3 b4 + b2 b3 b5 + b6 b4 + b2 b3 b4 + (b3 |b5 ) + (b2 |b3 |b5 ) + b4 b5 + (b2 |b6 ) + b7 (12) (13) (14) (15) (16) (17) The equations for each bit of Q can be reduced to the sum of Boolean elements which are weighted by a different power of 2. These elements can be summed up using a partial product array. The elements for a divide or reciprocal operation could be multiplexed with the elements for multiplication. So far research into reusing a 53x53 bit multiplier for the reciprocal operation has yielded a 12 bit approximation. There is hope that a breakthrough in this research could directly solve for the equations of reciprocation or even the division operation. 3.2. Remainder Avoidance Another research area that could result in a significant performance benefit for the division operation is solving the dilemma of how to round an intermediate result from a quadratically converging algorithm. For fixed-point division where the quotient is only computed to the integer radix point, thus limiting its precision when the dividend and divisor have a large precision, the solution is very elegant. Peter Markstein has shown an algorithm [23, 24] that eliminates the remainder calculation and can produce an exactly truncated result. This is done by perturbing the result to always make it an underestimate but within the error tolerance needed. This only holds true for fixed-point division. For floating-point division, a problem under research is how to perturb the intermediate result to properly round it to the target precision. The best solution so far is to maintain extra guard bits (G), examine those guard bits, and eliminate a remainder calculation in all but 1 case out of 2G cases[25, 26, 27, 28]. Q is the result needed which has N bits of accuracy and that the approximate solution has been normalized to less than 1.0 and greater than or equal to 1/2. The approximate solution is called Q and has N + k bits with an error of plus or minus 2−(N +j) where k ≥ j > G > 0. Q needs to be transformed into an N + G bit number with an accuracy of plus or minus the last bit, which is weighted 2−(N +G) . To do this, first Q is incremented by more than 2−(N +j) but less than 2−(N +G) . This perturbation prepares the intermediate result for the subsequent truncation error. The intermediate result is then truncated to N + G bits and yields an approximation to less than plus or minus 2−(N +G) . Lastly, depending on rounding mode, 1 out of 2G combinations of guard bits will require a remainder calculation and all the other cases do not. Thus, most of the time the remainder does not need to be calculated. It is rumored the IBM 360 model 91 implemented 10 extra guard bits of precision and if they were all one, the intermediate result was incremented. Research is near a breakthrough where the remainder calculation will not be needed for floating-point division. 118 FUTURE RESEARCH IN COMPUTER ARITHMETIC 4. DECIMAL FLOATING-POINT The soon to be ratified IEEE 754R Standard[3] proposes a new format and system for representing decimal floating-point numbers. It has been implemented in software using the DecNumber package and its first hardware implementation on the IBM Power6 server (P570) was released in June 2007 [29, 30]. The first hardware implementation is a non-pipelined design. The design is area efficient and consists mainly of a large 36 digit adder that can be split into 2 x 18 digit adders. The adder takes 2 cycles but can be pipelined every cycle. The overall operation of floating-point addition is not pipelined and requires: 1) expansion from encoded format (DPD) to BCD format, 2) alignment, 3) addition, 4) post alignment correction, 5) rounding, and 6) compression from BCD format to encoded format (DPD). Multiplication is decomposed into 1 digit by 16 or 34 digit multiplies. For the 16 digit case, one 18 digit adder creates partial products every cycle and the other sums partial products. This design is very simple and area efficient. The main difficulty in this type of design is performance. The decimal unit must notify the issue queue up to 8 cycles in advance that it will complete to eliminate stall cycles. The latency is very data dependent and one must take into account all special cases. Decimal addition can be pipelined[31]. This is simple to do and mainly involves extra registers and shifters to take care of the worst case alignment of both operands. Wang shows a method for injection based rounding where the rounding is mostly performed in the adder. This will shorten the pipeline depth. There are still some problems to overcome in these designs since all cases must be implemented including overflow, underflow, subnormal detection, quasi-super normals, and many other items. The biggest problem is taking care of all cases in a pipelined design without stalling. Decimal multiplication could also be pipelined[32, 33]. There have been amazing breakthroughs in designing multipliers. Lang[33] proposed a very elegant counter tree to reduce carries separate from sums in the tree. Vazquez[32] suggests an interesting encoding of BCD numbers to use a 4221 weighting of bits instead of 8421. By using 4221 the digit can not be illegal which is when it is greater than 9. This enables the sum digit of a 3:2 counter to automatically be within a valid range. The carry digit requires a special function to multiply it by 2 in this encoding. The authors suggest going to yet another encoding and then going back to 4221 encoding, but either way, there is function needed to do this transformation. Overall decimal multiplication then begins to resemble parallel binary multiplication with these discoveries. Still the design has to be made small enough to implement. The next step will be building a pipelined decimal multiply-adder which should not be too difficult once a decimal multiplier is available. The field of decimal floating-point arithmetic is a hot bed of research with very few papers written between the 1950s [1] and 1990s. Now, the field is alive again with great design advances. We are close to a breakthrough in this field. 5. CONCLUSION Computer arithmetic is a very old field that is now blossoming with recent discoveries. These ideas have come from education and changes in the technology and number systems. Education plays a key part in this advancement; understanding through proofs is key to insuring correctness in implementations. It is also vital that these descriptions express the underlying meaning of the concepts. Stamatis Vassiliadis was a key contributor to this field through his detailed analysis brought forward in his prolific writing on computer arithmetic. His publications have established a foundation for researchers to continue expanding this field further. The field of computer arithmetic is forever expanding around these discoveries and elegant proofs of basic concepts. 6. REFERENCES [1] R. Richards, Arithmetic Operations in Digital Computers. New York: D. Van Nostrand Company, Inc., 1955, ch. 9. [2] “IEEE standard for binary floating-point arithmetic, ANSI/IEEE Std 754-1985,” The Institute of Electrical and Electronic Engineers, Inc., New York, Aug. 1985. [3] The Institute of Electrical and Electronic Engineers, Inc., “IEEE standard for floating-point arithmetic, ANSI/IEEE Std 754-Revision,” http://754r.ucbtest.org/, Oct. 2006. [4] V. Bartlett and E. Grass, “Completion-detection technique for dynamic logic,” IEE Electronics Letters, vol. 33, no. 2, pp. 1850–1852, Oct. 1997. [5] S. Majerski and M. Wiweger, “NOR-Gate Binary Adder with Carry Completion Detection,” IEEE Trans. on Electronic Computers, vol. EC-16, pp. 90–92, Feb. 1967. [6] E. Schwarz, Binary floating-point unit design in High-Performance Energy-Efficient Microprocessor Design, V. Oklobdzija and R. Krishnamurthy, Eds. Boston: Springer, 2006. 119 Eric Schwarz [7] A. D. Booth, “A signed multiplication technique,” Quarterly J. Mech. Appl. Math., vol. 4, pp. 236–240, 1951. [8] L. Rubinfield, “A proof of the modified Booth’s algorithm for multiplication,” IEEE Trans. Comput., pp. 1014–1015, Oct. 1975. [9] S. Vassiliadis, E. M. Schwarz, and D. J. Hanrahan, “A general proof for overlapped multiple-bit scanning multiplications,” IEEE Trans. Comput., vol. 38, no. 2, pp. 172–183, Feb. 1989. [10] E. M. Schwarz, B. Averill, and L. Sigal, “A radix-8 CMOS S/390 multiplier,” in in Proc. of Thirteenth Symp. on Comput. Arith., Asilomar, CA, July 1997, pp. 2–9. [11] E. M. Schwarz, L. Sigal, and T. McPherson, “CMOS floating point unit for the S/390 parallel enterpise server G4,” IBM Journal of Research and Development, vol. 41, no. 4/5, pp. 475–488, July/Sept. 1997. [12] E. M. Schwarz and C. A. Krygowski, “The S/390 G5 floating-point unit,” IBM Journal of Research and Development, vol. 43, no. 5/6, pp. 707–722, Sept./Nov. 1999. [13] S. Vassiliadis, E. M. Schwarz, and B. M. Sung, “Hard-wired multipliers with encoded partial products,” IEEE Trans. Comput., vol. 40, no. 11, pp. 1181–1197, Nov. 1991 (also see correction in IEEE TOC, 42(1), p.127). [14] C. Baugh and B. Wooley, “A two’s complement parallel array multiplication algorithm,” IEEE Trans. Comput., vol. C-22, no. 12, pp. 1045–1047, Dec. 1971. [15] R. K. Montoye, E. Hokenek, and S. L. Runyon, “Design of the IBM RISC system/6000 floating-point execution unit,” IBM Journal of Research and Development, vol. 34, no. 1, pp. 59–70, Jan. 1990. [16] A. Weinberger, “4:2 Carry-Save Adder Module,” IBM Tech. Disclosure Bulletin, vol. 23, pp. 3811–3814, Jan. 1981. [17] N. Ohkubo et. al., “A 4.4ns CMOS 54 x 54-b multiplier using pass-transistor multiplexor,” IEEE J. Solid-State Circuits, vol. 30, no. 3, pp. 251–257, March 1995. [18] S. Vassiliadis and E. Schwarz, “Generalized 7/3 Counters,” U.S. Patent No. 5,187,679, Feb. 16, 1993. [19] D. Rumynin, S. Talwar, and P. Meulemans, “Parallel Counter and a Multiplication Logic Circuit,” U.S. Patent No. 6,883,011B2, Apr. 19, 2005. [20] ——, “Parallel Counter and a Multiplication Logic Circuit,” U.S. Patent No. 6,938,061B1, Aug. 30, 2005. [21] ——, “Parallel Counter and a Logic Circuit for Performing Multiplication,” U.S. Patent No. 7,136,888B2, Nov. 14, 2006. [22] E. M. Schwarz, “High-radix algorithms for high-order arithmetic operations,” Ph.D. dissertation, Dept. Elec. Eng., Stanford Univ., Jan. 1993. [23] P. Markstein, IA-64 and Elementary Functions: Speed and Precision. Prentice-Hall, 2000. [24] M. Cornea, C. Iordache, J. Harrison, and P. Markstein, “Integer divide and remainder operations in the intel ia-64 architecture,” in RNC4, Proceedings of the 4th International Conference on Real Numbers and Computers, 2000, pp. 161–184. [25] E. Schwarz and T. McPherson, “Method and system of rounding for division and square root,” U.S. Patent No. 5,764,555, June 9, 1998. [26] E. Schwarz, “Method and system of rounding for quadratically converging division and square root,” U.S. Patent No. 5,729,481, Mar. 17, 1998. [27] ——, “Method and system of rounding for quadratically converging division and square root,” U.S. Patent No. 5,737,255, Apr. 7, 1998. [28] E. M. Schwarz, “Rounding for quadratically converging algorithms for division and square root,” in Proc. of 29th Asilomar Conf. on Signals, Systems, and Computers, Oct. 1995, pp. 600–603. [29] S. Carlough and E. Schwarz, “Power6 Decimal Divide,” in Proc. of 18th Application-specific Systems, Architectures and Processors (ASAP 2007), 2007, pp. 128–133. [30] B. McCredie, “Power Roadmap,” in MicroProcessor Forum (http://www2.hursley.ibm.com/decimal/IBM-Power-RoadmapMcCredie.pdf), Oct. 2006. [31] L. Wang and M. Schulte, “Decimal floating-point adder and multifunction unit with injection-based rounding,” in Proc. of Eighteenth Symp. on Comput. Arith., 2007, pp. 56–65. [32] A. Vazquez, E. Antelo, and P. Montuschi, “A new family of high-performance parallel decimal multipliers,” in Proc. of Eighteenth Symp. on Comput. Arith., 2007, pp. 195–204. [33] T. Lang and A. Nannarelli, “A radix-10 combinational multiplier,” in Proc. of 40th Asilomar Conf. on Signals, Systems, and Computers, 2006, pp. 313–317. 120