A Comparison of Binomial Proportion Interval

advertisement

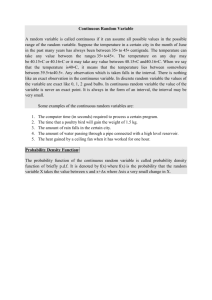

A Comparison of Binomial Proportion Interval

Estimation Methods

John Ulicny, Precision Metrics Inc., Valley Forge PA

ABSTRACT

Some recent research has confirmed that the

standard Wald (normal) confidence interval

approximation for the binomial proportion is

inadequate in key respects, especially for extreme

values of p . Even for moderately large values of n

the coverage probabilities can prove to be erratic for

various specific values of p . This fact makes

general rules for the use of the Wald method

problematic.

Alternative methods have been

proposed, and this paper compares some of those

alternatives with new capabilities built in to SAS

Version 8.2 to calculate exact intervals. A decision

rule is proposed for the selection of an appropriate

technique. Also, a few extensions to other discrete

distributions will be considered.

This topic is relevant to both statisticians and

programmers because of the fact that binomial

proportion estimation is so commonly needed in a

wide variety of situations, and the Wald (normal

approximation) technique is taught in even the most

basic statistics courses. Non-statisticians therefore

often handle the task of interval estimation of this

type.

INTRODUCTION

Binomial random variables arise frequently in data

analysis. They are based on a sum of much simpler

variables called Bernoulli random variables.

When a situation exists where one of only two

possible mutually exclusive outcomes can occur,

then this forms a particular kind of sample space. It

is common to assign a designation of “success” to

one of the events in the sample space and “failure”

to the other. Furthermore it is helpful to map these

designations into a numeric variable.

We can

create a variable, Y, with a value 1 corresponding to

a successful outcome and a 0 for failure. A variable

that can take on a value of 1 or 0 in this way is

called a Bernoulli random variable. A sum of such

random variables is called a binomial random

variable when each term in the sum occurs

independently of all others, and the probability of a

success is constant.

The probability of observing exactly “ x ” successes

in a sum of “ n ” Bernoulli trials is expressed as

(1.1)

n

P ( X = x) = p x (1 − p ) n − x

x

For example the probability of observing 2 heads in

10 tosses of a fair coin could be stated as

10

P ( X = 2) = (.5) 2 (.5)10− 2 = .044

2

In practice the value of the population parameter p is

usually unknown and must be estimated from a

sample of data. This sample estimate is obtained by

counting the successes and dividing by the total

number of trials. Let’s call p̂ the estimate. Then

(1.2)

pˆ = ∑ yi / n = x / n

But since the estimate of p is a function of the

observed values of a random variable, this estimate

is itself a random variable and is subject to random

fluctuation. By building a confidence interval using

our estimate of p and some assumptions we are

able to specify two values between which the true

p will lie with a stated probability. We can call this

probability the “nominal confidence level”. If we call

pL and pU lower and upper confidence limits

respectively, then we can attempt to make a

statement such as

(1.3)

P ( pL ≤ p ≤ pU ) = .95

where pL and pU are random quantities between

which we claim to be 95 percent confident that the

true value of p lies. Here I will mention that the

value of p is fixed but unknown, and it is the

confidence interval around p that varies randomly.

For simplicity I will use a confidence level of .95

throughout this paper. The same conclusions will

apply to other levels.

In addition it is common to

state the confidence level as (1 − α ) because it

makes it easier to relate confidence coefficients to

alpha levels in hypothesis tests. In this paper I will

use α =.05.

The term “coverage” is used to denote the

probability that a confidence interval computed from

a sample of data will actually enclose the true value

of p . This coverage will in general vary depending

on the values of p and n . In addition, the

“confidence coefficient” is defined as the smallest

coverage probability across all possible values of

p.

One of our primary goals is to choose a method with

coverage as close as possible to the nominal

confidence level. Finding values for the confidence

limits in equation (1.3) that provide a level of

confidence exactly equal to .95 for all values of p is

not possible.

A confidence interval is defined by the formula that

generates pL and pU along with a confidence

coefficient. This way of defining the interval implies

that an “exact “ interval with a nominal confidence

level of .95 needs to meet the requirement that its

minimum coverage is .95, as opposed to a

requirement that coverage is .95 everywhere. This

makes exact methods very conservative. Moreover

the term “exact” is a bit counterintuitive.

METHODS

PROC FREQ in SAS/BASE version 8 calculates

exact binomial confidence intervals as well as

intervals based on the normal approximation.

The other method you can request from PROC

FREQ is the normal approximation. It uses the fact

n becomes large,

p̂ becomes

that

as

approximately normally distributed with mean p

and variance p (1 − p ) / n . This method does not

guarantee coverage of (1 − α ) and in fact has very

erratic coverage properties. The interval limits are:

pL = pˆ − zα / 2 pˆ (1 − pˆ ) / n

and

pU = pˆ + zα / 2 pˆ (1 − pˆ ) / n

Consider this simple example: Let’s assume that a

machine produces widgets with a defect rate of 10

percent. If we select a random sample of 10 widgets

produced by the machine, then there are 11 possible

values for the random variable X, the number of

defects. Each item produced has an independent

probability of being defective. The following tables

list the exact and normal approximation confidence

interval methods and their coverage properties:

Table 1A

Binomial Confidence Intervals with p=.1 and

n=10

Nominal Confidence Level=.95

To obtain an exact confidence interval we must

solve the pair of equations for pL and pU

n

n

k=x

x

n

k =0

∑ k p

k

L

(1 − pL ) n −k = α / 2

k

U

(1 − pU ) n −k = α / 2

and

∑ k p

The following equivalent formulas are the ones SAS

uses. They are derived form the above equations

and involve an F-table lookup which is much faster.

n − x +1

pL = 1 +

xF2 x ,2( n − x +1),1−α / 2

Normal Approximation Method

X

P(X=x)

0 0.34868

1 0.38742

2 0.19371

3

0.0574

4 0.01116

5 0.00149

6 0.00014

7 0.00001

8 3.60E-07

9 9.00E-09

10 1.00E-10

Total Coverage

Lower

0

0

0

0.016

0.0964

0.1901

0.2964

0.416

0.5521

0.7141

1

Upper

0

0.2859

0.4479

0.584

0.7036

0.8099

0.9036

0.984

1

1

1

Coverage Coverage

Flag Probability

0

0

1

0.3874

1

0.1937

1

0.0574

1

0.0112

0

0

0

0

0

0

0

0

0

0

0

0

0.6497

−1

n−x

pU = 1 +

( x + 1) F2( x +1),2( n − x ),α / 2

−1

Table 1A lists each possible value of x that could

have occurred along with the confidence interval that

it would have generated based on the normal

method. If the confidence interval covered the value

of p , which in this case is .1, then the coverage flag

column is set to 1. The coverage probability is just

the coverage flag times P(X=x). The total coverage

for this method with p =.1 and n =10 is defined as

the sum of coverage probabilities over all x . As you

can see the coverage value of .6479 is quite

substantially below the nominal coverage of .95.

Table 1B

Binomial Confidence Intervals with p=.1 and

Exact Method

X

P(X=x)

0 0.34868

1 0.38742

2 0.19371

3

0.0574

4 0.01116

5 0.00149

6 0.00014

7 0.00001

8 4.00E-07

9 9.00E-09

10 1.00E-10

Lower

0

0.0025

0.0252

0.0667

0.1216

0.1871

0.2624

0.3475

0.4439

0.555

0.6915

Upper

0.3085

0.445

0.5561

0.6525

0.7376

0.8129

0.8784

0.9333

0.9748

0.9975

1

Coverage Coverage

Flag Probability

1

0.3487

1

0.3874

1

0.1937

1

0.0574

1

0.0112

0

0

0

0

0

0

0

0

0

0

0

0

Total Coverage

0.9984

n=10

Nominal Confidence Level=.95

It’s easy to see in Table 1B that the exact method’s

confidence interval captures the true value of p

when x =0. This is not the case with the normal

approximation, and so the exact method’s coverage

comes much closer to the stated nominal confidence

level of .95. In fact it’s actually quite conservative.

This is sample code to generate some of the results

in the preceding tables for x=3=

∑y

data FreqDemo;

input y @@;

datalines;

1 0 0 0 0 1 0 1 0 0

; run;

proc sort data=FreqDemo;

by descending y; run;

proc freq data=freqdemo order=data;

tables y/binomial; run;

And here is a partial listing of results:

Binomial Proportion for y = 1

Proportion

0.3000

ASE

95% Lower Conf Limit

95% Upper Conf Limit

0.1449

0.0160

0.5840

Exact Conf Limits

95% Lower Conf Limit

95% Upper Conf Limit

0.0667

0.6525

In the previous example the number of observations

was kept small to keep the calculations simple, but

the two methods present problems with coverage

properties even for larger n and p .

Now I would like to discuss and evaluate two other

intervals suggested by Brown et al. The first is the

Wilson interval and the second is the Jeffreys

interval.

The Wilson interval is based on inverting the

standard test for an asymptotically normally

distributed parameter estimate. Instead of using the

standard error of the estimate, it uses the standard

error of the parameter value under the null

hypothesis.

That is, instead of using

pˆ (1 − pˆ ) / n it uses p (1 − p ) / n .

That change results in the following confidence

limits:

X + z2 / 2 z n

±

n + z2

n + z2

ˆ ˆ + z 2 /(4n)

pq

The Jeffreys interval takes a Baysian approach. A

significant difference between this approach and the

others is that the population parameter in the

Baysian approach is considered to be a random

variable. Using Baysian methodology we assume

that the parameter, p , has a prior distribution which

reflects our beliefs about the likely values of p

before the sample was drawn.

For technical

reasons the choice for this “prior” distribution for p is

often a Beta with two parameters a1 and a2 . The

distribution of p is then essentially revised by

information obtained in the data sample and thus a

new “posterior” distribution is obtained.

If p has a beta prior then its mean is a1 /( a1 + a2 ) .

The estimate of p after the sample is drawn is

x a1 + a2 a1

n

pˆ J =

+

a1 + a2 + n n a1 + a2 + n a1 + a2

Close inspection of this equation reveals that it is

ˆ (= x / n) and

essentially a weighted average of p

the mean of the prior distribution, a1 /( a1 + a2 ) . As

n gets larger, more weight is given to information

from the sample.

The beta distribution as used here is called the

“natural conjugate prior” for the binomial and has the

very desirable property that the posterior distribution

for p is also a beta. Brown et al assume a prior of

Beta(1/2,1/2).

The confidence interval then

becomes simply the posterior beta variate evaluated

at the appropriate quantiles. Hence:

pL = B(.025; x + .5, n − x + .5) , x > 0

pL = 0 , x = 0

and

pU = B(.975, x + .5, n − x + .5) , x < n

pU = 1 , x = 1

Using the Wilson method to calculate confidence

intervals in example 1 would result in a coverage

probability of .930 while the Jeffreys method gives

coverage of .987. It is clear that for the situation

portrayed in example 1 the Wilson method is

superior in terms of coverage.

Segments of macro code listed below show

calculations of the Wilson and Jeffreys intervals. The

variable ‘PR’ contains P ( X = x ) and ‘PHAT’ is p̂ .

Note the use of the PDF, PROBIT and BETAINV

functions.

These SAS functions make the

calculation of probabilities and quantiles easy.

* -------------------------------------;

* Section to process the Wilson method ;

* -------------------------------------;

%WilsonMth:

data _zzbintmp;

do x=&xlow to &xhigh;

pr=pdf('binomial',x,&popp,&num);

z=probit(&clevel+(1-&clevel)/2);

x1=x+z**2/2;

n1=&num+z**2;

phat=x1/n1;

offset=(z*(&num**.5)/n1)*

((x/&num)*(1x/&num)+z**2/(4*&num))**.5;

*-----ll=lower limit, ul=upper limit;

ll=max(phat-offset,0);

ul=min(phat+offset,1);

*----set coverage flag to 1 if interval ;

*----covers the population proportion;

cflag = (ll le &popp) and

(ul ge &popp);

output;

end;

run;

%goto ProcessResult;

* -----------------------------------;

* Section to process Jeffreys method ;

* -----------------------------------;

%JeffMth:

data _zzbintmp;

do x=&xlow to &xhigh;

pr=pdf('binomial',x,&popp,&num);

*-----ll=lower limit, ul=upper limit;

ll=max(betainv((1-&clevel)/2,

x+.5,&num-x+.5),0);

ul=min(betainv(1-(1-&clevel)/2,

x+.5,&num-x+.5),1);

if x=0 then ll=0;

if x=&num then ul=1;

*----set coverage flag to 1 if interval ;

*----covers the population proportion;

cflag = (ll le &popp) and (ul ge &popp);

output;

end;

run;

%goto ProcessResult;

OTHER CONSIDERATIONS

Three other considerations that are important in

evaluating a confidence interval are

•

•

•

MIL - Mean interval length

MC - Mean coverage

RMSE - root mean squared error.

They are defined below.

Define the expected interval length as

n

n

µ n , p = ∑ ( pU − pL ) p x (1 − p) n − x

x =0

x

Then the mean interval length with respect to a

uniformly distributed p is

1

MILn = ∫ µ n , p dp .

0

Mean coverage is defined similarly as

CONCLUSIONS

1

MCn = ∫ Cn , p dp .

0

And the root mean squared error is

1/ 2

1

RMSEn = ∫ (Cn , p − (1 − α )) 2 dp

0

.

In the above two equations Cn , p is the coverage for

a given method at specific values of n and p .

A shorter confidence interval is preferred, all other

things being equal, and so a smaller MIL is better.

The MC and RMSE give a summary of the coverage

characteristics over a range (uniformly weighted in

this case) of population proportion values. Other

distributions for p can be specified, and once again

the most commonly used distribution is a beta.

Refer to table 2 for a summary of these measures

for various values of n . A numerical technique

known as Romberg integration was programmed in

SAS and used to produce the measures. Romberg

integration repeatedly applies the trapezoidal rule to

the functions involved until convergence to within a

certain tolerance is achieved.

All measures are

thus approximate.

Note that as n increases, the measures improve for

all four methods. For instance the exact method has

This happens

coverage of 100% when n =5.

because the exact method needs to be very

conservative to ensure that coverage never falls

below 95% for a given combination of n , which is

equal to 5 in this case, and p . But as n increases,

the mean coverage for the exact method falls all the

way to 96.67%.

Note also that the normal

approximation method never really achieves mean

coverage as good as any of the other methods.

According to SAS version 8 documentation, “Exact

statistics can be useful in situations where the

asymptotic assumptions are not met, and so the

asymptotic p-values are not close approximations for

the true p-values.” While this paper has addressed

confidence intervals rather than hypothesis tests and

hence p-values, the same advantage of the exact

method over the normal (asymptotic) method can be

seen by referencing the performance measures in

table 2. The SAS documentation goes on to state

that “Asymptotic results may also be unreliable

when the distribution of the data is sparse, skewed

or heavily tied.” The distribution of a binomial

random variable will be heavily skewed when p is

extreme.

A major advantage of the exact method is that it

provides a guarantee that coverage will never fall

below the nominal confidence level. The price to be

paid for this guarantee however is the extreme

conservativeness of the method. The Wilson and

Jeffreys methods both provide a significantly closer

match to nominal confidence, especially at small

values of n . Moreover, these two methods provide

for smaller mean interval widths at all values of n .

The addition of exact methods to the statistical

capabilities of SAS is welcome. This paper has

attempted to compare four methods, two of which

are built-in to SAS (the normal approximation

method and the exact method).

The normal

approximation (Wald) method should virtually never

be used. The exact method can be used in

situations where the researcher must guarantee

coverage at least as large as the nominal stated

coverage.

The Wilson or Jeffreys methods are

appropriate when a high degree of accuracy is

required, but coverage may be allowed to fall below

the nominal level. The Wilson method is simpler to

apply. The Jeffreys method relies on Bayesian

techniques and will be more appropriate to use in

situations amenable to such techniques.

Table 2

Binomial Confidence Interval Comparisons*

Nominal Confidence Level=.95

Method

Mean

Coverage

Root Mean

Squared Error

Mean

Interval

Length

n=5

Normal

Exact

Wilson

Jeffreys

64.17%

100.00%

95.43%

96.02%

39.85%

5.00%

3.54%

3.54%

0.4600

0.6779

0.5581

0.5606

n=10

Normal

Exact

Wilson

Jeffreys

76.97%

98.43%

95.44%

95.33%

28.61%

3.39%

3.39%

3.13%

0.4034

0.5085

0.4354

0.4326

n=15

Normal

Exact

Wilson

Jeffreys

82.22%

97.90%

95.26%

95.15%

23.47%

2.62%

2.97%

2.62%

0.3531

0.4210

0.3687

0.3656

n=25

Normal

Exact

Wilson

Jeffreys

86.55%

97.46%

95.11%

95.03%

18.25%

3.37%

2.81%

2.22%

0.2881

0.3277

0.2943

0.2920

n=50

Normal

Exact

Wilson

Jeffreys

90.09%

96.90%

95.71%

95.71%

2.19%

2.50%

2.05%

2.05%

0.2112

0.2306

0.2129

0.2117

n=100

Normal

Exact

Wilson

Jeffreys

92.23%

96.67%

95.08%

95.00%

2.02%

2.30%

2.45%

1.99%

0.1518

0.1614

0.1522

0.1516

Sample

Size

*

All values are approximations based on numerical integration.

REFERENCES

Agresti, A. and Coull, B. A. (1998). Approximate is

Better than “Exact” for Interval Estimation of Binomial

Proportions. The American Statistician 52, 119-126.

Brown, L. D., Cai T. T. and DasGupta A. (2001).

Interval Estimation for a Binomial Proportion.

Statistical Science (forthcoming).

Collett, D. (1991). Modelling Binary Data, London:

Chapman and Hall.

Leemis, L. M. and Trivedi, K. S. (1996).

A

Comparison of Approximate Interval Estimators for

the Bernoulli Parameter. The American Statistician

50, 63-68.

Burden, R., Faires J. D., and Reynolds A. C. (1981).

Numerical Analysis 2nd Edition.

Boston: PWS

Publishing.

SAS Institute Inc. SAS/STAT User’s Guide, Version

8. Cary NC: SAS Institute Inc., 1999.

Casella, G. and Berger, R. L. (1990).

Inference, Belmont: Duxbury Press.

Acknowledgements:

Statistical

SAS is a Registered Trademark of the SAS Institute,

Inc. of Cary, North Carolina.