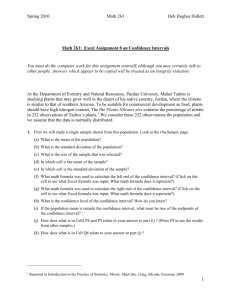

Interval Estimation for a Binomial Proportion
